Jian Jiang

HeteroCache: A Dynamic Retrieval Approach to Heterogeneous KV Cache Compression for Long-Context LLM Inference

Jan 20, 2026

Abstract: The linear memory growth of the KV cache poses a significant bottleneck for LLM inference in long-context tasks. Existing static compression methods often fail to preserve globally important information, principally because they overlook the attention drift phenomenon, in which token significance evolves dynamically. Although recent dynamic retrieval approaches attempt to address this issue, they typically suffer from coarse-grained caching strategies and incur high I/O overhead due to frequent data transfers. To overcome these limitations, we propose HeteroCache, a training-free dynamic compression framework. Our method is built on two key insights: attention heads exhibit diverse temporal heterogeneity, and there is significant spatial redundancy among heads within the same layer. Guided by these insights, HeteroCache categorizes heads based on stability and redundancy. We then apply a fine-grained weighting strategy that allocates larger cache budgets to heads with rapidly shifting attention to capture context changes, thereby addressing the inefficiency of coarse-grained strategies. Furthermore, we employ a hierarchical storage mechanism in which a subset of representative heads monitors attention shift and triggers asynchronous, on-demand retrieval of contexts from the CPU, effectively hiding I/O latency. Finally, experiments demonstrate that HeteroCache achieves state-of-the-art performance on multiple long-context benchmarks and accelerates decoding by up to $3\times$ compared to the original model in the 224K context. Our code will be open-sourced.
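To make the "linear memory growth" in the abstract above concrete, here is a minimal back-of-the-envelope sketch. The model shape (32 layers, 8 KV heads, head dimension 128, 16-bit storage) is a hypothetical configuration chosen for illustration and is not taken from the paper; reading "224K" as 224 × 1024 tokens is likewise an assumption.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one tensor for keys and one for values in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * seq_len


# Hypothetical model shape (an assumption, not the paper's setup):
# 32 layers, 8 KV heads of dimension 128, stored as 16-bit floats.
config = dict(n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_elem=2)

for tokens in (8 * 1024, 64 * 1024, 224 * 1024):
    gib = kv_cache_bytes(tokens, **config) / 2**30
    print(f"{tokens:>7} tokens -> {gib:.1f} GiB")
```

Under these assumed dimensions the cache costs 128 KiB per token, so it grows from 1 GiB at 8K tokens to 28 GiB at 224K tokens for a single sequence.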

Proteomic Learning of Gamma-Aminobutyric Acid (GABA) Receptor-Mediated Anesthesia

Jan 06, 2025

Abstract: Anesthetics are crucial in surgical procedures and therapeutic interventions, but they come with side effects and varying levels of effectiveness, calling for novel anesthetic agents that offer more precise and controllable effects. Targeting gamma-aminobutyric acid (GABA) receptors, the primary inhibitory receptors in the central nervous system, could enhance their inhibitory action, potentially reducing side effects while improving the potency of anesthetics. In this study, we introduce a proteomic learning approach to GABA receptor-mediated anesthesia based on 24 GABA receptor subtypes, considering over 4000 proteins in protein-protein interaction (PPI) networks and over 1.5 million known binding compounds. We develop a corresponding drug-target interaction network to identify potential lead compounds for novel anesthetic design. To ensure robust proteomic learning predictions, we curated a dataset comprising 136 targets from a pool of 980 targets within the PPI networks. We employed three machine learning algorithms, integrating advanced natural language processing (NLP) models such as pretrained transformer and autoencoder embeddings. Through a comprehensive screening process, we evaluated the side effects and repurposing potential of over 180,000 drug candidates targeting the GABRA5 receptor. Additionally, we assessed the ADMET (absorption, distribution, metabolism, excretion, and toxicity) properties of these candidates to identify those with near-optimal characteristics. This approach also involved optimizing the structures of existing anesthetics. Our work presents an innovative strategy for the development of new anesthetic drugs, the optimization of anesthetic use, and a deeper understanding of potential anesthesia-related side effects.

Is Segment Anything Model 2 All You Need for Surgery Video Segmentation? A Systematic Evaluation

Dec 31, 2024

Abstract: Surgery video segmentation is an important topic in the surgical AI field. It allows an AI model to understand the spatial information of a surgical scene. However, due to the lack of annotated surgical data, surgery segmentation models suffer from limited performance. With the emergence of the SAM2 model, a large foundation model for video segmentation trained on natural videos, zero-shot surgical video segmentation has become more realistic but remains to be explored. In this paper, we systematically evaluate the performance of the SAM2 model on the zero-shot surgery video segmentation task. We conducted experiments under different configurations, including different prompting strategies and robustness, among others. Moreover, we conducted an empirical evaluation of performance across 9 datasets covering 17 different types of surgery.

CP-DETR: Concept Prompt Guide DETR Toward Stronger Universal Object Detection

Dec 13, 2024

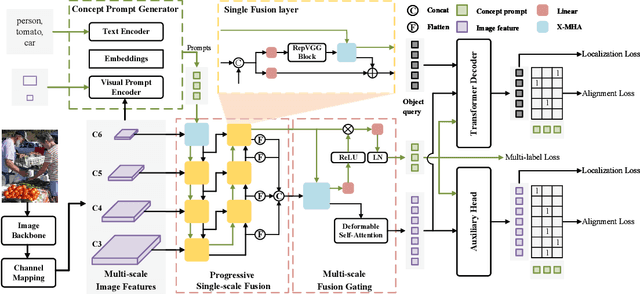

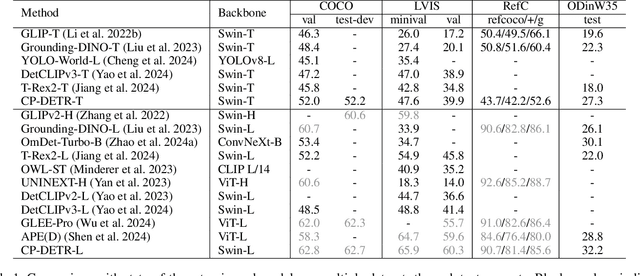

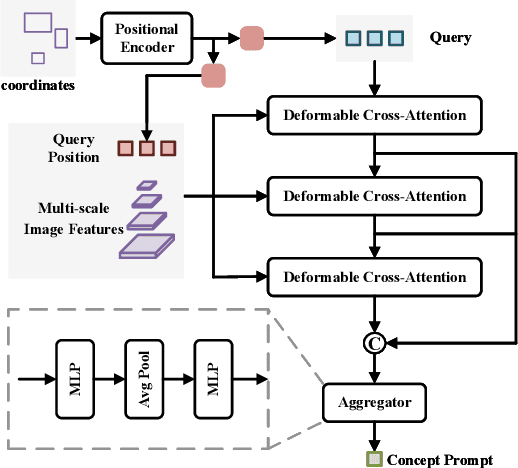

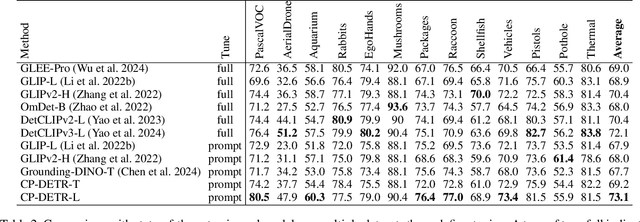

Abstract: Recent research on universal object detection aims to introduce language into a SoTA closed-set detector and then generalize to open-set concepts by constructing large-scale (text-region) datasets for training. However, these methods face two main challenges: (i) how to efficiently use the prior information in the prompts to genericise objects and (ii) how to reduce alignment bias in downstream tasks, both of which lead to sub-optimal performance in some scenarios beyond pre-training. To address these challenges, we propose a strong universal detection foundation model called CP-DETR, which is competitive in almost all scenarios with only a single set of pre-trained weights. Specifically, we design an efficient prompt-visual hybrid encoder that enhances the information interaction between prompt and visual features through scale-by-scale and multi-scale fusion modules. A prompt multi-label loss and an auxiliary detection head then help the hybrid encoder fully utilize the prompt information. In addition to text prompts, we design two practical concept prompt generation methods, visual prompt and optimized prompt, to extract abstract concepts from concrete visual examples and stably reduce alignment bias in downstream tasks. With these effective designs, CP-DETR demonstrates superior universal detection performance in a broad spectrum of scenarios. For example, our Swin-T backbone model achieves 47.6 zero-shot AP on LVIS, and the Swin-L backbone model achieves 32.2 zero-shot AP on ODinW35. Furthermore, our visual prompt generation method achieves 68.4 AP on COCO val by interactive detection, and the optimized prompt achieves 73.1 full-shot AP on ODinW13.

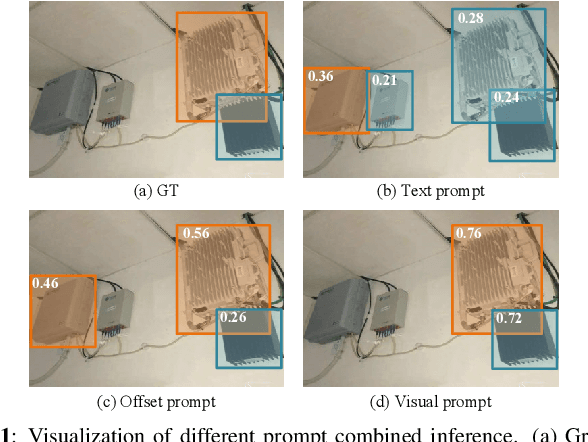

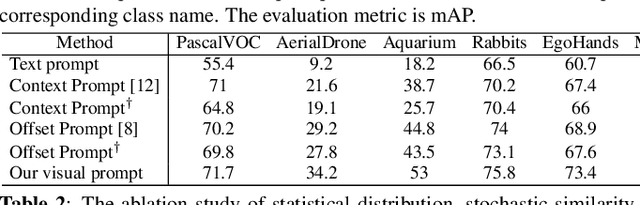

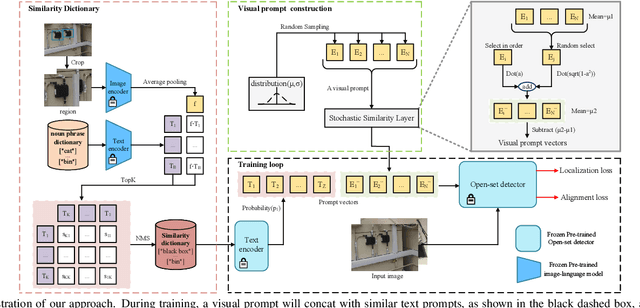

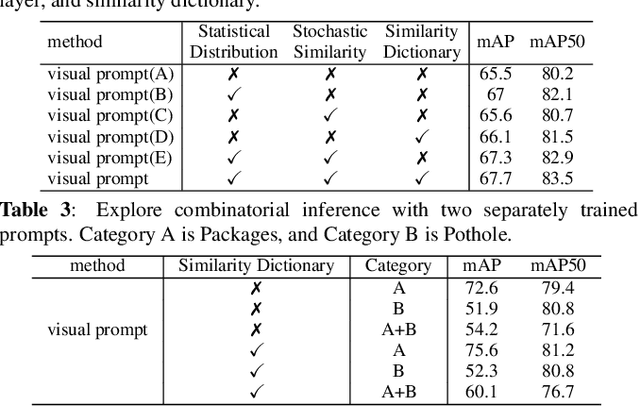

Exploration of visual prompt in Grounded pre-trained open-set detection

Dec 14, 2023

Abstract: Text prompts are crucial for generalizing pre-trained open-set object detection models to new categories. However, current text prompt methods are limited because they require manual feedback when generalizing to new categories, which restricts their ability to model complex scenes and often leads to incorrect detection results. To address this limitation, we propose a novel visual prompt method that learns new category knowledge from a few labeled images and thereby generalizes the pre-trained detection model to the new category. To allow visual prompts to represent new categories adequately, we propose a statistics-based prompt construction module that is not limited by predefined vocabulary lengths, thus allowing more vectors to be used when representing categories. We further utilize the category dictionaries in the pre-training dataset to design task-specific similarity dictionaries, which make visual prompts more discriminative. We evaluate the method on the ODinW dataset and show that it outperforms existing prompt learning methods and performs more consistently in combinatorial inference.

Machine Learning Study of the Extended Drug-target Interaction Network informed by Pain Related Voltage-Gated Sodium Channels

Jul 11, 2023

Abstract: Pain is a significant global health issue, and the current treatment options for pain management have limitations in terms of effectiveness, side effects, and potential for addiction. There is a pressing need for improved pain treatments and the development of new drugs. Voltage-gated sodium channels, particularly Nav1.3, Nav1.7, Nav1.8, and Nav1.9, play a crucial role in neuronal excitability and are predominantly expressed in the peripheral nervous system. Targeting these channels may provide a means to treat pain while minimizing central and cardiac adverse effects. In this study, we construct protein-protein interaction (PPI) networks based on pain-related sodium channels and develop a corresponding drug-target interaction (DTI) network to identify potential lead compounds for pain management. To ensure reliable machine learning predictions, we carefully select 111 inhibitor datasets from a pool of over 1,000 targets in the PPI network. We employ three distinct machine learning algorithms combined with advanced natural language processing (NLP)-based embeddings, specifically pre-trained transformer and autoencoder representations. Through a systematic screening process, we evaluate the side effects and repurposing potential of over 150,000 drug candidates targeting Nav1.7 and Nav1.8 sodium channels. Additionally, we assess the ADMET (absorption, distribution, metabolism, excretion, and toxicity) properties of these candidates to identify leads with near-optimal characteristics. Our strategy provides an innovative platform for the pharmacological development of pain treatments, offering the potential for improved efficacy and reduced side effects.

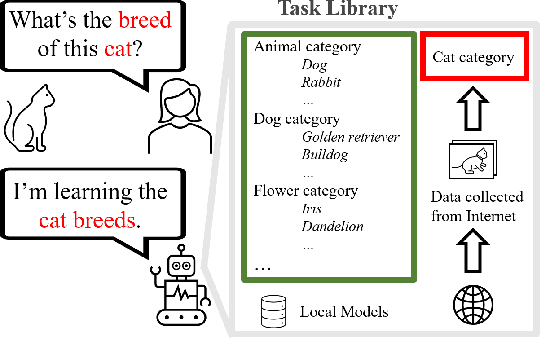

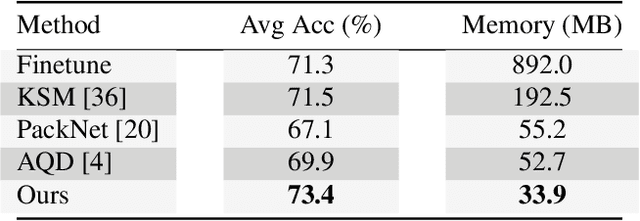

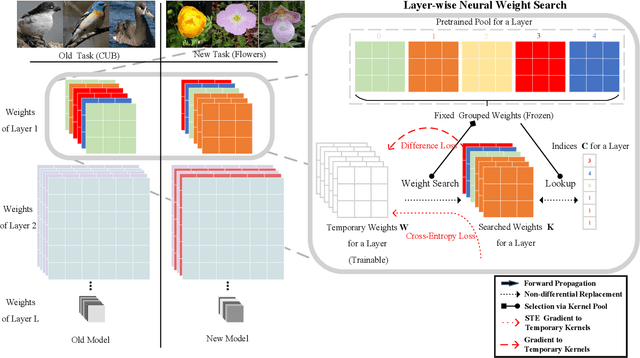

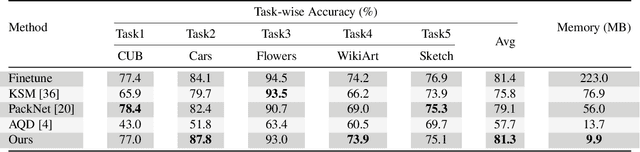

Neural Weight Search for Scalable Task Incremental Learning

Nov 24, 2022

Abstract: Task incremental learning aims to enable a system to maintain its performance on previously learned tasks while learning new tasks, solving the problem of catastrophic forgetting. One promising approach is to build an individual network or sub-network for future tasks. However, this leads to ever-growing memory because extra weights must be saved for new tasks, and how to address this issue has remained an open problem in task incremental learning. In this paper, we introduce a novel Neural Weight Search technique that designs a fixed search space where the optimal combinations of frozen weights can be searched to build new models for novel tasks in an end-to-end manner, resulting in scalable and controllable memory growth. Extensive experiments on two benchmarks, i.e., Split-CIFAR-100 and CUB-to-Sketches, show that our method achieves state-of-the-art performance with respect to both average inference accuracy and total memory cost.

Improving COVID-19 CT Classification of CNNs by Learning Parameter-Efficient Representation

Aug 09, 2022

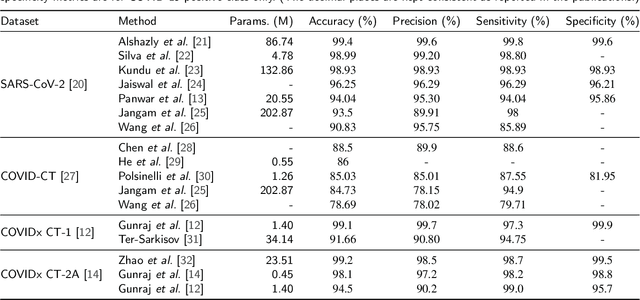

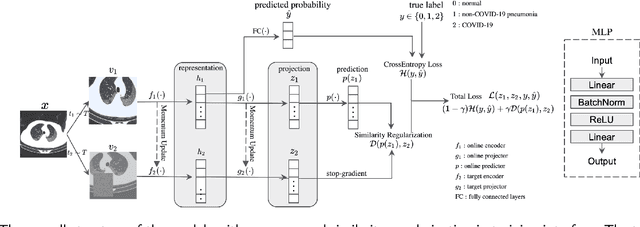

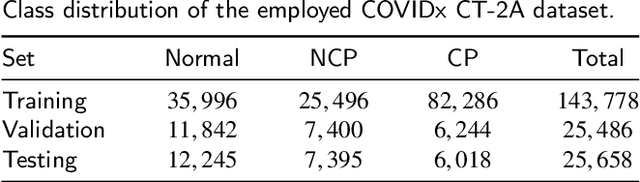

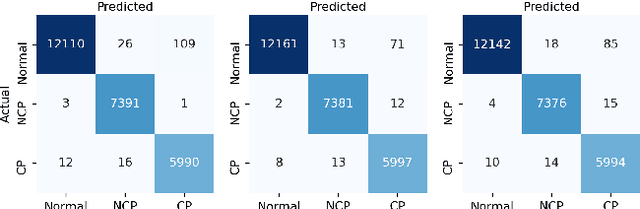

Abstract: The COVID-19 pandemic continues to spread rapidly across the world and has caused a tremendous crisis in global human health and the economy. Its early detection and diagnosis are crucial for controlling further spread. Many deep learning-based methods have been proposed to assist clinicians in automatic COVID-19 diagnosis based on computed tomography imaging. However, challenges remain, including low data diversity in existing datasets and unsatisfactory detection resulting from the insufficient accuracy and sensitivity of deep learning models. To enhance data diversity, we design augmentation techniques of incremental levels and apply them to the largest open-access benchmark dataset, COVIDx CT-2A. In addition, we propose similarity regularization (SR), derived from contrastive learning, to enable CNNs to learn more parameter-efficient representations, thus improving their accuracy and sensitivity. The results on seven commonly used CNNs demonstrate that CNN performance can be consistently improved by applying the designed augmentation and SR techniques. In particular, DenseNet121 with SR achieves an average test accuracy of 99.44% over three trials for three-category classification (normal, non-COVID-19 pneumonia, and COVID-19 pneumonia). The achieved precision, sensitivity, and specificity for the COVID-19 pneumonia category are 98.40%, 99.59%, and 99.50%, respectively. These statistics suggest that our method has surpassed the existing state-of-the-art methods on the COVIDx CT-2A dataset.
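The per-category precision, sensitivity, and specificity quoted above are one-vs-rest quantities derived from a multi-class confusion matrix. The sketch below shows that calculation in plain Python; the matrix values are made up for illustration and are not the paper's results, and the function name `one_vs_rest_metrics` is hypothetical.

```python
def one_vs_rest_metrics(cm, k):
    """Precision, sensitivity and specificity for class k.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # class-k samples predicted as something else
    fp = sum(row[k] for row in cm) - tp  # other classes predicted as class k
    tn = total - tp - fn - fp
    return tp / (tp + fp), tp / (tp + fn), tn / (tn + fp)


# Made-up confusion matrix for illustration only (not the paper's data).
# Class order: normal, non-COVID-19 pneumonia, COVID-19 pneumonia.
cm = [
    [50, 2, 1],
    [3, 45, 2],
    [0, 1, 49],
]
precision, sensitivity, specificity = one_vs_rest_metrics(cm, k=2)
print(f"precision={precision:.4f} "
      f"sensitivity={sensitivity:.4f} "
      f"specificity={specificity:.4f}")
```

Sensitivity only looks at the row of the true class, while specificity only looks at samples from the other classes, which is why both are reported alongside precision.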

MIIDL: a Python package for microbial biomarkers identification powered by interpretable deep learning

Sep 24, 2021

Abstract: Detecting microbial biomarkers used to predict disease phenotypes and clinical outcomes is crucial for early-stage disease screening and diagnosis. Most methods for biomarker identification are linear, which is a serious limitation because biological processes are rarely fully linear. The introduction of machine learning to this field offers a promising solution. However, identifying microbial biomarkers in an interpretable, data-driven, and robust manner remains challenging. We present MIIDL, a Python package for the identification of microbial biomarkers based on interpretable deep learning. MIIDL applies convolutional neural networks, a variety of interpretability algorithms, and numerous pre-processing methods to provide a one-stop, robust pipeline for microbial biomarker identification from high-dimensional and sparse data sets.

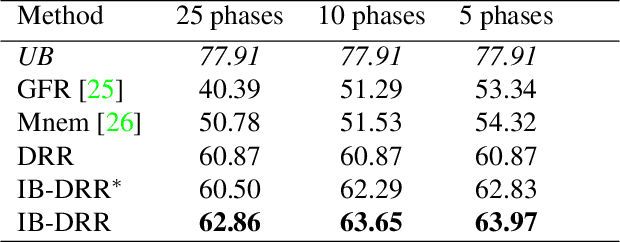

IB-DRR: Incremental Learning with Information-Back Discrete Representation Replay

Apr 21, 2021

Abstract: Incremental learning aims to enable machine learning models to continuously acquire new knowledge given new classes, while maintaining the knowledge already learned for old classes. Saving a subset of training samples of previously seen classes in memory and replaying them during new training phases has proven to be an efficient and effective way to fulfil this aim. Evidently, the more exemplars the model inherits, the better the performance it can achieve. However, finding a trade-off between model performance and the number of samples to save for each class is still an open problem for replay-based incremental learning and is increasingly desirable for real-life applications. In this paper, we approach this open problem with a two-step compression approach. The first step is lossy compression: we propose to encode input images and save their discrete latent representations in the form of codes that are learned using a hierarchical Vector Quantised Variational Autoencoder (VQ-VAE). In the second step, we further compress the codes losslessly by learning a hierarchical latent variable model with bits-back asymmetric numeral systems (BB-ANS). To compensate for the information lost in the first compression step, we introduce an Information Back (IB) mechanism that utilizes real exemplars for a contrastive learning loss to regularize the training of a classifier. By maintaining all seen exemplars' representations in the format of 'codes', Discrete Representation Replay (DRR) outperforms the state-of-the-art method on CIFAR-100 by a margin of 4% accuracy with a much lower memory cost for saving samples. When combined with IB and a small set of old raw exemplars, the accuracy of DRR can be further improved by 2%.
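The lossy first step described above stores discrete codes instead of continuous latents. As a rough illustration of that idea only, the sketch below performs the nearest-codebook lookup at the heart of vector quantisation. It is not the authors' hierarchical VQ-VAE: the codebook and latent values are toy numbers, and the helper names `quantize` and `dequantize` are hypothetical.

```python
def quantize(latents, codebook):
    """Map each latent vector to the index of its nearest codebook entry."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(codebook)), key=lambda i: sq_dist(z, codebook[i]))
        for z in latents
    ]


def dequantize(codes, codebook):
    """Recover approximate latents by looking the stored codes up again."""
    return [codebook[i] for i in codes]


# Toy values for illustration; a real codebook is learned during training.
codebook = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.0)]
latents = [(0.1, -0.2), (0.9, 1.2), (3.5, 0.4)]

codes = quantize(latents, codebook)
print(codes)                        # [0, 1, 2]
print(dequantize(codes, codebook))  # nearest entries, not the original latents
```

Only the integer codes need to be saved per exemplar, which is where the memory saving comes from; the difference between the original latents and the looked-up entries is the information lost in this step.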
