Kunlin Liu

Rethinking the Generation of High-Quality CoT Data from the Perspective of LLM-Adaptive Question Difficulty Grading

Apr 16, 2025

Abstract:Recently, DeepSeek-R1 (671B) (DeepSeek-AI et al., 2025) has demonstrated excellent reasoning ability in complex tasks and has publicly shared its methodology. This provides potentially high-quality chain-of-thought (CoT) data for stimulating the reasoning abilities of small-sized large language models (LLMs). To generate high-quality CoT data for different LLMs, we seek an efficient method for generating such data with LLM-Adaptive question difficulty levels. First, we grade the difficulty of the questions according to the reasoning ability of the LLMs themselves and construct an LLM-Adaptive question database. Second, we sample the question database based on a distribution of question difficulty levels and then use DeepSeek-R1 (671B) (DeepSeek-AI et al., 2025) to generate the corresponding high-quality CoT data with correct answers. Thanks to the construction of CoT data with LLM-Adaptive difficulty levels, we have significantly reduced the cost of data generation and enhanced the efficiency of model supervised fine-tuning (SFT). Finally, we have validated the effectiveness and generalizability of the proposed method on complex mathematical competition and code generation tasks. Notably, with only 2k high-quality mathematical CoT data, our ZMath-32B surpasses DeepSeek-Distill-32B in math reasoning tasks. Similarly, with only 2k high-quality code CoT data, our ZCode-32B surpasses DeepSeek-Distill-32B in code reasoning tasks.
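The two steps named in the abstract (grading questions against the target model, then sampling by a difficulty distribution) might look roughly like the sketch below. It is an illustration only, not the authors' implementation. The abstract does not say how difficulty is measured, so the `pass_rate` field, the thresholds, the three difficulty labels, and the target distribution are all assumptions, as are the function names.

```python
import random
from collections import defaultdict


def grade_difficulty(pass_rate, easy_min=0.75, hard_max=0.25):
    """Map the target model's pass rate on a question to a difficulty label.

    Assumption: difficulty is inferred from how often the model being
    fine-tuned answers the question correctly; the thresholds are made up.
    """
    if pass_rate >= easy_min:
        return "easy"
    if pass_rate <= hard_max:
        return "hard"
    return "medium"


def sample_by_difficulty(questions, target_dist, n, seed=0):
    """Draw about n questions whose difficulty mix follows target_dist."""
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for q in questions:
        buckets[grade_difficulty(q["pass_rate"])].append(q)

    sampled = []
    for level, fraction in target_dist.items():
        # Never ask for more questions than the bucket holds.
        k = min(round(n * fraction), len(buckets[level]))
        sampled.extend(rng.sample(buckets[level], k))
    return sampled


# Hypothetical question pool: pass rates spread evenly between 0 and 1.
pool = [{"id": i, "pass_rate": i / 29} for i in range(30)]
subset = sample_by_difficulty(
    pool, {"easy": 0.2, "medium": 0.3, "hard": 0.5}, n=10
)
```

Because grading depends on the target model's own pass rate, the same question pool would yield a different subset for each model. The sampled questions would then be passed to the teacher model to generate CoT answers, a step this sketch leaves out.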

Speech Pattern based Black-box Model Watermarking for Automatic Speech Recognition

Oct 19, 2021

Abstract:As an effective method for intellectual property (IP) protection, model watermarking technology has been applied to a wide variety of deep neural networks (DNNs), including speech classification models. However, how to design a black-box watermarking scheme for automatic speech recognition (ASR) models remains an unsolved problem, even though such a scheme is much needed to protect remote ASR Application Programming Interfaces (APIs) deployed on cloud servers. Due to the conditional independence assumption of ASR models and the risk of label-detection-based evasion attacks, black-box watermarking schemes designed for speech classification models cannot be applied to ASR models. In this paper, we propose the first black-box model watermarking framework for protecting the IP of ASR models. Specifically, we synthesize trigger audios by spreading the speech clips of model owners over the entire input audios and labeling the trigger audios with stego texts, which hide the authorship information using linguistic steganography. Experiments on the state-of-the-art open-source ASR system DeepSpeech demonstrate the feasibility of the proposed watermarking scheme, which is robust against five kinds of attacks and has little impact on accuracy.

LG-GAN: Label Guided Adversarial Network for Flexible Targeted Attack of Point Cloud-based Deep Networks

Nov 01, 2020

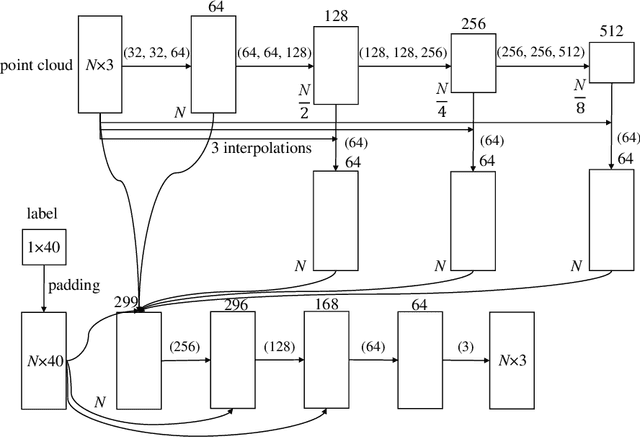

Abstract:Deep neural networks have made tremendous progress in 3D point-cloud recognition. Recent works have shown that these 3D recognition networks are also vulnerable to adversarial samples produced by various attack methods, including the optimization-based 3D Carlini-Wagner attack, the gradient-based iterative fast gradient method, and skeleton-detach-based point dropping. However, a careful analysis shows that these methods are either extremely slow because of their optimization/iterative scheme, or not flexible enough to support targeted attacks on a specific category. To overcome these shortcomings, this paper proposes a novel label guided adversarial network (LG-GAN) for real-time flexible targeted point cloud attack. To the best of our knowledge, this is the first generation-based 3D point cloud attack method. Given the original point clouds and the target attack label, LG-GAN learns how to deform the point clouds to mislead the recognition network into the specified label with only a single forward pass. In detail, LG-GAN first leverages one multi-branch adversarial network to extract hierarchical features of the input point clouds, then incorporates the specified label information into multiple intermediate features using the label encoder. Finally, the encoded features are fed into the coordinate reconstruction decoder to generate the target adversarial sample. By evaluating different point-cloud recognition models (e.g., PointNet, PointNet++ and DGCNN), we demonstrate that the proposed LG-GAN can support flexible targeted attacks on the fly while guaranteeing good attack performance and higher efficiency simultaneously.

DeepFaceLab: A simple, flexible and extensible face swapping framework

May 20, 2020

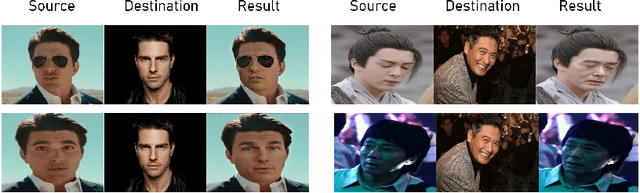

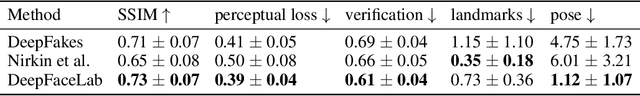

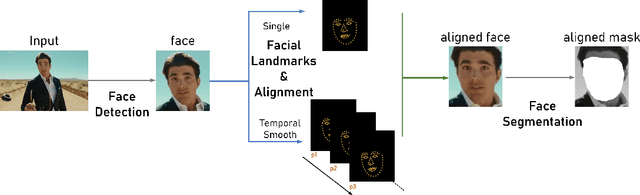

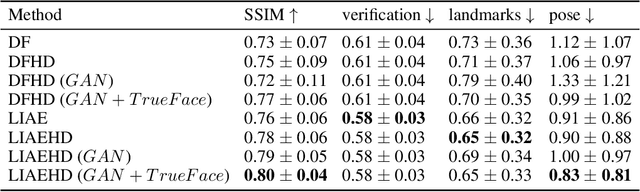

Abstract:DeepFaceLab is an open-source deepfake system created by iperov for face swapping, with more than 3,000 forks and 13,000 stars on GitHub. It provides an imperative and easy-to-use pipeline that requires no comprehensive understanding of deep learning frameworks or model implementation, while retaining a flexible, loosely coupled structure for people who need to strengthen their own pipeline with other features without writing complicated boilerplate code. In this paper, we detail the principles that drive the implementation of DeepFaceLab and introduce its pipeline, every aspect of which can be modified painlessly by users to achieve their customization purposes. Notably, DeepFaceLab can achieve high-fidelity results that are indiscernible by mainstream forgery detection approaches. We demonstrate the advantage of our system by comparing our approach with current prevailing systems. For more information, please visit: https://github.com/iperov/DeepFaceLab/.
