Jan Kieseler

Karlsruhe Institute of Technology, Germany

End-to-End Optimal Detector Design with Mutual Information Surrogates

Mar 18, 2025

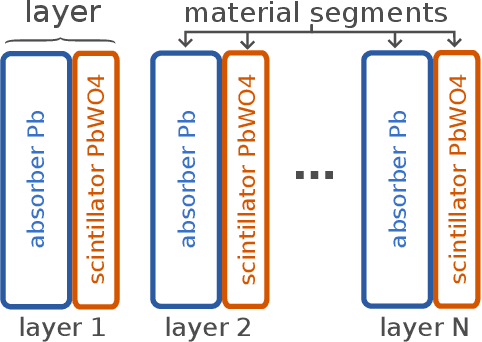

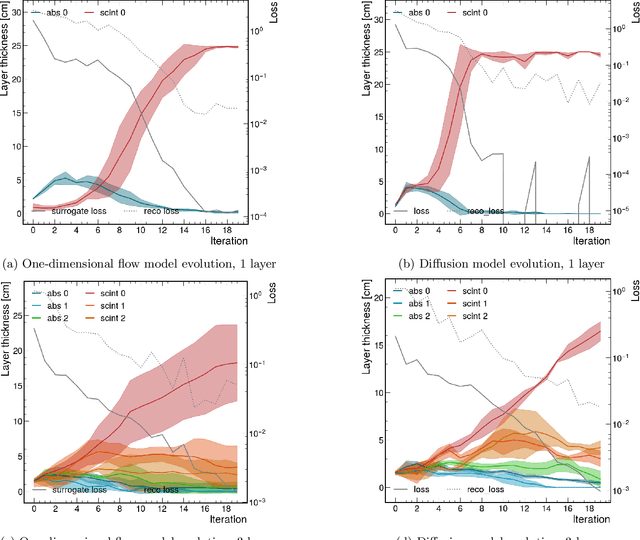

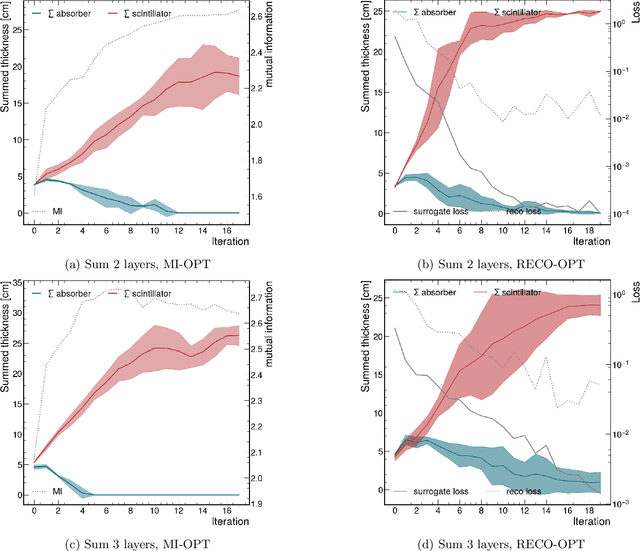

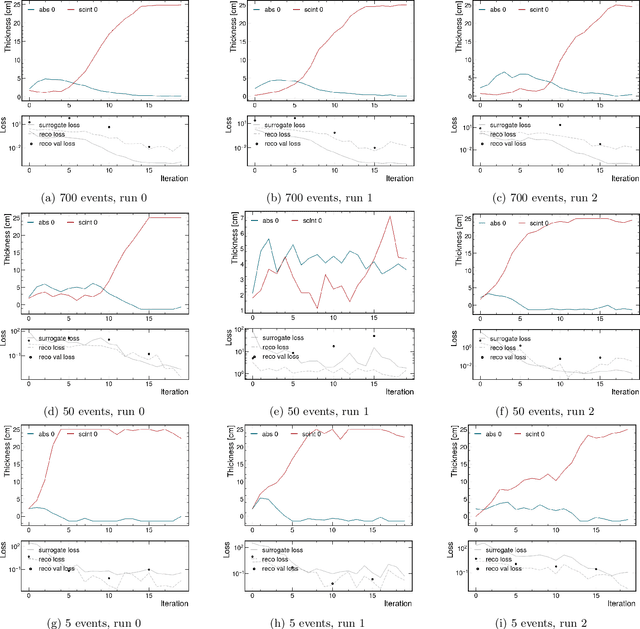

Abstract: We introduce a novel approach for end-to-end black-box optimization of high energy physics (HEP) detectors using local deep learning (DL) surrogates. These surrogates approximate a scalar objective function that encapsulates the complex interplay of particle-matter interactions and physics analysis goals. In addition to a standard reconstruction-based metric commonly used in the field, we investigate the information-theoretic metric of mutual information. Unlike traditional methods, mutual information is inherently task-agnostic, offering a broader optimization paradigm that is less constrained by predefined targets. We demonstrate the effectiveness of our method in a realistic physics analysis scenario: optimizing the thicknesses of calorimeter detector layers based on simulated particle interactions. The surrogate model learns to approximate objective gradients, enabling efficient optimization with respect to energy resolution. Our findings reveal three key insights: (1) end-to-end black-box optimization using local surrogates is a practical and compelling approach for detector design, providing direct optimization of detector parameters in alignment with physics analysis goals; (2) mutual information-based optimization yields design choices that closely match those from state-of-the-art physics-informed methods, indicating that these approaches operate near optimality and reinforcing their reliability in HEP detector design; and (3) information-theoretic methods provide a powerful, generalizable framework for optimizing scientific instruments. By reframing the optimization process through an information-theoretic lens rather than domain-specific heuristics, mutual information enables the exploration of new avenues for discovery beyond conventional approaches.
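To make the mutual information metric mentioned in this abstract concrete, the following is a minimal sketch of the textbook plug-in estimate $I(X;Y) = \sum_{x,y} p(x,y)\,\log_2 \frac{p(x,y)}{p(x)\,p(y)}$ for discrete samples. It is not the surrogate-based estimator the paper describes; the function name `mutual_information` and the toy "true energy bin" versus "detector response bin" data are assumptions made purely for illustration.

```python
import math
from collections import Counter


def mutual_information(pairs):
    """Plug-in estimate of I(X;Y) in bits from a list of (x, y) samples.

    Hypothetical helper for illustration only: it counts occurrences to
    estimate p(x, y), p(x) and p(y), then sums p(x,y) * log2(p(x,y) / (p(x) p(y))).
    """
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # p(x,y) / (p(x) p(y)) simplifies to count * n / (count_x * count_y)
        mi += p_xy * math.log2(count * n / (px[x] * py[y]))
    return mi


# Toy data (assumed): x = true energy bin, y = detector response bin.
informative = [(0, 0), (1, 1)] * 50                  # response fully determines energy
uninformative = [(0, 0), (0, 1), (1, 0), (1, 1)] * 25  # response independent of energy

print(mutual_information(informative))    # 1.0 bit
print(mutual_information(uninformative))  # 0.0 bits
```

In this toy picture, a detector configuration whose response carries more bits about the true quantity scores higher, without fixing a particular reconstruction target in advance.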

Neuromorphic Readout for Hadron Calorimeters

Feb 18, 2025

Abstract: We simulate hadrons impinging on a homogeneous lead-tungstate (PbWO4) calorimeter to investigate how the resulting light yield and its temporal structure, as detected by an array of light-sensitive sensors, can be processed by a neuromorphic computing system. Our model encodes temporal photon distributions as spike trains and employs a fully connected spiking neural network to estimate the total deposited energy, as well as the position and spatial distribution of the light emissions within the sensitive material. The extracted primitives offer valuable topological information about the shower development in the material, achieved without requiring a segmentation of the active medium. A potential nanophotonic implementation using III-V semiconductor nanowires is discussed. It can be both fast and energy efficient.

Constrained Optimization of Charged Particle Tracking with Multi-Agent Reinforcement Learning

Jan 09, 2025

Abstract: Reinforcement learning has demonstrated immense success in modelling complex physics-driven systems, providing end-to-end trainable solutions by interacting with a simulated or real environment, maximizing a scalar reward signal. In this work, we propose, building upon previous work, a multi-agent reinforcement learning approach with assignment constraints for reconstructing particle tracks in pixelated particle detectors. Our approach collaboratively optimizes a parametrized policy, functioning as a heuristic to a multidimensional assignment problem, by jointly minimizing the total amount of particle scattering over the reconstructed tracks in a readout frame. To satisfy constraints, guaranteeing a unique assignment of particle hits, we propose a safety layer solving a linear assignment problem for every joint action. Further, to enforce cost margins, increasing the distance of the local policies' predictions to the decision boundaries of the optimizer mappings, we recommend the use of an additional component in the blackbox gradient estimation, forcing the policy to solutions with lower total assignment costs. We empirically show on simulated data, generated for a particle detector developed for proton imaging, the effectiveness of our approach, compared to multiple single- and multi-agent baselines. We further demonstrate the effectiveness of constraints with cost margins for both optimization and generalization, introduced by wider regions with high reconstruction performance as well as reduced predictive instabilities. Our results form the basis for further developments in RL-based tracking, offering both enhanced performance with constrained policies and greater flexibility in optimizing tracking algorithms through the option for individual and team rewards.
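The role of a safety layer that solves a linear assignment problem can be illustrated with a small sketch. Here each row of a cost matrix stands for one agent (a hit in one layer) and each column for a candidate hit in the next layer; the matrix values, the function name `solve_assignment`, and the brute-force search are assumptions for illustration, not the paper's implementation. A practical system would more likely use a polynomial-time solver such as the Hungarian algorithm.

```python
from itertools import permutations


def solve_assignment(cost):
    """Brute-force linear assignment for a small square cost matrix.

    Returns (perm, total) where perm[i] is the column assigned to row i and
    total is the minimal summed cost. Only feasible for small n, since it
    enumerates all n! one-to-one assignments.
    """
    n = len(cost)
    best_perm, best_cost = None, float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_cost:
            best_perm, best_cost = perm, total
    return best_perm, best_cost


# Hypothetical per-agent costs (e.g. scattering penalties) for 3 hits x 3 candidates.
cost = [
    [4, 1, 3],
    [2, 0, 5],
    [3, 2, 2],
]

# Independent greedy choices collide: every agent prefers candidate 1.
greedy = [row.index(min(row)) for row in cost]
print(greedy)                  # [1, 1, 1]

# The assignment step returns a unique candidate per agent.
print(solve_assignment(cost))  # ((1, 0, 2), 5)
```

The greedy choices violate uniqueness, whereas the assignment solution gives every agent a distinct candidate at the lowest total cost, which is the constraint the abstract describes.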

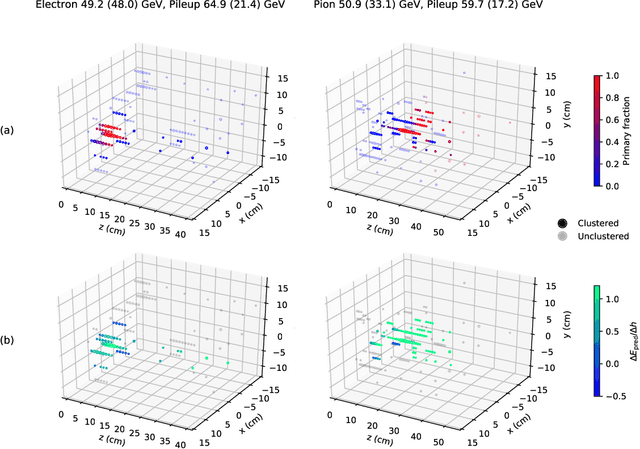

End-to-end multi-particle reconstruction in high occupancy imaging calorimeters with graph neural networks

Apr 14, 2022

Abstract: We present an end-to-end reconstruction algorithm to build particle candidates from detector hits in next-generation granular calorimeters similar to that foreseen for the high-luminosity upgrade of the CMS detector. The algorithm exploits a distance-weighted graph neural network, trained with object condensation, a graph segmentation technique. Through a single-shot approach, the reconstruction task is paired with energy regression. We describe the reconstruction performance in terms of efficiency as well as in terms of energy resolution. In addition, we show the jet reconstruction performance of our method and discuss its inference computational cost. To our knowledge, this work is the first-ever example of single-shot calorimetric reconstruction of ${\cal O}(1000)$ particles in high-luminosity conditions with 200 pileup.

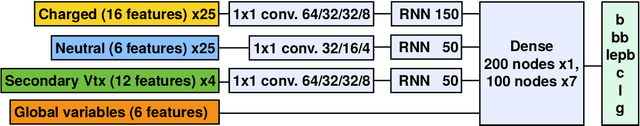

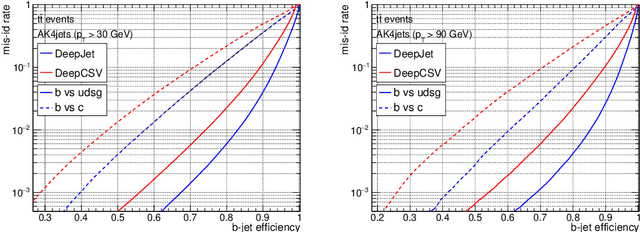

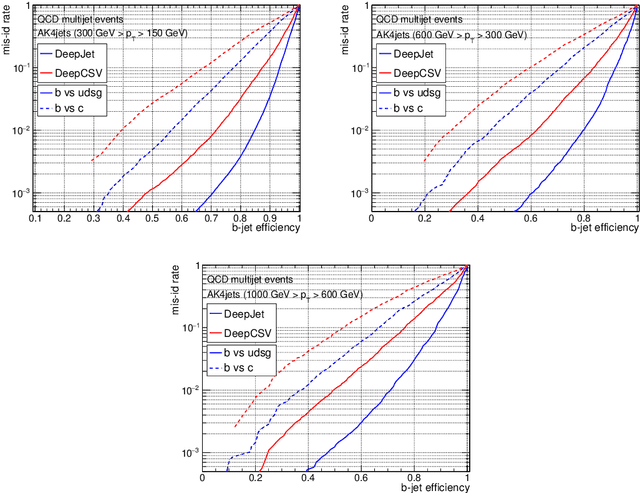

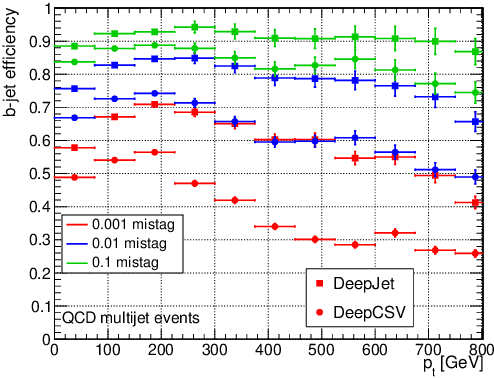

Jet Flavour Classification Using DeepJet

Aug 24, 2020

Abstract: Jet flavour classification is of paramount importance for a broad range of applications in modern-day high-energy-physics experiments, particularly at the LHC. In this paper we propose a novel architecture for this task that exploits modern deep learning techniques. This new model, called DeepJet, overcomes the limitations in input size that affected previous approaches. As a result, the classification performance improves and the number of classes to be predicted can be increased. As such, DeepJet is paving the way to an all-inclusive jet classifier.

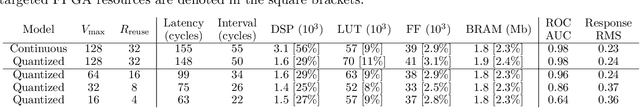

Distance-Weighted Graph Neural Networks on FPGAs for Real-Time Particle Reconstruction in High Energy Physics

Aug 08, 2020

Abstract: Graph neural networks have been shown to achieve excellent performance for several crucial tasks in particle physics, such as charged particle tracking, jet tagging, and clustering. An important domain for the application of these networks is the FPGA-based first layer of real-time data filtering at the CERN Large Hadron Collider, which has strict latency and resource constraints. We discuss how to design distance-weighted graph networks that can be executed with a latency of less than 1 $\mu\mathrm{s}$ on an FPGA. To do so, we consider a representative task associated with particle reconstruction and identification in a next-generation calorimeter operating at a particle collider. We use a graph network architecture developed for such purposes, and apply additional simplifications to match the computing constraints of Level-1 trigger systems, including weight quantization. Using the $\mathtt{hls4ml}$ library, we convert the compressed models into firmware to be implemented on an FPGA. Performance of the synthesized models is presented both in terms of inference accuracy and resource usage.
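Weight quantization, mentioned above as one of the simplifications, can be sketched generically as mapping floating-point weights onto a signed fixed-point grid. The helper below is an assumption-laden illustration and not the $\mathtt{hls4ml}$ API: the name `quantize_fixed`, the convention that `int_bits` includes the sign bit, and the choice of round-to-nearest with saturation are all picked here for clarity.

```python
import math


def quantize_fixed(x, total_bits=8, int_bits=2):
    """Quantize x to a signed fixed-point grid (hypothetical helper).

    Assumed convention: int_bits counts the sign bit, so the representable
    range is [-2**(int_bits-1), 2**(int_bits-1) - step] with
    step = 2**-(total_bits - int_bits). Values are rounded to the nearest
    grid point and saturated at the range limits.
    """
    frac_bits = total_bits - int_bits
    step = 2.0 ** -frac_bits
    lo = -(2.0 ** (int_bits - 1))
    hi = 2.0 ** (int_bits - 1) - step
    q = math.floor(x / step + 0.5) * step  # round half up onto the grid
    return min(max(q, lo), hi)             # saturate instead of wrapping


weights = [0.3, 0.5, 5.0, -5.0]
print([quantize_fixed(w) for w in weights])
# [0.296875, 0.5, 1.984375, -2.0]
```

With 8 total bits and 2 integer bits, the grid spacing is 1/64, so 0.3 lands on 19/64 and out-of-range values are clipped. Fewer bits reduce FPGA resource usage at the price of a coarser grid, which is the trade-off between inference accuracy and resource usage that the abstract refers to.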

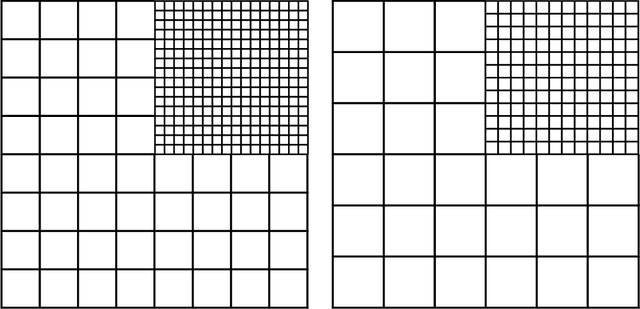

Object condensation: one-stage grid-free multi-object reconstruction in physics detectors, graph and image data

Feb 10, 2020

Abstract: High-energy physics detectors, images, and point clouds share many similarities as far as object detection is concerned. However, while detecting an unknown number of objects in an image is well established in computer vision, even machine learning assisted object reconstruction algorithms in particle physics almost exclusively predict properties on an object-by-object basis. One of the reasons is that traditional approaches to deep-neural-network-based multi-object detection usually employ anchor boxes, imposing implicit constraints on object sizes and density, which are not well suited for highly sparse detector data with differences in densities spanning multiple orders of magnitude. Other approaches rely heavily on objects being dense and solid, with well-defined edges and a central point that is used as a keypoint to attach properties. This approach is also not directly applicable to generic detector signals. The object condensation method proposed here is independent of assumptions on object size, sorting or object density, and further generalises to non-image-like data structures, such as graphs and point clouds, which are more suitable to represent detector signals. The pixels or vertices themselves serve as representations of the entire object. A combination of learnable local clustering in a latent space and confidence assignment allows one to collect condensates of the predicted object properties with a simple algorithm. This paper also includes a proof of concept application to simple image data.
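One plausible reading of "collect condensates ... with a simple algorithm" is a greedy pass over vertices ordered by confidence: the most confident unassigned vertex becomes a condensation point, and nearby vertices in the latent space are attached to it. The sketch below follows that reading under stated assumptions. The function name `collect_condensates`, the thresholds `t_beta` and `t_dist`, and the toy inputs are hypothetical, and in the method itself the latent coordinates and confidences would come from a trained network.

```python
import math


def collect_condensates(coords, beta, t_beta=0.5, t_dist=1.0):
    """Greedy collection of condensation points (illustrative sketch).

    coords: latent-space coordinates per vertex (assumed network output).
    beta:   confidence per vertex in [0, 1] (assumed network output).
    Returns (centers, assignment) where assignment[j] is the index of the
    condensation point vertex j belongs to, or -1 if left unassigned.
    """
    order = sorted(range(len(beta)), key=lambda i: beta[i], reverse=True)
    assignment = [-1] * len(beta)
    centers = []
    for i in order:
        if beta[i] < t_beta:
            break                      # remaining vertices are too unconfident
        if assignment[i] != -1:
            continue                   # already absorbed by an earlier center
        centers.append(i)
        for j in range(len(beta)):
            if assignment[j] == -1 and math.dist(coords[i], coords[j]) <= t_dist:
                assignment[j] = i
    return centers, assignment


# Toy latent space: two tight groups and one isolated low-confidence vertex.
coords = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.2, 5.0), (10.0, 10.0)]
beta = [0.9, 0.2, 0.8, 0.6, 0.1]
print(collect_condensates(coords, beta))
# ([0, 2], [0, 0, 2, 2, -1])
```

The object properties predicted at each condensation point would then be read off as the properties of the reconstructed object, so the number of objects never has to be fixed in advance.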

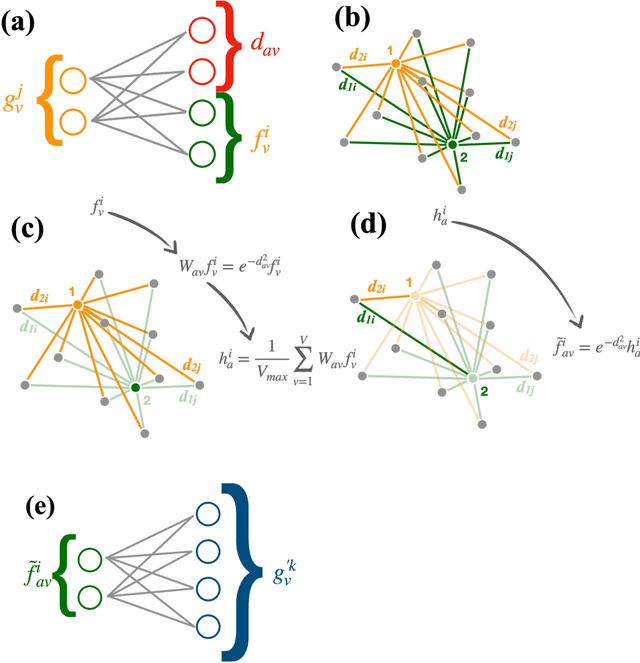

Learning representations of irregular particle-detector geometry with distance-weighted graph networks

Feb 21, 2019

Abstract: We explore the use of graph networks to deal with irregular-geometry detectors in the context of particle reconstruction. Thanks to their representation-learning capabilities, graph networks can exploit the full detector granularity, while natively managing the event sparsity and arbitrarily complex detector geometries. We introduce two distance-weighted graph network architectures, dubbed GarNet and GravNet layers, and apply them to a typical particle reconstruction task. The performance of the new architectures is evaluated on a data set of simulated particle interactions on a toy model of a highly granular calorimeter, loosely inspired by the endcap calorimeter to be installed in the CMS detector for the High-Luminosity LHC phase. We study the clustering of energy depositions, which is the basis for calorimetric particle reconstruction, and provide a quantitative comparison to alternative approaches. The proposed algorithms outperform existing methods or reach competitive performance with lower computing-resource consumption. Being geometry-agnostic, the new architectures are not restricted to calorimetry and can be easily adapted to other use cases, such as tracking in silicon detectors.
