Jaewoong Choi

Rate-Optimal Noise Annealing in Semi-Dual Neural Optimal Transport: Tangential Identifiability, Off-Manifold Ambiguity, and Guaranteed Recovery

Feb 04, 2026

Abstract:Semi-dual neural optimal transport learns a transport map via a max-min objective, yet training can converge to incorrect or degenerate maps. We fully characterize these spurious solutions in the common regime where data concentrate on a low-dimensional manifold: the objective is underconstrained off the data manifold, while the on-manifold transport signal remains identifiable. Following Choi, Choi, and Kwon (2025), we study additive-noise smoothing as a remedy and prove new map recovery guarantees as the noise vanishes. Our main practical contribution is a computable terminal noise level $\varepsilon_{\mathrm{stat}}(N)$ that attains the optimal statistical rate, with scaling governed by the intrinsic dimension $m$ of the data. The formula arises from a unified theoretical analysis of (i) quantitative stability of optimal plans, (ii) smoothing-induced bias, and (iii) finite-sample error, yielding rates that depend on $m$ rather than the ambient dimension. Finally, we show that the reduced semi-dual objective becomes increasingly ill-conditioned as $\varepsilon \downarrow 0$. This provides a principled stopping rule: annealing below $\varepsilon_{\mathrm{stat}}(N)$ can $\textit{worsen}$ optimization conditioning without improving statistical accuracy.

Overcoming Fake Solutions in Semi-Dual Neural Optimal Transport: A Smoothing Approach for Learning the Optimal Transport Plan

Feb 07, 2025

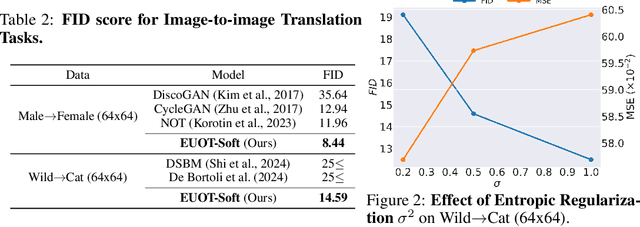

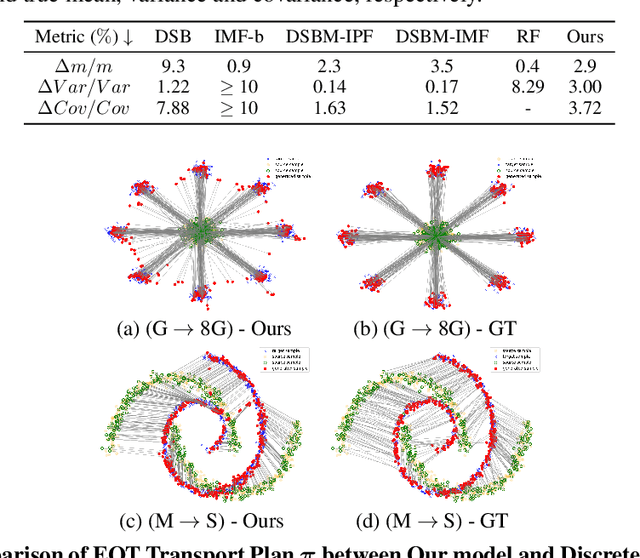

Abstract:We address the convergence problem in learning the Optimal Transport (OT) map, where the OT Map refers to a map from one distribution to another while minimizing the transport cost. Semi-dual Neural OT, a widely used approach for learning OT Maps with neural networks, often generates fake solutions that fail to transfer one distribution to another accurately. We identify a sufficient condition under which the max-min solution of Semi-dual Neural OT recovers the true OT Map. Moreover, to address cases when this sufficient condition is not satisfied, we propose a novel method, OTP, which learns both the OT Map and the Optimal Transport Plan, representing the optimal coupling between two distributions. Under sharp assumptions on the distributions, we prove that our model eliminates the fake solution issue and correctly solves the OT problem. Our experiments show that the OTP model recovers the optimal transport map where existing methods fail and outperforms current OT-based models in image-to-image translation tasks. Notably, the OTP model can learn stochastic transport maps when deterministic OT Maps do not exist, such as one-to-many tasks like colorization.

Dynamic technology impact analysis: A multi-task learning approach to patent citation prediction

Nov 14, 2024

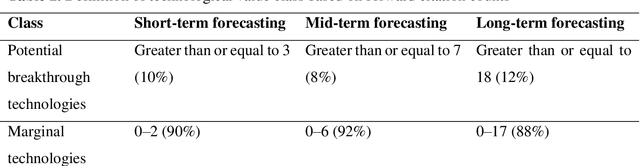

Abstract:Machine learning (ML) models are valuable tools for analyzing the impact of technology using patent citation information. However, existing ML-based methods often struggle to account for the dynamic nature of the technology impact over time and the interdependencies of these impacts across different periods. This study proposes a multi-task learning (MTL) approach to enhance the prediction of technology impact across various time frames by leveraging knowledge sharing and simultaneously monitoring the evolution of technology impact. First, we quantify the technology impacts and identify patterns through citation analysis over distinct time periods. Next, we develop MTL models to predict citation counts using multiple patent indicators over time. Finally, we examine the changes in key input indicators and their patterns over different periods using the SHapley Additive exPlanation method. We also offer guidelines for validating and interpreting the results by employing statistical methods and natural language processing techniques. A case study on battery technologies demonstrates that our approach not only deepens the understanding of technology impact, but also improves prediction accuracy, yielding valuable insights for both academia and industry.

Novelty-focused R&D landscaping using transformer and local outlier factor

Nov 05, 2024

Abstract:While numerous studies have explored the field of research and development (R&D) landscaping, the preponderance of these investigations has emphasized predictive analysis based on R&D outcomes, specifically patents and academic literature. However, the value of research proposals and novelty analysis has seldom been addressed. This study proposes a systematic approach to constructing and navigating the R&D landscape that can be utilized to guide organizations to respond in a reproducible and timely manner to the challenges presented by the increasing number of research proposals. At the heart of the proposed approach is the composite use of the transformer-based language model and the local outlier factor (LOF). The semantic meaning of the research proposals is captured with our further-trained transformers, thereby constructing a comprehensive R&D landscape. Subsequently, the novelty of the newly selected research proposals within the annual landscape is quantified on a numerical scale utilizing the LOF by assessing the dissimilarity of each proposal to others preceding and within the same year. A case study examining research proposals in the energy and resource sector in South Korea is presented. The systematic process and quantitative outcomes are expected to be useful decision-support tools, providing future insights regarding R&D planning and roadmapping.

Robust Barycenter Estimation using Semi-Unbalanced Neural Optimal Transport

Oct 04, 2024

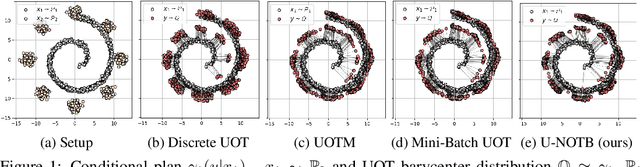

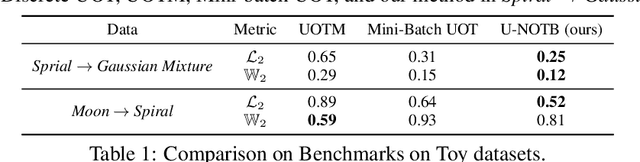

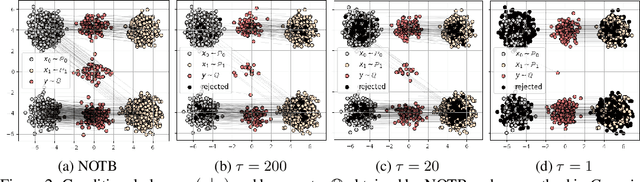

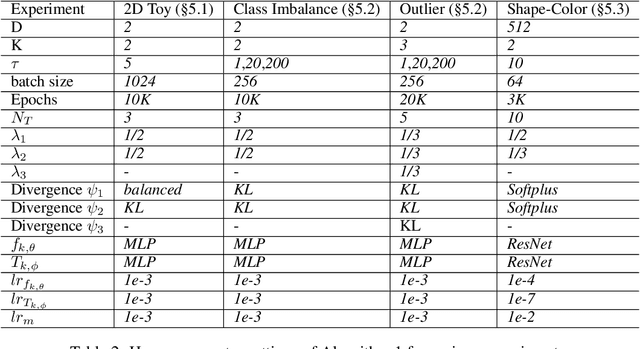

Abstract:A common challenge in aggregating data from multiple sources can be formalized as an \textit{Optimal Transport} (OT) barycenter problem, which seeks to compute the average of probability distributions with respect to OT discrepancies. However, the presence of outliers and noise in the data measures can significantly hinder the performance of traditional statistical methods for estimating OT barycenters. To address this issue, we propose a novel, scalable approach for estimating the \textit{robust} continuous barycenter, leveraging the dual formulation of the \textit{(semi-)unbalanced} OT problem. To the best of our knowledge, this paper is the first attempt to develop an algorithm for robust barycenters under the continuous distribution setup. Our method is framed as a $\min$-$\max$ optimization problem and is adaptable to \textit{general} cost functions. We rigorously establish the theoretical underpinnings of the proposed method and demonstrate its robustness to outliers and class imbalance through a number of illustrative experiments.

Unsupervised Point Cloud Completion through Unbalanced Optimal Transport

Oct 03, 2024

Abstract:Unpaired point cloud completion explores methods for learning a completion map from unpaired incomplete and complete point cloud data. In this paper, we propose a novel approach for unpaired point cloud completion using the unbalanced optimal transport map, called Unbalanced Optimal Transport Map for Unpaired Point Cloud Completion (UOT-UPC). We demonstrate that the unpaired point cloud completion can be naturally interpreted as the Optimal Transport (OT) problem and introduce the Unbalanced Optimal Transport (UOT) approach to address the class imbalance problem, which is prevalent in unpaired point cloud completion datasets. Moreover, we analyze the appropriate cost function for unpaired completion tasks. This analysis shows that the InfoCD cost function is particularly well-suited for this task. Our model is the first attempt to leverage UOT for unpaired point cloud completion, achieving competitive or superior results on both single-category and multi-category datasets. In particular, our model is especially effective in scenarios with class imbalance, where the proportions of categories are different between the incomplete and complete point cloud datasets.

Improving Neural Optimal Transport via Displacement Interpolation

Oct 03, 2024

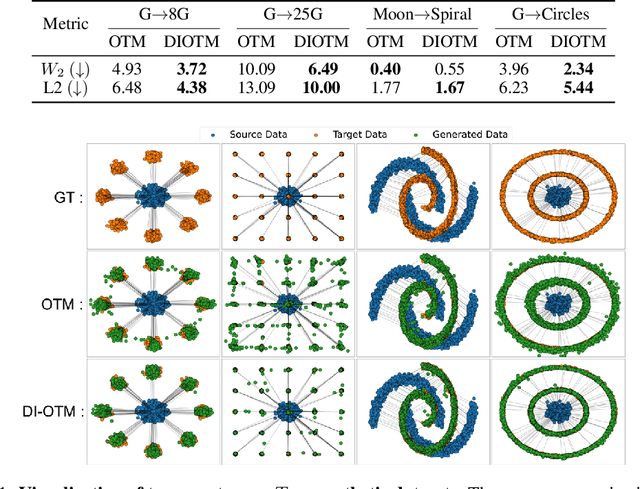

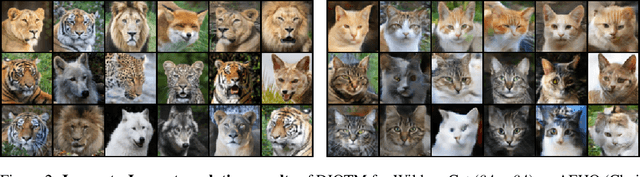

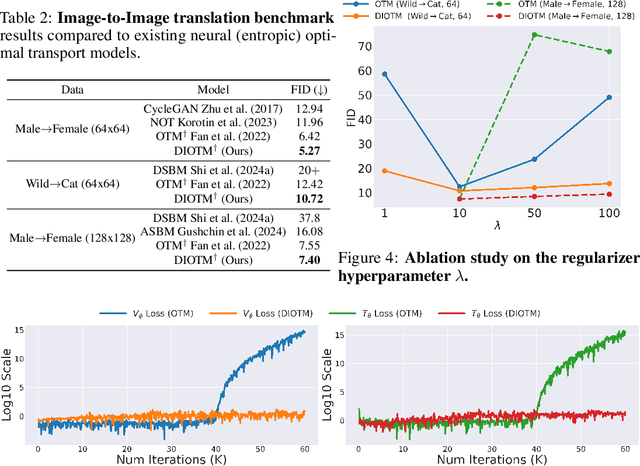

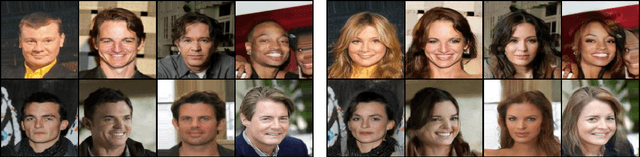

Abstract:Optimal Transport (OT) theory investigates the cost-minimizing transport map that moves a source distribution to a target distribution. Recently, several approaches have emerged for learning the optimal transport map for a given cost function using neural networks. We refer to these approaches as the OT Map. OT Map provides a powerful tool for diverse machine learning tasks, such as generative modeling and unpaired image-to-image translation. However, existing methods that utilize max-min optimization often experience training instability and sensitivity to hyperparameters. In this paper, we propose a novel method to improve stability and achieve a better approximation of the OT Map by exploiting displacement interpolation, dubbed Displacement Interpolation Optimal Transport Model (DIOTM). We derive the dual formulation of displacement interpolation at a specific time $t$ and prove how these dual problems are related across time. This result allows us to utilize the entire trajectory of displacement interpolation in learning the OT Map. Our method improves the training stability and achieves superior results in estimating optimal transport maps. We demonstrate that DIOTM outperforms existing OT-based models on image-to-image translation tasks.

Scalable Simulation-free Entropic Unbalanced Optimal Transport

Oct 03, 2024

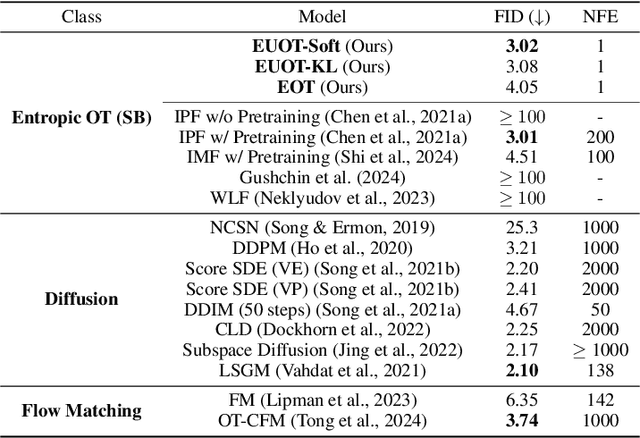

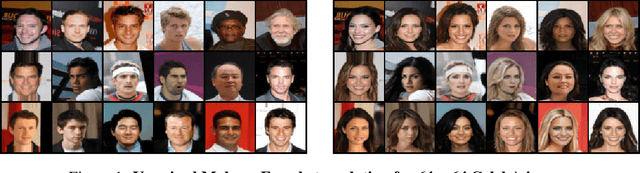

Abstract:The Optimal Transport (OT) problem investigates a transport map that connects two distributions while minimizing a given cost function. Finding such a transport map has diverse applications in machine learning, such as generative modeling and image-to-image translation. In this paper, we introduce a scalable and simulation-free approach for solving the Entropic Unbalanced Optimal Transport (EUOT) problem. We derive the dynamical form of this EUOT problem, which is a generalization of the Schrödinger bridge (SB) problem. Based on this, we derive the dual formulation and optimality conditions of the EUOT problem from the stochastic optimal control interpretation. By leveraging these properties, we propose a simulation-free algorithm to solve EUOT, called Simulation-free EUOT (SF-EUOT). While existing SB models require expensive simulation costs during training and evaluation, our model achieves simulation-free training and one-step generation by utilizing the reciprocal property. Our model demonstrates significantly improved scalability in generative modeling and image-to-image translation tasks compared to previous SB methods.

Early screening of potential breakthrough technologies with enhanced interpretability: A patent-specific hierarchical attention network model

Jul 24, 2024

Abstract:Despite the usefulness of machine learning approaches for the early screening of potential breakthrough technologies, their practicality is often hindered by opaque models. To address this, we propose an interpretable machine learning approach to predicting future citation counts from patent texts using a patent-specific hierarchical attention network (PatentHAN) model. Central to this approach are (1) a patent-specific pre-trained language model, capturing the meanings of technical words in patent claims, (2) a hierarchical network structure, enabling detailed analysis at the claim level, and (3) a claim-wise self-attention mechanism, revealing pivotal claims during the screening process. A case study of 35,376 pharmaceutical patents demonstrates the effectiveness of our approach in early screening of potential breakthrough technologies while ensuring interpretability. Furthermore, we conduct additional analyses using different language models and claim types to examine the robustness of the approach. It is expected that the proposed approach will enhance expert-machine collaboration in identifying breakthrough technologies, providing new insight derived from text mining into technological value.

Text-to-Battery Recipe: A language modeling-based protocol for automatic battery recipe extraction and retrieval

Jul 22, 2024

Abstract:Recent studies have increasingly applied natural language processing (NLP) to automatically extract experimental research data from the extensive battery materials literature. Despite the complex process involved in battery manufacturing -- from material synthesis to cell assembly -- there has been no comprehensive study systematically organizing this information. In response, we propose a language modeling-based protocol, Text-to-Battery Recipe (T2BR), for the automatic extraction of end-to-end battery recipes, validated using a case study on batteries containing LiFePO4 cathode material. We report machine learning-based paper filtering models, screening 2,174 relevant papers from the keyword-based search results, and unsupervised topic models to identify 2,876 paragraphs related to cathode synthesis and 2,958 paragraphs related to cell assembly. Then, focusing on the two topics, two deep learning-based named entity recognition models are developed to extract a total of 30 entities -- including precursors, active materials, and synthesis methods -- achieving F1 scores of 88.18% and 94.61%. The accurate extraction of entities enables the systematic generation of 165 end-to-end recipes of LiFePO4 batteries. Our protocol and results offer valuable insights into specific trends, such as associations between precursor materials and synthesis methods, or combinations between different precursor materials. We anticipate that our findings will serve as a foundational knowledge base for facilitating battery-recipe information retrieval. The proposed protocol will significantly accelerate the review of battery material literature and catalyze innovations in battery design and development.
