Jaehee Park

Reflections from the 2024 Large Language Model (LLM) Hackathon for Applications in Materials Science and Chemistry

Nov 20, 2024

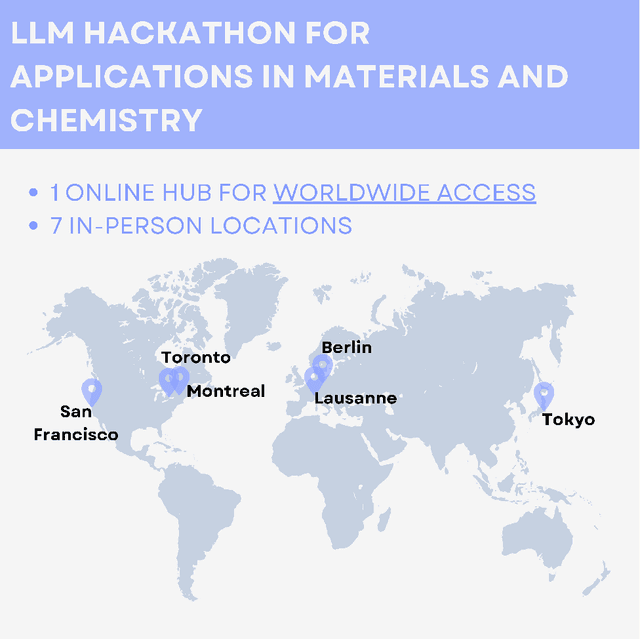

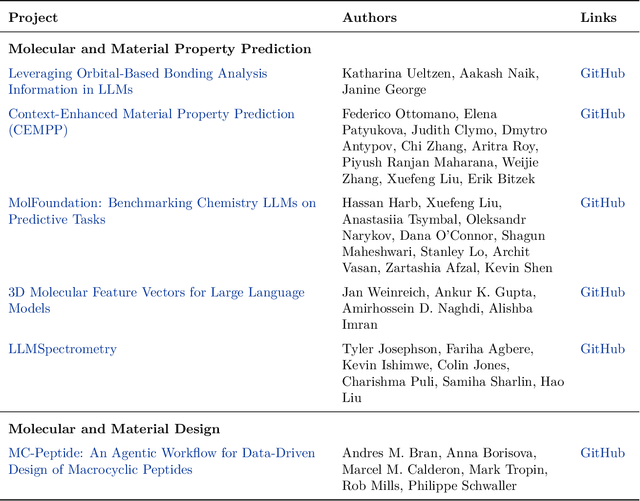

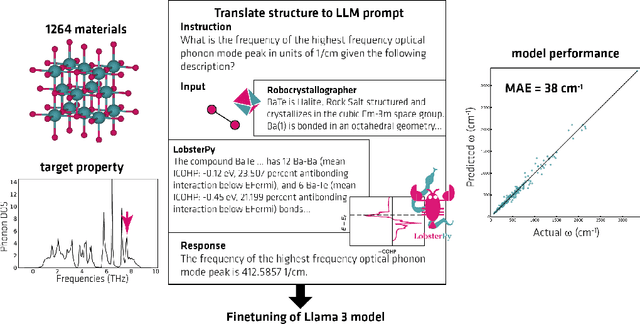

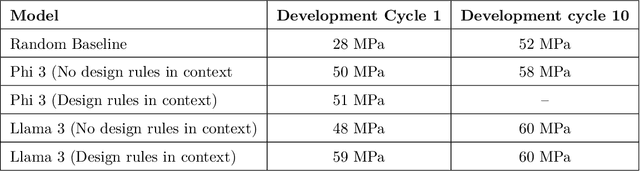

Abstract: Here, we present the outcomes from the second Large Language Model (LLM) Hackathon for Applications in Materials Science and Chemistry, which engaged participants across global hybrid locations, resulting in 34 team submissions. The submissions spanned seven key application areas and demonstrated the diverse utility of LLMs for applications in (1) molecular and material property prediction; (2) molecular and material design; (3) automation and novel interfaces; (4) scientific communication and education; (5) research data management and automation; (6) hypothesis generation and evaluation; and (7) knowledge extraction and reasoning from scientific literature. Each team submission is presented in a summary table with links to the code and as brief papers in the appendix. Beyond team results, we discuss the hackathon event and its hybrid format, which included physical hubs in Toronto, Montreal, San Francisco, Berlin, Lausanne, and Tokyo, alongside a global online hub to enable local and virtual collaboration. Overall, the event highlighted significant improvements in LLM capabilities since the previous year's hackathon, suggesting continued expansion of LLMs for applications in materials science and chemistry research. These outcomes demonstrate the dual utility of LLMs as both multipurpose models for diverse machine learning tasks and platforms for rapidly prototyping custom applications in scientific research.

Parallelized computational 3D video microscopy of freely moving organisms at multiple gigapixels per second

Jan 19, 2023

Abstract: To study the behavior of freely moving model organisms such as zebrafish (Danio rerio) and fruit flies (Drosophila) across multiple spatial scales, it would be ideal to use a light microscope that can resolve 3D information over a wide field of view (FOV) at high speed and high spatial resolution. However, it is challenging to design an optical instrument to achieve all of these properties simultaneously. Existing techniques for large-FOV microscopic imaging and for 3D image measurement typically require many sequential image snapshots, thus compromising speed and throughput. Here, we present 3D-RAPID, a computational microscope based on a synchronized array of 54 cameras that can capture high-speed 3D topographic videos over a 135-cm^2 area, achieving up to 230 frames per second at throughputs exceeding 5 gigapixels (GPs) per second. 3D-RAPID features a 3D reconstruction algorithm that, for each synchronized temporal snapshot, simultaneously fuses all 54 images seamlessly into a globally-consistent composite that includes a coregistered 3D height map. The self-supervised 3D reconstruction algorithm itself trains a spatiotemporally-compressed convolutional neural network (CNN) that maps raw photometric images to 3D topography, using stereo overlap redundancy and ray-propagation physics as the only supervision mechanism. As a result, our end-to-end 3D reconstruction algorithm is robust to generalization errors and scales to arbitrarily long videos from arbitrarily sized camera arrays. The scalable hardware and software design of 3D-RAPID addresses a longstanding problem in the field of behavioral imaging, enabling parallelized 3D observation of large collections of freely moving organisms at high spatiotemporal throughputs, which we demonstrate in ants (Pogonomyrmex barbatus), fruit flies, and zebrafish larvae.

Multi-scale gigapixel microscopy using a multi-camera array microscope

Nov 30, 2022

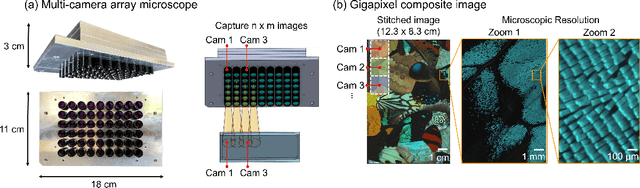

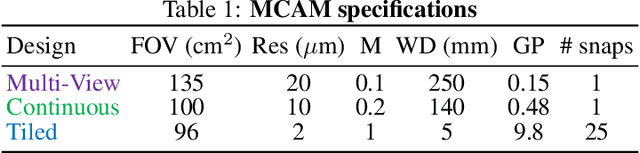

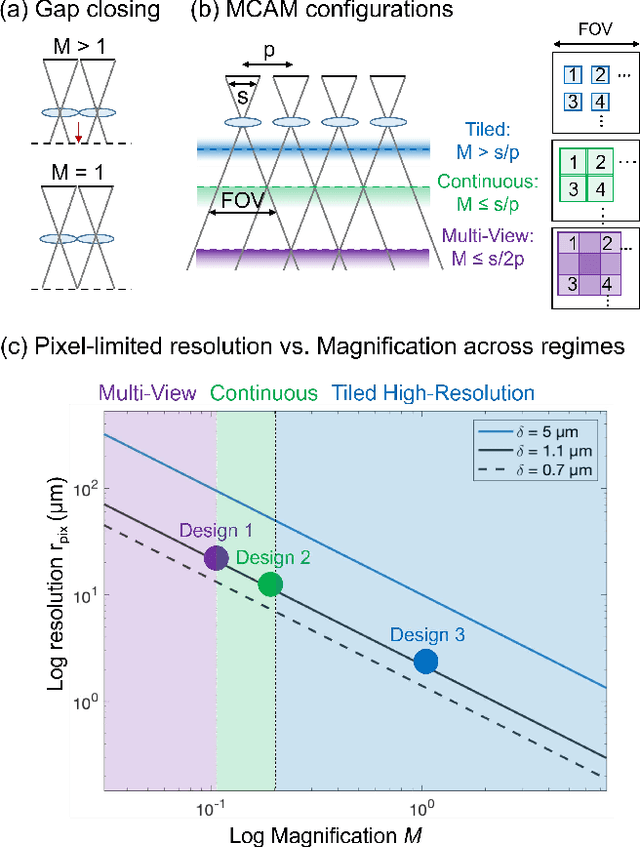

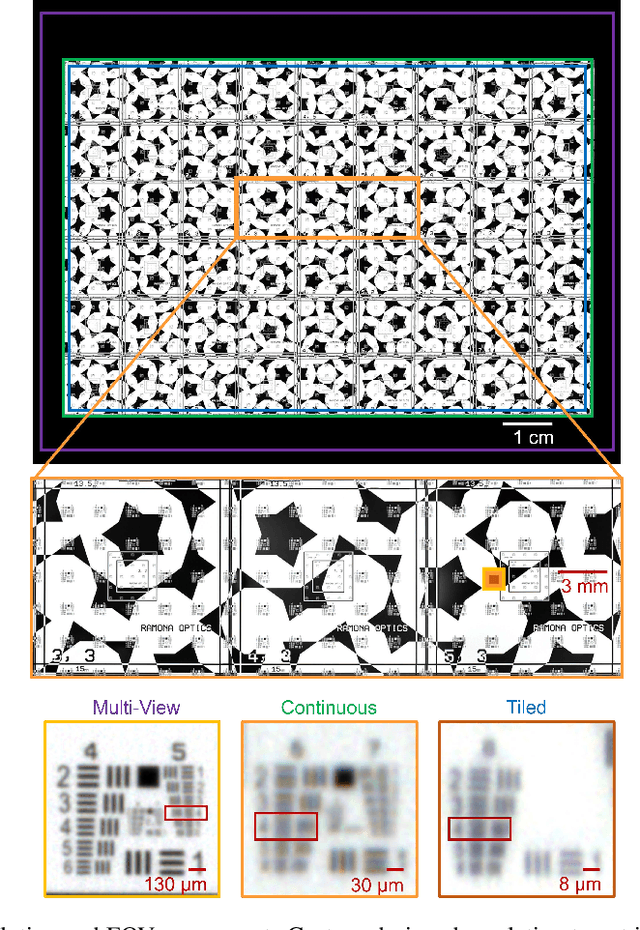

Abstract: This article experimentally examines different configurations of a novel multi-camera array microscope (MCAM) imaging technology. The MCAM is based upon a densely packed array of "micro-cameras" to jointly image across a large field-of-view at high resolution. Each micro-camera within the array images a unique area of a sample of interest, and then all acquired data with 54 micro-cameras are digitally combined into composite frames, whose total pixel counts significantly exceed the pixel counts of standard microscope systems. We present results from three unique MCAM configurations for different use cases. First, we demonstrate a configuration that simultaneously images and estimates the 3D object depth across a 100 x 135 mm^2 field-of-view (FOV) at approximately 20 µm resolution, which results in 0.15 gigapixels (GP) per snapshot. Second, we demonstrate an MCAM configuration that records video across a continuous 83 x 123 mm^2 FOV with two-fold increased resolution (0.48 GP per frame). Finally, we report a third high-resolution configuration (2 µm resolution) that can rapidly produce 9.8 GP composites of large histopathology specimens.

Mesoscopic photogrammetry with an unstabilized phone camera

Dec 11, 2020

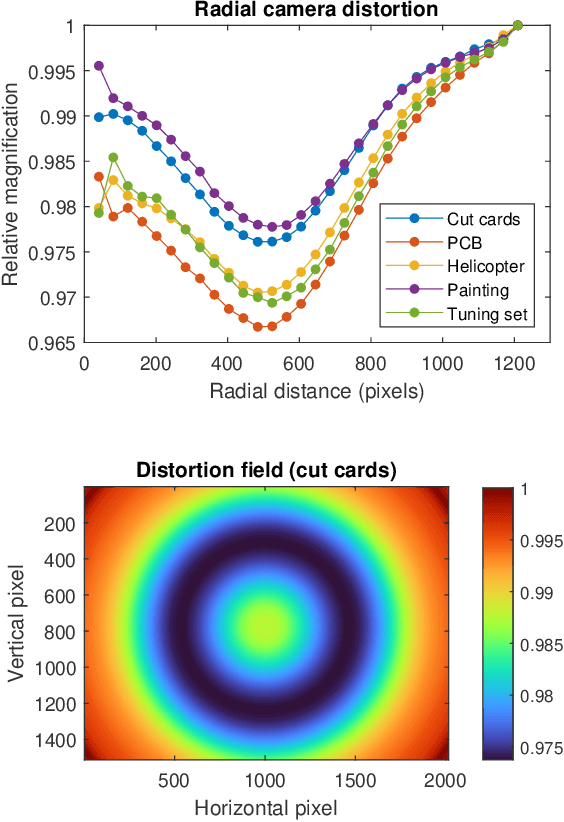

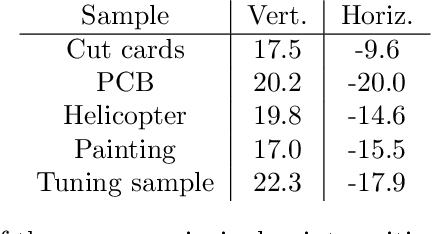

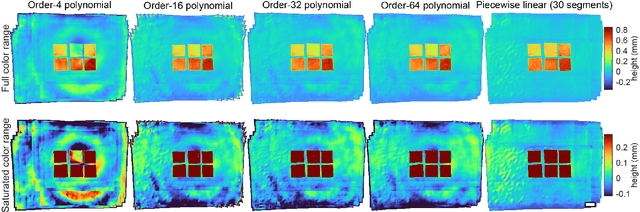

Abstract: We present a feature-free photogrammetric technique that enables quantitative 3D mesoscopic (mm-scale height variation) imaging with tens-of-micron accuracy from sequences of images acquired by a smartphone at close range (several cm) under freehand motion without additional hardware. Our end-to-end, pixel-intensity-based approach jointly registers and stitches all the images by estimating a coaligned height map, which acts as a pixel-wise radial deformation field that orthorectifies each camera image to allow homographic registration. The height maps themselves are reparameterized as the output of an untrained encoder-decoder convolutional neural network (CNN) with the raw camera images as the input, which effectively removes many reconstruction artifacts. Our method also jointly estimates both the camera's dynamic 6D pose and its distortion using a nonparametric model, the latter of which is especially important in mesoscopic applications when using cameras not designed for imaging at short working distances, such as smartphone cameras. We also propose strategies for reducing computation time and memory, applicable to other multi-frame registration problems. Finally, we demonstrate our method using sequences of multi-megapixel images captured by an unstabilized smartphone on a variety of samples (e.g., painting brushstrokes, circuit board, seeds).
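To illustrate what "a pixel-wise radial deformation field that orthorectifies each camera image" can mean geometrically, here is a minimal sketch. It is not the authors' implementation. It assumes an ideal, distortion-free pinhole camera pointing straight down from a known height above a flat reference plane, whereas the method described above also estimates 6D pose and lens distortion. The function names `project_to_plane` and `orthorectify_coords`, and the 50 mm camera height, are hypothetical choices made for this example.

```python
import numpy as np

def project_to_plane(xy_true, height, camera_height):
    """Perspective view of raised points, expressed on the reference plane.

    xy_true: (N, 2) lateral positions relative to the point directly
             below the camera (the nadir), in mm.
    height:  (N,) height of each point above the reference plane, in mm.
    camera_height: camera center height above the reference plane, in mm.
    A point at height h appears pushed radially outward by Z / (Z - h).
    """
    xy_true = np.asarray(xy_true, dtype=float)
    height = np.asarray(height, dtype=float)
    scale = camera_height / (camera_height - height)
    return xy_true * scale[:, None]

def orthorectify_coords(xy_apparent, height, camera_height):
    """Undo the radial parallax shift using the per-point height.

    The correction is a radial rescaling by (1 - h / Z), so each point
    moves along the line joining it to the nadir.
    """
    xy_apparent = np.asarray(xy_apparent, dtype=float)
    height = np.asarray(height, dtype=float)
    scale = 1.0 - height / camera_height
    return xy_apparent * scale[:, None]

# Illustrative numbers only: camera 50 mm above the plane, mm-scale heights.
camera_height = 50.0
xy_true = np.array([[10.0, 0.0], [3.0, -4.0], [0.0, 0.0]])
height = np.array([1.0, 0.5, 2.0])

xy_apparent = project_to_plane(xy_true, height, camera_height)
xy_rectified = orthorectify_coords(xy_apparent, height, camera_height)
```

In this idealized model the shift is purely radial and grows with both the distance from the nadir and the point's height, which is why a height map can serve as a deformation field; once the shift is removed, overlapping images of the now effectively flat scene can be related by homographies.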
