Jérôme Bolte

TSE-R

Improving in-context learning with a better scoring function

Aug 20, 2025

Abstract: Large language models (LLMs) exhibit a remarkable capacity to learn by analogy, known as in-context learning (ICL). However, recent studies have revealed limitations in this ability. In this paper, we examine these limitations on tasks involving first-order quantifiers such as *all* and *some*, as well as on ICL with linear functions. We identify Softmax, the scoring function in the attention mechanism, as a contributing factor to these constraints. To address this, we propose **scaled signed averaging (SSA)**, a novel alternative to Softmax. Empirical results show that SSA dramatically improves performance on our target tasks. Furthermore, we evaluate both encoder-only and decoder-only transformer models with SSA, demonstrating that they match or exceed their Softmax-based counterparts across a variety of linguistic probing tasks.

When majority rules, minority loses: bias amplification of gradient descent

May 19, 2025

Abstract: Despite growing empirical evidence of bias amplification in machine learning, its theoretical foundations remain poorly understood. We develop a formal framework for majority-minority learning tasks, showing how standard training can favor majority groups and produce stereotypical predictors that neglect minority-specific features. Assuming population and variance imbalance, our analysis reveals three key findings: (i) the close proximity between "full-data" and stereotypical predictors, (ii) the dominance of a region where training the entire model tends to merely learn the majority traits, and (iii) a lower bound on the additional training required. Our results are illustrated through experiments in deep learning for tabular and image classification tasks.

A second-order-like optimizer with adaptive gradient scaling for deep learning

Oct 08, 2024

Abstract: In this empirical article, we introduce INNAprop, an optimization algorithm that combines the INNA method with the RMSprop adaptive gradient scaling. It leverages second-order information and rescaling while keeping the memory requirements of standard DL methods such as AdamW or SGD with momentum. After recalling our geometrical motivations, we provide extensive experiments. On image classification (CIFAR-10, ImageNet) and language modeling (GPT-2), INNAprop consistently matches or outperforms AdamW both in training speed and accuracy, with minimal hyperparameter tuning in large-scale settings. Our code is publicly available at https://github.com/innaprop/innaprop.

Inexact subgradient methods for semialgebraic functions

Apr 30, 2024

Abstract: Motivated by the widespread use of approximate derivatives in machine learning and optimization, we study inexact subgradient methods with non-vanishing additive errors and step sizes. In the nonconvex semialgebraic setting, under boundedness assumptions, we prove that the method provides points that eventually fluctuate close to the critical set at a distance proportional to $\epsilon^\rho$ where $\epsilon$ is the error in subgradient evaluation and $\rho$ relates to the geometry of the problem. In the convex setting, we provide complexity results for the averaged values. We also obtain byproducts of independent interest, such as descent-like lemmas for nonsmooth nonconvex problems and some results on the limit of affine interpolants of differential inclusions.
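As a rough illustration of the setting (not the paper's method or experiments), the sketch below runs a subgradient method with a constant step size and a bounded additive error on the toy function f(x) = |x|. The function, the names `alpha` and `eps`, and the uniform noise model are assumptions made for this example only.

```python
import random


def inexact_subgradient(x0, alpha, eps, steps, seed=0):
    """Subgradient method on f(x) = |x| with additive errors bounded by eps.

    Toy setting chosen for illustration: neither the step size nor the
    error level vanishes, so the iterates are not expected to converge.
    """
    rng = random.Random(seed)
    x = x0
    history = [x]
    for _ in range(steps):
        # Exact subgradient of |x| (0 is a valid choice at x == 0).
        g = (x > 0) - (x < 0)
        # Inexact oracle: exact subgradient plus a bounded error.
        e = rng.uniform(-eps, eps)
        x = x - alpha * (g + e)
        history.append(x)
    return history


alpha, eps = 0.1, 0.5
xs = inexact_subgradient(x0=5.0, alpha=alpha, eps=eps, steps=500)
tail = max(abs(x) for x in xs[-100:])
print(f"largest |x| over the last 100 iterates: {tail:.4f}")
```

In this one-dimensional example with `eps < 1`, the iterates enter the interval |x| ≤ `alpha * (1 + eps)` around the critical point 0 and keep fluctuating inside it. That bound is a property of the toy problem, not the $\epsilon^\rho$ estimate stated in the abstract.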

One-step differentiation of iterative algorithms

May 23, 2023

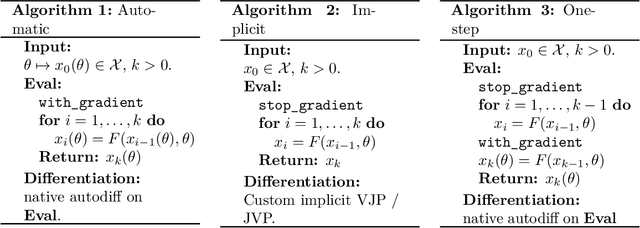

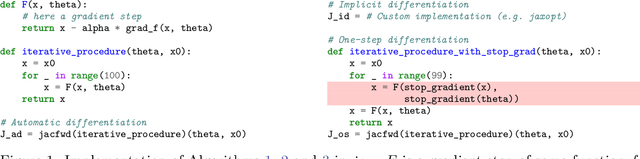

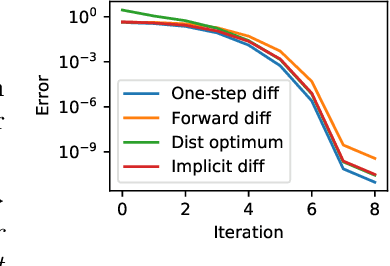

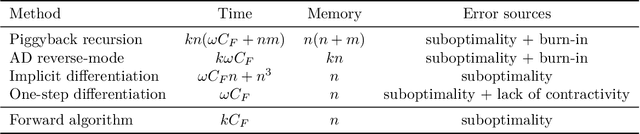

Abstract: In appropriate frameworks, automatic differentiation is transparent to the user at the cost of being a significant computational burden when the number of operations is large. For iterative algorithms, implicit differentiation alleviates this issue but requires custom implementation of Jacobian evaluation. In this paper, we study one-step differentiation, also known as Jacobian-free backpropagation, a method as easy as automatic differentiation and as performant as implicit differentiation for fast algorithms (e.g., superlinear optimization methods). We provide a complete theoretical approximation analysis with specific examples (Newton's method, gradient descent) along with its consequences in bilevel optimization. Several numerical examples illustrate the well-foundedness of the one-step estimator.
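A minimal sketch of the one-step idea, assuming a toy problem that is not taken from the paper: Newton's method for x² = θ, whose solution is √θ. Only the last iteration is differentiated with respect to θ, and the previous iterate is treated as a constant. The function names are hypothetical.

```python
import math


def newton_sqrt(theta, x0=1.0, iters=20):
    """Newton's method for x**2 = theta; returns the last two iterates."""
    x_prev = x0
    x = x0
    for _ in range(iters):
        x_prev = x
        x = 0.5 * (x_prev + theta / x_prev)
    return x_prev, x


def one_step_derivative(theta, x0=1.0, iters=20):
    """Differentiate only the final Newton step with respect to theta.

    The previous iterate is held constant, so
    d/dtheta [0.5 * (x_prev + theta / x_prev)] = 0.5 / x_prev.
    """
    x_prev, _ = newton_sqrt(theta, x0, iters)
    return 0.5 / x_prev


theta = 2.0
estimate = one_step_derivative(theta)
exact = 0.5 / math.sqrt(theta)  # derivative of sqrt(theta)
print(estimate, exact)
```

In this example the one-step estimate agrees with the exact derivative up to rounding, because the derivative of the Newton map with respect to x vanishes at the solution. This matches the abstract's remark that the approach suits fast (superlinear) algorithms.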

Differentiating Nonsmooth Solutions to Parametric Monotone Inclusion Problems

Dec 15, 2022

Abstract: We leverage path differentiability and a recent result on nonsmooth implicit differentiation calculus to give sufficient conditions ensuring that the solution to a monotone inclusion problem will be path differentiable, with formulas for computing its generalized gradient. A direct consequence of our result is that these solutions happen to be differentiable almost everywhere. Our approach is fully compatible with automatic differentiation and comes with assumptions which are easy to check, roughly speaking: semialgebraicity and strong monotonicity. We illustrate the scope of our results by considering three fundamental composite problem settings: strongly convex problems, dual solutions to convex minimization problems and primal-dual solutions to min-max problems.

Nonsmooth automatic differentiation: a cheap gradient principle and other complexity results

Jun 01, 2022

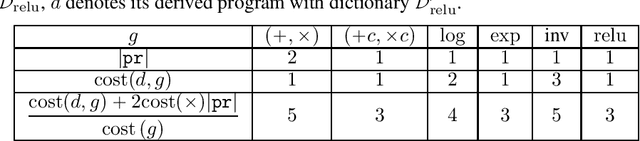

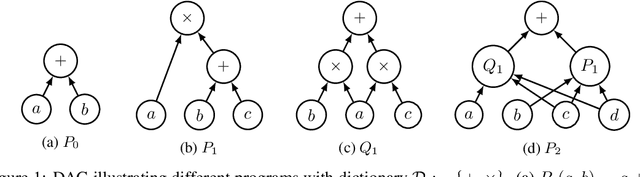

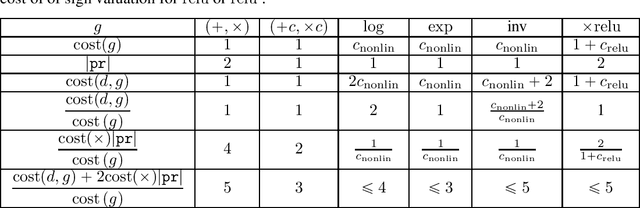

Abstract: We provide a simple model to estimate the computational costs of the backward and forward modes of algorithmic differentiation for a wide class of nonsmooth programs. Prominent examples are the famous ReLU and convolutional neural networks together with their standard loss functions. Using the recent notion of conservative gradients, we then establish a "nonsmooth cheap gradient principle" for backpropagation encompassing most concrete applications. Nonsmooth backpropagation's cheapness contrasts with competing forward approaches which have, to date, dimension-dependent worst-case estimates. In order to understand this class of methods, we relate the complexity of computing a large number of directional derivatives to that of matrix multiplication. This shows a fundamental limitation for improving forward AD for that task. Finally, while the fastest algorithms for computing a Clarke subgradient are linear in the dimension, it appears that computing two distinct Clarke (resp. lexicographic) subgradients for simple neural networks is NP-hard.

Automatic differentiation of nonsmooth iterative algorithms

May 31, 2022

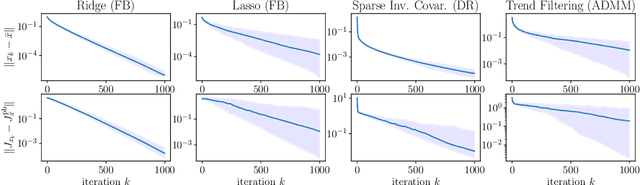

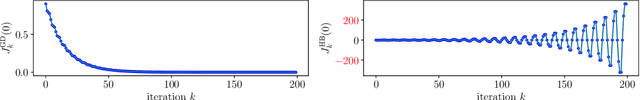

Abstract: Differentiation along algorithms, i.e., piggyback propagation of derivatives, is now routinely used to differentiate iterative solvers in differentiable programming. Asymptotics is well understood for many smooth problems but the nondifferentiable case is hardly considered. Is there a limiting object for nonsmooth piggyback automatic differentiation (AD)? Does it have any variational meaning and can it be used effectively in machine learning? Is there a connection with classical derivatives? All these questions are addressed under appropriate nonexpansivity conditions in the framework of conservative derivatives, which has proved useful in understanding nonsmooth AD. We characterize the attractor set of nonsmooth piggyback iterations as a set-valued fixed point which remains in the conservative framework. This has various consequences, in particular almost everywhere convergence of classical derivatives. Our results are illustrated on parametric convex optimization problems with forward-backward, Douglas-Rachford and Alternating Direction Method of Multipliers algorithms as well as the Heavy-Ball method.
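To make "piggyback propagation" concrete, here is a small sketch on an assumed toy problem (not one of the paper's experiments): forward-backward iterations for min over x of 0.5·(x − θ)² + λ|x|, with a derivative estimate `d` of dx/dθ carried along with the iterate. The function names and the choice of slope 0 at the kinks of the soft-thresholding operator are assumptions.

```python
def soft_threshold(u, t):
    """Proximal operator of t * |x|."""
    if u > t:
        return u - t
    if u < -t:
        return u + t
    return 0.0


def soft_threshold_slope(u, t):
    """One element of the generalized derivative of soft_threshold in u.

    At the kinks |u| == t we pick 0; this selection is an assumption.
    """
    return 1.0 if abs(u) > t else 0.0


def piggyback_forward_backward(theta, lam, gamma=0.5, iters=60):
    """Forward-backward on 0.5 * (x - theta)**2 + lam * |x|.

    Propagates d, an estimate of dx/dtheta, alongside the iterate x.
    """
    x, d = 0.0, 0.0
    for _ in range(iters):
        u = x - gamma * (x - theta)      # gradient step on the smooth part
        du = (1.0 - gamma) * d + gamma   # chain rule through the gradient step
        d = soft_threshold_slope(u, gamma * lam) * du
        x = soft_threshold(u, gamma * lam)  # proximal step
    return x, d


print(piggyback_forward_backward(theta=2.0, lam=1.0))
print(piggyback_forward_backward(theta=0.5, lam=1.0))
```

The solution of this toy problem is the soft-thresholding of θ at level λ, so the propagated derivative should approach 1 when |θ| > λ and 0 when |θ| < λ, which is what the two calls above probe.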

Numerical influence of ReLU'(0) on backpropagation

Jun 29, 2021

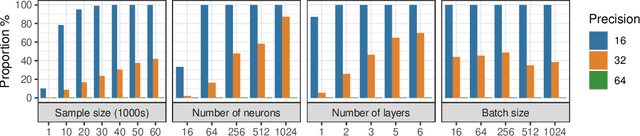

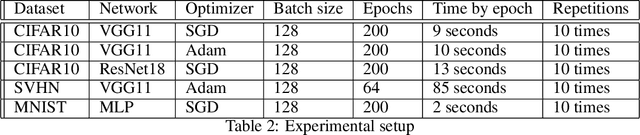

Abstract: In theory, the choice of ReLU'(0) in [0, 1] for a neural network has a negligible influence both on backpropagation and training. Yet, in the real world, the default 32-bit precision combined with the size of deep learning problems makes it a hyperparameter of training methods. We investigate the importance of the value of ReLU'(0) for several precision levels (16, 32, 64 bits), on various networks (fully connected, VGG, ResNet) and datasets (MNIST, CIFAR10, SVHN). We observe considerable variations of backpropagation outputs which occur around half of the time in 32-bit precision. The effect disappears with double precision, while it is systematic at 16 bits. For vanilla SGD training, the choice ReLU'(0) = 0 seems to be the most efficient. We also show evidence that reconditioning approaches such as batch-norm or ADAM tend to buffer the influence of ReLU'(0)'s value. Overall, the message we want to convey is that algorithmic differentiation of nonsmooth problems potentially hides parameters that could be tuned advantageously.
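The following sketch shows, on a single hand-written neuron, how the value assigned to ReLU'(0) enters backpropagation once a pre-activation is exactly zero in floating point. It is an assumed toy example with hypothetical function names, not the paper's experimental setup.

```python
def relu_prime(z, at_zero):
    """Derivative of ReLU, with a user-chosen value in [0, 1] at z == 0."""
    if z > 0:
        return 1.0
    if z < 0:
        return 0.0
    return at_zero


def grad_wrt_weight(w, b, x, at_zero):
    """Backpropagated derivative of relu(w * x + b) with respect to w."""
    z = w * x + b
    return relu_prime(z, at_zero) * x


# Inputs chosen so that the pre-activation w * x + b is exactly 0.0.
w, b, x = 0.5, -1.0, 2.0
for s in (0.0, 0.5, 1.0):
    print(s, grad_wrt_weight(w, b, x, at_zero=s))
```

Away from zero the choice of `at_zero` has no effect; it only matters when the pre-activation is exactly 0, as constructed here.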

Nonsmooth Implicit Differentiation for Machine Learning and Optimization

Jun 08, 2021

Abstract: In view of training increasingly complex learning architectures, we establish a nonsmooth implicit function theorem with an operational calculus. Our result applies to most practical problems (i.e., definable problems) provided that a nonsmooth form of the classical invertibility condition is fulfilled. This approach allows for formal subdifferentiation: for instance, replacing derivatives by Clarke Jacobians in the usual differentiation formulas is fully justified for a wide class of nonsmooth problems. Moreover, this calculus is entirely compatible with algorithmic differentiation (e.g., backpropagation). We provide several applications such as training deep equilibrium networks, training neural nets with conic optimization layers, or hyperparameter-tuning for nonsmooth Lasso-type models. To show the sharpness of our assumptions, we present numerical experiments showcasing the extremely pathological gradient dynamics one can encounter when applying implicit algorithmic differentiation without any hypothesis.
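As a hedged illustration of "replacing derivatives by Clarke Jacobians in the usual differentiation formulas", consider an assumed scalar fixed-point equation x = 0.5·relu(x) + θ, a one-dimensional stand-in for an equilibrium layer. This example and its function names are hypothetical and are not taken from the paper.

```python
def relu(z):
    return z if z > 0 else 0.0


def relu_slope(z):
    """An element of the Clarke subdifferential of ReLU (0 chosen at z == 0)."""
    return 1.0 if z > 0 else 0.0


def solve_fixed_point(theta, iters=100):
    """Iterate x <- 0.5 * relu(x) + theta, a contraction with factor 0.5."""
    x = 0.0
    for _ in range(iters):
        x = 0.5 * relu(x) + theta
    return x


def implicit_derivative(theta):
    """Implicit-function formula with ReLU' replaced by a Clarke element.

    F(x, theta) = x - 0.5 * relu(x) - theta = 0, so formally
    dx/dtheta = 1 / (1 - 0.5 * s) with s in the Clarke subdifferential.
    """
    x = solve_fixed_point(theta)
    s = relu_slope(x)
    return x, 1.0 / (1.0 - 0.5 * s)


print(implicit_derivative(1.5))
print(implicit_derivative(-1.0))
```

Here 1 − 0.5·s stays in [0.5, 1] for every s in [0, 1], so the quantity being inverted is never zero; this plays the role of the invertibility condition in the toy case. The closed-form solution is x = 2θ for θ > 0 and x = θ for θ < 0, which gives slopes 2 and 1.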
