Ivan Sekulic

Efficient Out-of-Scope Detection in Dialogue Systems via Uncertainty-Driven LLM Routing

Jul 02, 2025

Abstract:Out-of-scope (OOS) intent detection is a critical challenge in task-oriented dialogue systems (TODS), as it ensures robustness to unseen and ambiguous queries. In this work, we propose a novel but simple modular framework that combines uncertainty modeling with fine-tuned large language models (LLMs) for efficient and accurate OOS detection. The first step applies uncertainty estimation to the output of an in-scope intent detection classifier, which is currently deployed in a real-world TODS handling tens of thousands of user interactions daily. The second step then leverages an emerging LLM-based approach, where a fine-tuned LLM is triggered to make a final decision on instances with high uncertainty. Unlike prior approaches, our method effectively balances computational efficiency and performance, combining traditional approaches with LLMs and yielding state-of-the-art results on key OOS detection benchmarks, including real-world OOS data acquired from a deployed TODS.
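The two-step routing described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not say which uncertainty measure is used, so normalized entropy, the `threshold` value, the `route` function, and the `llm_decide` callable (a stand-in for the fine-tuned LLM) are all assumptions made for illustration.

```python
import math
from typing import Callable, Dict

OOS_LABEL = "out_of_scope"  # hypothetical label name


def normalized_entropy(probs: Dict[str, float]) -> float:
    """Shannon entropy of the intent distribution, scaled to [0, 1]."""
    if len(probs) < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs.values() if p > 0)
    return entropy / math.log(len(probs))


def route(
    utterance: str,
    probs: Dict[str, float],
    llm_decide: Callable[[str], str],
    threshold: float = 0.5,  # assumed cut-off, not taken from the paper
) -> str:
    """Keep the classifier's intent when it is confident; otherwise defer to the LLM."""
    if normalized_entropy(probs) <= threshold:
        # Low uncertainty: trust the in-scope classifier and skip the LLM call.
        return max(probs, key=probs.get)
    # High uncertainty: the (stubbed) LLM makes the final decision.
    return llm_decide(utterance)


def stub_llm(utterance: str) -> str:
    """Placeholder for a fine-tuned LLM; always answers out-of-scope."""
    return OOS_LABEL


confident = {"book_flight": 0.98, "cancel": 0.01, "refund": 0.01}
uncertain = {"book_flight": 1 / 3, "cancel": 1 / 3, "refund": 1 / 3}
confident_result = route("book me a flight", confident, stub_llm)
uncertain_result = route("what's the weather on Mars", uncertain, stub_llm)
```

In this sketch the LLM is only invoked for the uncertain input, which is where the efficiency gain claimed in the abstract would come from.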

Review and experimental benchmarking of machine learning algorithms for efficient optimization of cold atom experiments

Dec 20, 2023

Abstract:The generation of cold atom clouds is a complex process which involves the optimization of noisy data in high dimensional parameter spaces. Optimization can be challenging both in and especially outside of the lab due to lack of time, expertise, or access for lengthy manual optimization. In recent years, it was demonstrated that machine learning offers a solution since it can optimize high dimensional problems quickly, without knowledge of the experiment itself. In this paper we present results showing the benchmarking of nine different optimization techniques and implementations, alongside their ability to optimize a Rubidium (Rb) cold atom experiment. The investigations are performed on a 3D $^{87}$Rb molasses with 10 and 18 adjustable parameters, respectively, where the atom number obtained by absorption imaging was chosen as the test problem. We further compare the best performing optimizers under different effective noise conditions by reducing the Signal-to-Noise ratio of the images via adapting the atomic vapor pressure in the 2D+ MOT and the detection laser frequency stability.

GRM: Generative Relevance Modeling Using Relevance-Aware Sample Estimation for Document Retrieval

Jun 16, 2023

Abstract:Recent studies show that Generative Relevance Feedback (GRF), using text generated by Large Language Models (LLMs), can enhance the effectiveness of query expansion. However, LLMs can generate irrelevant information that harms retrieval effectiveness. To address this, we propose Generative Relevance Modeling (GRM) that uses Relevance-Aware Sample Estimation (RASE) for more accurate weighting of expansion terms. Specifically, we identify similar real documents for each generated document and use a neural re-ranker to estimate their relevance. Experiments on three standard document ranking benchmarks show that GRM improves MAP by 6-9% and R@1k by 2-4%, surpassing previous methods.
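As a rough illustration of relevance-aware weighting of expansion terms, the sketch below weights each term by the estimated relevance of the generated documents it appears in. This is an assumption-laden simplification, not the GRM or RASE method itself: the function name `expansion_term_weights`, the length-normalized term frequency, and the idea that each generated document already carries a single relevance score (for example, aggregated from re-ranker scores of its similar real documents) are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def expansion_term_weights(
    generated_docs: Sequence[List[str]],
    relevance: Sequence[float],
    top_k: int = 10,
) -> Dict[str, float]:
    """Weight terms by relevance-scaled, length-normalized frequency."""
    if len(generated_docs) != len(relevance):
        raise ValueError("need one relevance score per generated document")

    weights: Counter = Counter()
    for tokens, score in zip(generated_docs, relevance):
        if not tokens:
            continue
        for term, count in Counter(tokens).items():
            # Terms from documents judged more relevant contribute more.
            weights[term] += score * count / len(tokens)

    top = weights.most_common(top_k)
    kept_total = sum(weight for _, weight in top)
    if kept_total == 0:
        return {}
    # Normalize the kept terms so their weights sum to 1.
    return {term: weight / kept_total for term, weight in top}


docs = [["solar", "panel"], ["solar", "cat"]]
scores = [0.9, 0.1]  # assumed relevance estimates, one per generated document
term_weights = expansion_term_weights(docs, scores)
```

Here the off-topic term "cat" receives a small weight because it only occurs in the document with the low relevance estimate, which is the kind of down-weighting of irrelevant generated text the abstract motivates.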

Integrating Subgraph-aware Relation and Direction Reasoning for Question Answering

Apr 01, 2021

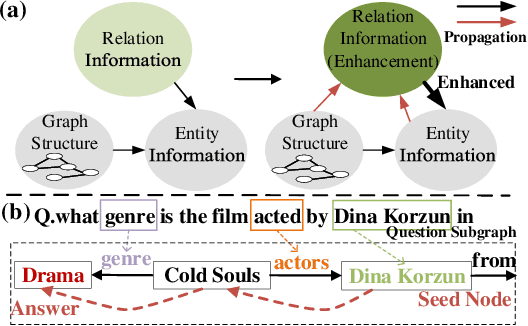

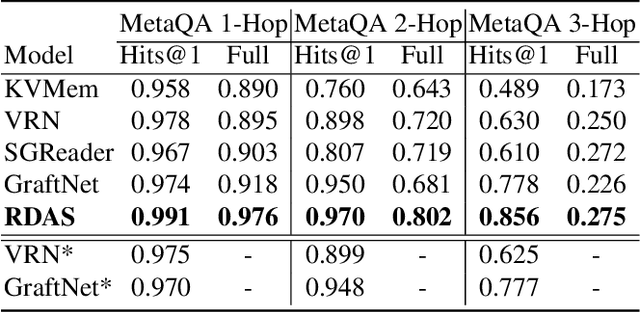

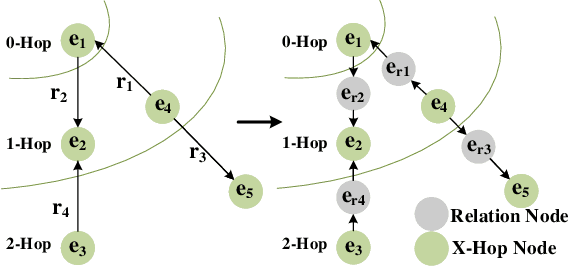

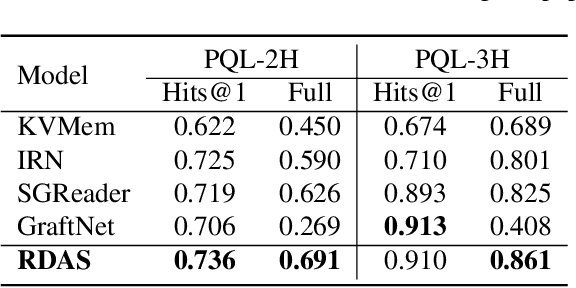

Abstract:Question Answering (QA) models over Knowledge Bases (KBs) are capable of providing more precise answers by utilizing relation information among entities. Although effective, most of these models solely rely on fixed relation representations to obtain answers for different question-related KB subgraphs. Hence, the rich structured information of these subgraphs may be overlooked by the relation representation vectors. Meanwhile, the direction information of reasoning, which has been proven effective for the answer prediction on graphs, has not been fully explored in existing work. To address these challenges, we propose a novel neural model, Relation-updated Direction-guided Answer Selector (RDAS), which converts relations in each subgraph to additional nodes to learn structure information. Additionally, we utilize direction information to enhance the reasoning ability. Experimental results show that our model yields substantial improvements on two widely used datasets.
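The idea of converting relations into additional nodes can be sketched as a simple graph transformation. This is only an illustration under stated assumptions, not the RDAS model: the function `relations_to_nodes`, the `rel::` prefix, and the choice of one node per relation type within a subgraph are hypothetical, and no learning or answer selection is shown.

```python
from typing import Dict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


def relations_to_nodes(triples: Iterable[Triple]) -> Dict[str, Set[str]]:
    """Turn each relation into a node: head -> relation node -> tail."""
    adjacency: Dict[str, Set[str]] = {}
    for head, relation, tail in triples:
        rel_node = f"rel::{relation}"  # prefix avoids clashes with entity names
        # Directed edges keep the original reasoning direction.
        adjacency.setdefault(head, set()).add(rel_node)
        adjacency.setdefault(rel_node, set()).add(tail)
        adjacency.setdefault(tail, set())
    return adjacency


subgraph = [
    ("Paris", "capital_of", "France"),
    ("Berlin", "capital_of", "Germany"),
]
graph = relations_to_nodes(subgraph)
```

Because the relation is now a node with its own neighborhood, a graph model could in principle update its representation from the surrounding subgraph instead of relying on a fixed relation vector.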
