Husheng Li

Space-Time-Frequency Synthetic Integrated Sensing and Communication Networks

Nov 19, 2025

Abstract: Integrated sensing and communication (ISAC) promises high spectral and power efficiencies by sharing waveforms, spectrum, and hardware across sensing and data links. Yet commercial cellular networks struggle to deliver fine angular, range, and Doppler resolution due to limited aperture, bandwidth, and coherent observation time. In this paper, we propose a space-time-frequency synthetic ISAC architecture that fuses observations from distributed transmitters and receivers across time intervals and frequency bands. We develop a unified signal model for multistatic and monostatic configurations and derive Cramér-Rao lower bounds (CRLBs) for position and velocity estimation. The analysis shows how spatial diversity, multiband operation, and observation scheduling impact the Fisher information. We also compare the estimation performance of a concentrated maximum likelihood estimator (MLE) and a two-stage information fusion (TSIF) method that first estimates per-path delay and radial speed and then fuses them by solving a weighted nonlinear least-squares problem via the Gauss-Newton algorithm. Numerical results show that the MLE approaches the CRLB in the high signal-to-noise ratio (SNR) regime, while the two-stage method remains competitive at moderate to high SNR but degrades at low SNR. A central finding is that fully synthesized network processing is essential, since estimation by individual base stations (BSs) followed by fusion is consistently inferior and unstable at low SNR. This framework offers practical guidance for upgrading existing communication infrastructure into dense sensing networks.
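The fusion step of the two-stage method can be illustrated with a heavily simplified sketch. It assumes a 2D monostatic geometry in which each base station has already converted its delay estimate into a range, and it fuses only position (not velocity). The function name `gauss_newton_position`, the anchor layout, and the weights are hypothetical choices for illustration, not the paper's implementation.

```python
import math

def gauss_newton_position(anchors, ranges, weights, p0, iters=20, tol=1e-12):
    """Weighted nonlinear least squares: minimize sum_i w_i * (z_i - ||p - b_i||)^2.

    anchors: list of (x, y) base-station positions (assumed known)
    ranges:  per-station range estimates z_i
    weights: per-station weights w_i, e.g. inverse range variances
    p0:      initial position guess
    """
    x, y = p0
    for _ in range(iters):
        # Accumulate the 2x2 normal equations (J^T W J) d = J^T W r.
        a11 = a12 = a22 = g1 = g2 = 0.0
        for (bx, by), z, w in zip(anchors, ranges, weights):
            dx, dy = x - bx, y - by
            dist = math.hypot(dx, dy)
            if dist == 0.0:
                continue  # Jacobian is undefined exactly at an anchor
            jx, jy = dx / dist, dy / dist  # gradient of the predicted range
            res = z - dist                 # measurement residual
            a11 += w * jx * jx
            a12 += w * jx * jy
            a22 += w * jy * jy
            g1 += w * jx * res
            g2 += w * jy * res
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-15:
            break  # degenerate geometry; stop rather than divide by ~0
        step_x = (a22 * g1 - a12 * g2) / det
        step_y = (a11 * g2 - a12 * g1) / det
        x += step_x
        y += step_y
        if math.hypot(step_x, step_y) < tol:
            break
    return x, y

# Illustrative usage with noiseless ranges from four stations.
stations = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0)]
target = (30.0, 40.0)
measured = [math.hypot(target[0] - bx, target[1] - by) for bx, by in stations]
estimate = gauss_newton_position(stations, measured, [1.0] * 4, (50.0, 50.0))
```

With noisy ranges, choosing each weight as the inverse of the corresponding range variance makes less reliable stations count for less, which is the usual motivation for the weighted form.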

Continuous-Time Value Iteration for Multi-Agent Reinforcement Learning

Sep 11, 2025

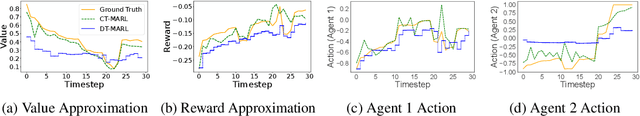

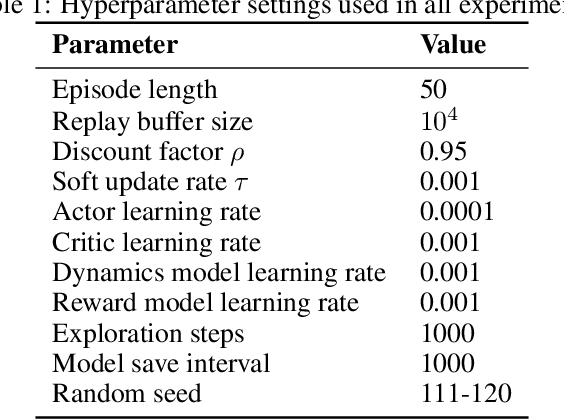

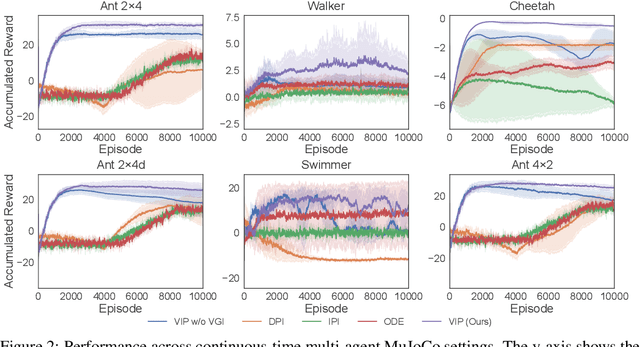

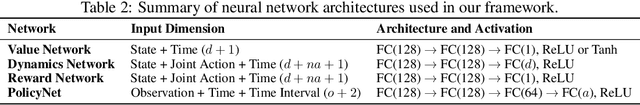

Abstract: Existing reinforcement learning (RL) methods struggle with complex dynamical systems that demand interactions at high frequencies or irregular time intervals. Continuous-time RL (CTRL) has emerged as a promising alternative by replacing discrete-time Bellman recursion with differential value functions defined as viscosity solutions of the Hamilton-Jacobi-Bellman (HJB) equation. While CTRL has shown promise, its applications have been largely limited to the single-agent domain. This limitation stems from two key challenges: (i) conventional solution methods for HJB equations suffer from the curse of dimensionality (CoD), making them intractable in high-dimensional systems; and (ii) even with HJB-based learning approaches, accurately approximating centralized value functions in multi-agent settings remains difficult, which in turn destabilizes policy training. In this paper, we propose a CT-MARL framework that uses physics-informed neural networks (PINNs) to approximate HJB-based value functions at scale. To ensure the value is consistent with its differential structure, we align value learning with value-gradient learning by introducing a Value Gradient Iteration (VGI) module that iteratively refines value gradients along trajectories. This improves gradient fidelity, in turn yielding more accurate values and stronger policy learning. We evaluate our method using continuous-time variants of standard benchmarks, including multi-agent particle environment (MPE) and multi-agent MuJoCo. Our results demonstrate that our approach consistently outperforms existing continuous-time RL baselines and scales to complex multi-agent dynamics.

Hypernetworks for Model-Heterogeneous Personalized Federated Learning

Jul 30, 2025

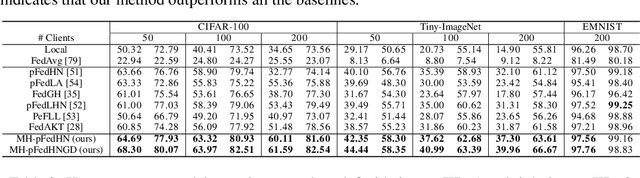

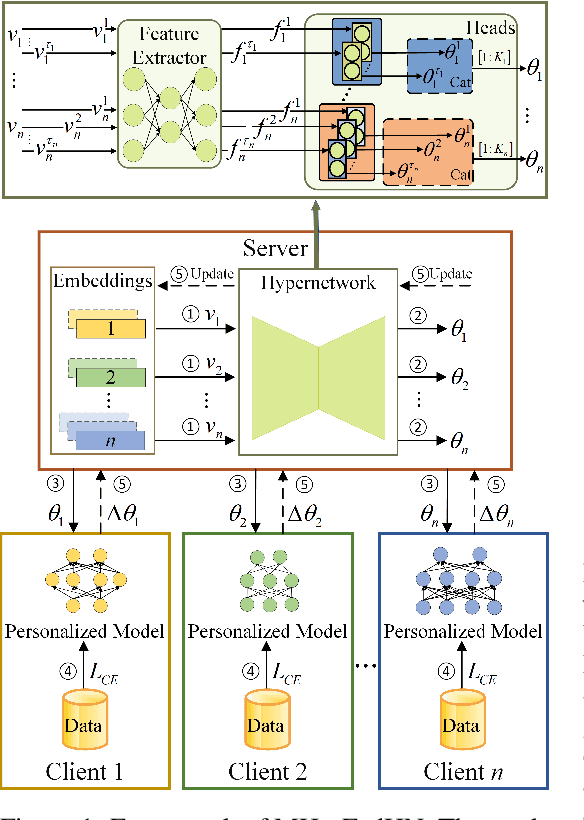

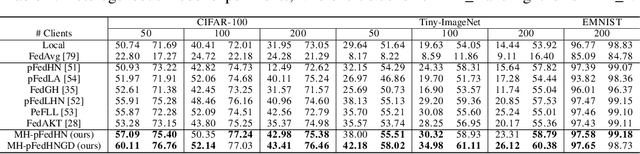

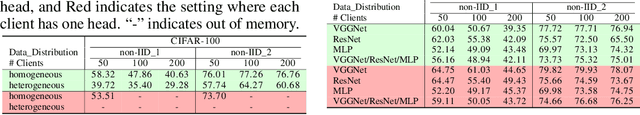

Abstract: Recent advances in personalized federated learning have focused on addressing client model heterogeneity. However, most existing methods still require external data, rely on model decoupling, or adopt partial learning strategies, which can limit their practicality and scalability. In this paper, we revisit hypernetwork-based methods and leverage their strong generalization capabilities to design a simple yet effective framework for heterogeneous personalized federated learning. Specifically, we propose MH-pFedHN, which leverages a server-side hypernetwork that takes client-specific embedding vectors as input and outputs personalized parameters tailored to each client's heterogeneous model. To promote knowledge sharing and reduce computation, we introduce a multi-head structure within the hypernetwork, allowing clients with similar model sizes to share heads. We further propose MH-pFedHNGD, which integrates an optional lightweight global model to improve generalization. Our framework does not rely on external datasets and does not require disclosure of client model architectures, thereby offering enhanced privacy and flexibility. Extensive experiments on multiple benchmarks and model settings demonstrate that our approach achieves competitive accuracy and strong generalization, and serves as a robust baseline for future research in model-heterogeneous personalized federated learning.

Trellis Waveform Shaping for Sidelobe Reduction in Integrated Sensing and Communications: A Duality with PAPR Mitigation

May 14, 2025

Abstract: A key challenge in integrated sensing and communications (ISAC) is the synthesis of waveforms that can modulate communication messages and achieve good sensing performance simultaneously. In ISAC systems, standard communication waveforms can be adapted for sensing, as the sensing receiver (co-located with the transmitter) has knowledge of the communication message and consequently the waveform. However, the randomness of communications may result in waveforms that have high sidelobes masking weak targets. Thus, it is desirable to refine communication waveforms to improve the sensing performance by reducing the integrated sidelobe levels (ISL). This is similar to peak-to-average power ratio (PAPR) mitigation in orthogonal frequency division multiplexing (OFDM), in which the OFDM-modulated waveform needs to be refined to reduce the PAPR. In this paper, inspired by PAPR reduction algorithms in OFDM, we employ trellis shaping in OFDM-based ISAC systems to refine waveforms for specific sensing metrics using convolutional codes and Viterbi decoding. In such a scheme, the communication data is encoded and then mapped to the signaling constellation in different subcarriers, such that the time-domain sidelobes are reduced. An interesting observation is that sidelobe reduction in OFDM-based ISAC is dual to PAPR reduction in OFDM, thereby sharing a similar signaling structure. Numerical simulations and hardware experiments on a USRP software-defined radio are carried out to demonstrate the effectiveness of the proposed trellis shaping approach.
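The two metrics named above can be computed for a single OFDM symbol as in the following sketch. It assumes one common convention for ISL (energy of the aperiodic autocorrelation at positive lags, normalized by the squared zero-lag peak); the paper may use a different normalization. The helper names and the QPSK example are illustrative, and no trellis shaping is performed here.

```python
import cmath
import random

def idft(symbols):
    """Map frequency-domain subcarrier symbols to time-domain samples."""
    n = len(symbols)
    return [
        sum(s * cmath.exp(2j * cmath.pi * k * t / n) for k, s in enumerate(symbols)) / n
        for t in range(n)
    ]

def papr(x):
    """Peak-to-average power ratio of a sample sequence (linear scale)."""
    powers = [abs(v) ** 2 for v in x]
    return max(powers) / (sum(powers) / len(powers))

def aperiodic_autocorr(x, lag):
    """Aperiodic autocorrelation r[lag] = sum_t x[t + lag] * conj(x[t])."""
    return sum(x[t + lag] * x[t].conjugate() for t in range(len(x) - lag))

def isl(x):
    """Sidelobe energy at lags 1..N-1 divided by the squared mainlobe peak."""
    peak = abs(aperiodic_autocorr(x, 0)) ** 2
    side = sum(abs(aperiodic_autocorr(x, k)) ** 2 for k in range(1, len(x)))
    return side / peak

# Illustrative usage: one random QPSK-loaded OFDM symbol with 16 subcarriers.
random.seed(0)
qpsk = [complex(random.choice((-1, 1)), random.choice((-1, 1))) for _ in range(16)]
waveform = idft(qpsk)
metrics = {"papr": papr(waveform), "isl": isl(waveform)}
```

A shaping scheme would search over admissible symbol sequences for one that lowers `isl(waveform)`, in the same way PAPR-oriented shaping lowers `papr(waveform)`.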

Spreading over OFDM for Integrated Sensing and Communications (ISAC) Ranging: Multi-user Interference Mitigation

May 04, 2025

Abstract: In the context of communication-centric integrated sensing and communication (ISAC), the orthogonal frequency division multiplexing (OFDM) waveform was proven to be optimal in minimizing ranging sidelobes when random signaling is used. A typical assumption in OFDM-based ranging is that the maximum target delay is less than the cyclic prefix (CP) length, which is equivalent to performing a periodic correlation between the signal reflected from the target and the transmitted signal. In the multi-user case, such as in Orthogonal Frequency Division Multiple Access (OFDMA), users are assigned disjoint subsets of subcarriers, which eliminates mutual interference between the communication channels of the different users. However, ranging involves an aperiodic correlation operation for target ranges with delays greater than the CP length. Aperiodic correlation between signals from disjoint frequency bands will not be zero, resulting in mutual interference between different user bands. We refer to this as inter-band (IB) cross-correlation interference. In this work, we analytically characterize IB interference and quantify its impact on the integrated sidelobe levels (ISL). We introduce an orthogonal spreading layer on top of OFDM that can reduce IB interference, resulting in ISL significantly lower than for OFDM without spreading in the multi-user setup. We validate our claims through simulations and through an upper bound on the IB energy, which we show can be minimized using our proposed spreading. However, for orthogonal spreading to be effective, a price must be paid in terms of spectral utilization, which is yet another manifestation of the trade-off between sensing accuracy and data communication capacity.
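The contrast between periodic and aperiodic correlation can be checked numerically with a toy sketch. It assumes a single OFDM symbol without a cyclic prefix and two users on disjoint subcarriers; the helper names and the four-subcarrier allocation are hypothetical, and the spreading layer itself is not modeled.

```python
import cmath

def ofdm_time_signal(n, allocation):
    """Time-domain samples for an n-subcarrier symbol.

    allocation maps subcarrier index -> complex symbol; other subcarriers are zero.
    """
    return [
        sum(s * cmath.exp(2j * cmath.pi * k * t / n) for k, s in allocation.items()) / n
        for t in range(n)
    ]

def periodic_xcorr(a, b, lag):
    """Circular cross-correlation: indices wrap around modulo the length."""
    n = len(a)
    return sum(a[(t + lag) % n] * b[t].conjugate() for t in range(n))

def aperiodic_xcorr(a, b, lag):
    """Linear cross-correlation: only the overlapping samples contribute."""
    return sum(a[t + lag] * b[t].conjugate() for t in range(len(a) - lag))

# Two users on disjoint subcarriers of a 4-subcarrier symbol.
user_a = ofdm_time_signal(4, {0: 1})
user_b = ofdm_time_signal(4, {1: 1})

# Periodic correlation vanishes at every lag because the spectra do not overlap.
periodic_peak = max(abs(periodic_xcorr(user_a, user_b, lag)) for lag in range(4))

# Aperiodic correlation at nonzero lags does not vanish: inter-band leakage.
aperiodic_peak = max(abs(aperiodic_xcorr(user_a, user_b, lag)) for lag in range(1, 4))
```

In this example `periodic_peak` is zero up to floating-point error, while `aperiodic_peak` is not, which is the inter-band effect the abstract describes for delays beyond the CP length.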

OTFS-ISAC System with Sub-Nyquist ADC Sampling Rate

Feb 07, 2025

Abstract: Integrated sensing and communication (ISAC) has emerged as a pivotal technology for next-generation wireless communication and radar systems, enabling high-resolution sensing and high-throughput communication with shared spectrum and hardware. However, achieving fine radar resolution often requires high-rate analog-to-digital converters (ADCs) and substantial storage, making it both expensive and impractical for many commercial applications. To address these challenges, this paper proposes an orthogonal time frequency space (OTFS)-based ISAC architecture that operates at reduced ADC sampling rates, yet preserves accurate radar estimation and supports simultaneous communication. The proposed architecture introduces pilot symbols directly in the delay-Doppler (DD) domain and leverages the mapping between the DD and time-frequency (TF) domains to keep selected subcarriers active while the others remain inactive, allowing the radar receiver to exploit under-sampling aliasing and recover the original DD signal at much lower sampling rates. To further enhance the radar accuracy, we develop an iterative interference estimation and cancellation algorithm that mitigates data symbol interference. We propose a code-based spreading technique that distributes data across the DD domain to preserve the maximum unambiguous radar sensing range. For communication, we implement a complete transceiver pipeline optimized for reduced-sampling-rate operation, including synchronization, channel estimation, and iterative data detection. Experimental results from a software-defined radio (SDR)-based testbed confirm that our method substantially lowers the required sampling rate without sacrificing radar sensing performance and ensures reliable communication.

DeepSafeMPC: Deep Learning-Based Model Predictive Control for Safe Multi-Agent Reinforcement Learning

Mar 12, 2024

Abstract: Safe multi-agent reinforcement learning (safe MARL) has increasingly gained attention in recent years, emphasizing the need for agents to not only optimize the global return but also adhere to safety requirements through behavioral constraints. Some recent work has integrated control theory with multi-agent reinforcement learning to address the challenge of ensuring safety. However, there have been only very limited applications of Model Predictive Control (MPC) methods in this domain, primarily due to the complex and implicit dynamics characteristic of multi-agent environments. To bridge this gap, we propose a novel method called Deep Learning-Based Model Predictive Control for Safe Multi-Agent Reinforcement Learning (DeepSafeMPC). The key insight of DeepSafeMPC is to leverage a centralized deep learning model to accurately predict environmental dynamics. Our method applies MARL principles to search for optimal solutions. Through the employment of MPC, the actions of agents can be restricted within safe states concurrently. We demonstrate the effectiveness of our approach using the Safe Multi-agent MuJoCo environment, showcasing significant advancements in addressing safety concerns in MARL.

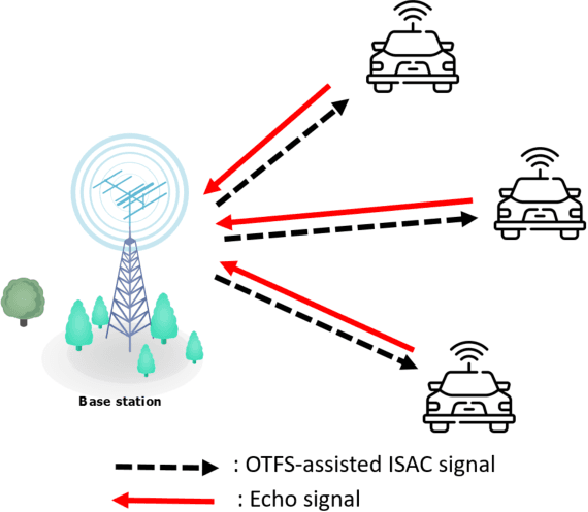

Orthogonal Time Frequency Space for Integrated Sensing and Communication: A Survey

Feb 15, 2024

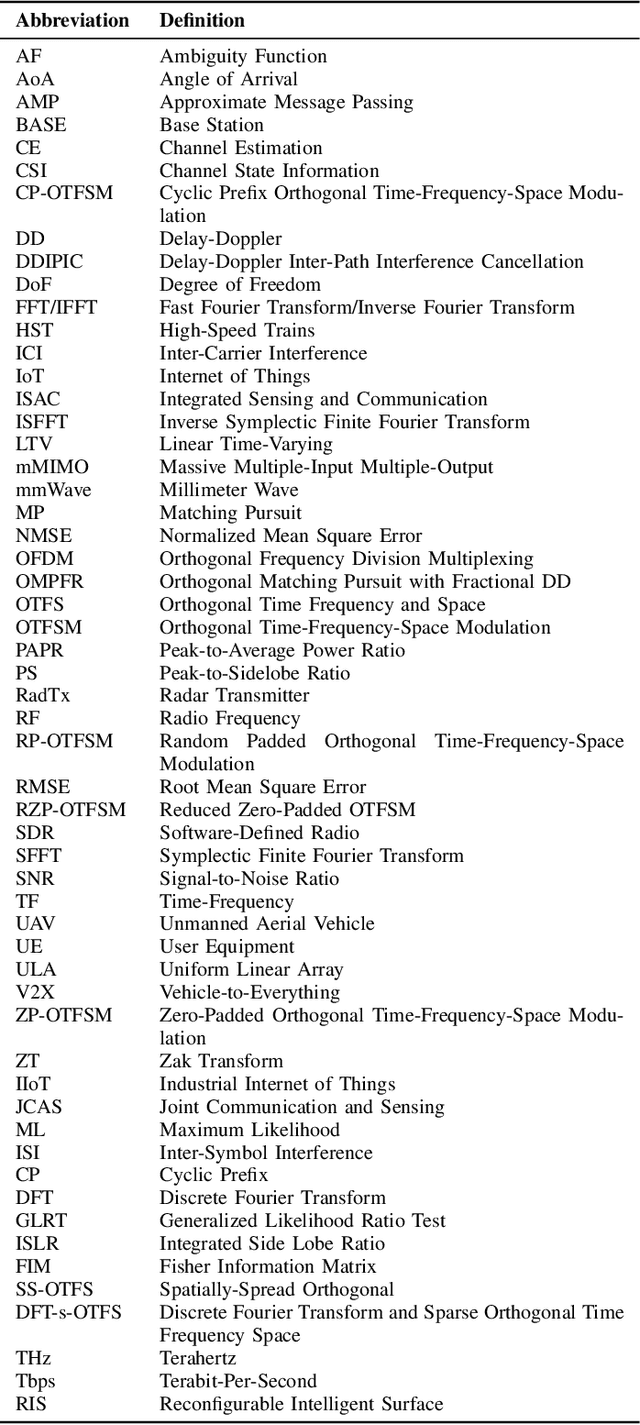

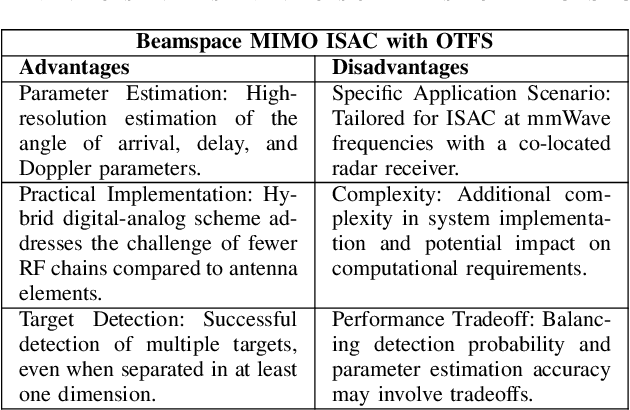

Abstract: Sixth-generation (6G) wireless communication systems, as stated in the European 6G flagship project Hexa-X, are anticipated to feature the integration of intelligence, communication, sensing, positioning, and computation. An important aspect of this integration is integrated sensing and communication (ISAC), in which the same waveform is used for both sensing and communication, to address the challenge of spectrum scarcity. Recently, the orthogonal time frequency space (OTFS) waveform has been proposed to address OFDM's limitations due to the high Doppler spread in some future wireless communication systems. In this paper, we review existing OTFS waveforms for ISAC systems and provide some insights into future research. First, we introduce the basic principles and a system model of OTFS and provide a foundational understanding of this innovative technology's core concepts and architecture. Subsequently, we present an overview of OTFS-based ISAC system frameworks. We provide a comprehensive review of recent research developments and the current state of the art in the field of OTFS-assisted ISAC systems to gain a thorough understanding of the current landscape and advancements. Furthermore, we perform a thorough comparison between OTFS-enabled ISAC operations and traditional OFDM, highlighting the distinctive advantages of OTFS, especially in high Doppler spread scenarios. We then address the primary challenges facing OTFS-based ISAC systems, identifying potential limitations and drawbacks. Finally, we suggest future research directions, aiming to inspire further innovation in the 6G wireless communication landscape.

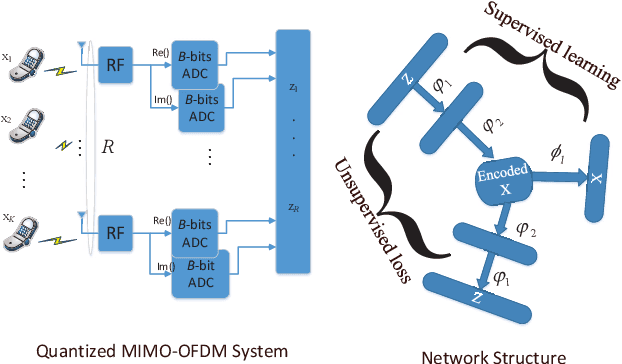

LEMO: Learn to Equalize for MIMO-OFDM Systems with Low-Resolution ADCs

May 14, 2019

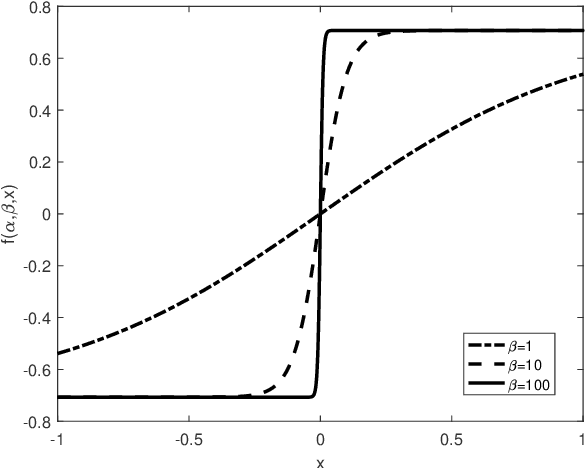

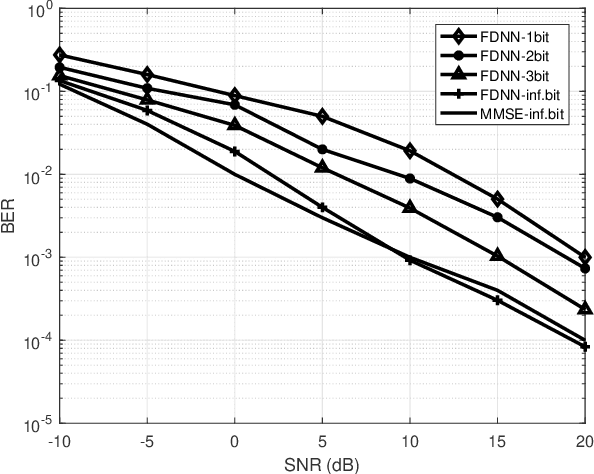

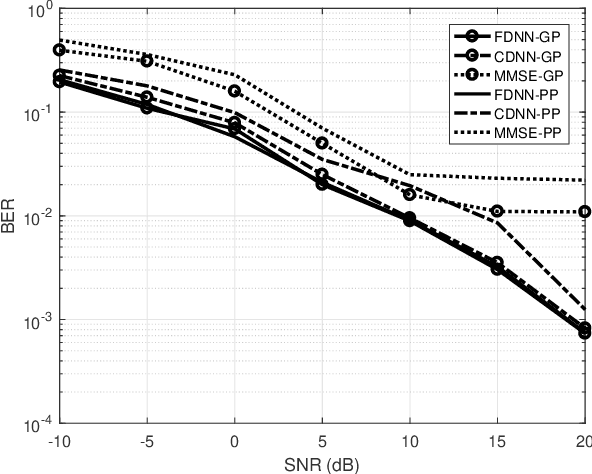

Abstract: This paper develops a new deep-neural-network-optimized equalization framework for massive multiple-input multiple-output orthogonal frequency division multiplexing (MIMO-OFDM) systems that employ low-resolution analog-to-digital converters (ADCs) at the base station (BS). The use of low-resolution ADCs can greatly reduce hardware complexity and circuit power consumption; however, it leaves the BS almost blind to the channel state information, hence causing difficulty in solving the equalization problem. In this paper, we consider a supervised learning architecture, where the goal is to learn a representative function that can predict the targets (constellation points) from the inputs (outputs of the low-resolution ADCs) based on the labeled training data (pilot signals). Specifically, our main contributions are two-fold: 1) First, we design a new activation function, whose outputs are close to the constellation points when the parameters are finally optimized, to help us fully exploit the stochastic gradient descent method for the discrete optimization problem. 2) Second, an unsupervised loss is designed and then added to the optimization objective, aiming to enhance the representation ability (so-called generalization). The experimental results reveal that the proposed equalizer is robust to different channel taps (i.e., Gaussian and Poisson), significantly outperforms the linearized MMSE equalizer, and shows potential for pilot saving.

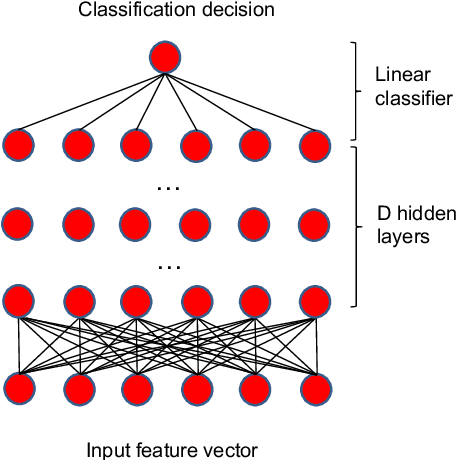

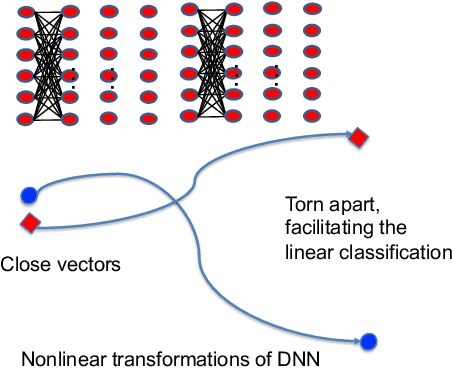

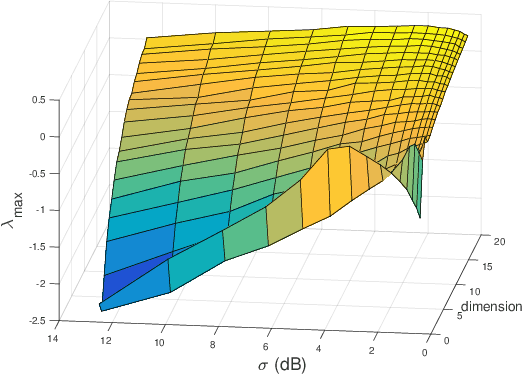

Analysis on the Nonlinear Dynamics of Deep Neural Networks: Topological Entropy and Chaos

Apr 03, 2018

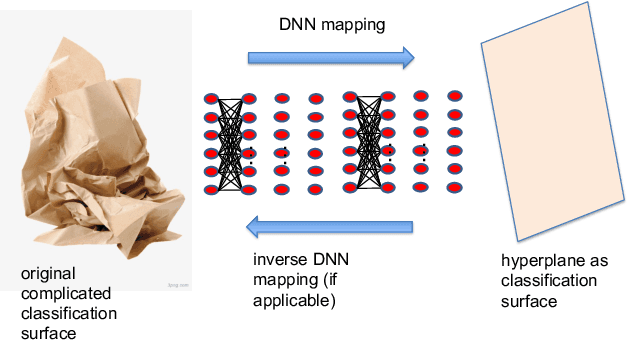

Abstract: The theoretical explanation for deep neural networks (DNNs) is still an open problem. In this paper, a DNN is considered as a discrete-time dynamical system due to its layered structure. The complexity provided by the nonlinearity in the dynamics is analyzed in terms of topological entropy and chaos characterized by Lyapunov exponents. The properties revealed for the dynamics of DNNs are applied to analyze the corresponding capabilities of classification and generalization.
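The idea of treating depth as discrete time can be made concrete with a toy sketch. It assumes a scalar "network" in which every layer applies the same map x -> tanh(w x), and estimates the Lyapunov exponent as the average log-magnitude of the layer derivative along one trajectory. The function name and the parameter values are illustrative only and are not taken from the paper.

```python
import math

def lyapunov_exponent(weight, x0, depth):
    """Average of log|f'(x_l)| over `depth` layers for f(x) = tanh(weight * x).

    weight must be nonzero so that the derivative never vanishes identically.
    """
    x = x0
    total = 0.0
    for _ in range(depth):
        pre = weight * x
        # Derivative of tanh(weight * x) with respect to x at the current state.
        deriv = weight * (1.0 - math.tanh(pre) ** 2)
        total += math.log(abs(deriv))
        x = math.tanh(pre)  # advance one layer
    return total / depth

# Illustrative usage: a contracting layer map yields a negative exponent.
exponent = lyapunov_exponent(0.5, 0.3, 200)
```

A negative value means nearby inputs are drawn together layer by layer, whereas a positive exponent (possible in higher-dimensional layer maps) signals the sensitive dependence on inputs that the abstract associates with chaos.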
