Chengcheng Li

Intrusion Detection System in Smart Home Network Using Bidirectional LSTM and Convolutional Neural Networks Hybrid Model

May 27, 2021

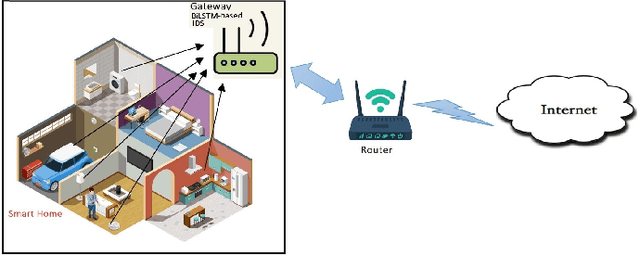

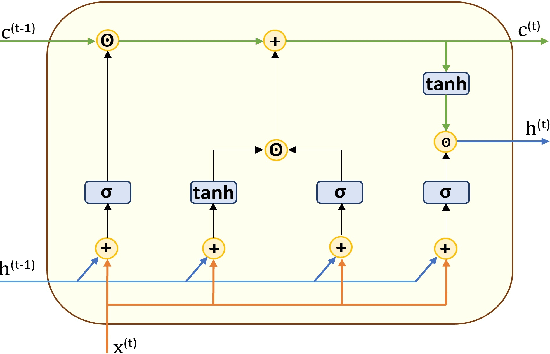

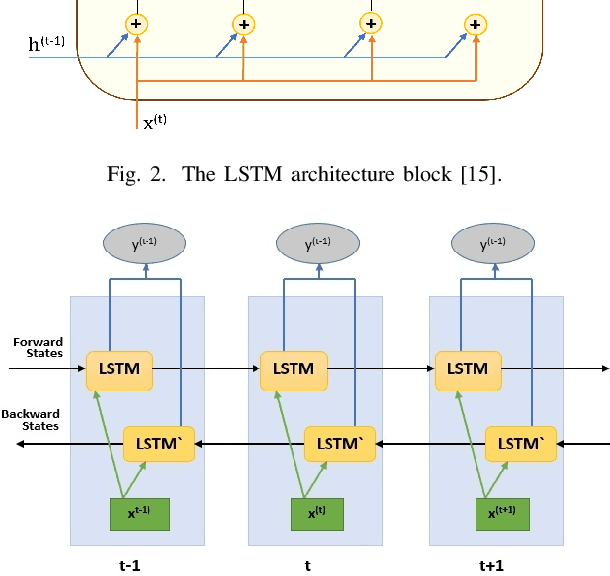

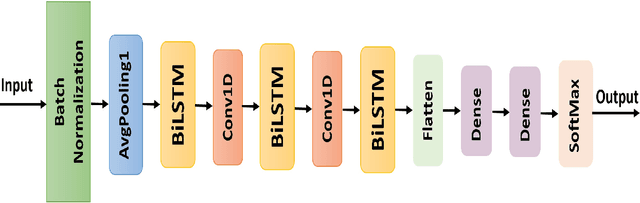

Abstract: The Internet of Things (IoT) has allowed smart homes to improve the quality and comfort of our daily lives. However, these conveniences have introduced security concerns that are growing rapidly. IoT devices, smart home hubs, and gateways all raise security risks. Smart home gateways act as a centralized point of communication between the IoT devices, which can create a backdoor into network data for hackers. One common and effective way to detect such attacks is intrusion detection on the network traffic. In this paper, we propose an intrusion detection system (IDS) that detects anomalies in a smart home network using a bidirectional long short-term memory (BiLSTM) and convolutional neural network (CNN) hybrid model. The recurrent behavior of the BiLSTM allows the intrusion detection model to preserve learned information through time, and the CNN effectively extracts the data features. The proposed model can be applied to any smart home network gateway.

Convolutional Neural Network Pruning with Structural Redundancy Reduction

Apr 08, 2021

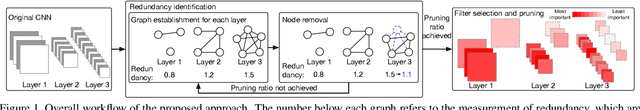

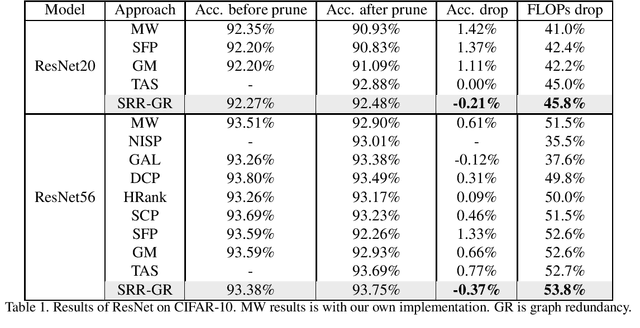

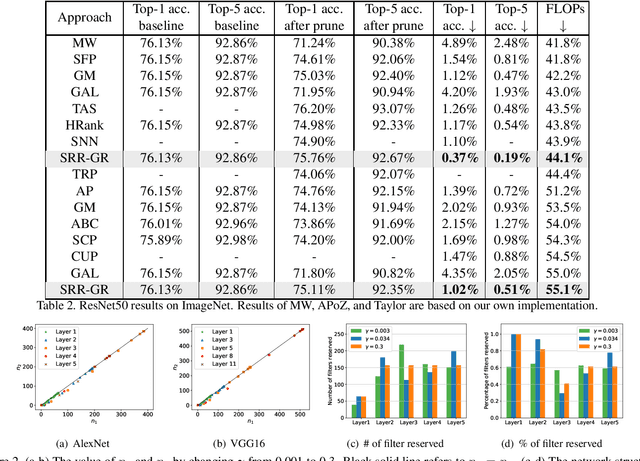

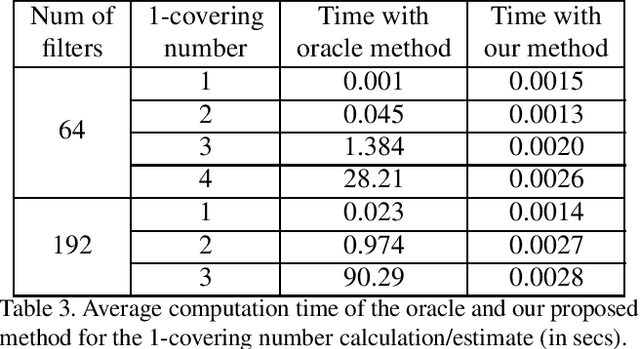

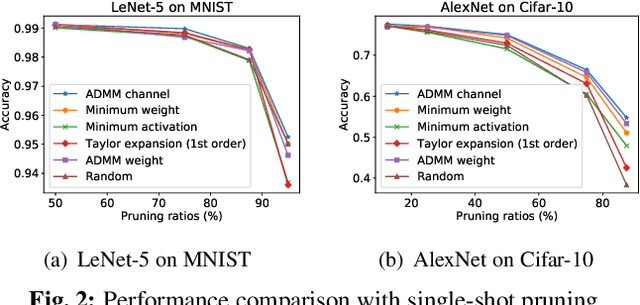

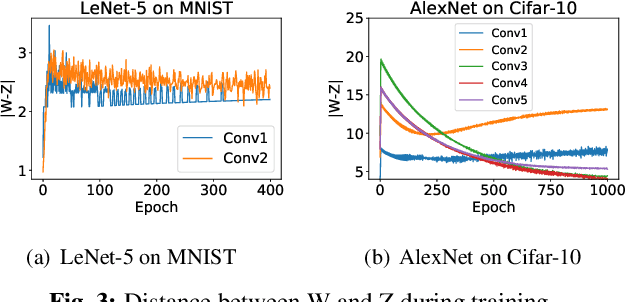

Abstract: Convolutional neural network (CNN) pruning has become one of the most successful network compression approaches in recent years. Existing works on network pruning usually focus on removing the least important filters in the network to achieve compact architectures. In this study, we claim that identifying structural redundancy plays a more essential role than finding unimportant filters, both theoretically and empirically. We first statistically model the network pruning problem from a redundancy reduction perspective and find that pruning in the layer(s) with the most structural redundancy outperforms pruning the least important filters across all layers. Based on this finding, we then propose a network pruning approach that identifies the structural redundancy of a CNN and prunes filters in the selected layer(s) with the most redundancy. Experiments on various benchmark network architectures and datasets show that our proposed approach significantly outperforms the previous state-of-the-art.

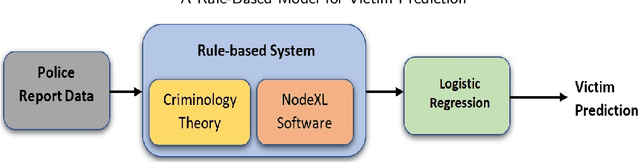

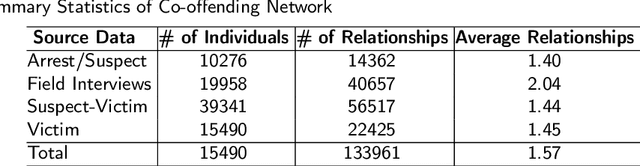

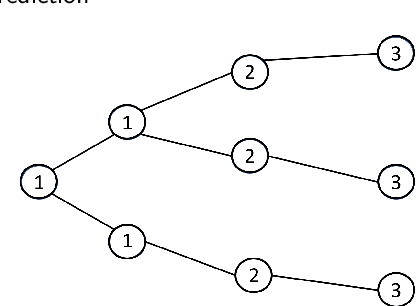

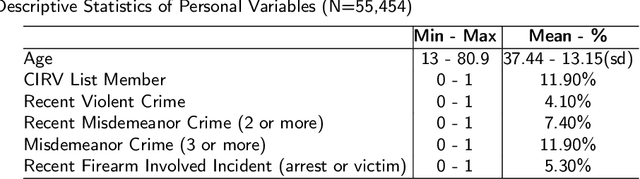

A Rule-Based Model for Victim Prediction

Jan 07, 2020

Abstract: In this paper, we propose a novel automated model, called Vulnerability Index for Population at Risk (VIPAR) scores, to identify rare populations at risk of future shooting victimization. Likewise, the focused deterrence approach identifies vulnerable individuals and offers certain types of treatments (e.g., outreach services) to prevent violence in communities. The proposed rule-based engine model is the first AI-based model for victim prediction. This paper aims to compare the focused deterrence strategy list with the VIPAR score list in terms of their predictive power for future shooting victimization. Drawing on criminological studies, the model uses age, past criminal history, and peer influence as the main predictors of future violence. Social network analysis is employed to measure the influence of peers on the outcome variable. The model also uses logistic regression analysis to verify the variable selections. Our empirical results show that VIPAR scores predict 25.8% of future shooting victims and 32.2% of future shooting suspects, whereas the focused deterrence list predicts 13% of future shooting victims and 9.4% of future shooting suspects. The model outperforms the intelligence list of focused deterrence policies in predicting future fatal and non-fatal shootings. Furthermore, we discuss concerns about the right to the presumption of innocence.

Investigating Channel Pruning through Structural Redundancy Reduction - A Statistical Study

May 19, 2019

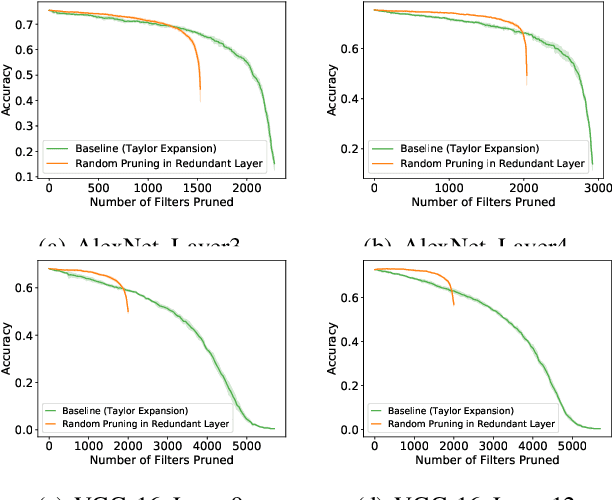

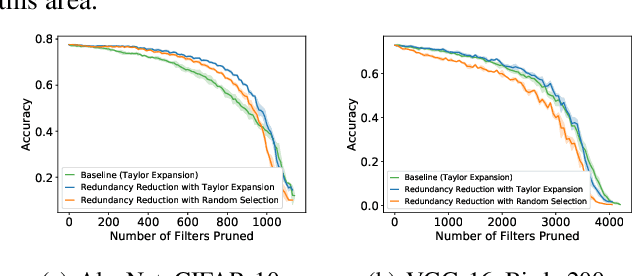

Abstract: Most existing channel pruning methods formulate the pruning task from the perspective of inefficiency reduction: they either iteratively rank and remove the least important filters, or find the set of filters that minimizes some reconstruction error after pruning. In this work, we investigate channel pruning from a new perspective with statistical modeling. We hypothesize that the number of filters at a certain layer reflects the level of 'redundancy' in that layer, and thus formulate the pruning problem from the aspect of redundancy reduction. Based on both theoretical analysis and empirical studies, we make an important discovery: randomly pruning filters from layers of high redundancy outperforms pruning the least important filters across all layers based on the state-of-the-art ranking criterion. These results advance our understanding of pruning and further support the recent findings that the structure of the pruned model plays a key role in network efficiency, as compared to inherited weights.
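The idea in the abstract above can be illustrated with a minimal sketch. It assumes, following the stated hypothesis, that the filter count of a layer serves as a proxy for its redundancy. The layer names, the `random_prune` helper, and the fixed seed are hypothetical and are not taken from the paper.

```python
import random


def pick_redundant_layer(filter_counts):
    """Return the layer name with the most filters (assumed redundancy proxy)."""
    return max(filter_counts, key=filter_counts.get)


def random_prune(filter_counts, n_prune, seed=0):
    """Randomly pick n_prune distinct filter indices from the most redundant layer."""
    layer = pick_redundant_layer(filter_counts)
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    removed = sorted(rng.sample(range(filter_counts[layer]), n_prune))
    return layer, removed


# Hypothetical layer sizes for illustration only.
layer_sizes = {"conv1": 64, "conv2": 128, "conv3": 512}
layer, removed = random_prune(layer_sizes, n_prune=32)
```

The sketch only selects which filters to drop. Removing them from a real network and fine-tuning afterwards is outside its scope.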

Speeding up convolutional networks pruning with coarse ranking

Feb 18, 2019

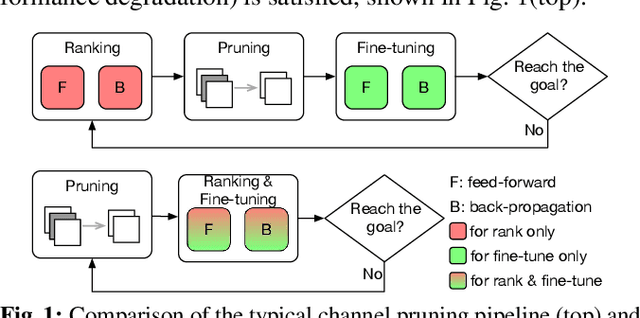

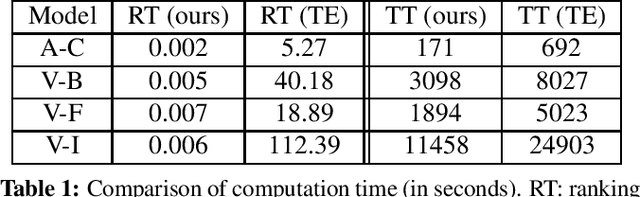

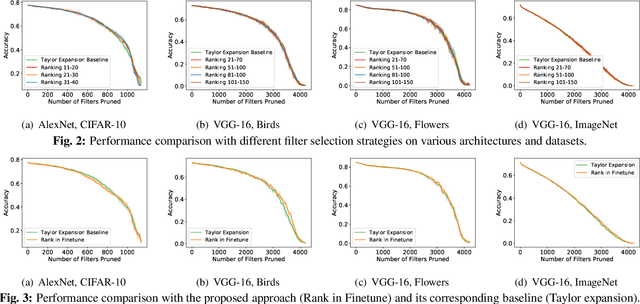

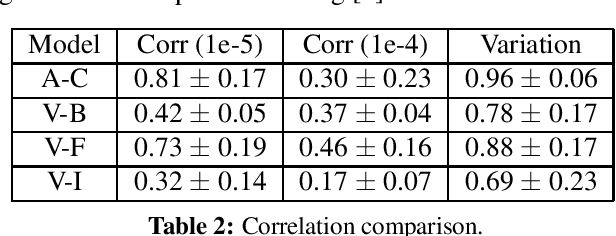

Abstract: Channel-based pruning has achieved significant success in accelerating deep convolutional neural networks. Its pipeline is an iterative three-step procedure: ranking, pruning, and fine-tuning. However, this iterative procedure is computationally expensive. In this study, we present a novel, computationally efficient channel pruning approach based on coarse ranking, which uses the intermediate results of fine-tuning to rank the importance of filters and builds upon state-of-the-art works with data-driven ranking criteria. The goal of this work is not to propose a single improved approach built upon a specific channel pruning method, but to introduce a new general framework that works for a series of channel pruning methods. Various benchmark image datasets (CIFAR-10, ImageNet, Birds-200, and Flowers-102) and network architectures (AlexNet and VGG-16) are used to evaluate the proposed approach for object classification. Experimental results show that the proposed method achieves almost identical performance to the corresponding state-of-the-art works (baseline), while our ranking time is negligibly short. Specifically, the proposed method reduces the total computation time of the whole pruning procedure by 75% for AlexNet on CIFAR-10 and by 54% for VGG-16 on ImageNet. Our approach would significantly facilitate pruning practice, especially on resource-constrained platforms.

Single-shot Channel Pruning Based on Alternating Direction Method of Multipliers

Feb 18, 2019

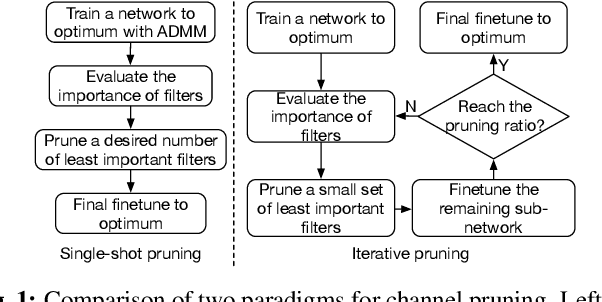

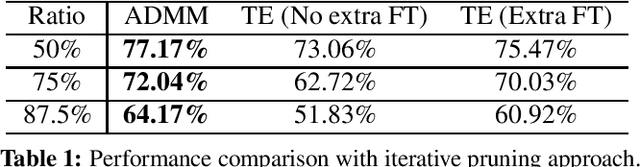

Abstract: Channel pruning has been identified as an effective approach to constructing efficient network structures. Its typical pipeline requires iterative pruning and fine-tuning. In this work, we propose a novel single-shot channel pruning approach based on the alternating direction method of multipliers (ADMM), which eliminates the need for a complex iterative pruning and fine-tuning procedure and achieves a target compression ratio with only one run of pruning and fine-tuning. To the best of our knowledge, this is the first study of single-shot channel pruning. The proposed method introduces filter-level sparsity during training and can achieve competitive performance with a simple heuristic pruning criterion (L1-norm). Extensive evaluations have been conducted with various widely used benchmark architectures and image datasets for object classification. The experimental results on classification accuracy show that the proposed method can outperform state-of-the-art network pruning works under various scenarios.
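The L1-norm criterion mentioned in the abstract above can be sketched as follows. This shows only the ranking step, under the assumption that each filter is given as a nested list of weights. It does not implement the ADMM training that introduces the sparsity, and the helper names and toy weights are hypothetical.

```python
def l1_norm(weights):
    """Sum of absolute values over a scalar or arbitrarily nested list of weights."""
    if isinstance(weights, (int, float)):
        return abs(weights)
    return sum(l1_norm(w) for w in weights)


def filters_to_prune(layer_filters, ratio):
    """Return indices of the lowest-L1-norm filters, covering `ratio` of the layer."""
    n_prune = int(len(layer_filters) * ratio)
    ranked = sorted(range(len(layer_filters)),
                    key=lambda i: l1_norm(layer_filters[i]))
    return sorted(ranked[:n_prune])


# Four toy 2x2 filters (made-up values for illustration).
layer = [
    [[0.9, -0.8], [0.7, 0.6]],    # large weights
    [[0.01, 0.0], [-0.02, 0.0]],  # near zero
    [[0.5, 0.5], [-0.5, 0.5]],    # medium weights
    [[0.0, 0.1], [0.0, -0.1]],    # small weights
]
pruned = filters_to_prune(layer, ratio=0.5)
```

With a 50% ratio, the two filters with the smallest summed absolute weights are selected for removal.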

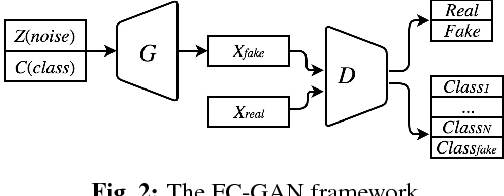

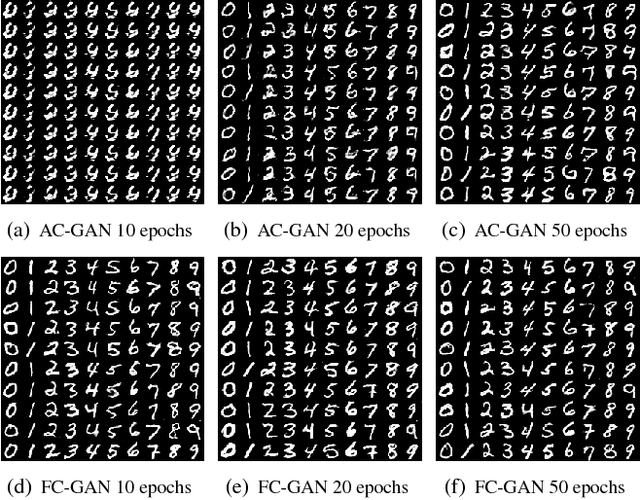

Fast-converging Conditional Generative Adversarial Networks for Image Synthesis

May 05, 2018

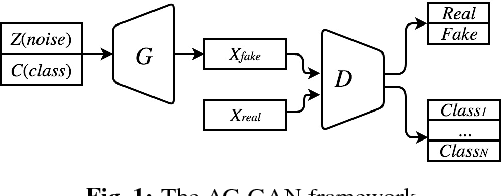

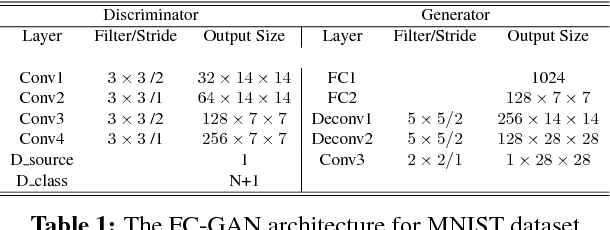

Abstract: Building on the success of generative adversarial networks (GANs), conditional GANs attempt to better direct the data generation process by conditioning on certain additional information. Inspired by the recent AC-GAN, in this paper we propose a fast-converging conditional GAN (FC-GAN). In addition to the real/fake classifier used in vanilla GANs, our discriminator has an advanced auxiliary classifier that distinguishes each real class from an extra 'fake' class. The 'fake' class avoids mixing generated data with real data, which, as in AC-GAN, can potentially confuse the classification of real data, and makes the advanced auxiliary classifier behave as another real/fake classifier. As a result, FC-GAN can accelerate the differentiation of all classes and thus boost the convergence speed. Experimental results on image synthesis demonstrate that our model is competitive in the quality of the generated images while achieving a faster convergence rate.
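The extra 'fake' class described in the abstract above can be illustrated with a small sketch of how auxiliary-classifier targets might be assembled for one discriminator batch. It assumes K real classes indexed 0 to K-1, with the fake class at index K. The function names are hypothetical, and the sketch does not cover the loss functions or the generator update.

```python
def auxiliary_targets(real_labels, n_generated, n_classes):
    """Targets for the auxiliary classifier: real samples keep their class
    index; every generated sample is assigned the extra class n_classes."""
    for y in real_labels:
        if not 0 <= y < n_classes:
            raise ValueError(f"real label {y} outside 0..{n_classes - 1}")
    fake_class = n_classes  # assumed index of the extra 'fake' class
    return list(real_labels) + [fake_class] * n_generated


def one_hot(index, size):
    """One-hot vector of length `size` with a 1 at position `index`."""
    return [1 if i == index else 0 for i in range(size)]


# Three real samples from a 3-class problem plus two generated samples.
targets = auxiliary_targets([0, 2, 1], n_generated=2, n_classes=3)
encoded = [one_hot(t, 3 + 1) for t in targets]
```

The classifier output therefore has K + 1 entries, so generated samples never share a target with any real class.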

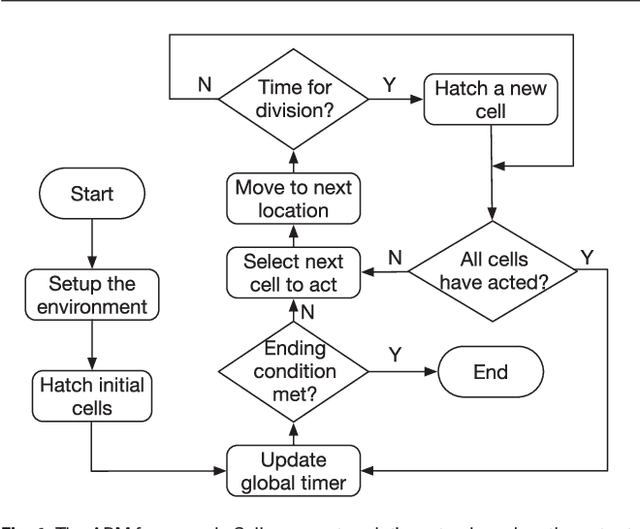

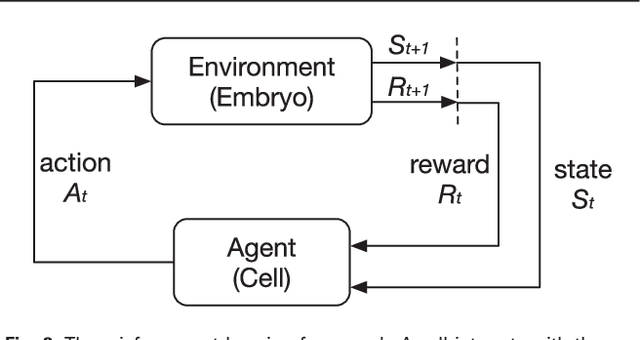

Deep Reinforcement Learning of Cell Movement in the Early Stage of C. elegans Embryogenesis

Mar 02, 2018

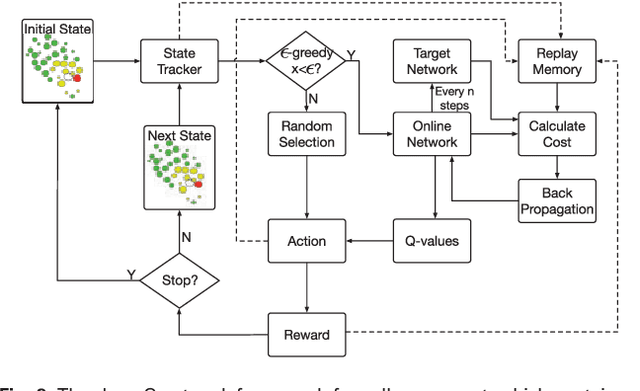

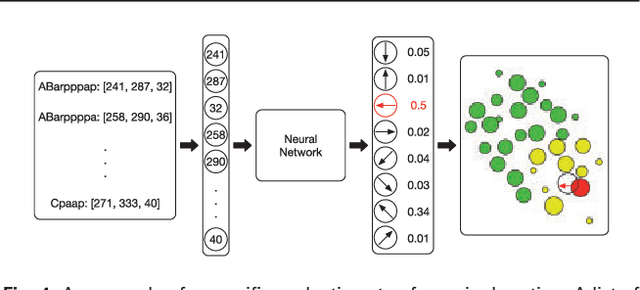

Abstract: Cell movement in the early phase of C. elegans development is regulated by a highly complex process in which a set of rules and connections are formulated at distinct scales. Previous efforts have shown that agent-based, multi-scale modeling systems can integrate physical and biological rules and provide new avenues to study developmental systems. However, applying these systems to model cell movement is still challenging and requires a comprehensive understanding of regulation networks at the right scales. Recent developments in deep learning and reinforcement learning provide an unprecedented opportunity to explore cell movement using 3D time-lapse images. We present a deep reinforcement learning approach within an agent-based modeling (ABM) system to characterize cell movement in C. elegans embryogenesis. Our modeling system captures the complexity of cell movement patterns in the embryo and overcomes the local optimization problem encountered by traditional rule-based ABM that uses greedy algorithms. We tested our model with two real developmental processes: the anterior movement of the Cpaaa cell via intercalation and the rearrangement of the left-right asymmetry. In the first case, model results showed that Cpaaa's intercalation is an active directional cell movement caused by continuous effects from a longer distance, as opposed to a passive movement caused by neighbor cell movements. This is because the learning-based simulation found that a passive movement model could not lead Cpaaa to the predefined destination. In the second case, a leader-follower mechanism explained the collective cell movement pattern well. These results show that introducing deep reinforcement learning into ABM can test regulatory mechanisms by exploring cell migration paths from a reverse engineering perspective. This model opens new doors to explore large datasets generated by live imaging.

* We revised the manuscript to make it easier to follow. Please note that the abstract shown on this page differs slightly from the one in the manuscript because of the 1920-character limit on arxiv.org.
