Hengxu Yan

AnyDexGrasp: General Dexterous Grasping for Different Hands with Human-level Learning Efficiency

Feb 23, 2025

Abstract: We introduce an efficient approach for learning dexterous grasping with minimal data, advancing robotic manipulation capabilities across different robotic hands. Unlike traditional methods that require millions of grasp labels for each robotic hand, our method achieves high performance with human-level learning efficiency: only hundreds of grasp attempts on 40 training objects. The approach separates the grasping process into two stages: first, a universal model maps scene geometry to intermediate contact-centric grasp representations, independent of specific robotic hands. Next, a unique grasp decision model is trained for each robotic hand through real-world trial and error, translating these representations into final grasp poses. Our results show a grasp success rate of 75-95% across three different robotic hands in real-world cluttered environments with over 150 novel objects, improving to 80-98% with increased training objects. This adaptable method demonstrates promising applications for humanoid robots, prosthetics, and other domains requiring robust, versatile robotic manipulation.

Dexterous Manipulation Based on Prior Dexterous Grasp Pose Knowledge

Dec 20, 2024

Abstract: Dexterous manipulation has received considerable attention in recent research. Existing studies have predominantly concentrated on reinforcement learning methods to address the substantial degrees of freedom in hand movements. Nonetheless, these methods typically suffer from low efficiency and accuracy. In this work, we introduce a novel reinforcement learning approach that leverages prior dexterous grasp pose knowledge to enhance both efficiency and accuracy. Unlike previous work, which always drives the robotic hand to a fixed dexterous grasp pose, we decouple the manipulation process into two distinct phases: first, we generate a dexterous grasp pose targeting the functional part of the object; then, we employ reinforcement learning to comprehensively explore the environment. Our findings suggest that the majority of learning time is expended in identifying the appropriate initial position and selecting the optimal manipulation viewpoint. Experimental results demonstrate significant improvements in learning efficiency and success rates across four distinct tasks.

A Surprisingly Efficient Representation for Multi-Finger Grasping

Aug 05, 2024

Abstract: The problem of grasping objects using a multi-finger hand has received significant attention in recent years. However, it remains challenging to handle a large number of unfamiliar objects in real and cluttered environments. In this work, we propose a representation that can be effectively mapped to the multi-finger grasp space. Based on this representation, we develop a simple decision model that generates accurate grasp quality scores for different multi-finger grasp poses using only hundreds to thousands of training samples. We demonstrate that our representation performs well on a real robot and achieves a success rate of 78.64% after training with only 500 real-world grasp attempts and 87% with 4500 grasp attempts. Additionally, we achieve a success rate of 84.51% in a dynamic human-robot handover scenario using a multi-finger hand.

ManiPose: A Comprehensive Benchmark for Pose-aware Object Manipulation in Robotics

Mar 20, 2024

Abstract: Robotic manipulation in everyday scenarios, especially in unstructured environments, requires skills in pose-aware object manipulation (POM), which adapts robots' grasping and handling according to an object's 6D pose. Recognizing an object's position and orientation is crucial for effective manipulation. For example, if a mug is lying on its side, it's more effective to grasp it by the rim rather than the handle. Despite its importance, research in POM skills remains limited, because learning manipulation skills requires pose-varying simulation environments and datasets. This paper introduces ManiPose, a pioneering benchmark designed to advance the study of pose-varying manipulation tasks. ManiPose encompasses: 1) Simulation environments for POM, featuring tasks ranging from 6D pose-specific pick-and-place of single objects to cluttered scenes, and further including interactions with articulated objects. 2) A comprehensive dataset featuring geometrically consistent and manipulation-oriented 6D pose labels for 2936 real-world scanned rigid objects and 100 articulated objects across 59 categories. 3) A baseline for POM that leverages the inference abilities of LLMs (e.g., ChatGPT) to analyze the relationship between 6D pose and task-specific requirements, offering enhanced pose-aware grasp prediction and motion planning capabilities. Our benchmark demonstrates notable advancements in pose estimation, pose-aware manipulation, and real-robot skill transfer, setting new standards for POM research. We will open-source the ManiPose benchmark with the final version of the paper, inviting the community to engage with our resources, available at our website: https://sites.google.com/view/manipose.

AnyGrasp: Robust and Efficient Grasp Perception in Spatial and Temporal Domains

Dec 16, 2022

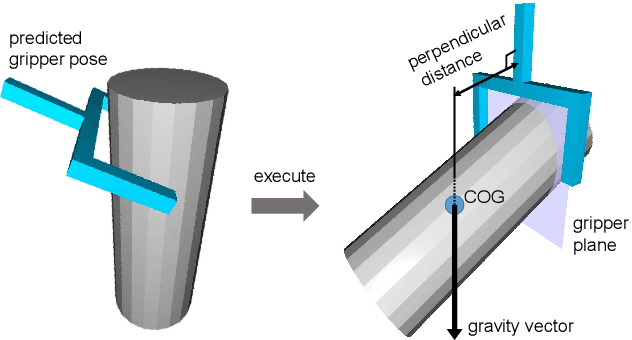

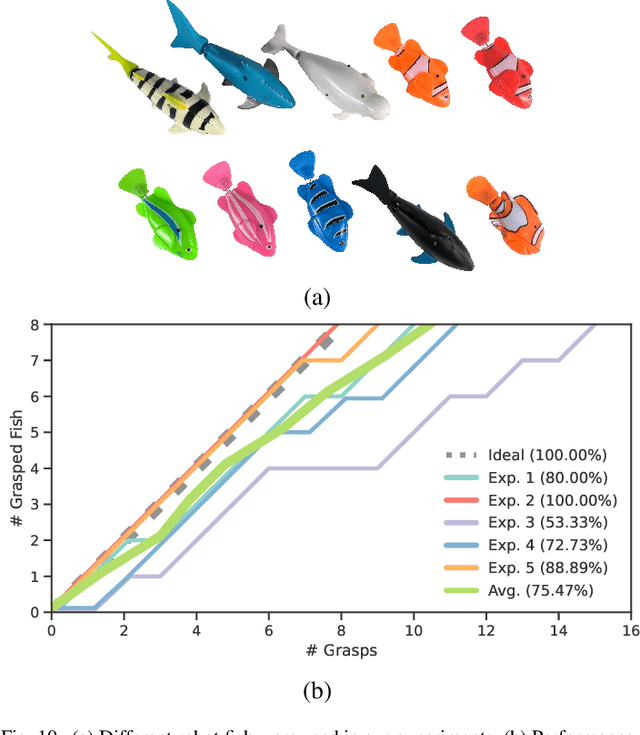

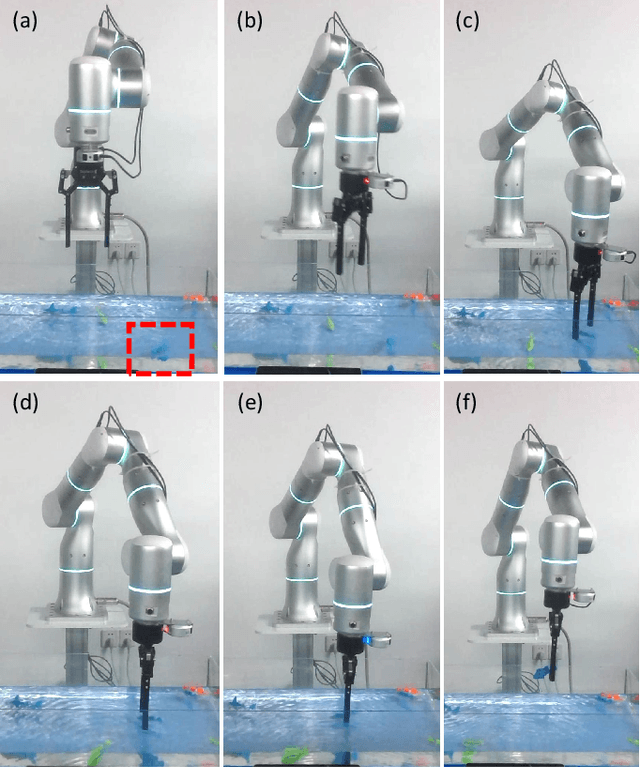

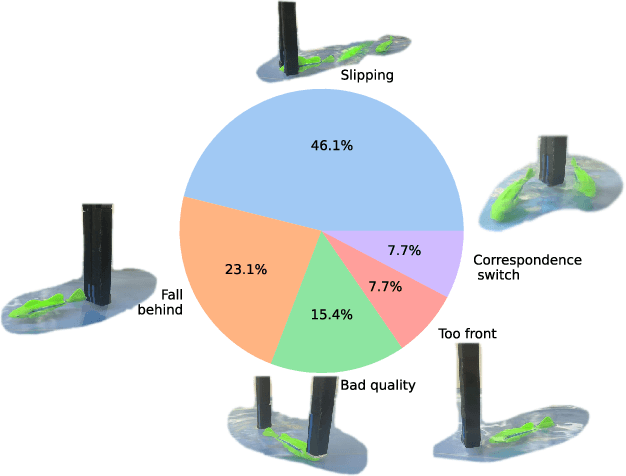

Abstract: As the basis for prehensile manipulation, it is vital to enable robots to grasp as robustly as humans. In daily manipulation, our grasping system is prompt, accurate, flexible and continuous across spatial and temporal domains. Few existing methods cover all these properties for robot grasping. In this paper, we propose a new methodology for grasp perception to give robots these abilities. Specifically, we develop a dense supervision strategy with real perception and analytic labels in the spatial-temporal domain. Additional awareness of objects' center-of-mass is incorporated into the learning process to help improve grasping stability. Utilization of grasp correspondence across observations enables dynamic grasp tracking. Our model, AnyGrasp, can generate accurate, full-DoF, dense and temporally-smooth grasp poses efficiently, and works robustly against large depth sensing noise. Embedded with AnyGrasp, we achieve a 93.3% success rate when clearing bins with over 300 unseen objects, which is comparable with human subjects under controlled conditions. Over 900 MPPH is reported on a single-arm system. For dynamic grasping, we demonstrate catching swimming robot fish in the water.
