Hasti Seifi

Sound2Hap: Learning Audio-to-Vibrotactile Haptic Generation from Human Ratings

Jan 21, 2026

Abstract: Environmental sounds like footsteps, keyboard typing, or dog barking carry rich information and emotional context, making them valuable for designing haptics in user applications. Existing audio-to-vibration methods, however, rely on signal-processing rules tuned for music or games and often fail to generalize across diverse sounds. To address this, we first investigated user perception of four existing audio-to-haptic algorithms, then created a data-driven model for environmental sounds. In Study 1, 34 participants rated vibrations generated by the four algorithms for 1,000 sounds, revealing no consistent algorithm preferences. Using this dataset, we trained Sound2Hap, a CNN-based autoencoder, to generate perceptually meaningful vibrations from diverse sounds with low latency. In Study 2, 15 participants rated its output higher than signal-processing baselines on both audio-vibration match and Haptic Experience Index (HXI), finding it more harmonious with diverse sounds. This work demonstrates a perceptually validated approach to audio-haptic translation, broadening the reach of sound-driven haptics.

HapticLLaMA: A Multimodal Sensory Language Model for Haptic Captioning

Aug 08, 2025

Abstract: Haptic captioning is the task of generating natural language descriptions from haptic signals, such as vibrations, for use in virtual reality, accessibility, and rehabilitation applications. While previous multimodal research has focused primarily on vision and audio, haptic signals for the sense of touch remain underexplored. To address this gap, we formalize the haptic captioning task and propose HapticLLaMA, a multimodal sensory language model that interprets vibration signals into descriptions in a given sensory, emotional, or associative category. We investigate two types of haptic tokenizers, a frequency-based tokenizer and an EnCodec-based tokenizer, that convert haptic signals into sequences of discrete units, enabling their integration with the LLaMA model. HapticLLaMA is trained in two stages: (1) supervised fine-tuning using the LLaMA architecture with LoRA-based adaptation, and (2) fine-tuning via reinforcement learning from human feedback (RLHF). We assess HapticLLaMA's captioning performance using both automated n-gram metrics and human evaluation. HapticLLaMA demonstrates strong capability in interpreting haptic vibration signals, achieving a METEOR score of 59.98 and a BLEU-4 score of 32.06. Additionally, over 61% of the generated captions received human ratings above 3.5 on a 7-point scale, with RLHF yielding a 10% improvement in the overall rating distribution, indicating stronger alignment with human haptic perception. These findings highlight the potential of large language models to process and adapt to sensory data.

HapticCap: A Multimodal Dataset and Task for Understanding User Experience of Vibration Haptic Signals

Jul 17, 2025

Abstract: Haptic signals, from smartphone vibrations to virtual reality touch feedback, can effectively convey information and enhance realism, but designing signals that resonate meaningfully with users is challenging. To facilitate this, we introduce a multimodal dataset and the task of matching user descriptions to vibration haptic signals, and highlight two primary challenges: (1) the lack of large haptic vibration datasets annotated with textual descriptions, as collecting haptic descriptions is time-consuming, and (2) the limited capability of existing tasks and models to describe vibration signals in text. To advance this area, we create HapticCap, the first fully human-annotated haptic-captioned dataset, containing 92,070 haptic-text pairs for user descriptions of sensory, emotional, and associative attributes of vibrations. Based on HapticCap, we propose the haptic-caption retrieval task and present the results of this task from a supervised contrastive learning framework that brings together text representations within specific categories and vibrations. Overall, the combination of language model T5 and audio model AST yields the best performance in the haptic-caption retrieval task, especially when separately trained for each description category.

Partitioner Guided Modal Learning Framework

Jul 15, 2025

Abstract: Multimodal learning benefits from information in multiple modalities, and each learned modal representation can be divided into uni-modal features, which can be learned from uni-modal training, and paired-modal features, which can be learned from cross-modal interaction. Building on this perspective, we propose a partitioner-guided modal learning framework, PgM, which consists of the modal partitioner, uni-modal learner, paired-modal learner, and uni-paired modal decoder. The modal partitioner segments the learned modal representation into uni-modal and paired-modal features. The modal learner incorporates two dedicated components for uni-modal and paired-modal learning. The uni-paired modal decoder reconstructs the modal representation based on uni-modal and paired-modal features. PgM offers three key benefits: 1) thorough learning of uni-modal and paired-modal features, 2) flexible distribution adjustment for uni-modal and paired-modal representations to suit diverse downstream tasks, and 3) different learning rates across modalities and partitions. Extensive experiments demonstrate the effectiveness of PgM across four multimodal tasks and further highlight its transferability to existing models. Additionally, we visualize the distribution of uni-modal and paired-modal features across modalities and tasks, offering insights into their respective contributions.

ChartQA-X: Generating Explanations for Charts

Apr 17, 2025

Abstract: The ability to interpret and explain complex information from visual data in charts is crucial for data-driven decision-making. In this work, we address the challenge of providing explanations alongside answering questions about chart images. We present ChartQA-X, a comprehensive dataset comprising various chart types with 28,299 contextually relevant questions, answers, and detailed explanations. These explanations are generated by prompting six different models and selecting the best responses based on metrics such as faithfulness, informativeness, coherence, and perplexity. Our experiments show that models fine-tuned on our dataset for explanation generation achieve superior performance across various metrics and demonstrate improved accuracy in question-answering tasks on new datasets. By integrating answers with explanatory narratives, our approach enhances the ability of intelligent agents to convey complex information effectively, improve user understanding, and foster trust in the generated responses.

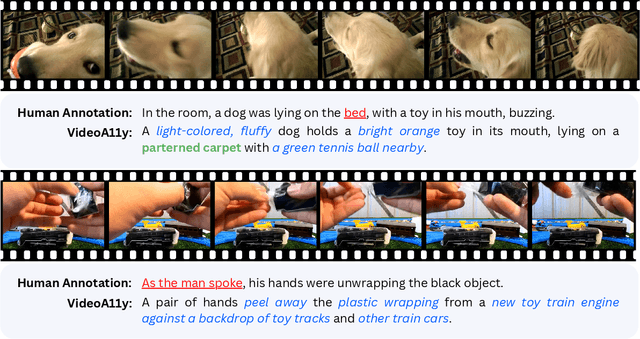

VideoA11y: Method and Dataset for Accessible Video Description

Feb 27, 2025

Abstract: Video descriptions are crucial for blind and low vision (BLV) users to access visual content. However, current artificial intelligence models for generating descriptions often fall short due to limitations in the quality of human annotations within training datasets, resulting in descriptions that do not fully meet BLV users' needs. To address this gap, we introduce VideoA11y, an approach that leverages multimodal large language models (MLLMs) and video accessibility guidelines to generate descriptions tailored for BLV individuals. Using this method, we have curated VideoA11y-40K, the largest and most comprehensive dataset of 40,000 videos described for BLV users. Rigorous experiments across 15 video categories, involving 347 sighted participants, 40 BLV participants, and seven professional describers, showed that VideoA11y descriptions outperform novice human annotations and are comparable to trained human annotations in clarity, accuracy, objectivity, descriptiveness, and user satisfaction. We evaluated models on VideoA11y-40K using both standard and custom metrics, demonstrating that MLLMs fine-tuned on this dataset produce high-quality accessible descriptions. Code and dataset are available at https://people-robots.github.io/VideoA11y.

Grounding Emotional Descriptions to Electrovibration Haptic Signals

Nov 04, 2024

Abstract: Designing and displaying haptic signals with sensory and emotional attributes can improve the user experience in various applications. Free-form user language provides rich sensory and emotional information for haptic design (e.g., "This signal feels smooth and exciting"), but little work exists on linking user descriptions to haptic signals (i.e., language grounding). To address this gap, we conducted a study where 12 users described the feel of 32 signals perceived on a surface haptics (i.e., electrovibration) display. We developed a computational pipeline using natural language processing (NLP) techniques, such as GPT-3.5 Turbo and word embedding methods, to extract sensory and emotional keywords and group them into semantic clusters (i.e., concepts). We linked the keyword clusters to haptic signal features (e.g., pulse count) using correlation analysis. The proposed pipeline demonstrates the viability of a computational approach to analyzing haptic experiences. We discuss our future plans for creating a predictive model of haptic experience.
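The correlation step mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not say which correlation coefficient was used, so Pearson's r is an assumption here, and the data, the "rough" keyword cluster, and the function name `pearson` are all hypothetical.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Sum of products of deviations from the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical data: one entry per haptic signal.
pulse_count = [1, 2, 3, 4, 5]       # signal feature
rough_mentions = [0, 1, 1, 3, 4]    # times users used a keyword from a "rough" cluster

r = pearson(pulse_count, rough_mentions)
print(f"r = {r:.3f}")
```

A value of r close to 1 or -1 would suggest that the keyword cluster tracks the signal feature, while a value near 0 would suggest no linear relationship.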

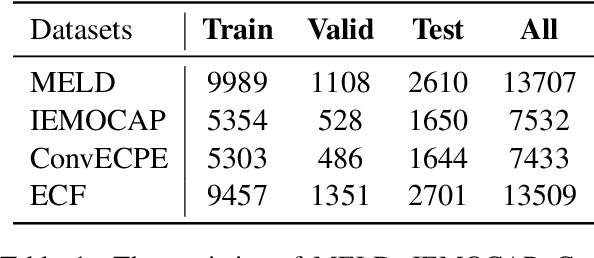

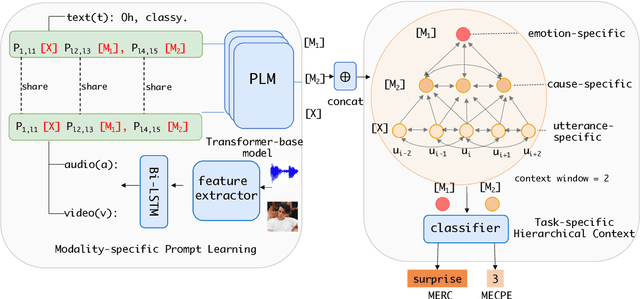

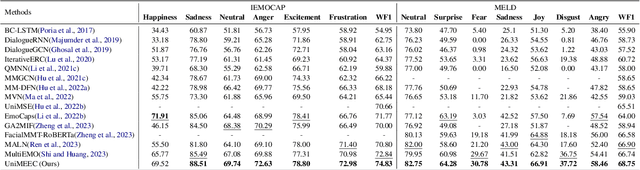

UniMEEC: Towards Unified Multimodal Emotion Recognition and Emotion Cause

Mar 30, 2024

Abstract: Multimodal emotion recognition in conversation (MERC) and multimodal emotion-cause pair extraction (MECPE) have recently garnered significant attention. Emotions are the expression of affect or feelings; responses to specific events, thoughts, or situations are known as emotion causes. Both are like two sides of a coin, collectively describing human behaviors and intents. However, most existing works treat MERC and MECPE as separate tasks, which may result in potential challenges in integrating emotion and cause in real-world applications. In this paper, we propose a Unified Multimodal Emotion recognition and Emotion-Cause analysis framework (UniMEEC) to explore the causality and complementarity between emotion and emotion cause. Concretely, UniMEEC reformulates the MERC and MECPE tasks as two mask prediction problems, enhancing the interaction between emotion and cause. Meanwhile, UniMEEC shares the prompt learning among modalities for probing modality-specific knowledge from the pre-trained model. Furthermore, we propose a task-specific hierarchical context aggregation to control the information flow to the task. Experimental results on four public benchmark datasets verify the model's performance on MERC and MECPE tasks and show consistent improvements compared with state-of-the-art methods.

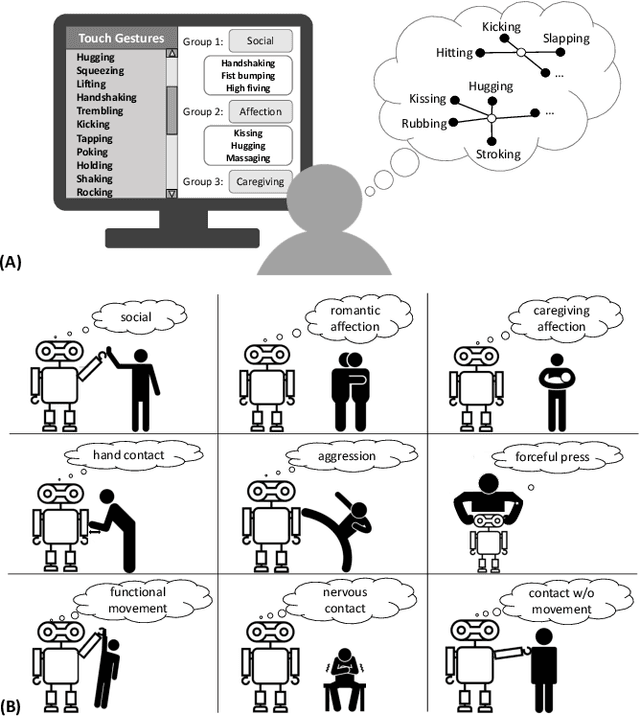

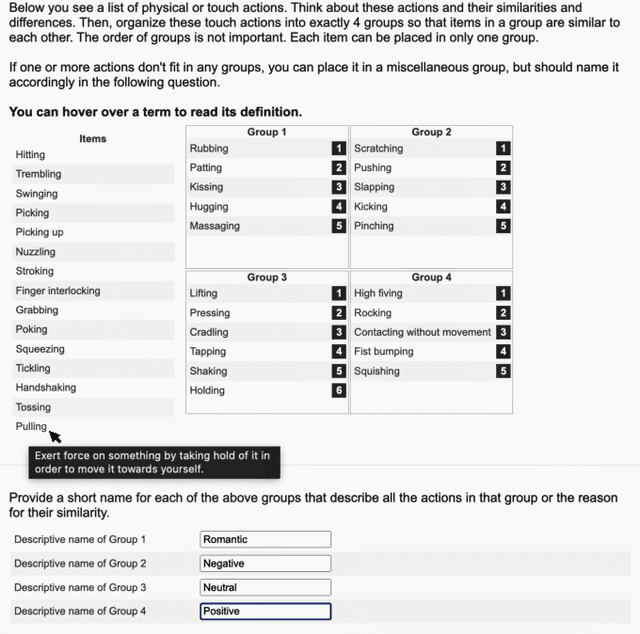

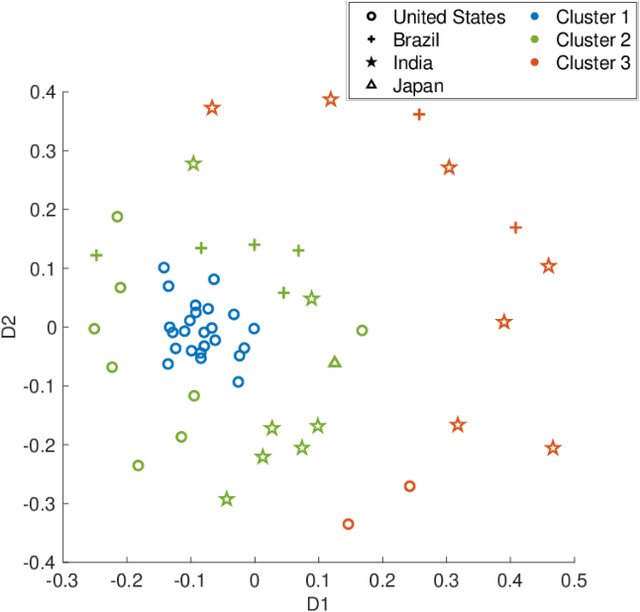

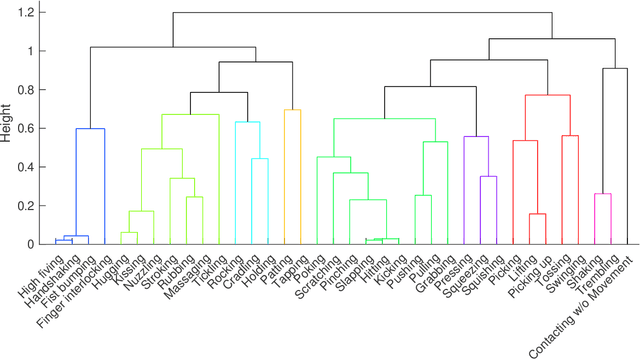

Clustering Social Touch Gestures for Human-Robot Interaction

Apr 03, 2023

Abstract: Social touch provides a rich non-verbal communication channel between humans and robots. Prior work has identified a set of touch gestures for human-robot interaction and described them with natural language labels (e.g., stroking, patting). Yet, no data exists on the semantic relationships between the touch gestures in users' minds. To endow robots with touch intelligence, we investigated how people perceive the similarities of social touch labels from the literature. In an online study, 45 participants grouped 36 social touch labels based on their perceived similarities and annotated their groupings with descriptive names. We derived quantitative similarities of the gestures from these groupings and analyzed the similarities using hierarchical clustering. The analysis resulted in 9 clusters of touch gestures formed around the social, emotional, and contact characteristics of the gestures. We discuss the implications of our results for designing and evaluating touch sensing and interactions with social robots.
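One common way to turn participant groupings into quantitative similarities is a co-occurrence count: the similarity of two labels is the fraction of participants who placed them in the same group. The sketch below assumes that measure, which the abstract does not confirm. The labels, the groupings, and the function name `cooccurrence_similarity` are hypothetical.

```python
from itertools import combinations

def cooccurrence_similarity(groupings, labels):
    """Fraction of participants who put each pair of labels in the same group.

    groupings: one entry per participant, each a list of sets of labels.
    Returns a dict keyed by alphabetically ordered label pairs.
    """
    counts = {pair: 0 for pair in combinations(sorted(labels), 2)}
    for participant_groups in groupings:
        for group in participant_groups:
            # Every pair inside one group counts as "judged similar" once
            for pair in combinations(sorted(group), 2):
                counts[pair] += 1
    n_participants = len(groupings)
    return {pair: count / n_participants for pair, count in counts.items()}

# Hypothetical data: three participants sorting four touch labels.
labels = ["hug", "pat", "slap", "stroke"]
groupings = [
    [{"stroke", "pat"}, {"hug"}, {"slap"}],
    [{"stroke", "pat", "hug"}, {"slap"}],
    [{"stroke", "hug"}, {"pat", "slap"}],
]

similarity = cooccurrence_similarity(groupings, labels)
# Distances (1 - similarity) are the usual input to hierarchical clustering
distance = {pair: 1.0 - s for pair, s in similarity.items()}
```

The resulting pairwise distances could then be passed, in condensed form, to a hierarchical clustering routine such as `scipy.cluster.hierarchy.linkage`.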

Charting Visual Impression of Robot Hands

Nov 17, 2022

Abstract: A wide variety of robotic hands have been designed to date. Yet, we do not know how users perceive these hands and feel about interacting with them. To inform hand design for social robots, we compiled a dataset of 73 robot hands and ran an online study, in which 160 users rated their impressions of the hands using 17 rating scales. Next, we developed 17 regression models that can predict user ratings (e.g., humanlike) from the design features of the hands (e.g., number of fingers). The models have less than a 10-point error in predicting the user ratings on a 0-100 scale. The shape of the fingertips, color scheme, and size of the hands influence the user ratings the most. We present simple guidelines to improve user impression of robot hands and outline remaining questions for future work.
