Yasemin Vardar

Delft University of Technology, Delft, The Netherlands

Grounding Emotional Descriptions to Electrovibration Haptic Signals

Nov 04, 2024

Abstract: Designing and displaying haptic signals with sensory and emotional attributes can improve the user experience in various applications. Free-form user language provides rich sensory and emotional information for haptic design (e.g., "This signal feels smooth and exciting"), but little work exists on linking user descriptions to haptic signals (i.e., language grounding). To address this gap, we conducted a study where 12 users described the feel of 32 signals perceived on a surface haptics (i.e., electrovibration) display. We developed a computational pipeline using natural language processing (NLP) techniques, such as GPT-3.5 Turbo and word embedding methods, to extract sensory and emotional keywords and group them into semantic clusters (i.e., concepts). We linked the keyword clusters to haptic signal features (e.g., pulse count) using correlation analysis. The proposed pipeline demonstrates the viability of a computational approach to analyzing haptic experiences. We discuss our future plans for creating a predictive model of haptic experience.

Tactile Weight Rendering: A Review for Researchers and Developers

Feb 20, 2024

Abstract: Haptic rendering of weight plays an essential role in naturalistic object interaction in virtual environments. While kinesthetic devices have traditionally been used for this aim by applying forces on the limbs, tactile interfaces acting on the skin have recently offered potential solutions to enhance or substitute kinesthetic ones. Here, we aim to provide an in-depth overview and comparison of existing tactile weight rendering approaches. We categorized these approaches based on their type of stimulation into asymmetric vibration and skin stretch, further divided according to the working mechanism of the devices. Then, we compared these approaches using various criteria, including physical, mechanical, and perceptual characteristics of the reported devices and their potential applications. We found that asymmetric vibration devices have the smallest form factor, while skin stretch devices relying on the motion of flat surfaces, belts, or tactors present numerous mechanical and perceptual advantages for scenarios requiring more accurate weight rendering. Finally, we discussed the selection of the proposed categorization of devices and their application scopes, together with the limitations and opportunities for future research. We hope this study guides the development and use of tactile interfaces to achieve a more naturalistic object interaction and manipulation in virtual environments.

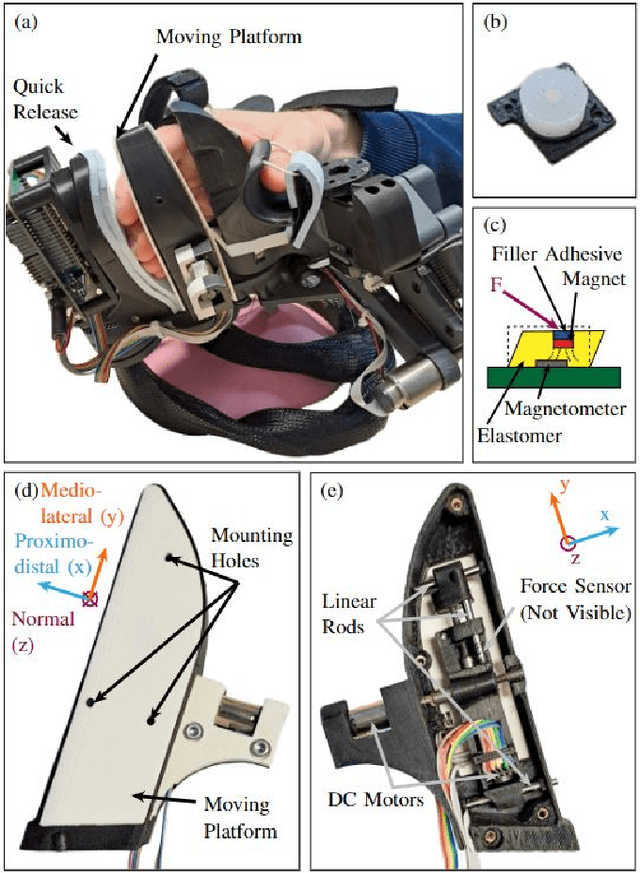

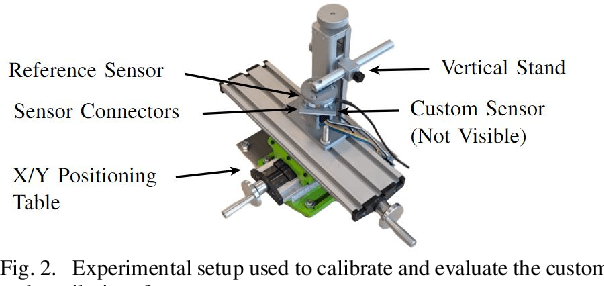

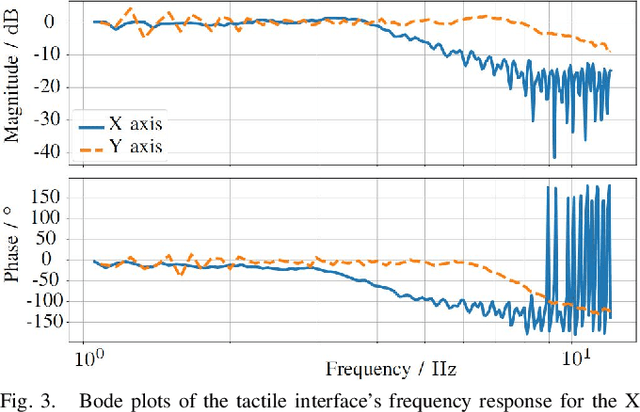

Design and evaluation of a multi-finger skin-stretch tactile interface for hand rehabilitation robots

Feb 19, 2024

Abstract: Object properties perceived through the tactile sense, such as weight, friction, and slip, greatly influence motor control during manipulation tasks. However, the provision of tactile information during robotic training in neurorehabilitation has not been well explored. Therefore, we designed and evaluated a tactile interface based on a two-degrees-of-freedom moving platform mounted on a hand rehabilitation robot that provides skin stretch at four fingertips, from the index through the little finger. To accurately control the rendered forces, we included a custom magnetic-based force sensor to control the tactile interface in a closed loop. The technical evaluation showed that our custom force sensor achieved measurable shear forces of ±8 N with accuracies of 95.2-98.4% influenced by hysteresis, viscoelastic creep, and torsional deformation. The tactile interface accurately rendered forces with a step response steady-state accuracy of 97.5-99.4% and a frequency response in the range of most activities of daily living. Our sensor showed the highest measurement-range-to-size ratio and comparable accuracy to sensors of its kind. These characteristics enabled the closed-loop force control of the tactile interface for precise rendering of multi-finger two-dimensional skin stretch. The proposed system is a first step towards more realistic and rich haptic feedback during robotic sensorimotor rehabilitation, potentially improving therapy outcomes.

SENS3: Multisensory Database of Finger-Surface Interactions and Corresponding Sensations

Jan 03, 2024

Abstract: The growing demand for natural interactions with technology underscores the importance of achieving realistic touch sensations in digital environments. Realizing this goal highly depends on comprehensive databases of finger-surface interactions, which need further development. Here, we present SENS3, an extensive open-access repository of multisensory data acquired from fifty surfaces when two participants explored them with their fingertips through static contact, pressing, tapping, and sliding. SENS3 encompasses high-fidelity visual, audio, and haptic information recorded during these interactions, including videos, sounds, contact forces, torques, positions, accelerations, skin temperature, heat flux, and surface photographs. Additionally, it incorporates thirteen participants' psychophysical sensation ratings while exploring these surfaces freely. We anticipate that SENS3 will be valuable for advancing multisensory texture rendering, user experience development, and touch sensing in robotics.
