Hanlin Wu

SUG-Occ: An Explicit Semantics and Uncertainty Guided Sparse Learning Framework for Real-Time 3D Occupancy Prediction

Jan 16, 2026

Abstract:As autonomous driving moves toward full scene understanding, 3D semantic occupancy prediction has emerged as a crucial perception task, offering voxel-level semantics beyond traditional detection and segmentation paradigms. However, such a refined representation for scene understanding incurs prohibitive computation and memory overhead, posing a major barrier to practical real-time deployment. To address this, we propose SUG-Occ, an explicit Semantics and Uncertainty Guided Sparse Learning Enabled 3D Occupancy Prediction Framework, which exploits the inherent sparsity of 3D scenes to reduce redundant computation while maintaining geometric and semantic completeness. Specifically, we first utilize semantic and uncertainty priors to suppress projections from free space during view transformation while employing an explicit unsigned distance encoding to enhance geometric consistency, producing a structurally consistent sparse 3D representation. Second, we design a cascade sparse completion module via hyper cross sparse convolution and generative upsampling to enable efficient coarse-to-fine reasoning. Finally, we devise an object contextual representation (OCR) based mask decoder that aggregates global semantic context from sparse features and refines voxel-wise predictions via lightweight query-context interactions, avoiding expensive attention operations over volumetric features. Extensive experiments on the SemanticKITTI benchmark demonstrate that the proposed approach outperforms the baselines, achieving a 7.34% improvement in accuracy and a 57.8% gain in efficiency.

DeltaVLM: Interactive Remote Sensing Image Change Analysis via Instruction-guided Difference Perception

Jul 30, 2025

Abstract:Accurate interpretation of land-cover changes in multi-temporal satellite imagery is critical for real-world scenarios. However, existing methods typically provide only one-shot change masks or static captions, limiting their ability to support interactive, query-driven analysis. In this work, we introduce remote sensing image change analysis (RSICA) as a new paradigm that combines the strengths of change detection and visual question answering to enable multi-turn, instruction-guided exploration of changes in bi-temporal remote sensing images. To support this task, we construct ChangeChat-105k, a large-scale instruction-following dataset, generated through a hybrid rule-based and GPT-assisted process, covering six interaction types: change captioning, classification, quantification, localization, open-ended question answering, and multi-turn dialogues. Building on this dataset, we propose DeltaVLM, an end-to-end architecture tailored for interactive RSICA. DeltaVLM features three innovations: (1) a fine-tuned bi-temporal vision encoder to capture temporal differences; (2) a visual difference perception module with a cross-semantic relation measuring (CSRM) mechanism to interpret changes; and (3) an instruction-guided Q-former to effectively extract query-relevant difference information from visual changes, aligning them with textual instructions. We train DeltaVLM on ChangeChat-105k using a frozen large language model, adapting only the vision and alignment modules to optimize efficiency. Extensive experiments and ablation studies demonstrate that DeltaVLM achieves state-of-the-art performance on both single-turn captioning and multi-turn interactive change analysis, outperforming existing multimodal large language models and remote sensing vision-language models. Code, dataset and pre-trained weights are available at https://github.com/hanlinwu/DeltaVLM.

Single-Step Latent Consistency Model for Remote Sensing Image Super-Resolution

Mar 25, 2025

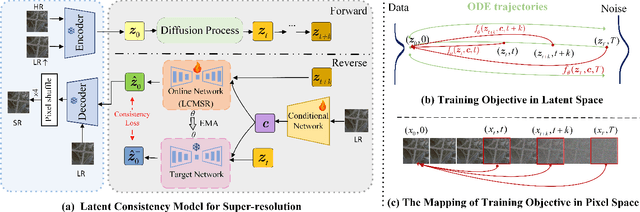

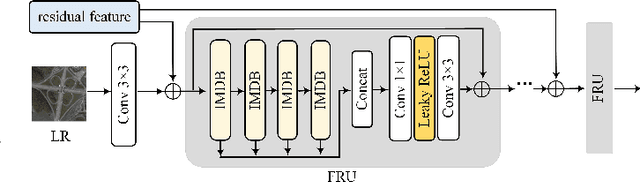

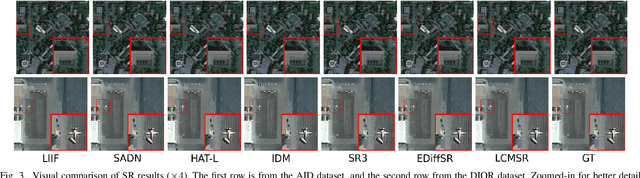

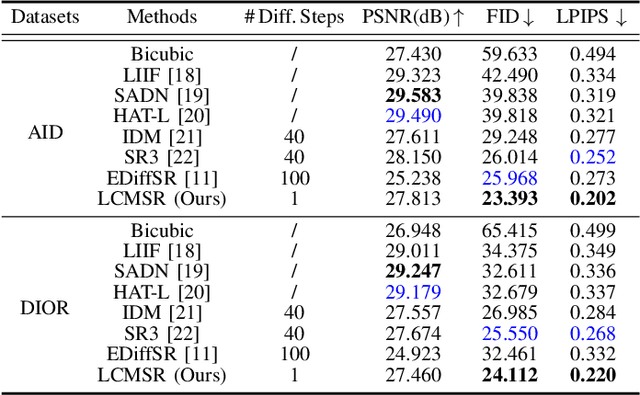

Abstract:Recent advancements in diffusion models (DMs) have greatly advanced remote sensing image super-resolution (RSISR). However, their iterative sampling processes often result in slow inference speeds, limiting their application in real-time tasks. To address this challenge, we propose the latent consistency model for super-resolution (LCMSR), a novel single-step diffusion approach designed to enhance both efficiency and visual quality in RSISR tasks. Our proposal is structured into two distinct stages. In the first stage, we pretrain a residual autoencoder to encode the differential information between high-resolution (HR) and low-resolution (LR) images, transitioning the diffusion process into a latent space to reduce computational costs. The second stage focuses on consistency diffusion learning, which aims to learn the distribution of residual encodings in the latent space, conditioned on LR images. The consistency constraint enforces that predictions at any two timesteps along the reverse diffusion trajectory remain consistent, enabling direct mapping from noise to data. As a result, the proposed LCMSR reduces the iterative steps of traditional diffusion models from 50-1000 or more to just a single step, significantly improving efficiency. Experimental results demonstrate that LCMSR effectively balances efficiency and performance, achieving inference times comparable to non-diffusion models while maintaining high-quality output.
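The consistency constraint described above can be illustrated with a toy sketch. It is not the LCMSR implementation: the linear trajectory `x_t = x0 + t * eps`, the `oracle` predictor, and every name below are assumptions made for illustration only. The sketch shows that a function mapping any two points of the same trajectory to the same output incurs (near) zero consistency loss, whereas a predictor that ignores the timestep does not.

```python
def consistency_loss(f, x_a, t_a, x_b, t_b):
    """Mean squared gap between predictions at two points of one trajectory."""
    pred_a = f(x_a, t_a)
    pred_b = f(x_b, t_b)
    return sum((a - b) ** 2 for a, b in zip(pred_a, pred_b)) / len(pred_a)

# Toy trajectory (assumption): x_t = x0 + t * eps for a fixed noise vector eps.
x0 = [0.2, -0.5, 1.0]
eps = [1.0, 0.5, -2.0]

def point_on_trajectory(t):
    """Return the trajectory point at time t."""
    return [x + t * e for x, e in zip(x0, eps)]

def oracle(x_t, t):
    """Idealised consistency function: maps any trajectory point back to x0."""
    return [x - t * e for x, e in zip(x_t, eps)]

def identity(x_t, t):
    """A poor predictor that ignores the timestep."""
    return list(x_t)

t_a, t_b = 0.3, 0.9
x_a, x_b = point_on_trajectory(t_a), point_on_trajectory(t_b)

loss_oracle = consistency_loss(oracle, x_a, t_a, x_b, t_b)      # close to zero
loss_identity = consistency_loss(identity, x_a, t_a, x_b, t_b)  # clearly positive
```

Once such a function is learned, a single evaluation at the noisiest timestep yields the data estimate, which is the sense in which sampling collapses to one step.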

Distinct social-linguistic processing between humans and large audio-language models: Evidence from model-brain alignment

Mar 25, 2025

Abstract:Voice-based AI development faces unique challenges in processing both linguistic and paralinguistic information. This study compares how large audio-language models (LALMs) and humans integrate speaker characteristics during speech comprehension, asking whether LALMs process speaker-contextualized language in ways that parallel human cognitive mechanisms. We compared two LALMs' (Qwen2-Audio and Ultravox 0.5) processing patterns with human EEG responses. Using surprisal and entropy metrics from the models, we analyzed their sensitivity to speaker-content incongruency across social stereotype violations (e.g., a man claiming to regularly get manicures) and biological knowledge violations (e.g., a man claiming to be pregnant). Results revealed that Qwen2-Audio exhibited increased surprisal for speaker-incongruent content and its surprisal values significantly predicted human N400 responses, while Ultravox 0.5 showed limited sensitivity to speaker characteristics. Importantly, neither model replicated the human-like processing distinction between social violations (eliciting N400 effects) and biological violations (eliciting P600 effects). These findings reveal both the potential and limitations of current LALMs in processing speaker-contextualized language, and suggest differences in social-linguistic processing mechanisms between humans and LALMs.
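For readers unfamiliar with the two metrics, the following is a minimal sketch of how surprisal and entropy are computed from a next-token probability distribution. The toy distributions and the four-word vocabulary are hypothetical; the study derives these quantities from the LALMs' own output distributions, and the extraction pipeline is not described here.

```python
import math

def surprisal(probs, target_index):
    """Surprisal (in bits) of the observed token: -log2 p(token | context)."""
    return -math.log2(probs[target_index])

def entropy(probs):
    """Shannon entropy (in bits) of the next-token distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)

# Hypothetical next-token distributions over a 4-word toy vocabulary.
congruent = [0.70, 0.10, 0.10, 0.10]    # observed word (index 0) is expected
incongruent = [0.05, 0.45, 0.25, 0.25]  # observed word (index 0) is unexpected

s_congruent = surprisal(congruent, 0)
s_incongruent = surprisal(incongruent, 0)
h_congruent = entropy(congruent)
h_incongruent = entropy(incongruent)
```

Surprisal measures how unexpected the word that actually occurred was, while entropy measures how uncertain the model was before hearing it; a speaker-incongruent word would show up as higher surprisal in a model that is sensitive to speaker context.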

A Periodic Bayesian Flow for Material Generation

Feb 04, 2025

Abstract:Generative modeling of crystal data distribution is an important yet challenging task due to the unique periodic physical symmetry of crystals. Diffusion-based methods have shown early promise in modeling crystal distribution. More recently, Bayesian Flow Networks were introduced to aggregate noisy latent variables, resulting in a variance-reduced parameter space that has been shown to be advantageous for modeling Euclidean data distributions with structural constraints (Song et al., 2023). Inspired by this, we seek to unlock its potential for modeling variables located in non-Euclidean manifolds, e.g., those within crystal structures, by overcoming challenging theoretical issues. We introduce CrysBFN, a novel crystal generation method by proposing a periodic Bayesian flow, which essentially differs from the original Gaussian-based BFN by exhibiting non-monotonic entropy dynamics. To successfully realize the concept of periodic Bayesian flow, CrysBFN integrates a new entropy conditioning mechanism and empirically demonstrates its significance compared to time-conditioning. Extensive experiments over both crystal ab initio generation and crystal structure prediction tasks demonstrate the superiority of CrysBFN, which consistently achieves new state-of-the-art results on all benchmarks. Surprisingly, we found that CrysBFN enjoys a significant improvement in sampling efficiency, e.g., ~100x speedup (10 vs. 2000 network forward steps) compared with previous diffusion-based methods on the MP-20 dataset. Code is available at https://github.com/wu-han-lin/CrysBFN.

Probabilistic adaptation of language comprehension for individual speakers: Evidence from neural oscillations

Feb 03, 2025

Abstract:Listeners adapt language comprehension based on their mental representations of speakers, but how these representations are dynamically updated remains unclear. We investigated whether listeners probabilistically adapt their comprehension based on the likelihood of speakers producing stereotype-incongruent utterances. Our findings reveal two potential mechanisms: a speaker-general mechanism that adjusts overall expectations about speaker-content relationships, and a speaker-specific mechanism that updates individual speaker models. In two EEG experiments, participants heard speakers make stereotype-congruent or incongruent utterances, with the incongruency base rate manipulated between blocks. In Experiment 1, speaker incongruency modulated both high-beta (21-30 Hz) and theta (4-6 Hz) oscillations: incongruent utterances decreased oscillatory power in the low base rate condition but increased it in the high base rate condition. The theta effect varied with listeners' openness trait: less open participants showed theta increases to speaker-incongruencies, suggesting maintenance of speaker-specific information, while more open participants showed theta decreases, indicating flexible model updating. In Experiment 2, we dissociated base rate from the target speaker by manipulating the overall base rate using an alternative non-target speaker. Only the high-beta effect persisted, showing a power decrease for speaker-incongruencies in the low base rate condition but no effect in the high base rate condition. The high-beta oscillations might reflect the speaker-general adjustment, while theta oscillations may index the speaker-specific model updating. These findings provide evidence for how language processing is shaped by social cognition in real time.

Speaker effects in spoken language comprehension

Dec 10, 2024

Abstract:The identity of a speaker significantly influences spoken language comprehension by affecting both perception and expectation. This review explores speaker effects, focusing on how speaker information impacts language processing. We propose an integrative model featuring the interplay between bottom-up perception-based processes driven by acoustic details and top-down expectation-based processes driven by a speaker model. The acoustic details influence lower-level perception, while the speaker model modulates both lower-level and higher-level processes such as meaning interpretation and pragmatic inferences. We define speaker-idiosyncrasy and speaker-demographics effects and demonstrate how bottom-up and top-down processes interact at various levels in different scenarios. This framework contributes to psycholinguistic theory by offering a comprehensive account of how speaker information interacts with linguistic content to shape message construction. We suggest that speaker effects can serve as indices of a language learner's proficiency and an individual's characteristics of social cognition. We encourage future research to extend these findings to AI speakers, probing the universality of speaker effects across humans and artificial agents.

Latent Diffusion, Implicit Amplification: Efficient Continuous-Scale Super-Resolution for Remote Sensing Images

Oct 30, 2024

Abstract:Recent advancements in diffusion models have significantly improved performance in super-resolution (SR) tasks. However, previous research often overlooks the fundamental differences between SR and general image generation. General image generation involves creating images from scratch, while SR focuses specifically on enhancing existing low-resolution (LR) images by adding typically missing high-frequency details. This oversight not only increases the training difficulty but also limits their inference efficiency. Furthermore, previous diffusion-based SR methods are typically trained and inferred at fixed integer scale factors, lacking flexibility to meet the needs of up-sampling with non-integer scale factors. To address these issues, this paper proposes an efficient and elastic diffusion-based SR model (E$^2$DiffSR), specially designed for continuous-scale SR in remote sensing imagery. E$^2$DiffSR employs a two-stage latent diffusion paradigm. During the first stage, an autoencoder is trained to capture the differential priors between high-resolution (HR) and LR images. The encoder intentionally ignores the existing LR content to alleviate the encoding burden, while the decoder introduces an SR branch equipped with a continuous scale upsampling module to accomplish the reconstruction under the guidance of the differential prior. In the second stage, a conditional diffusion model is learned within the latent space to predict the true differential prior encoding. Experimental results demonstrate that E$^2$DiffSR achieves superior objective metrics and visual quality compared to the state-of-the-art SR methods. Additionally, it reduces the inference time of diffusion-based SR methods to a level comparable to that of non-diffusion methods.
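To make "continuous-scale" concrete, the sketch below resamples a 1D signal by an arbitrary, possibly non-integer, scale factor using plain linear interpolation. This is a generic illustration only, not the learned upsampling module of E$^2$DiffSR; the function name `resample_1d` and the pixel-centre alignment convention are assumptions.

```python
def resample_1d(signal, scale):
    """Linearly resample `signal` by an arbitrary positive scale factor."""
    n_in = len(signal)
    n_out = max(1, round(n_in * scale))
    out = []
    for i in range(n_out):
        # Map the centre of output sample i back to input coordinates.
        src = (i + 0.5) / scale - 0.5
        # Clamp to the valid range so border samples replicate the edge value.
        src = min(max(src, 0.0), n_in - 1)
        lo = int(src)
        hi = min(lo + 1, n_in - 1)
        w = src - lo
        out.append((1 - w) * signal[lo] + w * signal[hi])
    return out

low_res = [0.0, 1.0, 2.0, 3.0]
up_2_5x = resample_1d(low_res, 2.5)  # non-integer factor: 4 samples -> 10
same = resample_1d(low_res, 1.0)     # scale 1.0 returns the input values
```

Because the output grid is defined by coordinates rather than by a fixed stride, the same routine serves any scale; methods of the kind described above replace the fixed interpolation kernel with a learned, scale-conditioned one.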

When A Man Says He Is Pregnant: ERP Evidence for A Rational Account of Speaker-contextualized Language Comprehension

Sep 26, 2024

Abstract:Spoken language is often, if not always, understood in a context that includes the identities of speakers. For instance, we can easily make sense of an utterance such as "I'm going to have a manicure this weekend" or "The first time I got pregnant I had a hard time" when the utterance is spoken by a woman, but it would be harder to understand when it is spoken by a man. Previous event-related potential (ERP) studies have shown mixed results regarding the neurophysiological responses to such speaker-mismatched utterances, with some reporting an N400 effect and others a P600 effect. In an experiment involving 64 participants, we showed that these different ERP effects reflect distinct cognitive processes employed to resolve the speaker-message mismatch. When possible, the message is integrated with the speaker context to arrive at an interpretation, as in the case of violations of social stereotypes (e.g., men getting a manicure), resulting in an N400 effect. However, when such integration is impossible due to violations of biological knowledge (e.g., men getting pregnant), listeners engage in an error correction process to revise either the perceived utterance or the speaker context, resulting in a P600 effect. Additionally, we found that the social N400 effect decreased as a function of the listener's personality trait of openness, while the biological P600 effect remained robust. Our findings help to reconcile the empirical inconsistencies in the literature and provide a rational account of speaker-contextualized language comprehension.

ChangeChat: An Interactive Model for Remote Sensing Change Analysis via Multimodal Instruction Tuning

Sep 13, 2024

Abstract:Remote sensing (RS) change analysis is vital for monitoring Earth's dynamic processes by detecting alterations in images over time. Traditional change detection excels at identifying pixel-level changes but lacks the ability to contextualize these alterations. While recent advancements in change captioning offer natural language descriptions of changes, they do not support interactive, user-specific queries. To address these limitations, we introduce ChangeChat, the first bitemporal vision-language model (VLM) designed specifically for RS change analysis. ChangeChat utilizes multimodal instruction tuning, allowing it to handle complex queries such as change captioning, category-specific quantification, and change localization. To enhance the model's performance, we developed the ChangeChat-87k dataset, which was generated using a combination of rule-based methods and GPT-assisted techniques. Experiments show that ChangeChat offers a comprehensive, interactive solution for RS change analysis, achieving performance comparable to or even better than state-of-the-art (SOTA) methods on specific tasks, and significantly surpassing the latest general-domain model, GPT-4. Code and pre-trained weights are available at https://github.com/hanlinwu/ChangeChat.
