Guangyuan Jiang

Rapid Word Learning Through Meta In-Context Learning

Feb 20, 2025

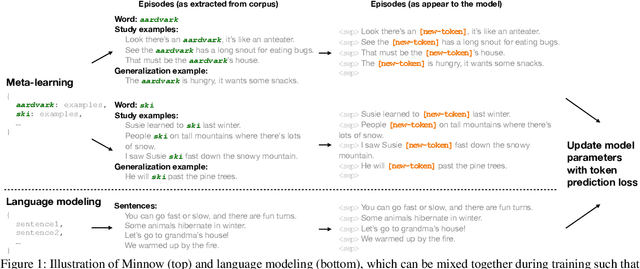

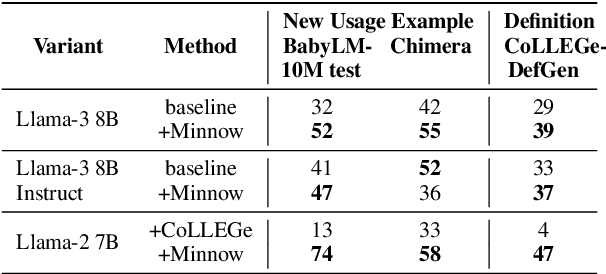

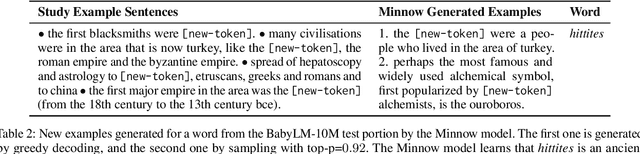

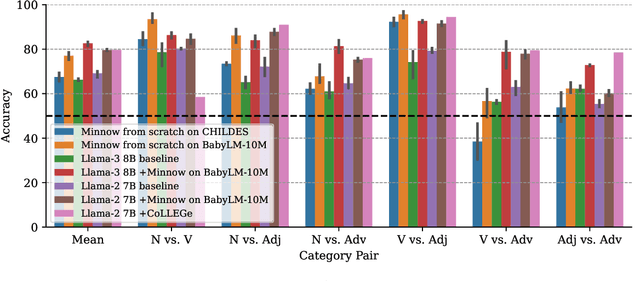

Abstract: Humans can quickly learn a new word from a few illustrative examples, and then systematically and flexibly use it in novel contexts. Yet the abilities of current language models for few-shot word learning, and methods for improving these abilities, are underexplored. In this study, we introduce a novel method, Meta-training for IN-context learNing Of Words (Minnow). This method trains language models to generate new examples of a word's usage given a few in-context examples, using a special placeholder token to represent the new word. This training is repeated on many new words to develop a general word-learning ability. We find that training models from scratch with Minnow on human-scale child-directed language enables strong few-shot word learning, comparable to a large language model (LLM) pre-trained on orders of magnitude more data. Furthermore, through discriminative and generative evaluations, we demonstrate that finetuning pre-trained LLMs with Minnow improves their ability to discriminate between new words, identify syntactic categories of new words, and generate reasonable new usages and definitions for new words, based on one or a few in-context examples. These findings highlight the data efficiency of Minnow and its potential to improve language model performance in word learning tasks.
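As a rough illustration of the episode format the abstract describes (a few in-context usages of a word, with the word replaced by a placeholder token, followed by a held-out usage to generate), here is a minimal sketch. The placeholder string `[new-token]`, the separator `<sep>`, and the function name `build_episode` are all assumptions made for this example, not details taken from the paper.

```python
import re

# Hypothetical special tokens; the actual strings used by Minnow are not given here.
PLACEHOLDER = "[new-token]"
SEP = " <sep> "


def build_episode(word, sentences, n_context):
    """Mask `word` in every sentence, then split into context and targets.

    Returns a prompt made of the first `n_context` masked sentences and
    the remaining masked sentences as generation targets.
    """
    # Whole-word, case-insensitive match so "ski" does not match "skiing".
    pattern = re.compile(rf"\b{re.escape(word)}\b", flags=re.IGNORECASE)
    masked = [pattern.sub(PLACEHOLDER, s) for s in sentences]
    context, targets = masked[:n_context], masked[n_context:]
    prompt = SEP.join(context) + SEP
    return prompt, targets


prompt, targets = build_episode(
    "ski",
    [
        "I like to ski in winter.",
        "They ski every weekend.",
        "Can you ski downhill?",
    ],
    n_context=2,
)
print(prompt)
print(targets)
```

Under this sketch, a model would be trained to continue `prompt` with each entry in `targets`, and the same procedure would be repeated over many different words so that the placeholder carries no fixed meaning across episodes.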

Finding structure in logographic writing with library learning

May 11, 2024

Abstract: One hallmark of human language is its combinatoriality -- reusing a relatively small inventory of building blocks to create a far larger inventory of increasingly complex structures. In this paper, we explore the idea that combinatoriality in language reflects a human inductive bias toward representational efficiency in symbol systems. We develop a computational framework for discovering structure in a writing system. Built on top of state-of-the-art library learning and program synthesis techniques, our computational framework discovers known linguistic structures in the Chinese writing system and reveals how the system evolves towards simplification under pressures for representational efficiency. We demonstrate how a library learning approach, utilizing learned abstractions and compression, may help reveal the fundamental computational principles that underlie the creation of combinatorial structures in human cognition, and offer broader insights into the evolution of efficient communication systems.

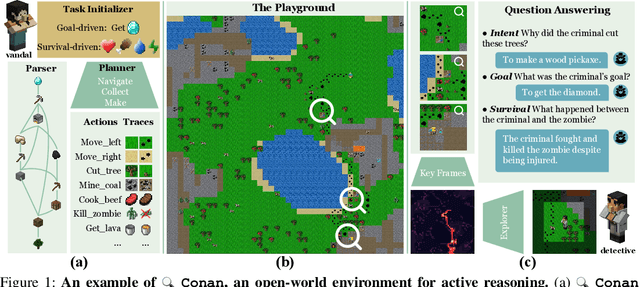

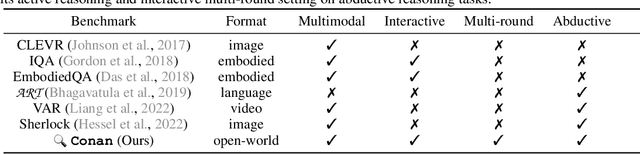

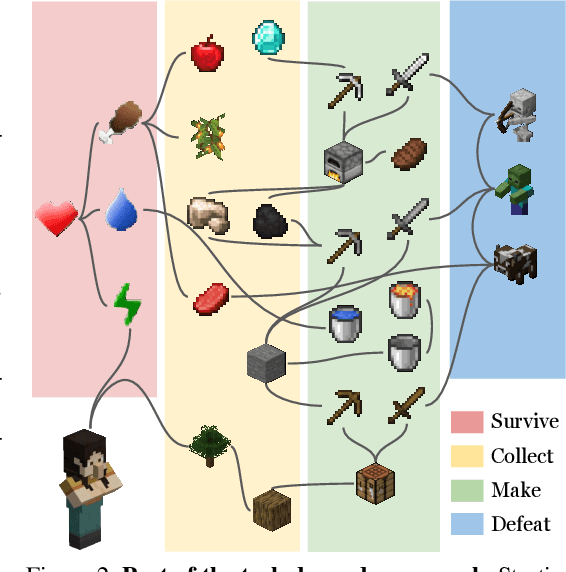

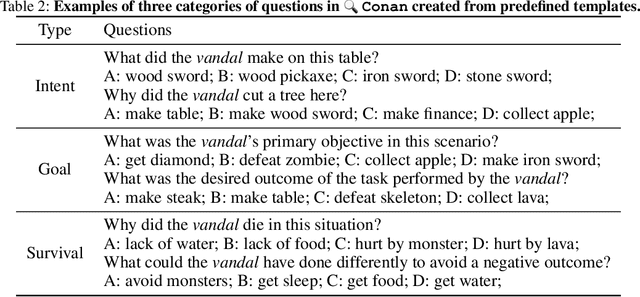

Active Reasoning in an Open-World Environment

Nov 03, 2023

Abstract: Recent advances in vision-language learning have achieved notable success on complete-information question-answering datasets through the integration of extensive world knowledge. Yet, most models operate passively, responding to questions based on pre-stored knowledge. In stark contrast, humans possess the ability to actively explore, accumulate, and reason using both newfound and existing information to tackle incomplete-information questions. In response to this gap, we introduce Conan, an interactive open-world environment devised for the assessment of active reasoning. Conan facilitates active exploration and promotes multi-round abductive inference, reminiscent of rich, open-world settings like Minecraft. Diverging from previous works that lean primarily on single-round deduction via instruction following, Conan compels agents to actively interact with their surroundings, amalgamating new evidence with prior knowledge to elucidate events from incomplete observations. Our analysis on Conan underscores the shortcomings of contemporary state-of-the-art models in active exploration and understanding complex scenarios. Additionally, we explore Abduction from Deduction, where agents harness Bayesian rules to recast the challenge of abduction as a deductive process. Through Conan, we aim to galvanize advancements in active reasoning and set the stage for the next generation of artificial intelligence agents adept at dynamically engaging in environments.
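The idea of recasting abduction as deduction through Bayes' rule can be illustrated with a small sketch: given a prior over candidate explanations and a forward (deductive) model of how likely each explanation is to produce an observation, the posterior over explanations follows from P(h | o) ∝ P(o | h) P(h). The hypothesis names, observation label, and probabilities below are invented for illustration and do not come from the paper.

```python
def abduce(priors, likelihoods, observation):
    """Return the posterior over hypotheses given one observation.

    priors:      dict mapping hypothesis -> P(h)
    likelihoods: dict mapping hypothesis -> dict of observation -> P(o | h)
    """
    # Unnormalized posterior: P(o | h) * P(h) for each hypothesis.
    unnorm = {
        h: priors[h] * likelihoods[h].get(observation, 0.0) for h in priors
    }
    total = sum(unnorm.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under all hypotheses")
    return {h: p / total for h, p in unnorm.items()}


# Hypothetical example: which past event best explains a missing item?
priors = {"crafted_torch": 0.5, "mined_coal": 0.5}
likelihoods = {
    "crafted_torch": {"coal_missing": 0.8},
    "mined_coal": {"coal_missing": 0.2},
}
posterior = abduce(priors, likelihoods, "coal_missing")
best = max(posterior, key=posterior.get)
print(best, posterior)
```

The forward model `likelihoods` plays the deductive role (from cause to expected trace), and the abductive answer is simply the hypothesis with the highest posterior.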

MEWL: Few-shot multimodal word learning with referential uncertainty

Jun 01, 2023

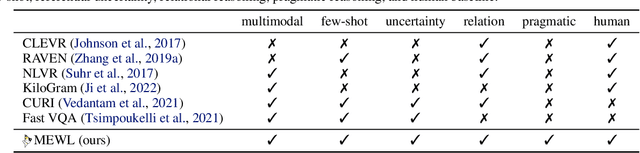

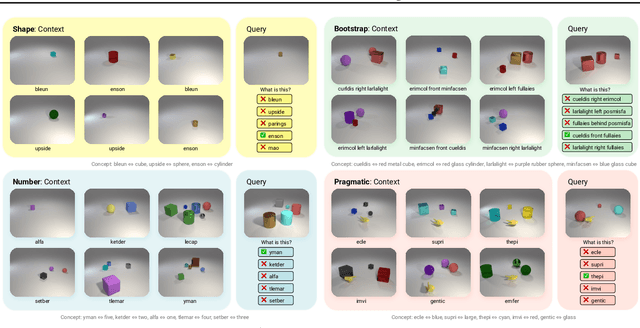

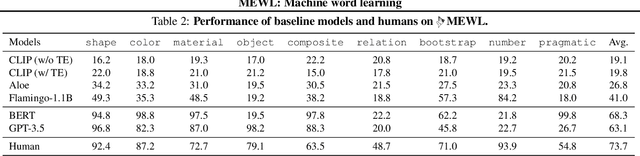

Abstract: Without explicit feedback, humans can rapidly learn the meaning of words. Children can acquire a new word after just a few passive exposures, a process known as fast mapping. This word learning capability is believed to be the most fundamental building block of multimodal understanding and reasoning. Despite recent advancements in multimodal learning, a systematic and rigorous evaluation is still missing for human-like word learning in machines. To fill in this gap, we introduce the MachinE Word Learning (MEWL) benchmark to assess how machines learn word meaning in grounded visual scenes. MEWL covers humans' core cognitive toolkits in word learning: cross-situational reasoning, bootstrapping, and pragmatic learning. Specifically, MEWL is a few-shot benchmark suite consisting of nine tasks for probing various word learning capabilities. These tasks are carefully designed to be aligned with children's core abilities in word learning and echo the theories in the developmental literature. By evaluating multimodal and unimodal agents' performance with a comparative analysis of human performance, we notice a sharp divergence in human and machine word learning. We further discuss these differences between humans and machines and call for human-like few-shot word learning in machines.

EST: Evaluating Scientific Thinking in Artificial Agents

Jun 18, 2022

Abstract: Theoretical ideas and empirical research have shown us a seemingly surprising result: children, even very young toddlers, demonstrate learning and thinking in a strikingly similar manner to scientific reasoning in formal research. Encountering a novel phenomenon, children make hypotheses against data, conduct causal inference from observation, test their theory via experimentation, and correct the proposition if inconsistency arises. Rounds of such processes continue until the underlying mechanism is found. Towards building machines that can learn and think like people, one natural question for us to ask is whether the intelligence we achieve today manages to perform such a scientific thinking process, and if so, at what level. In this work, we devise the EST environment for evaluating the scientific thinking ability in artificial agents. Motivated by the stream of research on causal discovery, we build our interactive EST environment based on Blicket detection. Specifically, in each episode of EST, an agent is presented with novel observations and asked to figure out all objects' Blicketness. At each time step, the agent proposes new experiments to validate its hypothesis and updates its current belief. By evaluating Reinforcement Learning (RL) agents on both symbolic and visual versions of this task, we notice clear failure of today's learning methods in reaching a level of intelligence comparable to humans. Such inefficacy of learning in scientific thinking calls for future research in building humanlike intelligence.
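To make the propose-experiment, update-belief loop concrete, here is a minimal symbolic sketch of Blicket detection by hypothesis elimination. It assumes a simple disjunctive rule (the detector lights up if at least one object placed on it is a Blicket); that rule, the function name, and the trial encoding are assumptions for this example and are not the EST environment's actual interface or the RL agents evaluated in the paper.

```python
from itertools import product


def consistent_hypotheses(n_objects, trials):
    """List every Blicket assignment consistent with the observed trials.

    trials: list of (placed, lit) pairs, where `placed` is a set of object
    indices put on the detector and `lit` is whether it activated.
    Assumes a disjunctive detector: lit iff any placed object is a Blicket.
    """
    keep = []
    for h in product([False, True], repeat=n_objects):
        # Keep h only if it predicts the detector outcome of every trial.
        if all(any(h[i] for i in placed) == lit for placed, lit in trials):
            keep.append(h)
    return keep


# Hypothetical episode with three objects (indices 0, 1, 2).
trials = [({0, 1}, True)]          # initial observation: 0 and 1 together light it
print(len(consistent_hypotheses(3, trials)))

trials.append(({1}, False))        # experiment 1: object 1 alone does nothing
trials.append(({2}, False))        # experiment 2: object 2 alone does nothing
print(consistent_hypotheses(3, trials))
```

Each added trial shrinks the set of surviving hypotheses, which mirrors the belief update described in the abstract; an agent would pick the next experiment to shrink that set as quickly as possible.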
