Ghouthi Boukli Hacene

IMT Atlantique, Brest, France, MILA, Montreal, Canada

LLM meets Vision-Language Models for Zero-Shot One-Class Classification

Apr 02, 2024

Abstract:We consider the problem of zero-shot one-class visual classification. In this setting, only the label of the target class is available, and the goal is to discriminate between positive and negative query samples without requiring any validation example from the target task. We propose a two-step solution that first queries large language models for visually confusing objects and then relies on vision-language pre-trained models (e.g., CLIP) to perform classification. By adapting large-scale vision benchmarks, we demonstrate the ability of the proposed method to outperform adapted off-the-shelf alternatives in this setting. Namely, we propose a realistic benchmark where negative query samples are drawn from the same original dataset as positive ones, including a granularity-controlled version of iNaturalist, where negative samples are at a fixed distance in the taxonomy tree from the positive ones. Our work shows that it is possible to discriminate between a single category and other semantically related ones using only its label.
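The two-step idea in this abstract can be illustrated with a minimal sketch. It assumes the embeddings have already been produced by a vision-language model and that the confusing labels came from a language model. The decision rule (accept when the target label scores higher than every confusing label), the function names and the toy vectors are all illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def l2_normalize(x):
    """Scale vectors to unit length so dot products are cosine similarities."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def one_class_predict(image_emb, target_text_emb, confusing_text_embs):
    """Hypothetical rule: positive if the image is closer to the target label
    than to every confusing label in the shared embedding space."""
    img = l2_normalize(image_emb)
    target = l2_normalize(target_text_emb)
    negatives = l2_normalize(confusing_text_embs)
    target_score = float(img @ target)
    negative_scores = negatives @ img
    return target_score > float(negative_scores.max())

# Toy 3-d embeddings standing in for real model outputs (assumed values).
target = np.array([1.0, 0.0, 0.0])
confusers = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
positive_query = np.array([0.9, 0.1, 0.0])
negative_query = np.array([0.1, 0.9, 0.0])

print(one_class_predict(positive_query, target, confusers))
print(one_class_predict(negative_query, target, confusers))
```

Comparing against confusing labels removes the need for a tuned similarity threshold, which fits a setting where no validation example is available.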

SKILL: Similarity-aware Knowledge distILLation for Speech Self-Supervised Learning

Feb 26, 2024

Abstract:Self-supervised learning (SSL) has achieved remarkable success across various speech-processing tasks. To enhance its efficiency, previous works often leverage the use of compression techniques. A notable recent attempt is DPHuBERT, which applies joint knowledge distillation (KD) and structured pruning to learn a significantly smaller SSL model. In this paper, we contribute to this research domain by introducing SKILL, a novel method that conducts distillation across groups of layers instead of distilling individual arbitrarily selected layers within the teacher network. The identification of the layers to distill is achieved through a hierarchical clustering procedure applied to layer similarity measures. Extensive experiments demonstrate that our distilled version of WavLM Base+ not only outperforms DPHuBERT but also achieves state-of-the-art results in the 30M parameters model class across several SUPERB tasks.
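As a rough illustration of grouping teacher layers by similarity, the sketch below runs a plain average-linkage agglomerative clustering on a layer-similarity matrix. The linkage choice, the function name `cluster_layers` and the toy similarity values are assumptions; the abstract does not specify which similarity measure or linkage is used.

```python
import numpy as np

def cluster_layers(similarity, n_groups):
    """Merge the two most similar clusters (average linkage) until
    n_groups clusters of layer indices remain."""
    sim = np.asarray(similarity, dtype=float)
    clusters = [[i] for i in range(sim.shape[0])]
    while len(clusters) > n_groups:
        best_pair, best_score = None, -np.inf
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Mean pairwise similarity between the two candidate clusters.
                score = sim[np.ix_(clusters[a], clusters[b])].mean()
                if score > best_score:
                    best_pair, best_score = (a, b), score
        a, b = best_pair
        clusters[a] = sorted(clusters[a] + clusters[b])
        del clusters[b]
    return sorted(clusters)

# Toy similarity between four teacher layers (assumed values).
layer_similarity = np.array([
    [1.0, 0.9, 0.1, 0.1],
    [0.9, 1.0, 0.1, 0.1],
    [0.1, 0.1, 1.0, 0.8],
    [0.1, 0.1, 0.8, 1.0],
])
groups = cluster_layers(layer_similarity, n_groups=2)
print(groups)
```

Each resulting group could then serve as one distillation target, rather than picking individual layers by hand.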

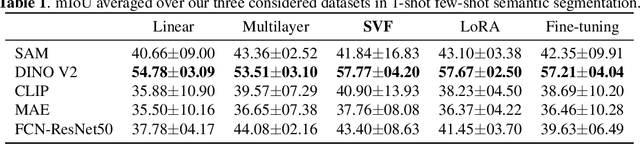

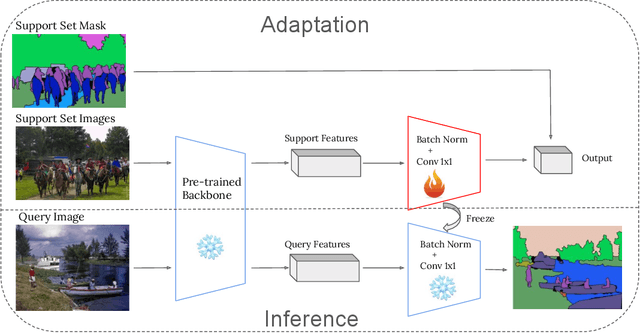

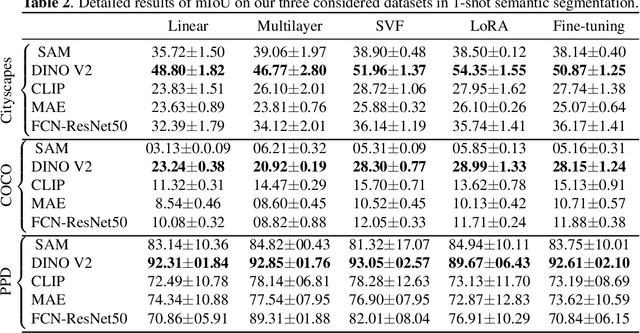

A Novel Benchmark for Few-Shot Semantic Segmentation in the Era of Foundation Models

Jan 20, 2024

Abstract:In recent years, the rapid evolution of computer vision has seen the emergence of various vision foundation models, each tailored to specific data types and tasks. While large language models often share a common pretext task, the diversity in vision foundation models arises from their varying training objectives. In this study, we delve into the quest for identifying the most effective vision foundation models for few-shot semantic segmentation, a critical task in computer vision. Specifically, we conduct a comprehensive comparative analysis of four prominent foundation models (DINO V2, Segment Anything, CLIP, and Masked AutoEncoders) alongside a straightforward ResNet50 pre-trained on the COCO dataset. Our investigation focuses on their adaptability to new semantic segmentation tasks, leveraging only a limited number of segmented images. Our experimental findings reveal that DINO V2 consistently outperforms the other considered foundation models across a diverse range of datasets and adaptation methods. This outcome underscores DINO V2's superior capability to adapt to semantic segmentation tasks compared to its counterparts. Furthermore, our observations indicate that various adapter methods exhibit similar performance, emphasizing the paramount importance of selecting a robust feature extractor over the intricacies of the adaptation technique itself. This insight sheds light on the critical role of feature extraction in the context of few-shot semantic segmentation. This research not only contributes valuable insights into the comparative performance of vision foundation models in the realm of few-shot semantic segmentation but also highlights the significance of a robust feature extractor in this domain.

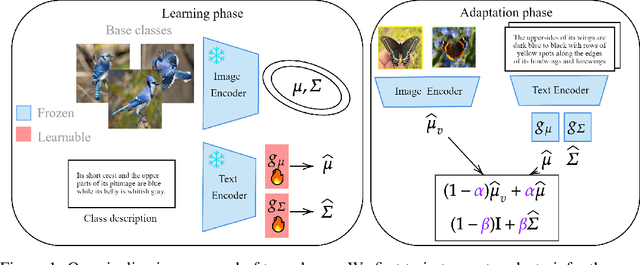

Inferring Latent Class Statistics from Text for Robust Visual Few-Shot Learning

Nov 24, 2023

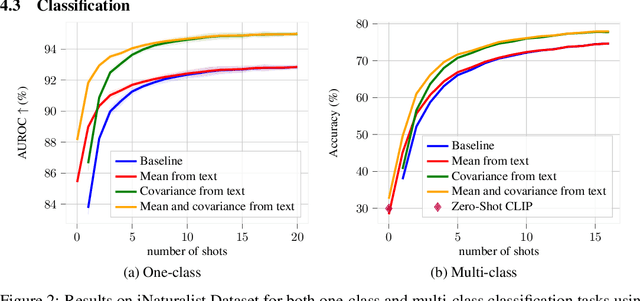

Abstract:In the realm of few-shot learning, foundation models like CLIP have proven effective but exhibit limitations in cross-domain robustness, especially in few-shot settings. Recent works add text as an extra modality to enhance the performance of these models. Most of these approaches treat text as an auxiliary modality without fully exploring its potential to elucidate the underlying class visual features distribution. In this paper, we present a novel approach that leverages text-derived statistics to predict the mean and covariance of the visual feature distribution for each class. This predictive framework enriches the latent space, yielding more robust and generalizable few-shot learning models. We demonstrate the efficacy of incorporating both mean and covariance statistics in improving few-shot classification performance across various datasets. Our method shows that we can use text to predict the mean and covariance of the distribution, offering promising improvements in few-shot learning scenarios.

A Statistical Model for Predicting Generalization in Few-Shot Classification

Dec 13, 2022

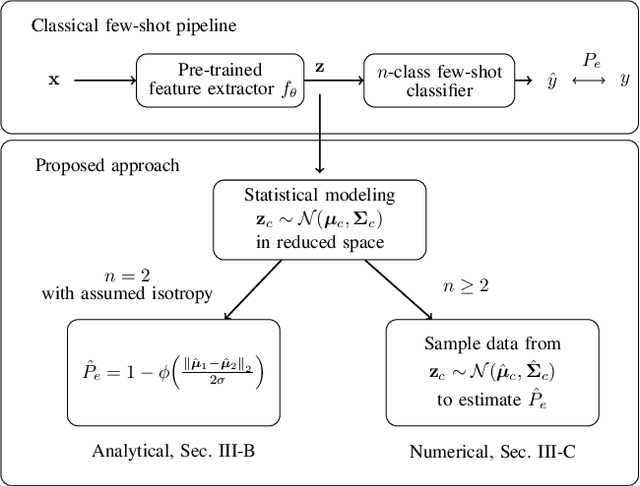

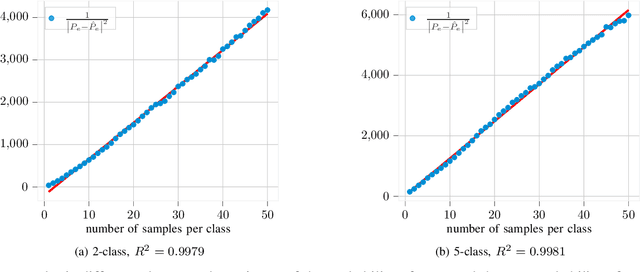

Abstract:The estimation of the generalization error of classifiers often relies on a validation set. Such a set is hardly available in few-shot learning scenarios, a highly disregarded shortcoming in the field. In these scenarios, it is common to rely on features extracted from pre-trained neural networks combined with distance-based classifiers such as nearest class mean. In this work, we introduce a Gaussian model of the feature distribution. By estimating the parameters of this model, we are able to predict the generalization error on new classification tasks with few samples. We observe that accurate distance estimates between class-conditional densities are the key to accurate estimates of the generalization performance. Therefore, we propose an unbiased estimator for these distances and integrate it in our numerical analysis. We show that our approach outperforms alternatives such as the leave-one-out cross-validation strategy in few-shot settings.
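A simplified version of this reasoning can be sketched for two classes modeled as isotropic Gaussians with a shared, known variance. Under that assumption, the squared distance between empirical means overestimates the true squared distance by `dim * sigma2 * (1/n1 + 1/n2)`, and a nearest-class-mean rule using the true means has error `Phi(-d / (2 * sigma))`. The function names and the isotropic, known-variance setting are assumptions for illustration; the paper's estimator may differ.

```python
import math
import numpy as np

def unbiased_sq_distance(x1, x2, sigma2):
    """Bias-corrected squared distance between two class means,
    assuming isotropic Gaussian features with known variance sigma2."""
    n1, dim = x1.shape
    n2 = x2.shape[0]
    naive = float(np.sum((x1.mean(axis=0) - x2.mean(axis=0)) ** 2))
    return naive - dim * sigma2 * (1.0 / n1 + 1.0 / n2)

def predicted_ncm_error(sq_distance, sigma2):
    """Error of a two-class nearest-class-mean rule with true means:
    Phi(-d / (2 * sigma)), written with the complementary error function."""
    d = math.sqrt(max(sq_distance, 0.0))
    return 0.5 * math.erfc(d / (2.0 * math.sqrt(2.0 * sigma2)))

# Two shots per class in a 2-d feature space (toy values).
class_a = np.array([[0.0, 0.0], [2.0, 0.0]])
class_b = np.array([[4.0, 0.0], [6.0, 0.0]])
sq_dist = unbiased_sq_distance(class_a, class_b, sigma2=1.0)
print(sq_dist, predicted_ncm_error(sq_dist, sigma2=1.0))
```

The correction term matters most when shots are few, since the bias grows as `1/n1 + 1/n2`.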

Quantization and Deployment of Deep Neural Networks on Microcontrollers

May 27, 2021

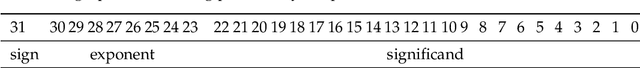

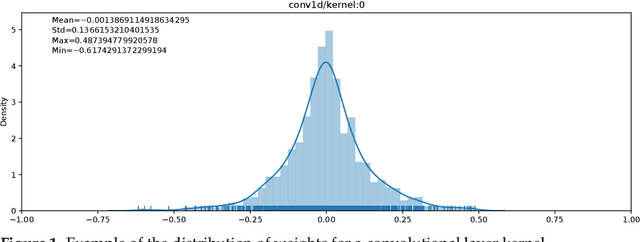

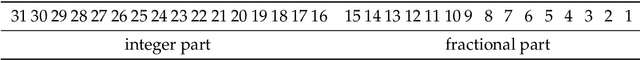

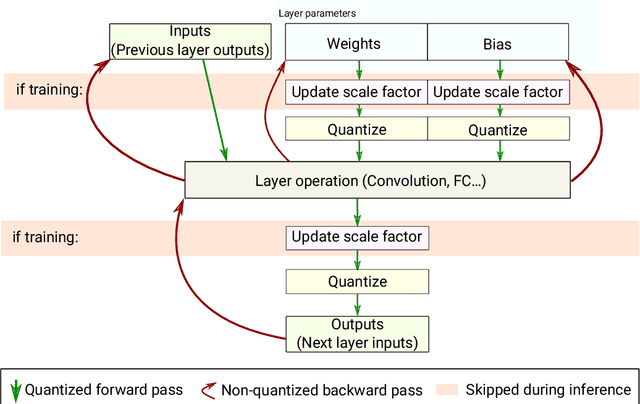

Abstract:Embedding Artificial Intelligence onto low-power devices is a challenging task that has been partly overcome with recent advances in machine learning and hardware design. Presently, deep neural networks can be deployed on embedded targets to perform different tasks such as speech recognition, object detection or Human Activity Recognition. However, there is still room for optimization of deep neural networks onto embedded devices. These optimizations mainly address power consumption, memory and real-time constraints, but also an easier deployment at the edge. Moreover, there is still a need for a better understanding of what can be achieved for different use cases. This work focuses on quantization and deployment of deep neural networks onto low-power 32-bit microcontrollers. The quantization methods, relevant in the context of an embedded execution onto a microcontroller, are first outlined. Then, a new framework for end-to-end deep neural networks training, quantization and deployment is presented. This framework, called MicroAI, is designed as an alternative to existing inference engines (TensorFlow Lite for Microcontrollers and STM32Cube.AI). Our framework can indeed be easily adjusted and/or extended for specific use cases. Execution using single precision 32-bit floating-point as well as fixed-point on 8- and 16-bit integers are supported. The proposed quantization method is evaluated with three different datasets (UCI-HAR, Spoken MNIST and GTSRB). Finally, a comparison study between MicroAI and both existing embedded inference engines is provided in terms of memory and power efficiency. On-device evaluation is done using ARM Cortex-M4F-based microcontrollers (Ambiq Apollo3 and STM32L452RE).
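To make the fixed-point setting concrete, here is a generic sketch of signed fixed-point quantization with a power-of-two scale, storing values in 8- or 16-bit integers. It is not MicroAI's code: the function names and the choice of `frac_bits` are illustrative assumptions.

```python
import numpy as np

def quantize_fixed_point(x, total_bits=8, frac_bits=5):
    """Round to a signed fixed-point grid with frac_bits fractional bits,
    saturating at the range of a total_bits-wide integer."""
    scale = 2 ** frac_bits
    qmin = -(2 ** (total_bits - 1))
    qmax = 2 ** (total_bits - 1) - 1
    q = np.clip(np.round(np.asarray(x, dtype=float) * scale), qmin, qmax)
    return q.astype(np.int8 if total_bits <= 8 else np.int16)

def dequantize_fixed_point(q, frac_bits=5):
    """Map stored integers back to real values."""
    return q.astype(float) / (2 ** frac_bits)

weights = np.array([0.5, -1.25, 3.0, 10.0])  # 10.0 exceeds the 8-bit range
q8 = quantize_fixed_point(weights, total_bits=8, frac_bits=5)
q16 = quantize_fixed_point(weights, total_bits=16, frac_bits=5)
print(q8, dequantize_fixed_point(q8))
print(q16, dequantize_fixed_point(q16))
```

With 8 bits the last weight saturates, while 16 bits keeps it exactly, which shows the range versus memory trade-off between the two integer widths.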

* 36 pages, 14 figures. Published in MDPI Sensors 2021, special issue "Embedded Artificial Intelligence (AI) for Smart Sensing and IoT Applications": https://www.mdpi.com/1424-8220/21/9/2984

DNN Quantization with Attention

Mar 24, 2021

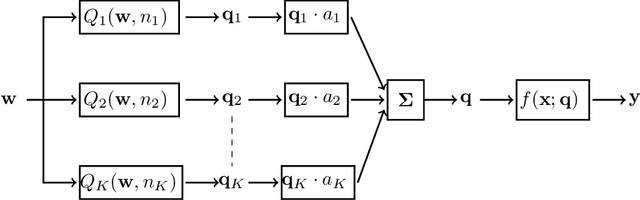

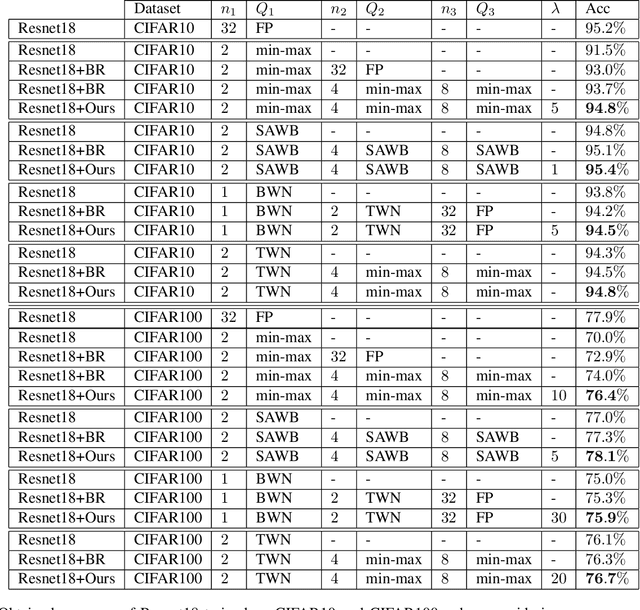

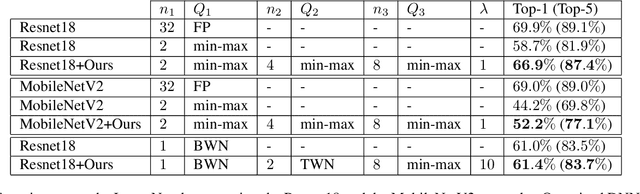

Abstract:Low-bit quantization of network weights and activations can drastically reduce the memory footprint, complexity, energy consumption and latency of Deep Neural Networks (DNNs). However, low-bit quantization can also cause a considerable drop in accuracy, in particular when we apply it to complex learning tasks or lightweight DNN architectures. In this paper, we propose a training procedure that relaxes the low-bit quantization. We call this procedure DNN Quantization with Attention (DQA). The relaxation is achieved by using a learnable linear combination of high, medium and low-bit quantizations. Our learning procedure converges step by step to a low-bit quantization using an attention mechanism with temperature scheduling. In experiments, our approach outperforms other low-bit quantization techniques on various object recognition benchmarks such as CIFAR10, CIFAR100 and ImageNet ILSVRC 2012, achieves almost the same accuracy as a full precision DNN, and considerably reduces the accuracy drop when quantizing lightweight DNN architectures.
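The relaxation described here can be sketched as a softmax-weighted mix of several quantized copies of the same weights, where lowering the temperature sharpens the mix toward a single branch. In this sketch the attention logits are fixed by hand to favor the low-bit branch, whereas the abstract describes them as learnable. The quantizer, the bit widths (8, 4, 2) and all names are assumptions.

```python
import numpy as np

def uniform_quantize(w, bits):
    """Symmetric uniform quantization of values clipped to [-1, 1]."""
    levels = 2 ** (bits - 1) - 1
    return np.round(np.clip(w, -1.0, 1.0) * levels) / levels

def softmax(logits, temperature):
    """Temperature-scaled softmax; small temperatures approach one-hot."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def relaxed_quantize(w, logits, temperature, bit_widths=(8, 4, 2)):
    """Attention-weighted combination of high, medium and low-bit branches."""
    attn = softmax(logits, temperature)
    branches = np.stack([uniform_quantize(w, b) for b in bit_widths])
    return np.tensordot(attn, branches, axes=1), attn

w = np.array([0.3, -0.7])
logits = [0.0, 0.0, 1.0]  # hand-set; favors the 2-bit branch
for temperature in (1.0, 0.1, 0.01):  # a simple decreasing schedule
    mixed, attn = relaxed_quantize(w, logits, temperature)
    print(temperature, attn, mixed)
```

At high temperature all three branches contribute, and as the temperature decreases the output approaches the pure low-bit quantization.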

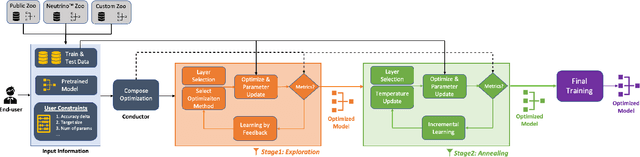

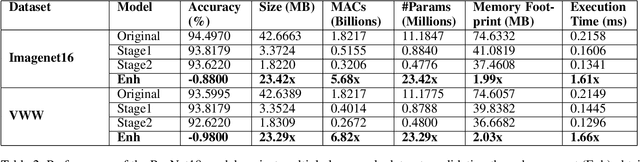

Deeplite Neutrino: An End-to-End Framework for Constrained Deep Learning Model Optimization

Jan 13, 2021

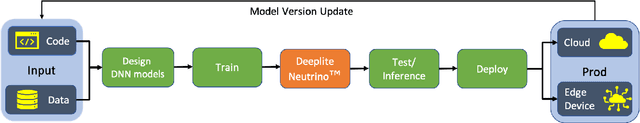

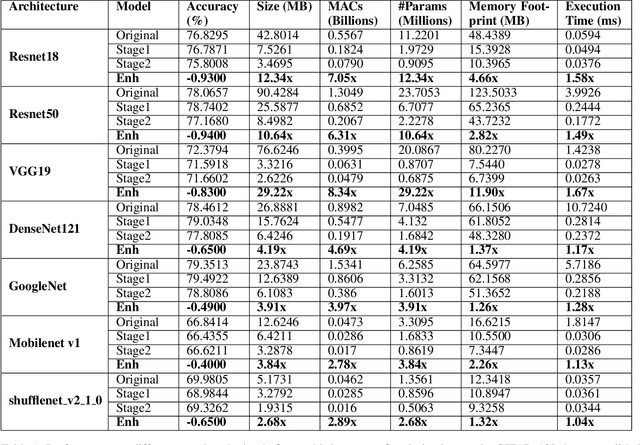

Abstract:Designing deep learning-based solutions is becoming a race for training deeper models with a greater number of layers. While a large-size deeper model could provide competitive accuracy, it creates a lot of logistical challenges and unreasonable resource requirements during development and deployment. This has been one of the key reasons for deep learning models not being excessively used in various production environments, especially in edge devices. There is an immediate requirement for optimizing and compressing these deep learning models, to enable on-device intelligence. In this research, we introduce a black-box framework, Deeplite Neutrino for production-ready optimization of deep learning models. The framework provides an easy mechanism for the end-users to provide constraints such as a tolerable drop in accuracy or target size of the optimized models, to guide the whole optimization process. The framework is easy to include in an existing production pipeline and is available as a Python Package, supporting PyTorch and Tensorflow libraries. The optimization performance of the framework is shown across multiple benchmark datasets and popular deep learning models. Further, the framework is currently used in production and the results and testimonials from several clients are summarized.

DecisiveNets: Training Deep Associative Memories to Solve Complex Machine Learning Problems

Dec 02, 2020

Abstract:Learning deep representations to solve complex machine learning tasks has become the prominent trend in the past few years. Indeed, Deep Neural Networks are now the gold standard in domains as various as computer vision, natural language processing or even playing combinatorial games. However, problematic limitations are hidden behind this surprising universal capability. Among other things, explainability of the decisions is a major concern, especially since deep neural networks are made up of a very large number of trainable parameters. Moreover, computational complexity can quickly become a problem, especially in contexts constrained by real time or limited resources. Therefore, understanding how information is stored and the impact this storage can have on the system remains a major and open issue. In this chapter, we introduce a method to transform deep neural network models into deep associative memories, with simpler, more explicable and less expensive operations. We show through experiments that these transformations can be done without penalty on predictive performance. The resulting deep associative memories are excellent candidates for artificial intelligence that is easier to theorize and manipulate.

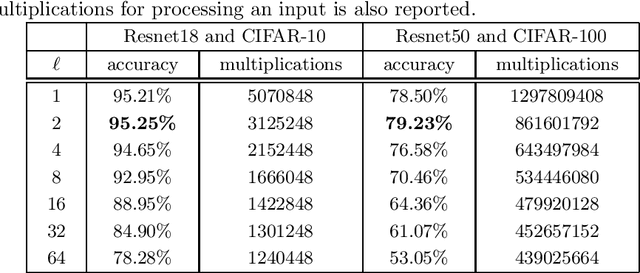

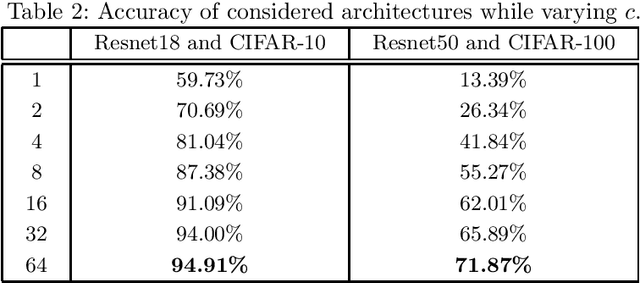

ThriftyNets: Convolutional Neural Networks with Tiny Parameter Budget

Jul 20, 2020

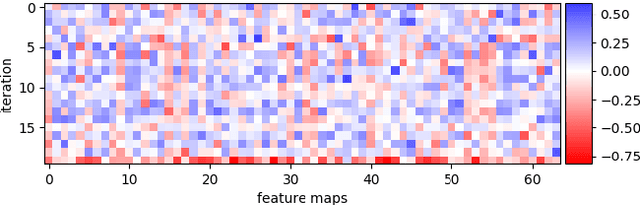

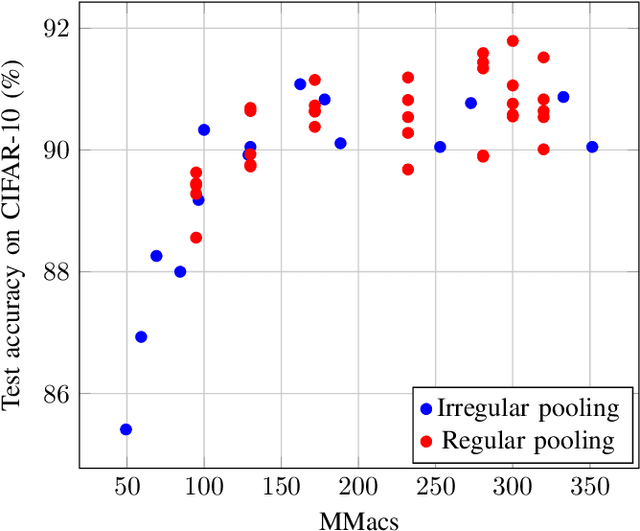

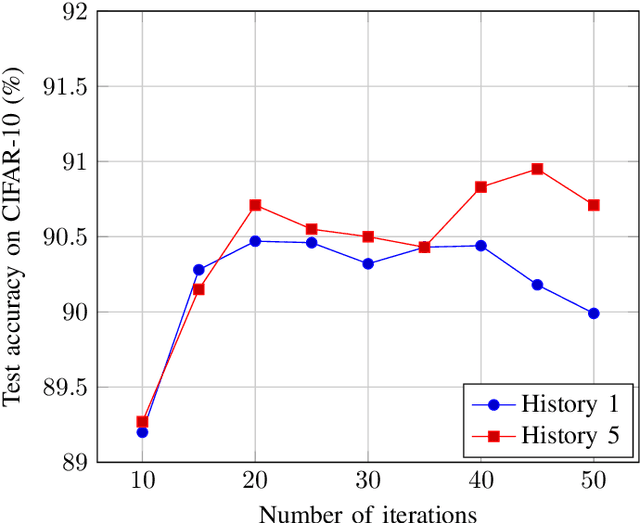

Abstract:Typical deep convolutional architectures present an increasing number of feature maps as we go deeper in the network, whereas spatial resolution of inputs is decreased through downsampling operations. This means that most of the parameters lie in the final layers, while a large portion of the computations are performed by a small fraction of the total parameters in the first layers. In an effort to use every parameter of a network at its maximum, we propose a new convolutional neural network architecture, called ThriftyNet. In ThriftyNet, only one convolutional layer is defined and used recursively, leading to a maximal parameter factorization. In complement, normalization, non-linearities, downsamplings and shortcuts ensure sufficient expressivity of the model. ThriftyNet achieves competitive performance on a tiny parameter budget, exceeding 91% accuracy on CIFAR-10 with less than 40K parameters in total, and 74.3% on CIFAR-100 with less than 600K parameters.
