Alain Pegatoquet

Université Côte d'Azur, CNRS, LEAT, Sophia Antipolis, France

Spiking monocular event based 6D pose estimation for space application

Jan 06, 2025

Abstract: With the growing interest in On-Orbit Servicing (OOS) and Active Debris Removal (ADR) missions, spacecraft pose estimation algorithms are being developed using deep learning to improve the precision of this complex task and find the most efficient solution. With the advances of bio-inspired low-power solutions, such as spiking neural networks and event-based processing and cameras, and recent work applying them to space applications, we propose to investigate the feasibility of a fully event-based solution to improve event-based pose estimation for spacecraft. In this paper, we address the first event-based dataset, SEENIC, with real event frames captured by an event-based camera on a testbed. We show the methods and results of the first event-based solution for this use case, where our small spiking end-to-end network (S2E2) solution achieves interesting results over 21 cm position error and 14 degree rotation error, which is the first step towards fully event-based processing for embedded spacecraft pose estimation.

* 6 pages, 2 figures, 1 table. This paper was presented in the poster session on Thursday 19 September at the SPAICE 2024 conference (17-19 September 2024)

On Reducing Activity with Distillation and Regularization for Energy Efficient Spiking Neural Networks

Jun 26, 2024

Abstract: Interest in spiking neural networks (SNNs) has been growing steadily, promising an energy-efficient alternative to formal neural networks (FNNs), commonly known as artificial neural networks (ANNs). Despite increasing interest, especially for Edge applications, these event-driven neural networks have been more difficult to train than FNNs. To alleviate this problem, a number of innovative methods have been developed to provide performance more or less equivalent to that of FNNs. However, the spiking activity of a network during inference is usually not considered. While SNNs can often reach performance comparable to that of FNNs, this often comes at the cost of increased network activity, which limits their benefit as a more energy-efficient solution. In this paper, we propose to leverage Knowledge Distillation (KD) for SNN training with surrogate gradient descent in order to optimize the trade-off between performance and spiking activity. Then, after understanding why KD led to an increase in sparsity, we also explored Activations Regularization and proposed a novel method with Logits Regularization. These approaches, validated on several datasets, clearly show a reduction in network spiking activity (-26.73% on GSC and -14.32% on CIFAR-10) while preserving accuracy.

Embedded event based object detection with spiking neural network

Jun 25, 2024

Abstract: The complexity of event-based object detection (OD) poses considerable challenges. Spiking Neural Networks (SNNs) show promising results and pave the way for efficient event-based OD. Despite this success, the path to efficient SNNs on embedded devices remains a challenge. This is due to the size of the networks required to accomplish the task and to the limited ability of devices to take advantage of the benefits of SNNs. Even when "edge" devices are considered, they typically use embedded GPUs that consume tens of watts. In response to these challenges, our research introduces an embedded neuromorphic testbench that utilizes the SPiking Low-power Event-based ArchiTecture (SPLEAT) accelerator. Using an extended version of the Qualia framework, we can train, evaluate, quantize, and deploy spiking neural networks on an FPGA implementation of SPLEAT. We used this testbench to load a state-of-the-art SNN solution, estimate the performance loss associated with deploying the network on dedicated hardware, and run real-world event-based OD on neuromorphic hardware specifically designed for low-power spiking neural networks. Remarkably, our embedded spiking solution, which includes a model with 1.08 million parameters, operates efficiently with 490 mJ per prediction.

Neural information coding for efficient spike-based image denoising

May 15, 2023

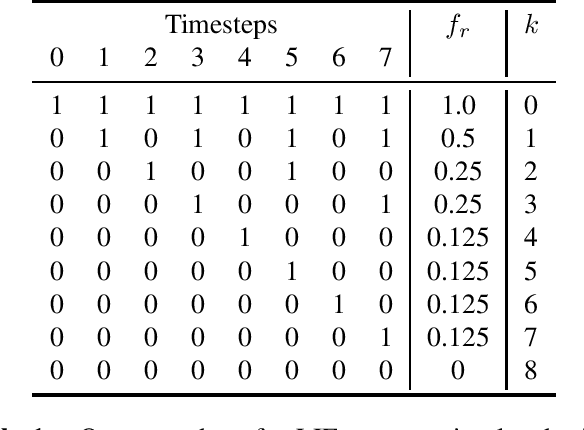

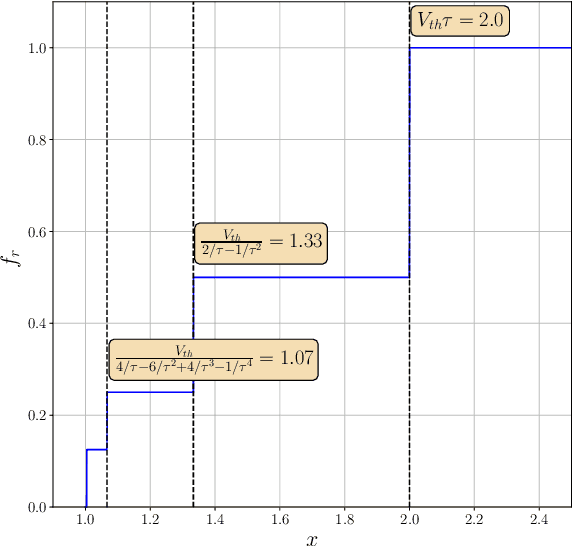

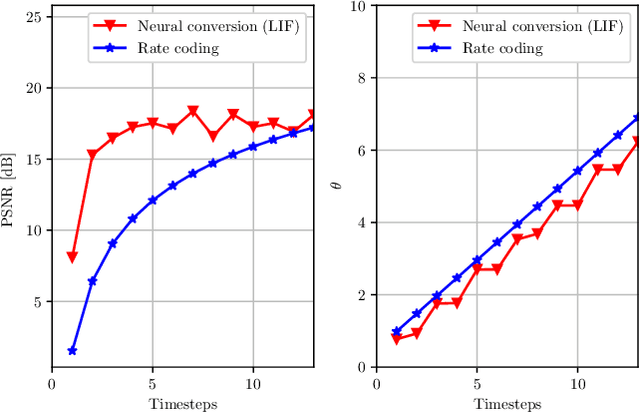

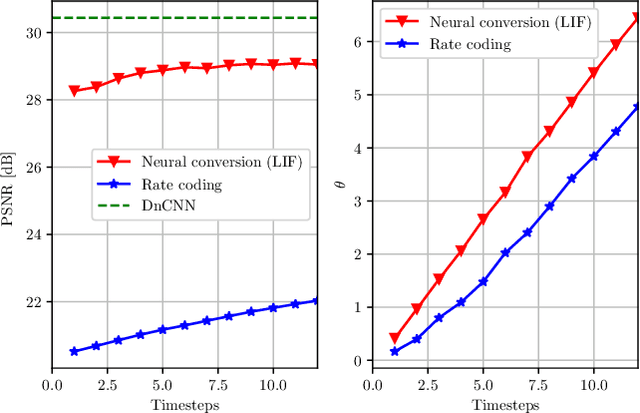

Abstract: In recent years, Deep Convolutional Neural Networks (DCNNs) have surpassed the performance of classical algorithms for image restoration tasks. However, most of these methods are not computationally efficient and are therefore too expensive to be executed on embedded and mobile devices. In this work, we investigate Spiking Neural Networks (SNNs) for Gaussian denoising, with the goal of approaching the performance of conventional DCNNs while reducing the computational load. We propose a formal analysis of the information conversion processing carried out by the Leaky Integrate and Fire (LIF) neurons, and we compare its performance with the classical rate-coding mechanism. The neural coding schemes are then evaluated through experiments in terms of denoising performance and computation efficiency for a state-of-the-art deep convolutional neural network. Our results show that SNNs with LIF neurons can provide competitive denoising performance at a reduced computational cost.

Quantization and Deployment of Deep Neural Networks on Microcontrollers

May 27, 2021

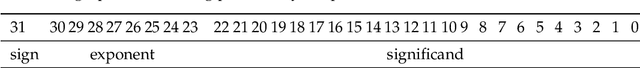

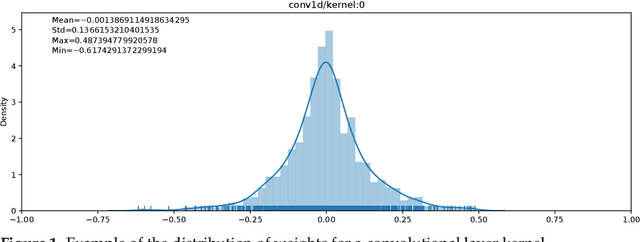

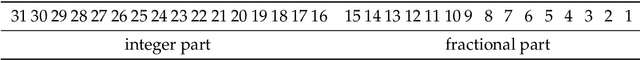

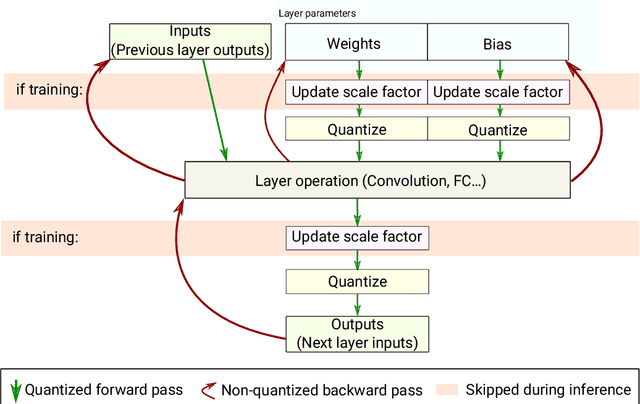

Abstract: Embedding Artificial Intelligence onto low-power devices is a challenging task that has been partly overcome with recent advances in machine learning and hardware design. Presently, deep neural networks can be deployed on embedded targets to perform different tasks such as speech recognition, object detection or Human Activity Recognition. However, there is still room for optimization of deep neural networks on embedded devices. These optimizations mainly address power consumption, memory and real-time constraints, but also easier deployment at the edge. Moreover, there is still a need for a better understanding of what can be achieved for different use cases. This work focuses on quantization and deployment of deep neural networks onto low-power 32-bit microcontrollers. The quantization methods relevant to embedded execution on a microcontroller are first outlined. Then, a new framework for end-to-end deep neural network training, quantization and deployment is presented. This framework, called MicroAI, is designed as an alternative to existing inference engines (TensorFlow Lite for Microcontrollers and STM32Cube.AI). Our framework can be easily adjusted and/or extended for specific use cases. Execution using single-precision 32-bit floating-point as well as fixed-point on 8- and 16-bit integers is supported. The proposed quantization method is evaluated with three different datasets (UCI-HAR, Spoken MNIST and GTSRB). Finally, a comparison study between MicroAI and both existing embedded inference engines is provided in terms of memory and power efficiency. On-device evaluation is done using ARM Cortex-M4F-based microcontrollers (Ambiq Apollo3 and STM32L452RE).

* 36 pages, 14 figures. Published in MDPI Sensors 2021, special issue "Embedded Artificial Intelligence (AI) for Smart Sensing and IoT Applications": https://www.mdpi.com/1424-8220/21/9/2984
