Fulin Tang

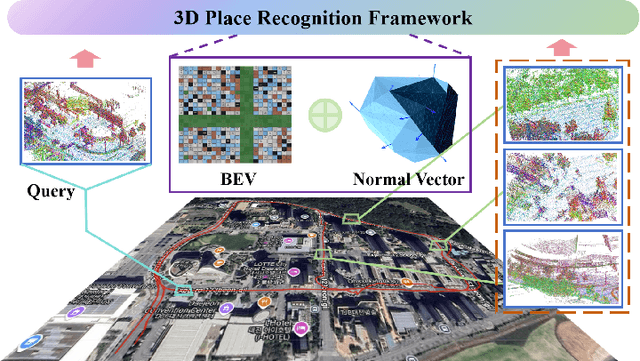

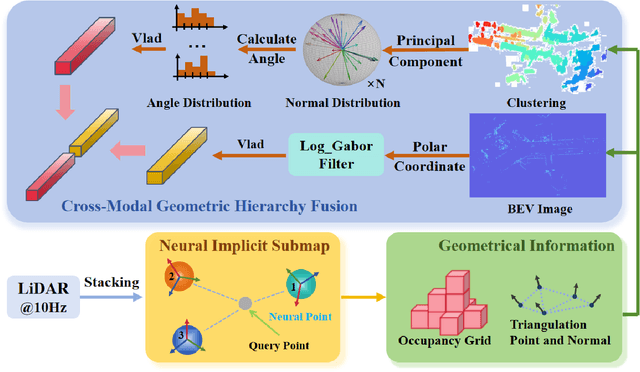

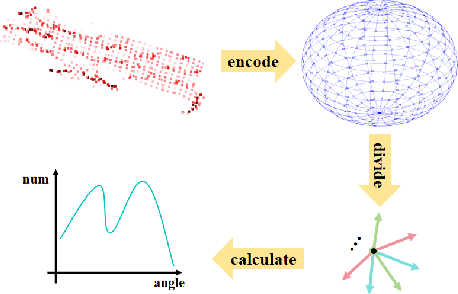

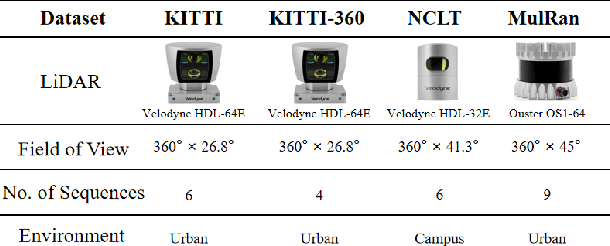

Cross-Modal Geometric Hierarchy Fusion: An Implicit-Submap Driven Framework for Resilient 3D Place Recognition

Jun 17, 2025

Abstract: LiDAR-based place recognition serves as a crucial enabler for long-term autonomy in robotics and autonomous driving systems. Yet prevailing methods that rely on handcrafted feature extraction face two challenges: (1) inconsistent point cloud density, induced by ego-motion dynamics and environmental disturbances during repeated traversals, leads to descriptor instability, and (2) reliance on single-level geometric abstractions, which lack discriminative power in structurally complex scenarios, makes the representation fragile. To address these limitations, we propose a novel framework that redefines 3D place recognition through density-agnostic geometric reasoning. Specifically, we introduce an implicit 3D representation based on elastic points, which is immune to the density of the original scene point cloud and is uniformly distributed. Subsequently, we derive the occupancy grid and normal vector information of the scene from this implicit representation. Finally, with the aid of these two types of information, we obtain descriptors that fuse geometric information from both bird's-eye view (capturing macro-level spatial layouts) and 3D segment (encoding micro-scale surface geometries) perspectives. We conducted extensive experiments on several datasets (KITTI, KITTI-360, MulRan, NCLT) across diverse environments. The experimental results demonstrate that our method achieves state-of-the-art performance. Moreover, our approach strikes an optimal balance between accuracy, runtime, and memory optimization for historical maps, showing excellent resilience and scalability. Our code will be open-sourced in the future.

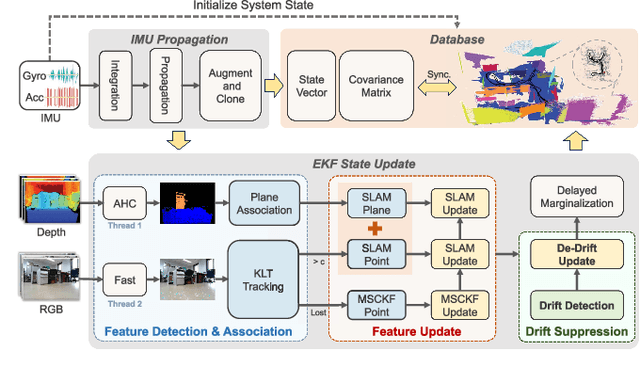

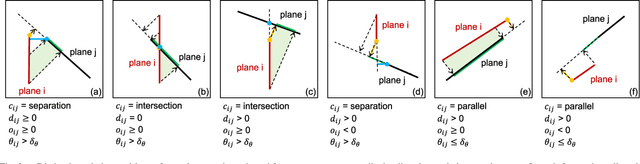

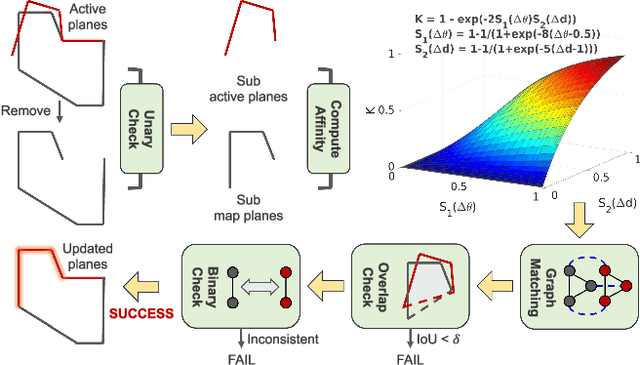

PGD-VIO: An Accurate Plane-Aided Visual-Inertial Odometry with Graph-Based Drift Suppression

Jul 25, 2024

Abstract: Generally, high-level features provide more geometrical information than point features, which can be exploited to further constrain motion. Planes are commonplace in man-made environments, offering an active means to reduce drift, due to their extensive spatial and temporal observability. To make full use of planar information, we propose a novel visual-inertial odometry (VIO) system using an RGBD camera and an inertial measurement unit (IMU), effectively integrating point and plane features in an extended Kalman filter (EKF) framework. Depth information of point features is leveraged to improve the accuracy of point triangulation, while plane features serve as direct observations added into the state vector. Notably, to benefit long-term navigation, a novel graph-based drift detection strategy is proposed to search for overlapping and identical structures in the plane map so that the cumulative drift is subsequently suppressed. The experimental results on two public datasets demonstrate that our system outperforms state-of-the-art methods in localization accuracy while generating a compact and consistent plane map, free of expensive global bundle adjustment and loop closing techniques.

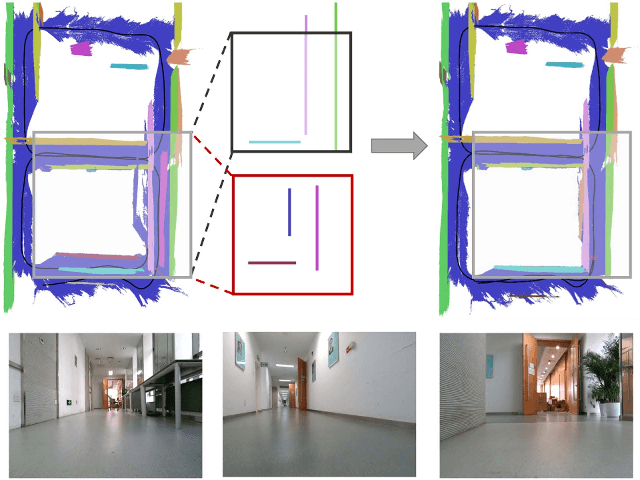

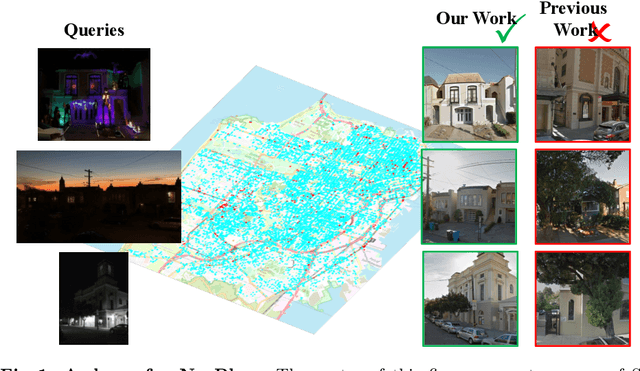

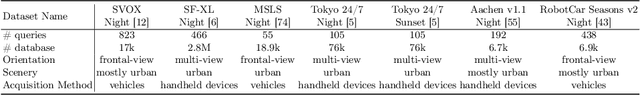

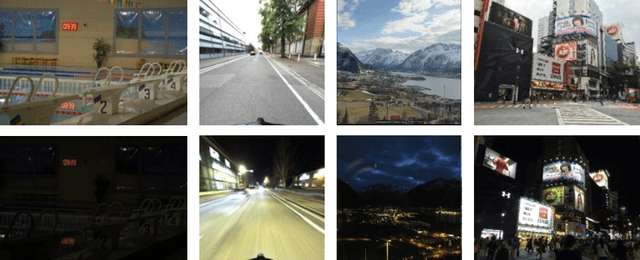

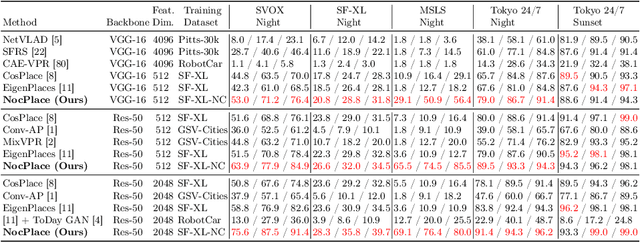

NocPlace: Nocturnal Visual Place Recognition Using Generative and Inherited Knowledge Transfer

Feb 27, 2024

Abstract: Visual Place Recognition (VPR) is crucial in computer vision, aiming to retrieve database images similar to a query image from an extensive collection of known images. However, like many vision-related tasks, learning-based VPR often experiences a decline in performance at night due to the scarcity of nighttime images. Specifically, VPR needs to address the cross-domain problem of night-to-day rather than just the issue of a single nighttime domain. In response to these issues, we present NocPlace, which leverages a generated large-scale, multi-view, nighttime VPR dataset to embed resilience against dazzling lights and extreme darkness in the learned global descriptor. First, we establish a day-night urban scene dataset called NightCities, capturing diverse nighttime scenarios and lighting variations across 60 cities globally. Next, an unpaired image-to-image translation network is trained on this dataset. Using this trained translation network, we process an existing VPR dataset, thereby obtaining its nighttime version. NocPlace is then fine-tuned using night-style images, the original labels, and descriptors inherited from the daytime VPR model. Comprehensive experiments on various nighttime VPR test sets reveal that NocPlace considerably surpasses previous state-of-the-art methods.

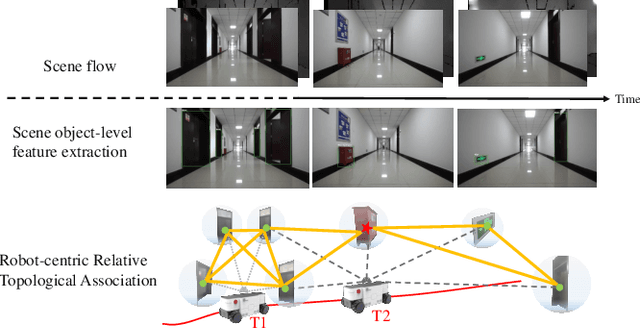

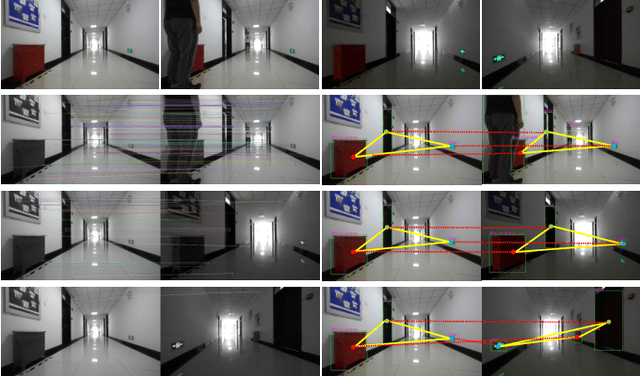

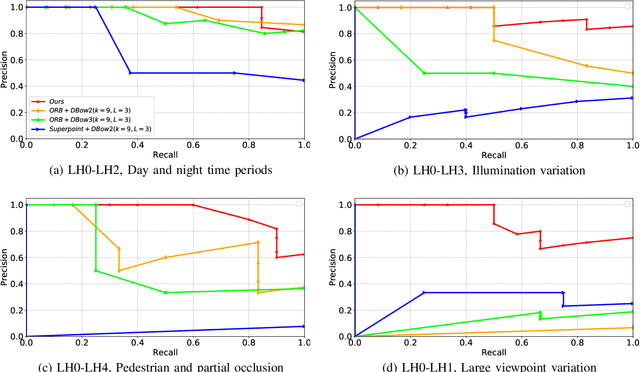

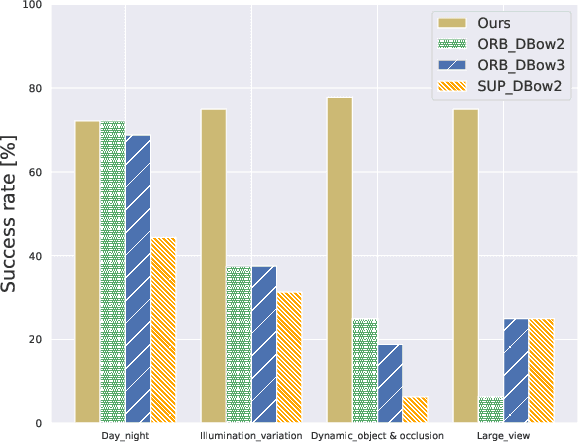

Lightweight Object-level Topological Semantic Mapping and Long-term Global Localization based on Graph Matching

Jan 16, 2022

Abstract: Mapping and localization are two essential tasks for mobile robots in real-world applications. However, large-scale and dynamic scenes challenge the accuracy and robustness of most current mature solutions. This situation becomes even worse when computational resources are limited. In this paper, we present a novel lightweight object-level mapping and localization method with high accuracy and robustness. Unlike previous methods, our method does not need a previously constructed precise geometric map, which greatly relieves the storage burden, especially for large-scale navigation. We use object-level features with both semantic and geometric information to model landmarks in the environment. In particular, a learning topological primitive is first proposed to efficiently obtain and organize the object-level landmarks. On this basis, we use a robot-centric mapping framework to represent the environment as a semantic topology graph while relaxing the burden of maintaining global consistency. In addition, a hierarchical memory management mechanism is introduced to improve the efficiency of online mapping with limited computational resources. Based on the proposed map, robust localization is achieved by constructing a novel local semantic scene graph descriptor and performing multi-constraint graph matching to compare scene similarity. Finally, we test our method on a low-cost embedded platform to demonstrate its advantages. Experimental results in a large-scale, multi-session real-world environment show that the proposed method outperforms the state of the art in terms of lightweight design and robustness.

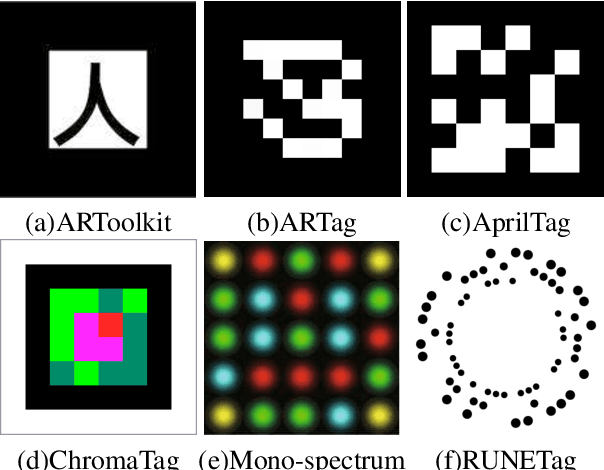

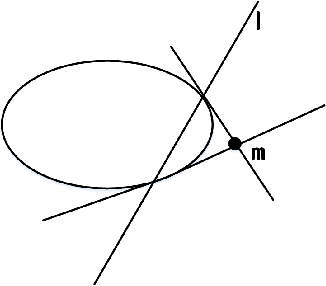

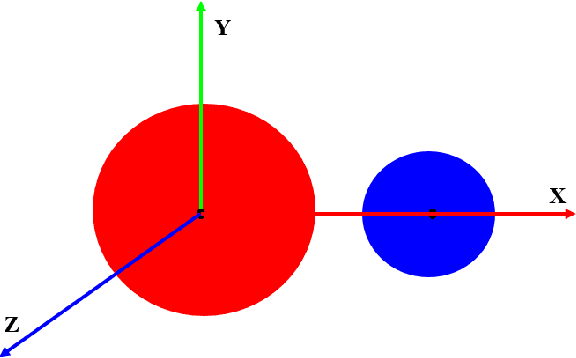

Efficient Circle-Based Camera Pose Tracking Free of PnP

Jul 24, 2019

Abstract: Camera pose tracking attracts much interest from both the academic and industrial communities, and methods based on planar markers are easy to implement. However, most existing methods need to identify multiple points in the marker images and match them to space points. PnP and RANSAC are then used to compute the camera pose. If cameras move fast or are far away from markers, matching is prone to errors, and even RANSAC cannot remove the incorrect matches. As a result, PnP performs poorly. To solve this problem, we design circular markers and, using projective invariance, represent the 6D camera pose analytically in a unified and very concise form from each marker. Afterwards, the pose is further optimized by a proposed nonlinear cost function based on a polar-n-direction geometric distance. The method works from imaged circle edges and requires neither PnP nor RANSAC, making pose tracking robust and accurate. Experimental results show that the proposed method outperforms the state of the art in terms of noise, blur, and distance from camera to marker. At the same time, it can still run at about 100 FPS on a consumer computer with only a CPU.

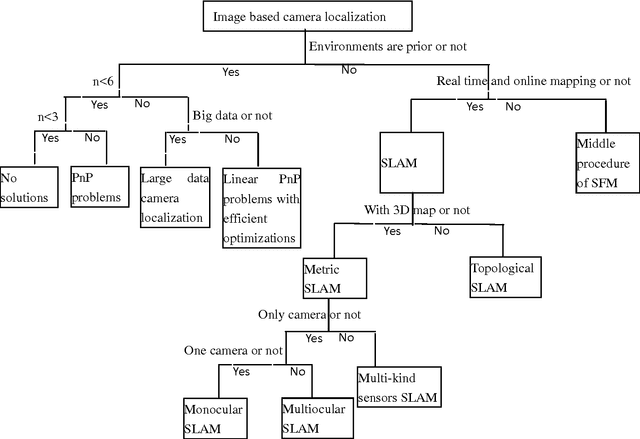

Image Based Camera Localization: an Overview

May 03, 2018

Abstract: Recently, virtual reality, augmented reality, robotics, autonomous driving, and related fields have attracted much attention from both the academic and industrial communities, and image-based camera localization is a key task in all of them. However, there has not been a complete review of image-based camera localization. There is an urgent need to map this topic to help people enter the field quickly. In this paper, an overview of image-based camera localization is presented. A new and complete classification of image-based camera localization is provided, and the related techniques are introduced. Trends for future development are also discussed. It will be useful not only to researchers but also to engineers and other interested readers.
