Francesco Fioranelli

Neural-HAR: A Dimension-Gated CNN Accelerator for Real-Time Radar Human Activity Recognition

Oct 26, 2025

Abstract:Radar-based human activity recognition (HAR) is attractive for unobtrusive and privacy-preserving monitoring, yet many CNN/RNN solutions remain too heavy for edge deployment, and even lightweight ViT/SSM variants often exceed practical compute and memory budgets. We introduce Neural-HAR, a dimension-gated CNN accelerator tailored for real-time radar HAR on resource-constrained platforms. At its core is GateCNN, a parameter-efficient Doppler-temporal network that (i) embeds Doppler vectors to emphasize frequency evolution over time and (ii) applies dual-path gated convolutions that modulate Doppler-aware content features with temporal gates, complemented by a residual path for stable training. On the University of Glasgow UoG2020 continuous radar dataset, GateCNN attains 86.4% accuracy with only 2.7k parameters and 0.28M FLOPs per inference, comparable to CNN-BiGRU at a fraction of the complexity. Our FPGA prototype on Xilinx Zynq-7000 Z-7007S reaches 107.5 $\mu$s latency and 15 mW dynamic power using LUT-based ROM and distributed RAM only (zero DSP/BRAM), demonstrating real-time, energy-efficient edge inference. Code and HLS conversion scripts are available at https://github.com/lab-emi/AIRHAR.
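The gated-convolution idea mentioned in this abstract can be illustrated with a generic sketch. This is not the GateCNN architecture from the paper: the function names, tensor layout (time steps by channels), kernel shapes, and the sigmoid gate are all assumptions chosen for illustration. One convolution produces content features, a second produces a gate in (0, 1), and their product is added to the input through a residual path.

```python
import numpy as np

def conv1d_same(x, w):
    """Convolve x (T, C_in) along time with kernel w (K, C_in, C_out), zero-padded to keep length T."""
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, k - 1 - pad), (0, 0)))
    return np.stack(
        [np.einsum("kc,kcd->d", xp[t:t + k], w) for t in range(x.shape[0])]
    )

def gated_conv_block(x, w_content, w_gate):
    """Hypothetical gated block: y = x + content(x) * sigmoid(gate(x)).

    Requires C_out == C_in so that the residual addition is well defined.
    """
    content = conv1d_same(x, w_content)                    # content path
    gate = 1.0 / (1.0 + np.exp(-conv1d_same(x, w_gate)))   # gate path, values in (0, 1)
    return x + content * gate                              # residual connection

# Usage: 16 time steps, 4 channels, kernel length 3 (all sizes are arbitrary).
rng = np.random.default_rng(0)
x = rng.standard_normal((16, 4))
w_c = 0.1 * rng.standard_normal((3, 4, 4))
w_g = 0.1 * rng.standard_normal((3, 4, 4))
y = gated_conv_block(x, w_c, w_g)
```

With all content weights set to zero the block reduces to the identity, which is the property a residual path is usually relied on for during early training.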

RadMamba: Efficient Human Activity Recognition through Radar-based Micro-Doppler-Oriented Mamba State-Space Model

Apr 16, 2025

Abstract:Radar-based HAR has emerged as a promising alternative to conventional monitoring approaches, such as wearable devices and camera-based systems, due to its unique privacy preservation and robustness advantages. However, existing solutions based on convolutional and recurrent neural networks, although effective, are computationally demanding during deployment. This limits their applicability in scenarios with constrained resources or those requiring multiple sensors. Advanced architectures, such as ViT and SSM architectures, offer improved modeling capabilities and have made efforts toward lightweight designs. However, their computational complexity remains relatively high. To leverage the strengths of transformer architectures while simultaneously enhancing accuracy and reducing computational complexity, this paper introduces RadMamba, a parameter-efficient, radar micro-Doppler-oriented Mamba SSM specifically tailored for radar-based HAR. Across three diverse datasets, RadMamba matches the top-performing previous model's 99.8% classification accuracy on Dataset DIAT with only 1/400 of its parameters and equals the leading models' 92.0% accuracy on Dataset CI4R with merely 1/10 of their parameters. In scenarios with continuous sequences of actions evaluated on Dataset UoG2020, RadMamba surpasses other models with significantly higher parameter counts by at least 3%, achieving this with only 6.7k parameters. Our code is available at: https://github.com/lab-emi/AIRHAR.

Grouped Target Tracking and Seamless People Counting with a 24 GHz MIMO FMCW

Apr 07, 2025

Abstract:The problem of radar-based tracking of groups of people moving together in indoor environments, and of counting the people in each group, is considered here. A novel processing pipeline for this task is proposed and validated. The pipeline is specifically designed to deal with the frequent changes of direction and stop & go movements typical of indoor activities. The proposed approach combines a tracker with a classifier that counts the number of grouped people; the classifier uses both spatial features extracted from range-azimuth maps and Doppler frequency features extracted with wavelet decomposition. Thus, the pipeline outputs over time both the location and the number of people present. The proposed approach is verified with experimental data collected with a 24 GHz Frequency Modulated Continuous Wave (FMCW) radar. It is shown that the proposed method achieves 95.59% accuracy in counting the number of people, and an OSPA tracking metric of 0.338. Furthermore, the performance is analyzed as a function of relevant variables such as feature combinations and scenarios.

Automatic Labelling & Semantic Segmentation with 4D Radar Tensors

Jan 20, 2025

Abstract:In this paper, an automatic labelling process is presented for automotive datasets, leveraging complementary information from LiDAR and camera. The generated labels are then used as ground truth, with the corresponding 4D radar data as inputs, to train a proposed semantic segmentation network that associates a class label to each spatial voxel. Promising results are shown by applying both approaches to the publicly shared RaDelft dataset, with the proposed network achieving over 65% of the LiDAR detection performance, improving vehicle detection probability by 13.2%, and reducing the Chamfer distance by 0.54 m, compared to variants inspired by the literature.

A Deep Automotive Radar Detector using the RaDelft Dataset

Jun 07, 2024

Abstract:The detection of multiple extended targets in complex environments using high-resolution automotive radar is considered. A data-driven approach is proposed where unlabeled synchronized lidar data is used as ground truth to train a neural network with only radar data as input. To this end, the novel, large-scale, real-life, and multi-sensor RaDelft dataset has been recorded using a demonstrator vehicle in different locations in the city of Delft. The dataset, as well as the documentation and example code, is publicly available for those researchers in the field of automotive radar or machine perception. The proposed data-driven detector is able to generate lidar-like point clouds using only radar data from a high-resolution system, which preserves the shape and size of extended targets. The results are compared against conventional CFAR detectors as well as variations of the method to emulate the available approaches in the literature, using the probability of detection, the probability of false alarm, and the Chamfer distance as performance metrics. Moreover, an ablation study was carried out to assess the impact of Doppler and temporal information on detection performance. The proposed method outperforms the different baselines in terms of Chamfer distance, achieving a reduction of 75% against conventional CFAR detectors and 10% against the modified state-of-the-art deep learning-based approaches.
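For readers unfamiliar with the Chamfer distance used as a metric above, a minimal NumPy sketch follows. The exact variant used in the paper is not stated in the abstract; this version assumes the common symmetric form that averages unsquared Euclidean nearest-neighbour distances in both directions, and the function name is illustrative.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point clouds a (N, D) and b (M, D).

    Assumed variant: mean nearest-neighbour Euclidean distance from a to b,
    plus the same quantity from b to a. Other papers use squared distances
    or sums instead of means.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Pairwise distances, shape (N, M). Memory grows as N * M.
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

# Usage: a predicted (radar-derived) cloud against a reference (lidar) cloud.
predicted = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
reference = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.5]])
score = chamfer_distance(predicted, reference)
```

A lower value means the two clouds lie closer together. For large clouds, a k-d tree nearest-neighbour query would avoid building the full pairwise matrix.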

Analysis of Processing Pipelines for Indoor Human Tracking using FMCW radar

Mar 15, 2024

Abstract:In this paper, the problem of formulating effective processing pipelines for indoor human tracking using a Multiple Input Multiple Output (MIMO) Frequency Modulated Continuous Wave (FMCW) radar is investigated. Specifically, two processing pipelines, starting with detections on the Range-Azimuth (RA) maps and on the Range-Doppler (RD) maps respectively, are formulated and compared, together with subsequent clustering and tracking algorithms and their relevant parameters. Experimental results are presented to validate and assess both pipelines, using a 24 GHz commercial radar platform with 250 MHz bandwidth and 15 virtual channels. Scenarios where 1 and 2 people move in an indoor environment are considered, and the influence of the number of virtual channels and the detectors' parameters is discussed. The characteristics and limitations of both pipelines are presented, with the approach based on detections on RA maps showing in general more robust results.

See Further Than CFAR: a Data-Driven Radar Detector Trained by Lidar

Feb 27, 2024

Abstract:In this paper, we address the limitations of traditional constant false alarm rate (CFAR) target detectors in automotive radars, particularly in complex urban environments with multiple objects that appear as extended targets. We propose a data-driven radar target detector exploiting a highly efficient 2D CNN backbone inspired by the computer vision domain. Our approach is distinguished by a unique cross-sensor supervision pipeline, enabling it to learn exclusively from unlabeled synchronized radar and lidar data, thus eliminating the need for costly manual object annotations. Using a novel large-scale, real-life multi-sensor dataset recorded in various driving scenarios, we demonstrate that the proposed detector generates dense, lidar-like point clouds, achieving a lower Chamfer distance to the reference lidar point clouds than CFAR detectors. Overall, it significantly outperforms CFAR baselines in detection accuracy.
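As background for the CFAR baseline referred to above, here is a minimal one-dimensional cell-averaging CFAR sketch. It is a textbook illustration, not the baseline configuration used in the paper: the function name, the window sizes, and the false-alarm probability are assumptions, and the threshold factor assumes square-law detected samples with exponentially distributed noise power.

```python
import numpy as np

def ca_cfar_1d(power, train=8, guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1D power profile.

    train: training cells on each side of the cell under test (assumed value).
    guard: guard cells on each side, excluded from the noise estimate.
    pfa:   desired probability of false alarm.
    Edge cells without a full window are never declared detections.
    """
    power = np.asarray(power, dtype=float)
    n_train = 2 * train
    # Threshold factor for CA-CFAR under exponential noise power.
    alpha = n_train * (pfa ** (-1.0 / n_train) - 1.0)
    detections = np.zeros(power.size, dtype=bool)
    margin = train + guard
    for i in range(margin, power.size - margin):
        lead = power[i - margin:i - guard]            # training cells before
        lag = power[i + guard + 1:i + margin + 1]     # training cells after
        noise = (lead.sum() + lag.sum()) / n_train    # local noise estimate
        detections[i] = power[i] > alpha * noise
    return detections

# Usage: flat noise floor with one strong cell at index 32.
profile = np.ones(64)
profile[32] = 100.0
hits = np.flatnonzero(ca_cfar_1d(profile))
```

Because the threshold adapts to the local average, an extended target that fills the training cells raises its own threshold, which is one reason such detectors struggle with the extended targets discussed in the abstract.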

3D high-resolution imaging algorithm using 1D MIMO array for autonomous driving application

Feb 20, 2024

Abstract:The problem of 3D high-resolution imaging in automotive multiple-input multiple-output (MIMO) side-looking radar using a 1D array is considered. The concept of motion-enhanced snapshots is introduced for generating larger apertures in the azimuth dimension. For the first time, 3D imaging capabilities can be achieved with high angular resolution using a 1D MIMO antenna array, which can alleviate the requirement for large radar systems in autonomous vehicles. The robustness to variations in the vehicle's movement trajectory is also considered and addressed with relevant compensations in the steering vector. The available degrees of freedom as well as the Signal to Noise Ratio (SNR) are shown to increase with the proposed method compared to conventional imaging approaches. The performance of the algorithm has been studied in simulations, and validated with experimental data collected in a realistic driving scenario.

Self-Supervised Learning for Enhancing Angular Resolution in Automotive MIMO Radars

Jan 24, 2023

Abstract:A novel framework to enhance the angular resolution of automotive radars is proposed. An approach to enlarge the antenna aperture using artificial neural networks is developed using a self-supervised learning scheme. Data from a high angular resolution radar, i.e., a radar with a large antenna aperture, is used to train a deep neural network to extrapolate the antenna element's response. Afterward, the trained network is used to enhance the angular resolution of compact, low-cost radars. One million scenarios are simulated in a Monte-Carlo fashion, varying the number of targets, their Radar Cross Section (RCS), and location to evaluate the method's performance. Finally, the method is tested on real automotive data collected outdoors with a commercial radar system. A significant increase in the ability to resolve targets is demonstrated, which can translate to more accurate and faster responses from the planning and decision-making system of the vehicle.

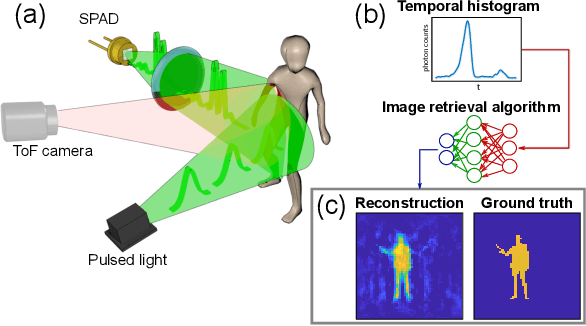

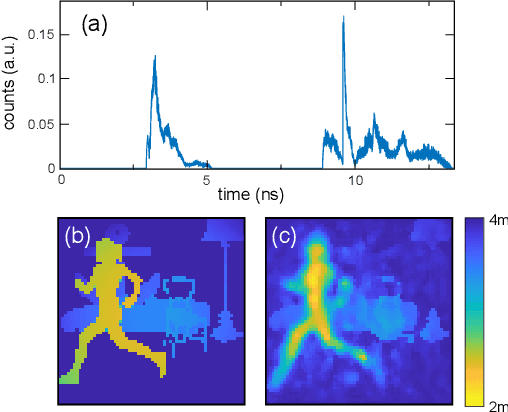

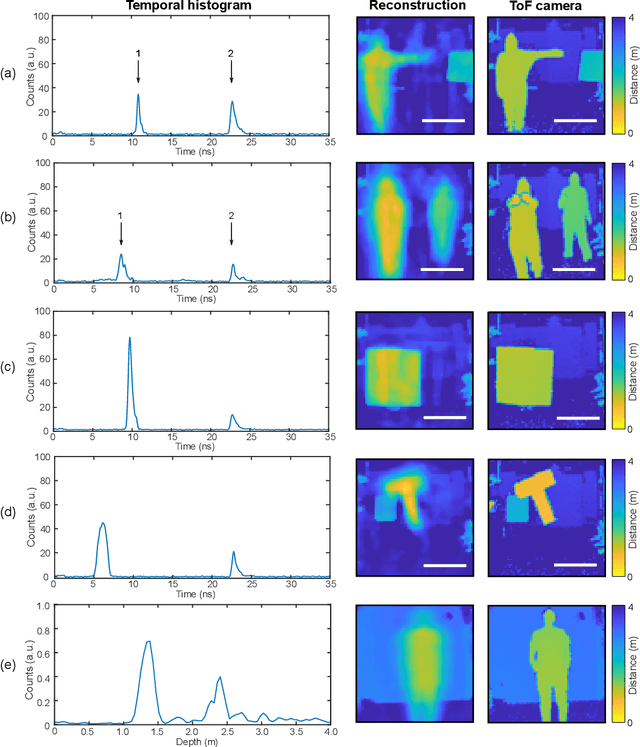

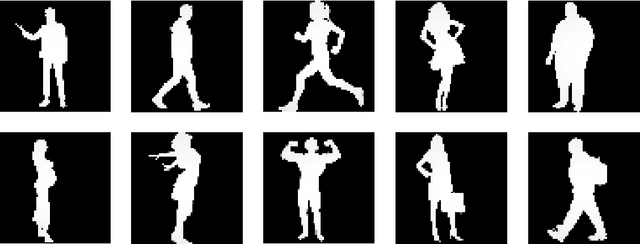

Spatial images from temporal data

Dec 02, 2019

Abstract:Traditional paradigms for imaging rely on the use of spatial structure either in the detector (pixel arrays) or in the illumination (patterned light). Removal of spatial structure in the detector or illumination, i.e., imaging with just a single-point sensor, would require solving a very strongly ill-posed inverse retrieval problem that to date has not been solved. Here we demonstrate a data-driven approach in which full 3D information is obtained with just a single-point, single-photon avalanche diode that records the arrival time of photons reflected from a scene that is illuminated with short pulses of light. Imaging with single-point time-of-flight (temporal) data opens new routes in terms of speed, size, and functionality. As an example, we show how training based on an optical time-of-flight camera enables a compact radio-frequency impulse RADAR transceiver to provide 3D images.
