Francesca Bovolo

Deep Reinforcement Learning for Band Selection in Hyperspectral Image Classification

Mar 15, 2021

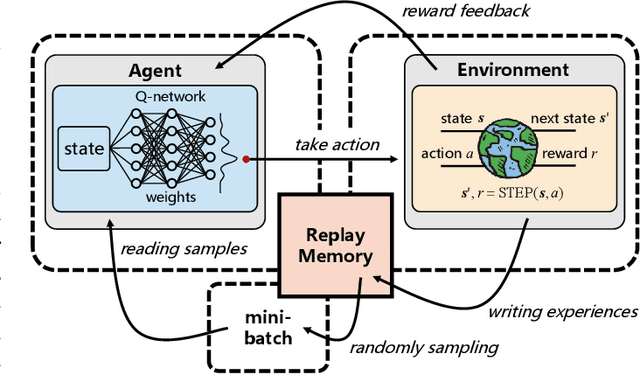

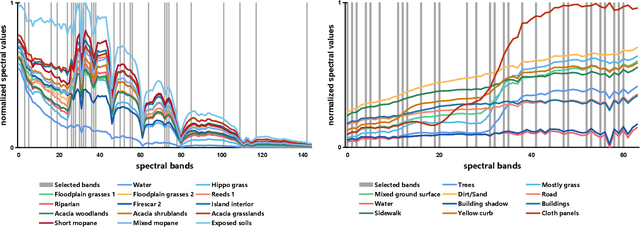

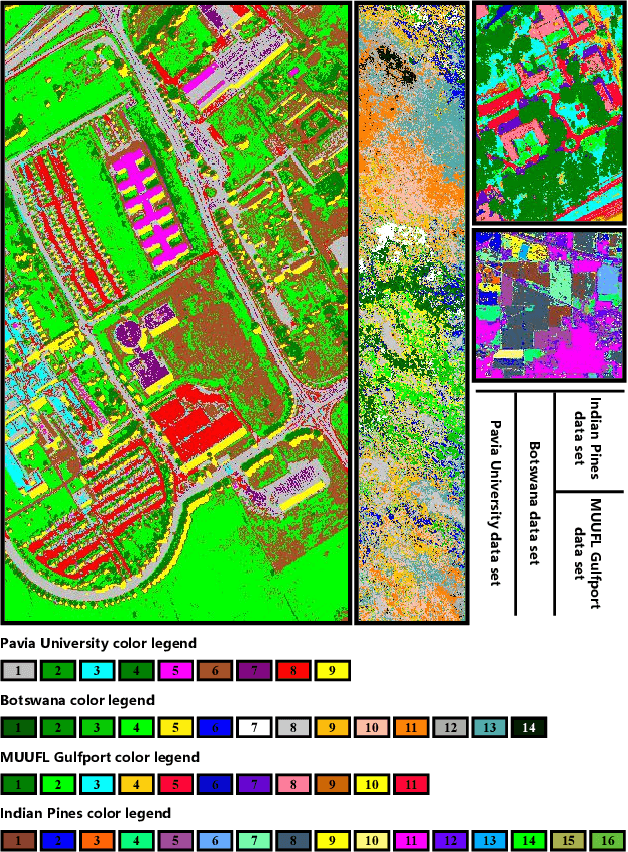

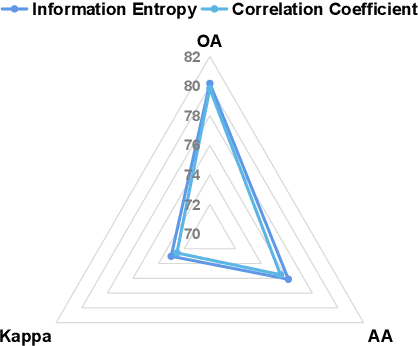

Abstract: Band selection refers to the process of choosing the most relevant bands in a hyperspectral image. By selecting a limited number of optimal bands, we aim at speeding up model training, improving accuracy, or both. It reduces redundancy among spectral bands while trying to preserve the original information of the image. By now many efforts have been made to develop unsupervised band selection approaches, of which the majority are heuristic algorithms devised by trial and error. In this paper, we are interested in training an intelligent agent that, given a hyperspectral image, is capable of automatically learning a policy to select an optimal band subset without any hand-engineered reasoning. To this end, we frame the problem of unsupervised band selection as a Markov decision process, propose an effective method to parameterize it, and finally solve the problem by deep reinforcement learning. Once the agent is trained, it has learned a band-selection policy that guides it to sequentially select bands by fully exploiting the hyperspectral image and previously picked bands. Furthermore, we propose two different reward schemes for the environment simulation of deep reinforcement learning and compare them in experiments. This, to the best of our knowledge, is the first study that explores a deep reinforcement learning model for hyperspectral image analysis, thus opening a new door for future research and showcasing the great potential of deep reinforcement learning in remote sensing applications. Extensive experiments are carried out on four hyperspectral data sets, and experimental results demonstrate the effectiveness of the proposed method.
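To make the Markov decision process framing above concrete, here is a minimal sketch in plain Python. It is an illustration only, not the paper's method: the class `BandSelectionEnv`, the `budget` parameter, and the redundancy-based reward are hypothetical, since the abstract does not specify the two reward schemes, and a greedy rule stands in for the learned deep reinforcement learning policy.

```python
import math


def correlation(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


class BandSelectionEnv:
    """Toy MDP: state = bands picked so far, action = index of the next band."""

    def __init__(self, bands, budget):
        self.bands = bands      # each band is a flat list of pixel values
        self.budget = budget    # number of bands to pick per episode
        self.selected = []

    def reset(self):
        self.selected = []
        return tuple(self.selected)

    def valid_actions(self):
        return [i for i in range(len(self.bands)) if i not in self.selected]

    def reward(self, action):
        # Hypothetical reward (an assumption, not from the paper):
        # penalize mean absolute correlation with already-picked bands.
        if not self.selected:
            return 0.0
        corr = [abs(correlation(self.bands[action], self.bands[j]))
                for j in self.selected]
        return -sum(corr) / len(corr)

    def step(self, action):
        if action in self.selected:
            raise ValueError("band already selected")
        r = self.reward(action)
        self.selected.append(action)
        done = len(self.selected) >= self.budget
        return tuple(self.selected), r, done


def greedy_episode(env):
    """Stand-in for a trained agent: always take the highest-reward band."""
    env.reset()
    done, total = False, 0.0
    while not done:
        action = max(env.valid_actions(), key=env.reward)
        _, r, done = env.step(action)
        total += r
    return env.selected, total
```

In a deep reinforcement learning setup, `greedy_episode` would be replaced by an agent that learns action values from many such episodes; the environment interface (`reset`, `step`, `valid_actions`) would stay the same.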

Self-supervised pre-training enhances change detection in Sentinel-2 imagery

Jan 20, 2021

Abstract: While annotated images for change detection using satellite imagery are scarce and costly to obtain, there is a wealth of unlabeled images being generated every day. In order to leverage these data to learn an image representation more adequate for change detection, we explore methods that exploit the temporal consistency of Sentinel-2 time series to obtain a usable self-supervised learning signal. For this, we build and make publicly available (https://zenodo.org/record/4280482) the Sentinel-2 Multitemporal Cities Pairs (S2MTCP) dataset, containing multitemporal image pairs from 1520 urban areas worldwide. We test multiple self-supervised learning methods for pre-training change detection models and apply them to a public change detection dataset made of Sentinel-2 image pairs (OSCD).
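One way a self-supervised signal can be derived from temporal consistency is to label patch pairs by whether they show the same location at two dates. The sketch below illustrates that idea under stated assumptions: the function names, the 50/50 sampling ratio, and the overlap rule are hypothetical, and the abstract does not say which pretext tasks the authors actually used.

```python
import random


def crop(image, top, left, size):
    """Return a size x size patch from a 2D list-of-lists image."""
    return [row[left:left + size] for row in image[top:top + size]]


def sample_pretext_pair(img_t1, img_t2, size, rng):
    """Draw one (patch_t1, patch_t2, label) triple from a co-registered pair.

    label 1: both patches cover the same location at two dates.
    label 0: the patches come from non-overlapping locations.
    """
    h, w = len(img_t1), len(img_t1[0])
    if h < 2 * size and w < 2 * size:
        raise ValueError("image too small to draw non-overlapping patches")
    top = rng.randrange(h - size + 1)
    left = rng.randrange(w - size + 1)
    patch_a = crop(img_t1, top, left, size)
    if rng.random() < 0.5:
        return patch_a, crop(img_t2, top, left, size), 1
    # Resample until the second patch does not overlap the first.
    while True:
        top2 = rng.randrange(h - size + 1)
        left2 = rng.randrange(w - size + 1)
        if abs(top2 - top) >= size or abs(left2 - left) >= size:
            return patch_a, crop(img_t2, top2, left2, size), 0
```

A network pre-trained to predict this label needs no human annotation, and its feature extractor could then be fine-tuned on a small labeled change detection set.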

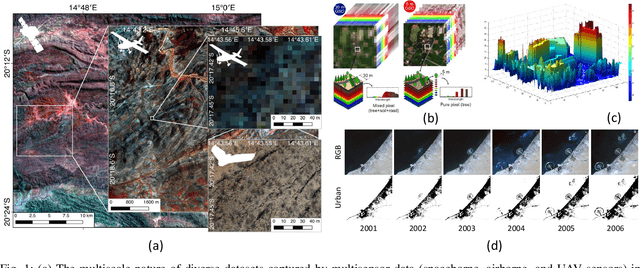

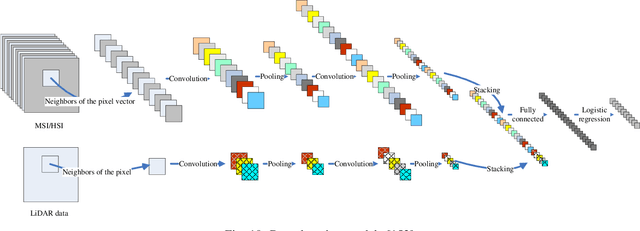

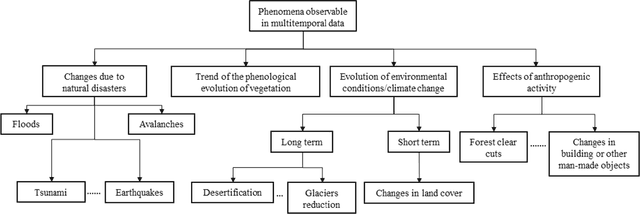

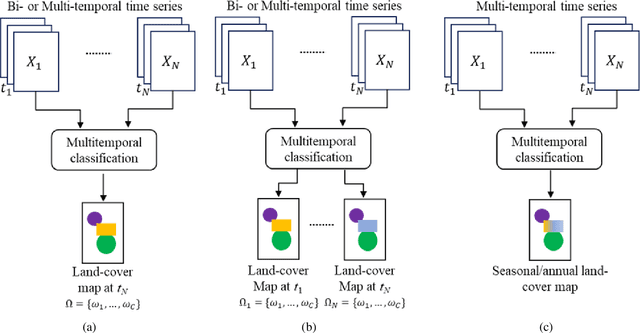

Multisource and Multitemporal Data Fusion in Remote Sensing

Dec 19, 2018

Abstract: The sharp and recent increase in the availability of data captured by different sensors, combined with their considerably heterogeneous natures, poses a serious challenge for the effective and efficient processing of remotely sensed data. Such an increase in remote sensing and ancillary datasets, however, opens up the possibility of utilizing multimodal datasets in a joint manner to further improve the performance of the processing approaches with respect to the application at hand. Multisource data fusion has, therefore, received enormous attention from researchers worldwide for a wide variety of applications. Moreover, thanks to the revisit capability of several spaceborne sensors, the integration of the temporal information with the spatial and/or spectral/backscattering information of the remotely sensed data is possible and helps to move from a representation of 2D/3D data to 4D data structures, where the time variable adds new information as well as challenges for the information extraction algorithms. A large number of research works are dedicated to multisource and multitemporal data fusion, but the methods for the fusion of different modalities have developed along different paths in each research community. This paper brings together the advances of multisource and multitemporal data fusion approaches with respect to different research communities and provides a thorough and discipline-specific starting point for researchers at different levels (i.e., students, researchers, and senior researchers) willing to conduct novel investigations on this challenging topic by supplying sufficient detail and references.

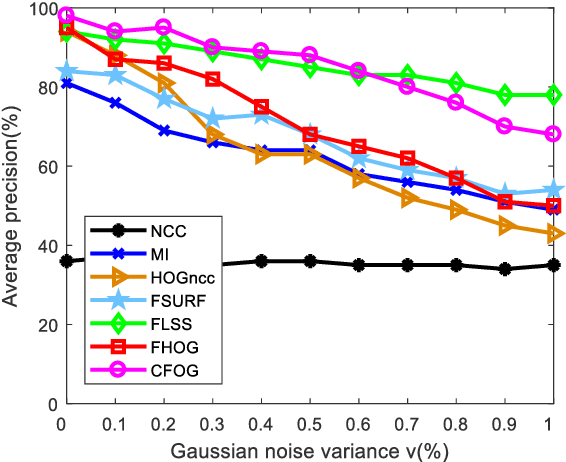

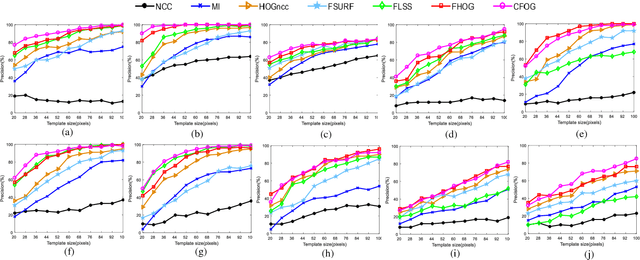

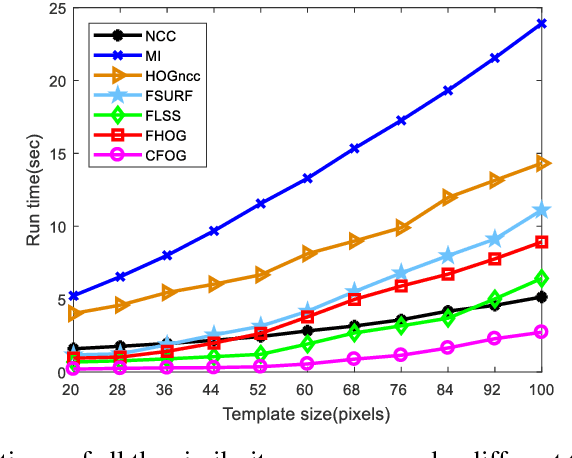

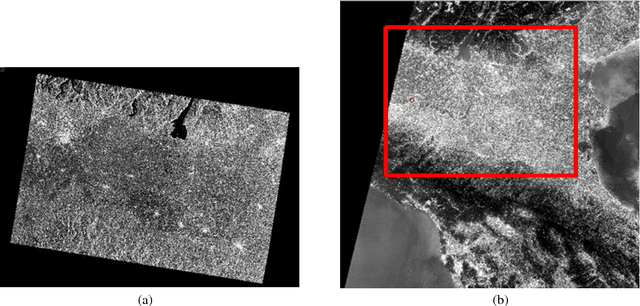

A Fast and Robust Matching Framework for Multimodal Remote Sensing Image Registration

Aug 19, 2018

Abstract: While image registration has been studied in the remote sensing community for decades, registering multimodal data [e.g., optical, light detection and ranging (LiDAR), synthetic aperture radar (SAR), and map] remains a challenging problem because of significant nonlinear intensity differences between such data. To address this problem, we present a novel fast and robust matching framework integrating local descriptors for multimodal registration. In the proposed framework, a local descriptor (such as Histogram of Oriented Gradients (HOG), Local Self-Similarity, or Speeded-Up Robust Features) is first extracted at each pixel to form a pixel-wise feature representation of an image. Then we define a similarity measure based on the feature representation in the frequency domain using the Fast Fourier Transform (FFT) technique, followed by a template matching scheme to detect control points between images. We also propose a novel pixel-wise feature representation using orientated gradients of images, which is named channel features of orientated gradients (CFOG). This novel feature is an extension of the pixel-wise HOG descriptor and outperforms it in both matching performance and computational efficiency. The major advantages of the proposed framework include (1) structural similarity representation using the pixel-wise feature description and (2) high computational efficiency due to the use of FFT. Moreover, we design an automatic registration system for very large-size multimodal images based on the proposed framework. Experimental results obtained on many different types of multimodal images show the superior matching performance of the proposed framework with respect to the state-of-the-art methods and the effectiveness of the designed system, which shows very good potential for large-size image registration in real applications.
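The frequency-domain matching step can be illustrated with a deliberately simplified 1D sketch. Everything here is an assumption made for illustration: `gradient_feature` is a toy stand-in for a pixel-wise descriptor (it is not CFOG or HOG), the signals are treated as circular, and plain cross-correlation replaces whatever similarity measure the paper defines. The point is only that correlation over all shifts can be computed at once as an inverse FFT of a spectral product.

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalized); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    sign = 1 if inverse else -1
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        tw = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out


def gradient_feature(signal):
    """Toy per-sample descriptor: absolute circular forward difference.

    It ignores contrast inversion and constant offsets, mimicking the
    goal of modality-robust structural features.
    """
    n = len(signal)
    return [abs(signal[(i + 1) % n] - signal[i]) for i in range(n)]


def fft_match(reference, sensed):
    """Return the circular shift s maximizing sum_i F_ref[i + s] * F_sen[i]."""
    n = len(reference)
    f_ref = fft(gradient_feature(reference))
    f_sen = fft(gradient_feature(sensed))
    # Cross-correlation theorem: corr = IFFT(FFT(ref) * conj(FFT(sensed))).
    product = [a * b.conjugate() for a, b in zip(f_ref, f_sen)]
    corr = [v.real / n for v in fft(product, inverse=True)]
    return max(range(n), key=lambda s: corr[s])


# Usage: the reference is a contrast-inverted copy of the sensed signal,
# shifted right by 5 samples; raw intensities disagree, features do not.
sensed = [0, 1, 4, 9, 7, 2, 3, 8, 6, 5, 0, 2, 9, 1, 4, 3]
reference = [10 - sensed[(j - 5) % 16] for j in range(16)]
shift = fft_match(reference, sensed)
```

Because the descriptor depends only on local structure, the correlation peak still falls at the true 5-sample shift despite the inverted intensities. In 2D the same identity would be applied with a 2D FFT per feature channel.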
