Katharina Anders

TUM2TWIN: Introducing the Large-Scale Multimodal Urban Digital Twin Benchmark Dataset

May 13, 2025

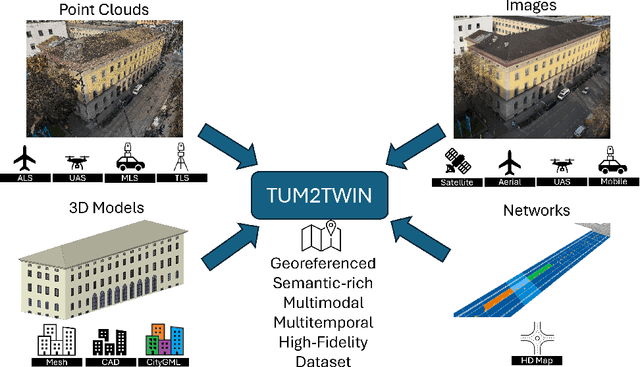

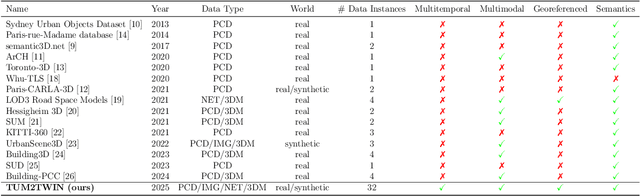

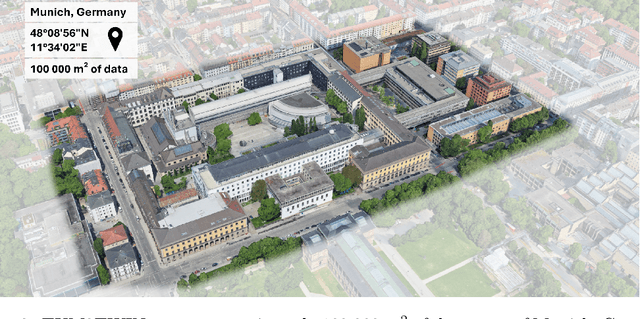

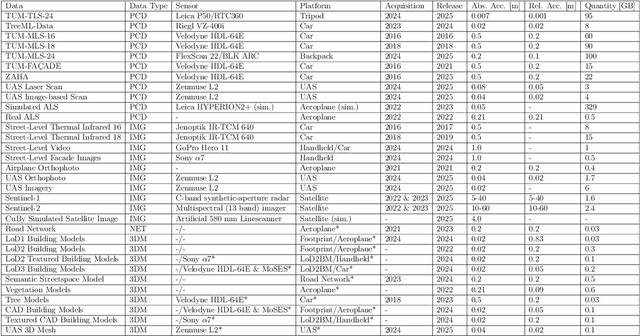

Abstract: Urban Digital Twins (UDTs) have become essential for managing cities and integrating complex, heterogeneous data from diverse sources. Creating UDTs involves challenges at multiple process stages, including acquiring accurate 3D source data, reconstructing high-fidelity 3D models, keeping models up to date, and ensuring seamless interoperability with downstream tasks. Current datasets are usually limited to one part of the processing chain, hampering comprehensive UDT validation. To address these challenges, we introduce the first comprehensive multimodal Urban Digital Twin benchmark dataset: TUM2TWIN. This dataset includes georeferenced, semantically aligned 3D models and networks along with various terrestrial, mobile, aerial, and satellite observations, comprising 32 data subsets over roughly 100,000 $\mathrm{m}^2$ and currently 767 GB of data. By ensuring georeferenced indoor-outdoor acquisition, high accuracy, and multimodal data integration, the benchmark supports robust analysis of sensors and the development of advanced reconstruction methods. Additionally, we explore downstream tasks demonstrating the potential of TUM2TWIN, including novel view synthesis with NeRF and Gaussian Splatting, solar potential analysis, point cloud semantic segmentation, and LoD3 building reconstruction. We are convinced this contribution lays a foundation for overcoming current limitations in UDT creation, fostering new research directions and practical solutions for smarter, data-driven urban environments. The project is available at: https://tum2t.win

Classification of structural building damage grades from multi-temporal photogrammetric point clouds using a machine learning model trained on virtual laser scanning data

Feb 24, 2023

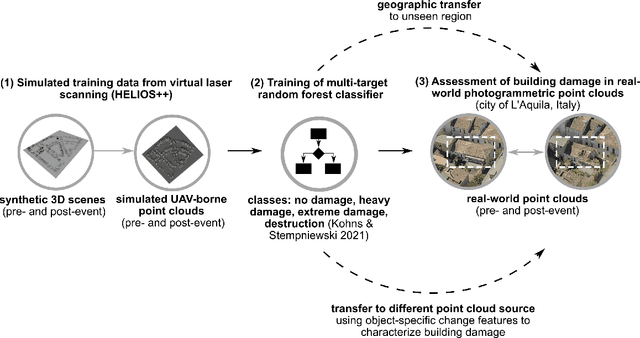

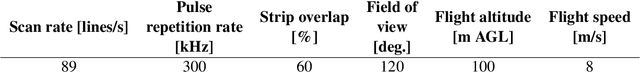

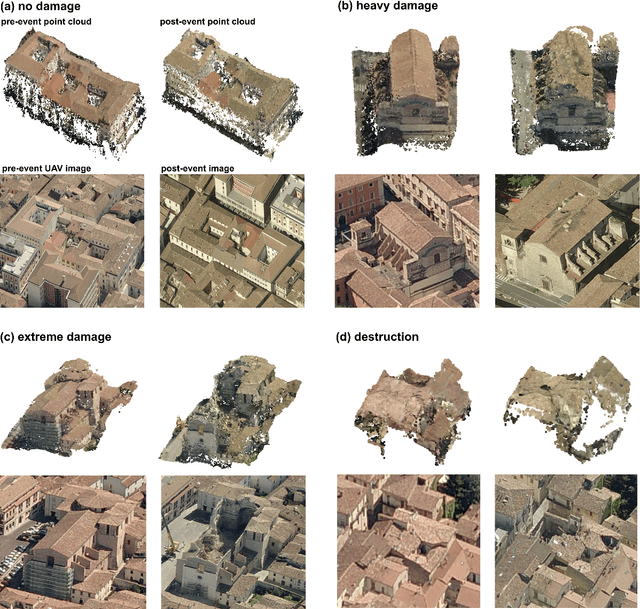

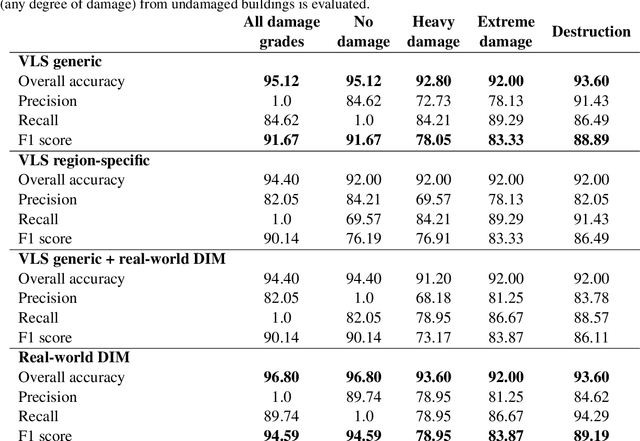

Abstract: Automatic damage assessment based on UAV-derived 3D point clouds can provide fast information on the damage situation after an earthquake. However, the assessment of multiple damage grades is challenging due to the variety in damage patterns and limited transferability of existing methods to other geographic regions or data sources. We present a novel approach to automatically assess multi-class building damage from real-world multi-temporal point clouds using a machine learning model trained on virtual laser scanning (VLS) data. We (1) identify object-specific change features, (2) separate changed and unchanged building parts, (3) train a random forest machine learning model with VLS data based on object-specific change features, and (4) use the classifier to assess building damage in real-world point clouds from photogrammetry-based dense image matching (DIM). We evaluate classifiers trained on different input data with respect to their capacity to classify three damage grades (heavy, extreme, destruction) in pre- and post-event DIM point clouds of a real earthquake event. Our approach is transferable with respect to multi-source input point clouds used for training (VLS) and application (DIM) of the model. We further achieve geographic transferability of the model by training it on simulated data of geometric change which characterises relevant damage grades across different geographic regions. The model yields high multi-target classification accuracies (overall accuracy: 92.0% - 95.1%). Its performance improves only slightly when using real-world region-specific training data (< 3% higher overall accuracies) and when using real-world region-specific training data (< 2% higher overall accuracies). We consider our approach relevant for applications where timely information on the damage situation is required and sufficient real-world training data is not available.
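The overall accuracy reported in the abstract above is the share of samples whose predicted damage grade matches the reference grade. The following is a minimal sketch of that metric for the three grades named in the abstract. The function names and the example labels are hypothetical and are not taken from the paper or its code.

```python
from collections import Counter

# Damage grades named in the abstract; the label strings are an assumption.
GRADES = ("heavy", "extreme", "destruction")


def confusion_counts(reference, predicted):
    """Count (reference, predicted) label pairs, i.e. a sparse confusion matrix."""
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must have the same length")
    return Counter(zip(reference, predicted))


def overall_accuracy(reference, predicted):
    """Fraction of samples whose predicted grade equals the reference grade."""
    counts = confusion_counts(reference, predicted)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("cannot compute accuracy for zero samples")
    correct = sum(n for (ref, pred), n in counts.items() if ref == pred)
    return correct / total


# Hypothetical labels for four buildings, for illustration only.
reference_labels = ["heavy", "extreme", "destruction", "heavy"]
predicted_labels = ["heavy", "extreme", "heavy", "heavy"]
example_accuracy = overall_accuracy(reference_labels, predicted_labels)
```

Because overall accuracy weights every sample equally, it can hide weak performance on a rare grade, so per-grade counts from `confusion_counts` are worth inspecting alongside it.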

Virtual laser scanning with HELIOS++: A novel take on ray tracing-based simulation of topographic 3D laser scanning

Jan 21, 2021

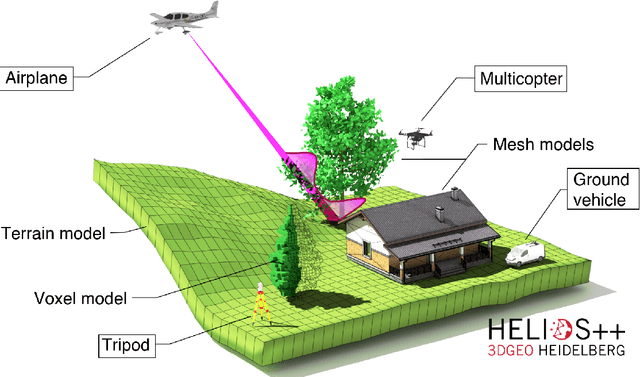

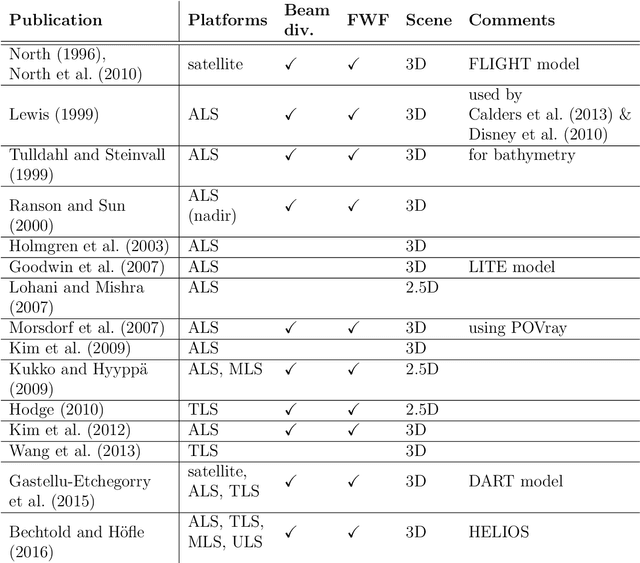

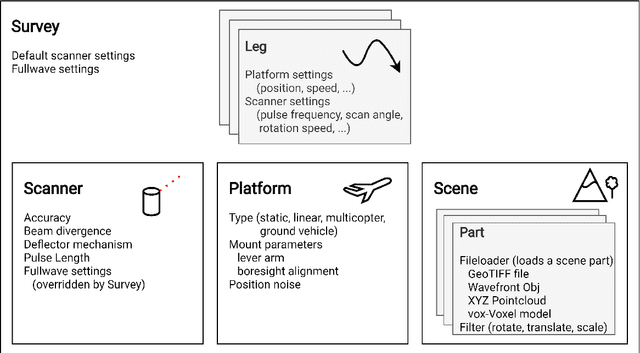

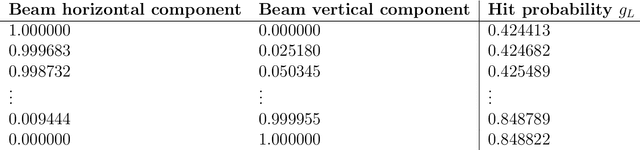

Abstract: Topographic laser scanning is a remote sensing method to create detailed 3D point cloud representations of the Earth's surface. Since data acquisition is expensive, simulations can complement real data provided certain prerequisites are available: i) a model of 3D scene and scanner, ii) a model of the beam-scene interaction, simplified to a computationally feasible yet physically realistic level, and iii) an application for which simulated data is fit for use. A number of laser scanning simulators for different purposes exist, which we enrich by presenting HELIOS++. HELIOS++ is an open-source simulation framework for terrestrial static, mobile, UAV-based and airborne laser scanning implemented in C++. The HELIOS++ concept provides a flexible solution for the trade-off between physical accuracy (realism) and computational complexity (runtime, memory footprint), as well as ease of use and of configuration. Unique features of HELIOS++ include the availability of Python bindings (pyhelios) for controlling simulations, and a range of model types for 3D scene representation. HELIOS++ further allows the simulation of beam divergence using a subsampling strategy, and is able to create full-waveform outputs as a basis for detailed analysis. As generation and analysis of waveforms can strongly impact runtimes, the user may set the level of detail for the subsampling, or optionally disable full-waveform output altogether. A detailed assessment of computational considerations and a comparison of HELIOS++ to its predecessor, HELIOS, reveal reduced runtimes by up to 83 %. At the same time, memory requirements are reduced by up to 94 %, allowing for much larger (i.e. more complex) 3D scenes to be loaded into memory and hence to be virtually acquired by laser scanning simulation.
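The idea of simulating beam divergence by subsampling, mentioned in the abstract above, can be illustrated by replacing one laser beam with a bundle of sub-rays spread over the beam cone. The sketch below is not the HELIOS++ implementation and does not use pyhelios. The function name, the ring layout, and the choice of six additional samples per ring are assumptions made for illustration.

```python
import math


def subsample_beam(divergence_rad, rings):
    """Return unit direction vectors of sub-rays in a beam-local frame.

    The beam axis is +z. Sub-rays are placed on `rings` concentric rings
    whose polar angle grows linearly up to half the full divergence angle.
    Ring i carries 6 * i samples (an illustrative choice), plus one
    central ray along the axis.
    """
    if divergence_rad < 0 or rings < 0:
        raise ValueError("divergence_rad and rings must be non-negative")
    half_angle = divergence_rad / 2.0
    directions = [(0.0, 0.0, 1.0)]  # central ray
    for ring in range(1, rings + 1):
        polar = half_angle * ring / rings
        samples = 6 * ring
        for k in range(samples):
            azimuth = 2.0 * math.pi * k / samples
            directions.append((
                math.sin(polar) * math.cos(azimuth),
                math.sin(polar) * math.sin(azimuth),
                math.cos(polar),
            ))
    return directions


# Hypothetical beam with 0.3 mrad full divergence, sampled with 3 rings.
sub_rays = subsample_beam(divergence_rad=0.0003, rings=3)
```

With this layout the number of sub-rays is `1 + 3 * rings * (rings + 1)`, so the ray-casting cost grows roughly quadratically with the chosen level of detail. That is the kind of realism versus runtime trade-off the abstract describes.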

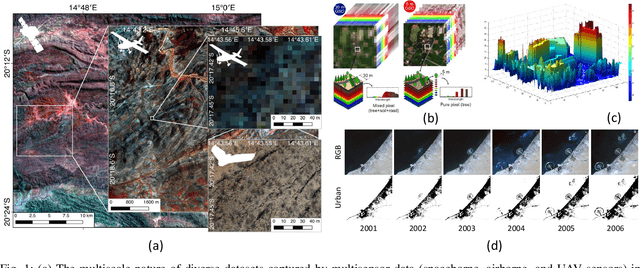

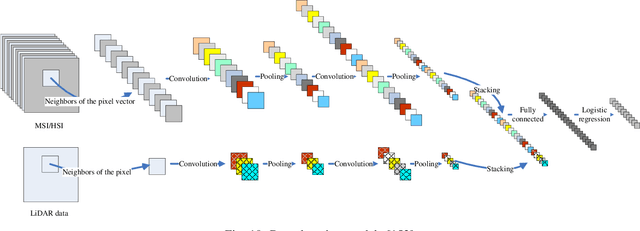

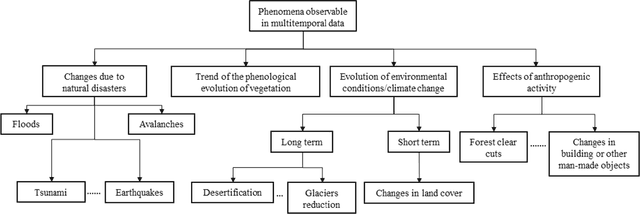

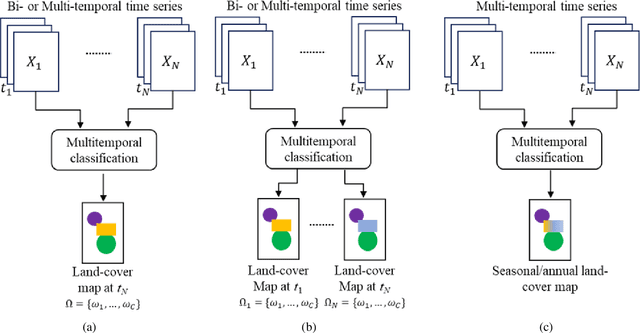

Multisource and Multitemporal Data Fusion in Remote Sensing

Dec 19, 2018

Abstract: The sharp and recent increase in the availability of data captured by different sensors combined with their considerably heterogeneous natures poses a serious challenge for the effective and efficient processing of remotely sensed data. Such an increase in remote sensing and ancillary datasets, however, opens up the possibility of utilizing multimodal datasets in a joint manner to further improve the performance of the processing approaches with respect to the application at hand. Multisource data fusion has, therefore, received enormous attention from researchers worldwide for a wide variety of applications. Moreover, thanks to the revisit capability of several spaceborne sensors, the integration of the temporal information with the spatial and/or spectral/backscattering information of the remotely sensed data is possible and helps to move from a representation of 2D/3D data to 4D data structures, where the time variable adds new information as well as challenges for the information extraction algorithms. There are a huge number of research works dedicated to multisource and multitemporal data fusion, but the methods for the fusion of different modalities have expanded in different paths according to each research community. This paper brings together the advances of multisource and multitemporal data fusion approaches with respect to different research communities and provides a thorough and discipline-specific starting point for researchers at different levels (i.e., students, researchers, and senior researchers) willing to conduct novel investigations on this challenging topic by supplying sufficient detail and references.
