Bernhard Höfle

Benchmarking tree species classification from proximally-sensed laser scanning data: introducing the FOR-species20K dataset

Aug 12, 2024

Abstract: Proximally-sensed laser scanning offers significant potential for automated forest data capture, but challenges remain in automatically identifying tree species without additional ground data. Deep learning (DL) shows promise for automation, yet progress is slowed by the lack of large, diverse, openly available labeled datasets of single tree point clouds. This has impacted the robustness of DL models and the ability to establish best practices for species classification. To overcome these challenges, the FOR-species20K benchmark dataset was created, comprising over 20,000 tree point clouds from 33 species, captured using terrestrial (TLS), mobile (MLS), and drone laser scanning (ULS) across various European forests, with some data from other regions. This dataset enables the benchmarking of DL models for tree species classification, including both point cloud-based (PointNet++, MinkNet, MLP-Mixer, DGCNNs) and multi-view image-based methods (SimpleView, DetailView, YOLOv5). 2D image-based models generally performed better (average OA = 0.77) than 3D point cloud-based models (average OA = 0.72), with consistent results across different scanning platforms and sensors. The top model, DetailView, was particularly robust, handling data imbalances well and generalizing effectively across tree sizes. The FOR-species20K dataset, available at https://zenodo.org/records/13255198, is a key resource for developing and benchmarking DL models for tree species classification using laser scanning data, providing a foundation for future advancements in the field.
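The abstract reports overall accuracy (OA) and notes robustness to class imbalance. As a minimal sketch of why the two are worth checking separately, the snippet below computes OA next to a balanced accuracy (mean per-species recall). The function names and the toy labels are illustrative assumptions and are not taken from the FOR-species20K benchmark code or data.

```python
from collections import Counter


def overall_accuracy(y_true, y_pred):
    """Fraction of trees whose predicted species matches the reference label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean per-species recall: every species counts equally, however rare."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[s] / n for s, n in totals.items()) / len(totals)


# Made-up labels for illustration only (not benchmark results).
y_true = ["Picea abies"] * 8 + ["Fagus sylvatica"] * 2
y_pred = ["Picea abies"] * 8 + ["Picea abies", "Fagus sylvatica"]

print(overall_accuracy(y_true, y_pred))   # 0.9
print(balanced_accuracy(y_true, y_pred))  # 0.75
```

With an imbalanced label set, OA is dominated by the frequent species, so a gap between the two numbers hints that rare species are being misclassified.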

Classification of structural building damage grades from multi-temporal photogrammetric point clouds using a machine learning model trained on virtual laser scanning data

Feb 24, 2023

Abstract: Automatic damage assessment based on UAV-derived 3D point clouds can provide fast information on the damage situation after an earthquake. However, the assessment of multiple damage grades is challenging due to the variety in damage patterns and limited transferability of existing methods to other geographic regions or data sources. We present a novel approach to automatically assess multi-class building damage from real-world multi-temporal point clouds using a machine learning model trained on virtual laser scanning (VLS) data. We (1) identify object-specific change features, (2) separate changed and unchanged building parts, (3) train a random forest machine learning model with VLS data based on object-specific change features, and (4) use the classifier to assess building damage in real-world point clouds from photogrammetry-based dense image matching (DIM). We evaluate classifiers trained on different input data with respect to their capacity to classify three damage grades (heavy, extreme, destruction) in pre- and post-event DIM point clouds of a real earthquake event. Our approach is transferable with respect to multi-source input point clouds used for training (VLS) and application (DIM) of the model. We further achieve geographic transferability of the model by training it on simulated data of geometric change which characterises relevant damage grades across different geographic regions. The model yields high multi-target classification accuracies (overall accuracy: 92.0% - 95.1%). Its performance improves only slightly when using real-world region-specific training data (< 3% higher overall accuracies) and when using real-world region-specific training data (< 2% higher overall accuracies). We consider our approach relevant for applications where timely information on the damage situation is required and sufficient real-world training data is not available.
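The train-on-simulated, apply-to-real idea in steps (3) and (4) can be sketched in a few lines. Note the assumptions: the paper uses a random forest, whereas this dependency-free sketch swaps in a simple nearest-centroid classifier; the two change features (mean height loss and fraction of changed points) and all numbers are hypothetical and chosen only for illustration.

```python
from statistics import fmean


def fit_centroids(features, labels):
    """Average the feature vectors of each damage grade (nearest-centroid stand-in)."""
    groups = {}
    for x, y in zip(features, labels):
        groups.setdefault(y, []).append(x)
    return {y: tuple(fmean(col) for col in zip(*rows)) for y, rows in groups.items()}


def predict(centroids, x):
    """Assign the damage grade whose centroid is closest in squared Euclidean distance."""
    return min(
        centroids,
        key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])),
    )


# Hypothetical per-building change features: (mean height loss [m], changed fraction).
# "Simulated" stands in for VLS-derived training data; values are made up.
simulated_X = [(0.5, 0.2), (0.7, 0.3), (2.0, 0.6), (2.4, 0.7), (5.0, 0.95), (5.5, 1.0)]
simulated_y = ["heavy", "heavy", "extreme", "extreme", "destruction", "destruction"]

# "Real" stands in for features from pre-/post-event DIM point clouds; also made up.
real_X = [(0.8, 0.25), (2.1, 0.55), (4.8, 0.9)]

model = fit_centroids(simulated_X, simulated_y)
predictions = [predict(model, x) for x in real_X]
print(predictions)  # ['heavy', 'extreme', 'destruction']
```

The point of the sketch is the data flow: the classifier never sees real-world labels, so its transferability rests on the simulated change features resembling those observed after a real event.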

Virtual laser scanning with HELIOS++: A novel take on ray tracing-based simulation of topographic 3D laser scanning

Jan 21, 2021

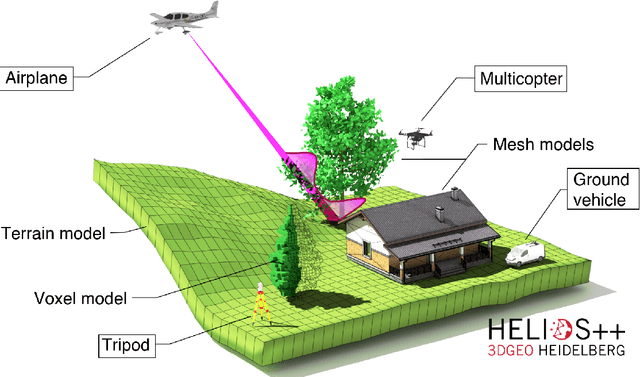

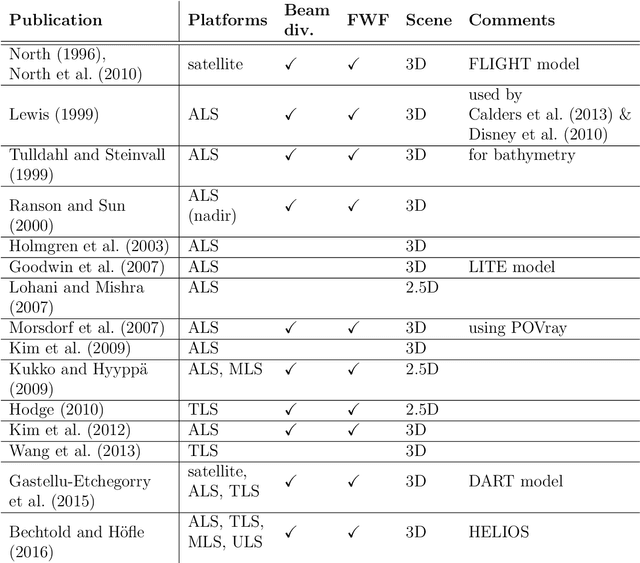

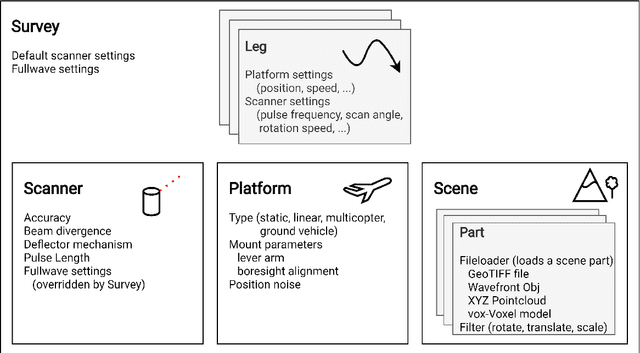

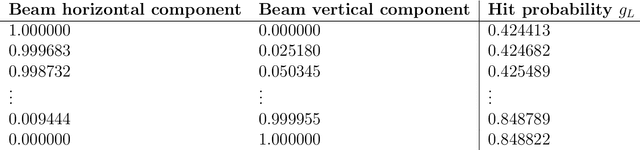

Abstract: Topographic laser scanning is a remote sensing method to create detailed 3D point cloud representations of the Earth's surface. Since data acquisition is expensive, simulations can complement real data given certain premises are available: i) a model of 3D scene and scanner, ii) a model of the beam-scene interaction, simplified to a computationally feasible while physically realistic level, and iii) an application for which simulated data is fit for use. A number of laser scanning simulators for different purposes exist, which we enrich by presenting HELIOS++. HELIOS++ is an open-source simulation framework for terrestrial static, mobile, UAV-based and airborne laser scanning implemented in C++. The HELIOS++ concept provides a flexible solution for the trade-off between physical accuracy (realism) and computational complexity (runtime, memory footprint), as well as ease of use and of configuration. Unique features of HELIOS++ include the availability of Python bindings (pyhelios) for controlling simulations, and a range of model types for 3D scene representation. HELIOS++ further allows the simulation of beam divergence using a subsampling strategy, and is able to create full-waveform outputs as a basis for detailed analysis. As generation and analysis of waveforms can strongly impact runtimes, the user may set the level of detail for the subsampling, or optionally disable full-waveform output altogether. A detailed assessment of computational considerations and a comparison of HELIOS++ to its predecessor, HELIOS, reveal reduced runtimes by up to 83 %. At the same time, memory requirements are reduced by up to 94 %, allowing for much larger (i.e. more complex) 3D scenes to be loaded into memory and hence to be virtually acquired by laser scanning simulation.
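To illustrate what simulating beam divergence by subsampling can look like, here is a generic sketch that replaces one beam with a bundle of sub-rays on concentric rings inside the divergence cone. It is not the HELIOS++ implementation and does not use pyhelios: the function name, the 6·k sub-rays per ring, and the Gaussian weighting with the cone edge at 2σ are all assumptions made for this example.

```python
import math


def subray_offsets(divergence_rad, n_rings):
    """Sample a beam cone with sub-rays; returns (polar, azimuth, weight) tuples.

    Ring k (1..n_rings) lies at polar angle k / n_rings * divergence / 2 and
    holds 6 * k sub-rays (assumed layout). Weights follow a Gaussian beam
    profile (assumed: cone edge at 2 sigma) and are normalised to sum to 1.
    """
    half_angle = divergence_rad / 2.0
    sigma = half_angle / 2.0
    rays = [(0.0, 0.0, 1.0)]  # central ray along the nominal beam direction
    for k in range(1, n_rings + 1):
        polar = half_angle * k / n_rings
        weight = math.exp(-0.5 * (polar / sigma) ** 2)
        count = 6 * k
        for j in range(count):
            rays.append((polar, 2.0 * math.pi * j / count, weight))
    total = sum(w for _, _, w in rays)
    return [(p, a, w / total) for p, a, w in rays]


# Hypothetical scanner with 0.3 mrad beam divergence, sampled at three detail levels.
for rings in (1, 2, 3):
    print(rings, len(subray_offsets(0.3e-3, rings)))
```

In this layout the number of sub-rays is 1 + 3n(n + 1) for n rings, so the ray-casting cost grows quadratically with the level of detail, which is the kind of realism-versus-runtime trade-off the abstract describes.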
