Fengbo Lan

Affordance-Aware Interactive Decision-Making and Execution for Ambiguous Instructions

Feb 05, 2026

Abstract:Enabling robots to explore and act in unfamiliar environments under ambiguous human instructions by interactively identifying task-relevant objects (e.g., identifying cups or beverages for "I'm thirsty") remains challenging for existing vision-language model (VLM)-based methods. This challenge stems from inefficient reasoning and the lack of environmental interaction, which hinder real-time task planning and execution. To address this, we propose Affordance-Aware Interactive Decision-Making and Execution for Ambiguous Instructions (AIDE), a dual-stream framework that integrates interactive exploration with vision-language reasoning, where Multi-Stage Inference (MSI) serves as the decision-making stream and Accelerated Decision-Making (ADM) as the execution stream, enabling zero-shot affordance analysis and interpretation of ambiguous instructions. Extensive experiments in simulation and real-world environments show that AIDE achieves a task planning success rate of over 80% and more than 95% accuracy in closed-loop continuous execution at 10 Hz, outperforming existing VLM-based methods in diverse open-world scenarios.

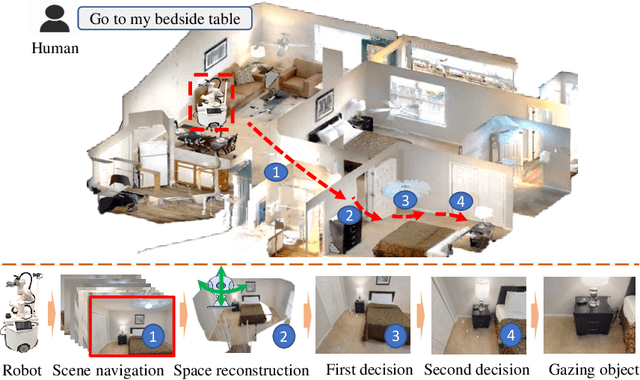

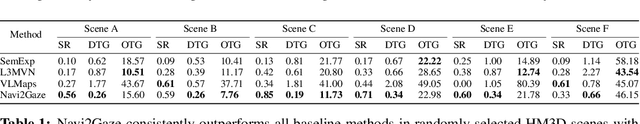

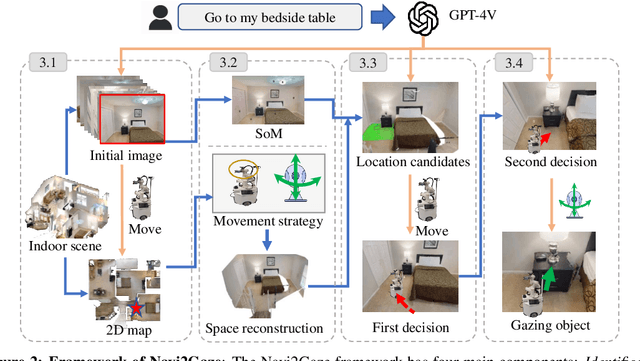

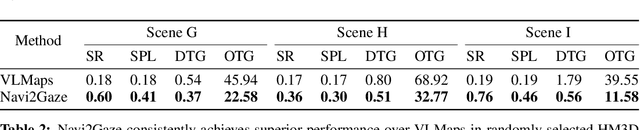

Navi2Gaze: Leveraging Foundation Models for Navigation and Target Gazing

Jul 12, 2024

Abstract:Task-aware navigation continues to be a challenging area of research, especially in scenarios involving open vocabulary. Previous studies primarily focus on finding suitable locations for task completion, often overlooking the importance of the robot's pose. However, the robot's orientation is crucial for successfully completing tasks because of how objects are arranged (e.g., to open a refrigerator door). Humans intuitively navigate to objects with the right orientation using semantics and common sense. For instance, when opening a refrigerator, we naturally stand in front of it rather than to the side. Recent advances suggest that Vision-Language Models (VLMs) can provide robots with similar common sense. Therefore, we develop a VLM-driven method called Navigation-to-Gaze (Navi2Gaze) for efficient navigation and object gazing based on task descriptions. This method uses the VLM to score and select the best pose from numerous candidates automatically. In evaluations on multiple photorealistic simulation benchmarks, Navi2Gaze significantly outperforms existing approaches and precisely determines the optimal orientation relative to target objects.
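The "score and select the best pose from numerous candidates" step can be illustrated with a minimal sketch. This is not the Navi2Gaze implementation: the candidate sampling on a circle, the function names, and `toy_score` (a stand-in for a VLM-produced score) are all assumptions made for illustration.

```python
import math
from typing import Callable, List, Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw in radians); hypothetical representation


def candidate_poses(target_xy: Tuple[float, float], radius: float, n: int) -> List[Pose]:
    """Sample n poses on a circle around the target, each one facing the target."""
    tx, ty = target_xy
    poses = []
    for k in range(n):
        angle = 2 * math.pi * k / n
        x = tx + radius * math.cos(angle)
        y = ty + radius * math.sin(angle)
        yaw = math.atan2(ty - y, tx - x)  # heading that points at the target
        poses.append((x, y, yaw))
    return poses


def select_best_pose(poses: List[Pose], score: Callable[[Pose], float]) -> Pose:
    """Return the candidate with the highest score."""
    return max(poses, key=score)


def toy_score(pose: Pose, target_xy=(0.0, 0.0), front_dir=0.0) -> float:
    """Assumed stand-in for a VLM score: highest when the robot stands
    on the side of the object given by front_dir (e.g. the fridge door side)."""
    x, y, _ = pose
    bearing = math.atan2(y - target_xy[1], x - target_xy[0])
    return math.cos(bearing - front_dir)


best = select_best_pose(candidate_poses((0.0, 0.0), radius=1.0, n=8), toy_score)
```

In this toy setup the selected pose lies in front of the object and faces it; in a real system the scoring function would be replaced by whatever the VLM returns for each candidate view.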

DexCatch: Learning to Catch Arbitrary Objects with Dexterous Hands

Oct 13, 2023

Abstract:Achieving human-like dexterous manipulation remains a crucial area of research in robotics. Current research focuses on improving the success rate of pick-and-place tasks. Compared with pick-and-place, throw-catching behavior has the potential to increase picking speed without transporting objects to their destination. However, dynamic dexterous manipulation poses a major challenge for stable control due to a large number of dynamic contacts. In this paper, we propose a Stability-Constrained Reinforcement Learning (SCRL) algorithm to learn to catch diverse objects with dexterous hands. The SCRL algorithm outperforms baselines by a large margin, and the learned policies show strong zero-shot transfer performance on unseen objects. Remarkably, even though the object in a hand facing sideward is extremely unstable due to the lack of support from the palm, our method can still achieve a high level of success in the most challenging task. Video demonstrations of learned behaviors and the code can be found on the supplementary website.

Mixed Graph Signal Analysis of Joint Image Denoising / Interpolation

Sep 18, 2023

Abstract:A noise-corrupted image often requires interpolation. Given a linear denoiser and a linear interpolator, when should the operations be independently executed in separate steps, and when should they be combined and jointly optimized? We study joint denoising / interpolation of images from a mixed graph filtering perspective: we model denoising using an undirected graph, and interpolation using a directed graph. We first prove that, under mild conditions, a linear denoiser is a solution graph filter to a maximum a posteriori (MAP) problem regularized using an undirected graph smoothness prior, while a linear interpolator is a solution to a MAP problem regularized using a directed graph smoothness prior. Next, we study two variants of the joint interpolation / denoising problem: a graph-based denoiser followed by an interpolator has an optimal separable solution, while an interpolator followed by a denoiser has an optimal non-separable solution. Experiments show that our joint denoising / interpolation method outperformed separate approaches noticeably.
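For orientation, an undirected graph smoothness prior is commonly written as a graph Laplacian regularizer. The abstract does not give the paper's exact formulation, so the symbols below (observation $\mathbf{y}$, Laplacian $\mathbf{L}$, weight $\mu > 0$) are assumptions chosen to show the standard form, in which the MAP denoiser is a linear filter:

```latex
\mathbf{x}^\ast
  = \arg\min_{\mathbf{x}} \; \|\mathbf{y} - \mathbf{x}\|_2^2
    + \mu \, \mathbf{x}^\top \mathbf{L} \, \mathbf{x}
\quad\Longrightarrow\quad
(\mathbf{I} + \mu \mathbf{L}) \, \mathbf{x}^\ast = \mathbf{y},
\qquad
\mathbf{x}^\ast = (\mathbf{I} + \mu \mathbf{L})^{-1} \mathbf{y}.
```

Because $\mathbf{L}$ is positive semidefinite for an undirected graph with non-negative edge weights, $\mathbf{I} + \mu \mathbf{L}$ is invertible and the solution is linear in $\mathbf{y}$. The directed-graph prior used for interpolation is not reproduced here.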

Tackling Scattering and Reflective Flare in Mobile Camera Systems: A Raw Image Dataset for Enhanced Flare Removal

Jul 26, 2023

Abstract:The increasing prevalence of mobile devices has led to significant advancements in mobile camera systems and improved image quality. Nonetheless, mobile photography still grapples with challenging issues such as scattering and reflective flare. The absence of a comprehensive real image dataset tailored for mobile phones hinders the development of effective flare mitigation techniques. To address this issue, we present a novel raw image dataset specifically designed for mobile camera systems, focusing on flare removal. Capitalizing on the distinct properties of raw images, this dataset serves as a solid foundation for developing advanced flare removal algorithms. It encompasses a wide variety of real-world scenarios captured with diverse mobile devices and camera settings. The dataset comprises over 2,000 high-quality full-resolution raw image pairs for scattering flare and 1,100 for reflective flare, which can be further segmented into up to 30,000 and 2,200 paired patches, respectively, ensuring broad adaptability across various imaging conditions. Experimental results demonstrate that networks trained with synthesized data struggle to cope with complex lighting settings present in this real image dataset. We also show that processing data through a mobile phone's internal ISP compromises image quality while using raw image data presents significant advantages for addressing the flare removal problem. Our dataset is expected to enable an array of new research in flare removal and contribute to substantial improvements in mobile image quality, benefiting mobile photographers and end-users alike.

A RL-based Policy Optimization Method Guided by Adaptive Stability Certification

Jan 02, 2023

Abstract:In contrast to control-theoretic methods, the lack of a stability guarantee remains a significant problem for model-free reinforcement learning (RL) methods. Jointly learning a policy and a Lyapunov function has recently become a promising approach to providing the whole system with a stability guarantee. However, the classical Lyapunov constraints that researchers have introduced cannot stabilize the system during sampling-based optimization. Therefore, we propose the Adaptive Stability Certification (ASC), which lets the system reach sampling-based stability. Because the ASC condition can search for the optimal policy heuristically, we design the Adaptive Lyapunov-based Actor-Critic (ALAC) algorithm based on the ASC condition. Meanwhile, our algorithm avoids the optimization problem in current approaches where a variety of constraints are coupled into the objective. When evaluated on ten robotic tasks, our method achieves lower accumulated cost and fewer stability constraint violations than previous studies.
