Gene Cheung

Deep Unrolling of Sparsity-Induced RDO for 3D Point Cloud Attribute Coding

Sep 10, 2025

Abstract:Given encoded 3D point cloud geometry available at the decoder, we study the problem of lossy attribute compression in a multi-resolution B-spline projection framework. A target continuous 3D attribute function is first projected onto a sequence of nested subspaces $\mathcal{F}^{(p)}_{l_0} \subseteq \cdots \subseteq \mathcal{F}^{(p)}_{L}$, where $\mathcal{F}^{(p)}_{l}$ is a family of functions spanned by a B-spline basis function of order $p$ at a chosen scale and its integer shifts. The projected low-pass coefficients $F_l^*$ are computed by variable-complexity unrolling of a rate-distortion (RD) optimization algorithm into a feed-forward network, where the rate term is the sparsity-promoting $\ell_1$-norm. Thus, the projection operation is end-to-end differentiable. For a chosen coarse-to-fine predictor, the coefficients are then adjusted to account for the prediction from a lower resolution to a higher resolution, which is also optimized in a data-driven manner.
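As a rough illustration of unrolling an $\ell_1$-regularized optimization into a fixed number of layers, the sketch below runs a fixed number of iterative soft-thresholding (ISTA) steps for $\min_x \tfrac{1}{2}\|y - Ax\|_2^2 + \lambda \|x\|_1$. ISTA is a stand-in chosen here for illustration; the abstract does not say which algorithm is unrolled. The names `soft_threshold` and `ista_unrolled`, the matrix `A`, and the fixed step size are all assumptions. In a learned version, the step size and threshold of each layer would be trainable.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (element-wise shrinkage toward zero)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista_unrolled(A, y, lam, num_layers):
    """Run `num_layers` ISTA steps; each step plays the role of one network layer."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / (largest singular value)^2
    x = np.zeros(A.shape[1])
    for _ in range(num_layers):
        # Gradient step on the quadratic term, then shrinkage for the l1 term.
        x = soft_threshold(x + step * A.T @ (y - A @ x), step * lam)
    return x

# Toy example (hypothetical data): with A = I the minimizer is soft_threshold(y, lam).
y = np.array([3.0, -0.5, 1.2])
x_hat = ista_unrolled(np.eye(3), y, lam=1.0, num_layers=3)
```

With an identity `A`, small entries of `y` are set exactly to zero, which is the sparsity-promoting effect of the $\ell_1$ term.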

Lightweight Transformer via Unrolling of Mixed Graph Algorithms for Traffic Forecast

May 19, 2025

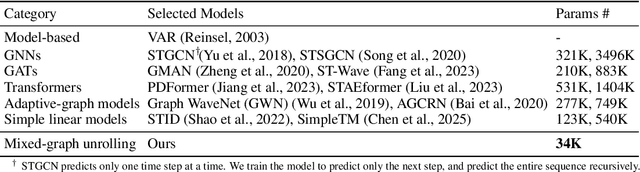

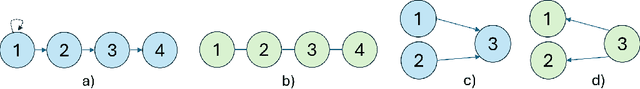

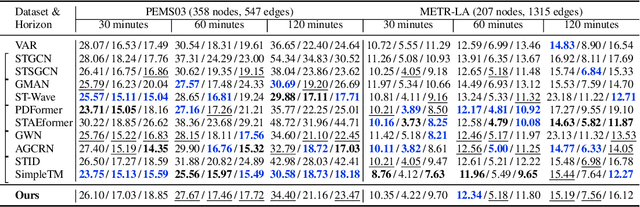

Abstract:To forecast traffic with both spatial and temporal dimensions, we unroll a mixed-graph-based optimization algorithm into a lightweight and interpretable transformer-like neural net. Specifically, we construct two graphs: an undirected graph $\mathcal{G}^u$ capturing spatial correlations across geography, and a directed graph $\mathcal{G}^d$ capturing sequential relationships over time. We formulate a prediction problem for the future samples of signal $\mathbf{x}$, assuming it is "smooth" with respect to both $\mathcal{G}^u$ and $\mathcal{G}^d$, where we design new $\ell_2$ and $\ell_1$-norm variational terms to quantify and promote signal smoothness (low-frequency reconstruction) on a directed graph. We construct an iterative algorithm based on the alternating direction method of multipliers (ADMM), and unroll it into a feed-forward network for data-driven parameter learning. We insert graph learning modules for $\mathcal{G}^u$ and $\mathcal{G}^d$, which are akin to the self-attention mechanism in classical transformers. Experiments show that our unrolled networks achieve traffic forecast performance competitive with state-of-the-art prediction schemes, while reducing parameter counts drastically. Our code is available at https://github.com/SingularityUndefined/Unrolling-GSP-STForecast.

Efficient Learning of Balanced Signed Graphs via Iterative Linear Programming

Sep 12, 2024

Abstract:Signed graphs are equipped with both positive and negative edge weights, encoding pairwise correlations as well as anti-correlations in data. A balanced signed graph has no cycles with an odd number of negative edges. The Laplacian of a balanced signed graph has eigenvectors that map simply to ones in a similarity-transformed positive graph Laplacian, thus enabling reuse of well-studied spectral filters designed for positive graphs. We propose a fast method to learn a balanced signed graph Laplacian directly from data. Specifically, for each node $i$, to determine its polarity $\beta_i \in \{-1,1\}$ and edge weights $\{w_{i,j}\}_{j=1}^N$, we extend a sparse inverse covariance formulation based on linear programming (LP) called CLIME, by adding linear constraints to enforce "consistent" signs of edge weights $\{w_{i,j}\}_{j=1}^N$ with the polarities of connected nodes -- i.e., positive/negative edges connect nodes of same/opposing polarities. For each LP, we adapt projections onto convex sets (POCS) to determine a suitable CLIME parameter $\rho > 0$ that guarantees LP feasibility. We solve the resulting LP via an off-the-shelf LP solver in $\mathcal{O}(N^{2.055})$. Experiments on synthetic and real-world datasets show that our balanced graph learning method outperforms competing methods and enables the use of spectral filters and graph convolutional networks (GCNs) designed for positive graphs on signed graphs.
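The balance property and the similarity transform described above can be checked on a toy graph. The sketch below is not the CLIME-based learning method; it only tests whether a given signed graph is balanced and, if so, maps its Laplacian to a positive graph Laplacian. The function names `find_polarities` and `signed_laplacian` are hypothetical, the graph is assumed connected, and the degree is assumed to be $D_{ii} = \sum_j |w_{i,j}|$ (one of several conventions for signed graphs).

```python
import numpy as np
from collections import deque

def find_polarities(W):
    """Return beta in {-1, +1}^N if the connected signed graph W is balanced, else None."""
    n = W.shape[0]
    beta = np.zeros(n, dtype=int)   # 0 marks "not yet visited"
    beta[0] = 1
    queue = deque([0])
    while queue:
        i = queue.popleft()
        for j in range(n):
            if W[i, j] == 0:
                continue
            # Positive edges join equal polarities, negative edges opposite ones.
            expected = beta[i] * (1 if W[i, j] > 0 else -1)
            if beta[j] == 0:
                beta[j] = expected
                queue.append(j)
            elif beta[j] != expected:
                return None         # inconsistent cycle: graph is unbalanced
    return beta

def signed_laplacian(W):
    """L = D - W with D_ii = sum_j |w_ij| (assumed convention)."""
    return np.diag(np.abs(W).sum(axis=1)) - W

# Toy triangle (hypothetical weights) with two negative edges: balanced.
W = np.array([[0.0, 1.0, -2.0],
              [1.0, 0.0, -3.0],
              [-2.0, -3.0, 0.0]])
beta = find_polarities(W)
T = np.diag(beta)
L_pos = T @ signed_laplacian(W) @ T   # similarity transform, since T = T^{-1}

# Flipping one edge sign leaves a cycle with one negative edge: unbalanced.
W_bad = np.abs(W)
W_bad[0, 1] = W_bad[1, 0] = -1.0
```

Because `T` is its own inverse, `L_pos` has the same eigenvalues as the signed Laplacian, and its eigenvectors differ only by the sign flips in `beta`.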

Constructing an Interpretable Deep Denoiser by Unrolling Graph Laplacian Regularizer

Sep 10, 2024

Abstract:An image denoiser can be used for a wide range of restoration problems via the Plug-and-Play (PnP) architecture. In this paper, we propose a general framework to build an interpretable graph-based deep denoiser (GDD) by unrolling a solution to a maximum a posteriori (MAP) problem equipped with a graph Laplacian regularizer (GLR) as signal prior. Leveraging a recent theorem showing that any (pseudo-)linear denoiser $\boldsymbol \Psi$, under mild conditions, can be mapped to a solution of a MAP denoising problem regularized using GLR, we first initialize a graph Laplacian matrix $\mathbf L$ via truncated Taylor Series Expansion (TSE) of $\boldsymbol \Psi^{-1}$. Then, we compute the MAP linear system solution by unrolling iterations of the conjugate gradient (CG) algorithm into a sequence of neural layers as a feed-forward network -- one that is amenable to parameter tuning. The resulting GDD network is "graph-interpretable", low in parameter count, and easy to initialize thanks to $\mathbf L$ derived from a known well-performing denoiser $\boldsymbol \Psi$. Experimental results show that GDD achieves image denoising performance competitive with existing schemes while employing far fewer parameters, and is more robust to covariate shift.
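A minimal sketch of the linear system behind GLR-regularized MAP denoising: assuming the common formulation $\min_{\mathbf x} \|\mathbf y - \mathbf x\|_2^2 + \mu\, \mathbf x^\top \mathbf L \mathbf x$, the solution satisfies $(\mathbf I + \mu \mathbf L)\mathbf x = \mathbf y$, which a fixed number of CG iterations can approximate. The function name `glr_denoise_cg`, the path graph, and the value of `mu` are assumptions for illustration; the paper's network additionally learns parameters per layer, which is not shown here.

```python
import numpy as np

def glr_denoise_cg(y, L, mu, num_iters):
    """Approximate the solution of (I + mu * L) x = y with a fixed number of CG steps."""
    A = np.eye(len(y)) + mu * L
    x = np.zeros(len(y))
    r = y - A @ x                 # initial residual
    p = r.copy()                  # initial search direction
    rs_old = r @ r
    for _ in range(num_iters):    # each iteration corresponds to one unrolled layer
        if rs_old < 1e-24:        # already converged; avoid dividing by ~0
            break
        Ap = A @ p
        alpha = rs_old / (p @ Ap)
        x = x + alpha * p
        r = r - alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs_old) * p
        rs_old = rs_new
    return x

# Toy 5-node path graph (hypothetical) and a noisy piecewise-constant signal.
n = 5
W = np.zeros((n, n))
for i in range(n - 1):
    W[i, i + 1] = W[i + 1, i] = 1.0
L = np.diag(W.sum(axis=1)) - W
y = np.array([1.0, 1.2, 0.9, 3.0, 3.1])
x_cg = glr_denoise_cg(y, L, mu=0.5, num_iters=5)
```

Since $\mathbf I + \mu \mathbf L$ is symmetric positive definite, CG applies directly, and truncating it after a few iterations gives a network of fixed depth.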

Graph Unfolding and Sampling for Transitory Video Summarization via Gershgorin Disc Alignment

Aug 03, 2024

Abstract:User-generated videos (UGVs) uploaded from mobile phones to social media sites like YouTube and TikTok are short and non-repetitive. We summarize a transitory UGV into several keyframes in linear time via fast graph sampling based on Gershgorin disc alignment (GDA). Specifically, we first model a sequence of $N$ frames in a UGV as an $M$-hop path graph $\mathcal{G}^o$ for $M \ll N$, where the similarity between two frames within $M$ time instants is encoded as a positive edge based on feature similarity. Towards efficient sampling, we then "unfold" $\mathcal{G}^o$ to a $1$-hop path graph $\mathcal{G}$, specified by a generalized graph Laplacian matrix $\mathcal{L}$, via one of two graph unfolding procedures with provable performance bounds. We show that maximizing the smallest eigenvalue $\lambda_{\min}(\mathbf{B})$ of a coefficient matrix $\mathbf{B} = \textit{diag}\left(\mathbf{h}\right) + \mu \mathcal{L}$, where $\mathbf{h}$ is the binary keyframe selection vector, is equivalent to minimizing a worst-case signal reconstruction error. We maximize instead the Gershgorin circle theorem (GCT) lower bound $\lambda^-_{\min}(\mathbf{B})$ by choosing $\mathbf{h}$ via a new fast graph sampling algorithm that iteratively aligns left-ends of Gershgorin discs for all graph nodes (frames). Extensive experiments on multiple short video datasets show that our algorithm achieves comparable or better video summarization performance compared to state-of-the-art methods, at a substantially reduced complexity.
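The GCT lower bound mentioned above can be computed in a few lines. The sketch below evaluates the left-ends of the Gershgorin discs of $\mathbf S \mathbf B \mathbf S^{-1}$ for a positive diagonal scaling $\mathbf S = \text{diag}(\mathbf s)$; the smallest left-end is a lower bound on $\lambda_{\min}(\mathbf B)$ because the similarity transform preserves eigenvalues. It does not implement the sampling algorithm itself. The function name `disc_left_ends`, the plain 4-node path graph, the sampling vector `h`, and the hand-picked scalars `s` are assumptions for illustration.

```python
import numpy as np

def disc_left_ends(B, s):
    """Left-ends of the Gershgorin discs of S B S^{-1}, with S = diag(s) and s > 0."""
    off = np.abs(B) - np.diag(np.abs(np.diag(B)))   # |off-diagonal| entries only
    radii = s * (off @ (1.0 / s))                    # r_i = s_i * sum_{j != i} |B_ij| / s_j
    return np.diag(B) - radii

# Toy 4-node path graph (hypothetical) with nodes 1 and 3 selected as samples.
n, mu = 4, 1.0
W = np.zeros((n, n))
for i in range(n - 1):
    W[i, i + 1] = W[i + 1, i] = 1.0
L = np.diag(W.sum(axis=1)) - W
h = np.array([0.0, 1.0, 0.0, 1.0])
B = np.diag(h) + mu * L

unscaled_bound = disc_left_ends(B, np.ones(n)).min()
scaled_bound = disc_left_ends(B, np.array([1.0, 1.2, 1.0, 1.2])).min()
lam_min = np.linalg.eigvalsh(B)[0]
```

Without scaling, every disc of an unsampled node has its left-end at zero, so the bound is uninformative. Enlarging the scalars of sampled nodes shrinks the discs of their neighbors and moves the left-ends closer together, which is the intuition behind aligning disc left-ends.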

Unrolling Plug-and-Play Gradient Graph Laplacian Regularizer for Image Restoration

Jul 01, 2024

Abstract:Generic deep learning (DL) networks for image restoration like denoising and interpolation lack mathematical interpretability, require voluminous training data to tune a large parameter set, and are fragile under covariate shift. To address these shortcomings, for a general linear image formation model, we first formulate a convex optimization problem with a new graph smoothness prior called gradient graph Laplacian regularizer (GGLR) that promotes piecewise planar (PWP) signal reconstruction. To solve the posed problem, we introduce a variable number of auxiliary variables to create a family of Plug-and-Play (PnP) ADMM algorithms and unroll them into variable-complexity feed-forward networks, amenable to parameter tuning via back-propagation. More complex unrolled networks require more labeled data to train more parameters, but have better potential performance. Experimental results show that our unrolled networks perform competitively with generic DL networks in image restoration quality while using a small fraction of parameters, and demonstrate improved robustness to covariate shift.

Interpretable Lightweight Transformer via Unrolling of Learned Graph Smoothness Priors

Jun 06, 2024

Abstract:We build interpretable and lightweight transformer-like neural networks by unrolling iterative optimization algorithms that minimize graph smoothness priors -- the quadratic graph Laplacian regularizer (GLR) and the $\ell_1$-norm graph total variation (GTV) -- subject to an interpolation constraint. The crucial insight is that a normalized signal-dependent graph learning module amounts to a variant of the basic self-attention mechanism in conventional transformers. Unlike "black-box" transformers that require learning of large key, query and value matrices to compute scaled dot products as affinities and subsequent output embeddings, resulting in huge parameter sets, our unrolled networks employ shallow CNNs to learn low-dimensional features per node to establish pairwise Mahalanobis distances and construct sparse similarity graphs. At each layer, given a learned graph, the target interpolated signal is simply a low-pass filtered output derived from the minimization of an assumed graph smoothness prior, leading to a dramatic reduction in parameter count. Experiments for two image interpolation applications verify the restoration performance, parameter efficiency and robustness to covariate shift of our graph-based unrolled networks compared to conventional transformers.
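To make the link between graph learning and self-attention concrete, the sketch below builds row-normalized edge weights from pairwise Mahalanobis distances between per-node features. Row normalization of $\exp(-d_{i,j})$ is a softmax over negative distances, which is the resemblance to attention weights. The exact normalization used in the paper is not stated in the abstract, so this form is an assumption, as are the function name `normalized_graph_weights`, the toy features `F`, and the identity metric. In the paper the features come from a shallow CNN; here they are given directly.

```python
import numpy as np

def normalized_graph_weights(F, M):
    """Row-normalized edge weights from squared Mahalanobis distances.

    F: (N, K) array of node features, one row per node.
    M: (K, K) positive semi-definite metric matrix.
    """
    diff = F[:, None, :] - F[None, :, :]                 # (N, N, K) pairwise differences
    dist = np.einsum("ijk,kl,ijl->ij", diff, M, diff)    # d_ij = diff_ij^T M diff_ij
    W = np.exp(-dist)
    np.fill_diagonal(W, 0.0)                             # no self-loops
    return W / W.sum(axis=1, keepdims=True)              # each row sums to one

# Toy features (hypothetical): nodes 0 and 1 are close, node 2 is far away.
F = np.array([[0.0, 0.0],
              [0.1, 0.0],
              [2.0, 0.0]])
A = normalized_graph_weights(F, np.eye(2))
```

Nodes with similar features receive large mutual weights, so the subsequent low-pass filtering mostly averages over similar nodes.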

Signal Processing in the Retina: Interpretable Graph Classifier to Predict Ganglion Cell Responses

Jan 03, 2024

Abstract:It is a popular hypothesis in neuroscience that ganglion cells in the retina are activated by selectively detecting visual features in an observed scene. While ganglion cell firings can be predicted via data-trained deep neural nets, the networks remain indecipherable, thus providing little understanding of the cells' underlying operations. To extract knowledge from the cell firings, in this paper we learn an interpretable graph-based classifier from data to predict the firings of ganglion cells in response to visual stimuli. Specifically, we learn a positive semi-definite (PSD) metric matrix $\mathbf{M} \succeq 0$ that defines Mahalanobis distances between graph nodes (visual events) endowed with pre-computed feature vectors; the computed inter-node distances lead to edge weights and a combinatorial graph that is amenable to binary classification. Mathematically, we define the objective of metric matrix $\mathbf{M}$ optimization using a graph adaptation of large margin nearest neighbor (LMNN), which is rewritten as a semi-definite programming (SDP) problem. We solve it efficiently via a fast approximation called Gershgorin disc perfect alignment (GDPA) linearization. The learned metric matrix $\mathbf{M}$ provides interpretability: important features are identified along $\mathbf{M}$'s diagonal, and their mutual relationships are inferred from off-diagonal terms. Our fast metric learning framework can be applied to other biological systems with pre-chosen features that require interpretation.

Volumetric 3D Point Cloud Attribute Compression: Learned polynomial bilateral filter for prediction

Nov 22, 2023

Abstract:We extend a previous study on a 3D point cloud attribute compression scheme that uses a volumetric approach: given a target volumetric attribute function $f : \mathbb{R}^3 \mapsto \mathbb{R}$, we quantize and encode parameters $\theta$ that characterize $f$ at the encoder, for reconstruction $f_{\hat{\theta}}(\mathbf{x})$ at known 3D points $\mathbf{x}$ at the decoder. Specifically, parameters $\theta$ are quantized coefficients of B-spline basis vectors $\mathbf{\Phi}_l$ (for order $p \geq 2$) that span the function space $\mathcal{F}_l^{(p)}$ at a particular resolution $l$, which are coded from coarse to fine resolutions for scalability. In this work, we focus on the prediction of finer-grained coefficients given coarser-grained ones by learning parameters of a polynomial bilateral filter (PBF) from data. PBF is a pseudo-linear filter that is signal-dependent with a graph spectral interpretation common in the graph signal processing (GSP) field. We demonstrate PBF's predictive performance over a linear predictor inspired by MPEG standardization over a wide range of point cloud datasets.

Learned Nonlinear Predictor for Critically Sampled 3D Point Cloud Attribute Compression

Nov 22, 2023

Abstract:We study 3D point cloud attribute compression via a volumetric approach: assuming point cloud geometry is known at both encoder and decoder, parameters $\theta$ of a continuous attribute function $f: \mathbb{R}^3 \mapsto \mathbb{R}$ are quantized to $\hat{\theta}$ and encoded, so that discrete samples $f_{\hat{\theta}}(\mathbf{x}_i)$ can be recovered at known 3D points $\mathbf{x}_i \in \mathbb{R}^3$ at the decoder. Specifically, we consider a nested sequence of function subspaces $\mathcal{F}^{(p)}_{l_0} \subseteq \cdots \subseteq \mathcal{F}^{(p)}_L$, where $\mathcal{F}_l^{(p)}$ is a family of functions spanned by B-spline basis functions of order $p$, $f_l^*$ is the projection of $f$ on $\mathcal{F}_l^{(p)}$ and encoded as low-pass coefficients $F_l^*$, and $g_l^*$ is the residual function in orthogonal subspace $\mathcal{G}_l^{(p)}$ (where $\mathcal{G}_l^{(p)} \oplus \mathcal{F}_l^{(p)} = \mathcal{F}_{l+1}^{(p)}$) and encoded as high-pass coefficients $G_l^*$. In this paper, to improve coding performance over [1], we study predicting $f_{l+1}^*$ at level $l+1$ given $f_l^*$ at level $l$ and encoding of $G_l^*$ for the $p=1$ case (RAHT($1$)). For the prediction, we formalize RAHT(1) linear prediction in MPEG-PCC in a theoretical framework, and propose a new nonlinear predictor using a polynomial of a bilateral filter. We derive equations to efficiently compute the critically sampled high-pass coefficients $G_l^*$ amenable to encoding. We optimize parameters in our resulting feed-forward network on a large training set of point clouds by minimizing a rate-distortion Lagrangian. Experimental results show that our improved framework outperformed the MPEG G-PCC predictor by $11$ to $12\%$ in bit rate reduction.
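For readers unfamiliar with RAHT, the sketch below shows the two-point weighted butterfly on which the region-adaptive hierarchical transform is commonly built: two coefficients with weights $w_1$ and $w_2$ (the number of points each represents) are rotated into one low-pass and one high-pass coefficient. This is the generic textbook form, not the paper's predictor or its critically sampled coefficient equations. The function names `raht_butterfly` and `raht_inverse` and the sample values are hypothetical.

```python
import math

def raht_butterfly(a1, a2, w1, w2):
    """Orthonormal weighted butterfly: returns (low, high, merged_weight)."""
    s = math.sqrt(w1 + w2)
    c1, c2 = math.sqrt(w1) / s, math.sqrt(w2) / s
    low = c1 * a1 + c2 * a2       # passed on to the next coarser level
    high = -c2 * a1 + c1 * a2     # quantized and entropy coded
    return low, high, w1 + w2

def raht_inverse(low, high, w1, w2):
    """Inverse butterfly; the forward matrix is a rotation, so its inverse is its transpose."""
    s = math.sqrt(w1 + w2)
    c1, c2 = math.sqrt(w1) / s, math.sqrt(w2) / s
    return c1 * low - c2 * high, c2 * low + c1 * high

# Toy example (hypothetical values): merge a 1-point node with a 3-point node.
low, high, w = raht_butterfly(10.0, 14.0, 1.0, 3.0)
a1_rec, a2_rec = raht_inverse(low, high, 1.0, 3.0)
```

Because the butterfly is a rotation, it preserves energy, so quantization error in the transform domain carries over unchanged to the attribute domain.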
