Felix Jonathan

Decentralized Uncertainty-Aware Active Search with a Team of Aerial Robots

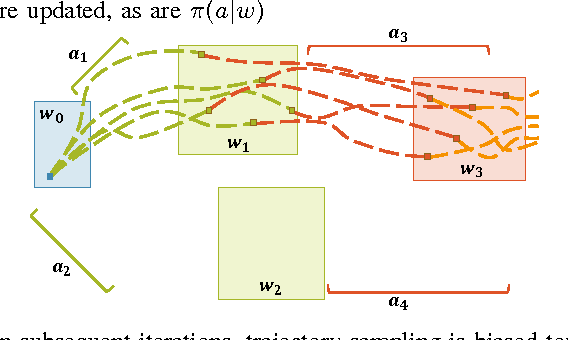

Oct 11, 2024

Abstract:Rapid search and rescue is critical to maximizing survival rates following natural disasters. However, these efforts are challenged by the need to search large disaster zones, lack of reliability in the communications infrastructure, and a priori unknown numbers of objects of interest (OOIs), such as injured survivors. Aerial robots are increasingly being deployed for search and rescue due to their high mobility, but there remains a gap in deploying multi-robot autonomous aerial systems for methodical search of large environments. Prior works have relied on preprogrammed paths from human operators or are evaluated only in simulation. We bridge these gaps in the state of the art by developing and demonstrating a decentralized active search system, which biases its trajectories to take additional views of uncertain OOIs. The methodology leverages stochasticity for rapid coverage in communication-denied scenarios. When communications are available, robots share poses, goals, and OOI information to accelerate the rate of search. Extensive simulations and hardware experiments in Bloomingdale, OH, are conducted to validate the approach. The results demonstrate the active search approach outperforms greedy coverage-based planning in communication-denied scenarios while maintaining comparable performance in communication-enabled scenarios.

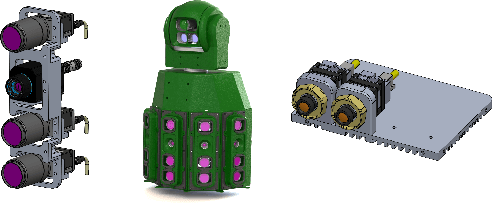

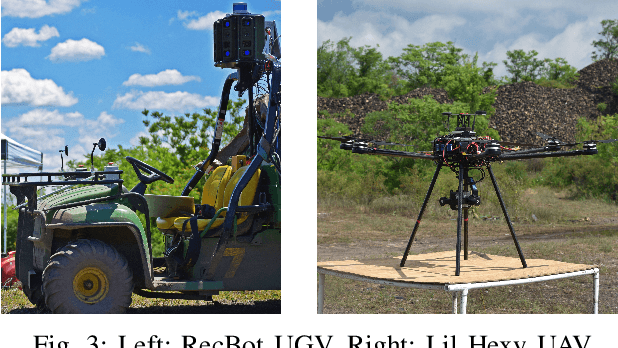

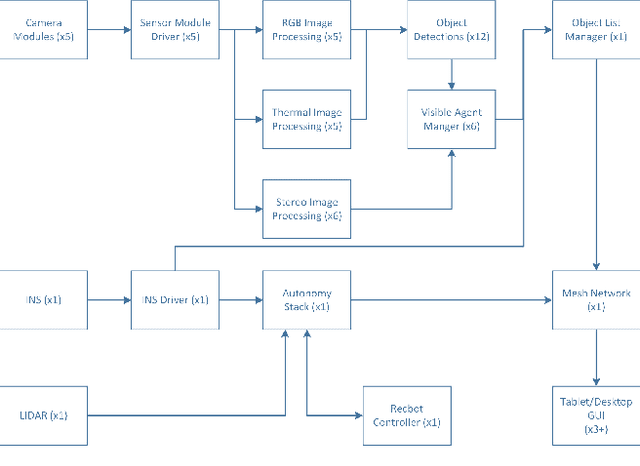

UGV-UAV Object Geolocation in Unstructured Environments

Jan 14, 2022

Abstract:A robotic system of multiple unmanned ground vehicles (UGVs) and unmanned aerial vehicles (UAVs) has the potential for advancing autonomous object geolocation performance. Much research has focused on algorithmic improvements to individual components, such as navigation, motion planning, and perception. In this paper, we present a UGV-UAV object detection and geolocation system, which performs perception, navigation, and planning autonomously at real scale in unstructured environments. We designed novel sensor pods equipped with a multispectral (visible, near-infrared, thermal), high-resolution (181.6 megapixels), stereo (near-infrared pair), wide-field-of-view (192-degree HFOV) array. We developed a novel on-board software-hardware architecture to process the high-volume sensor data in real time, and we built a custom AI subsystem composed of detection, tracking, navigation, and planning for autonomous object geolocation in real time. This research is the first real-scale demonstration of such high-speed data processing capability. Our novel modular sensor pod can boost relevant computer vision and machine learning research. Our novel hardware-software architecture is a solid foundation for system-level and component-level research. Our system is validated through data-driven offline tests as well as a series of field tests in unstructured environments. We present quantitative results as well as discussions of key robotic system-level challenges that arise when building and testing the system. This system is the first step toward a UGV-UAV cooperative reconnaissance system in the future.

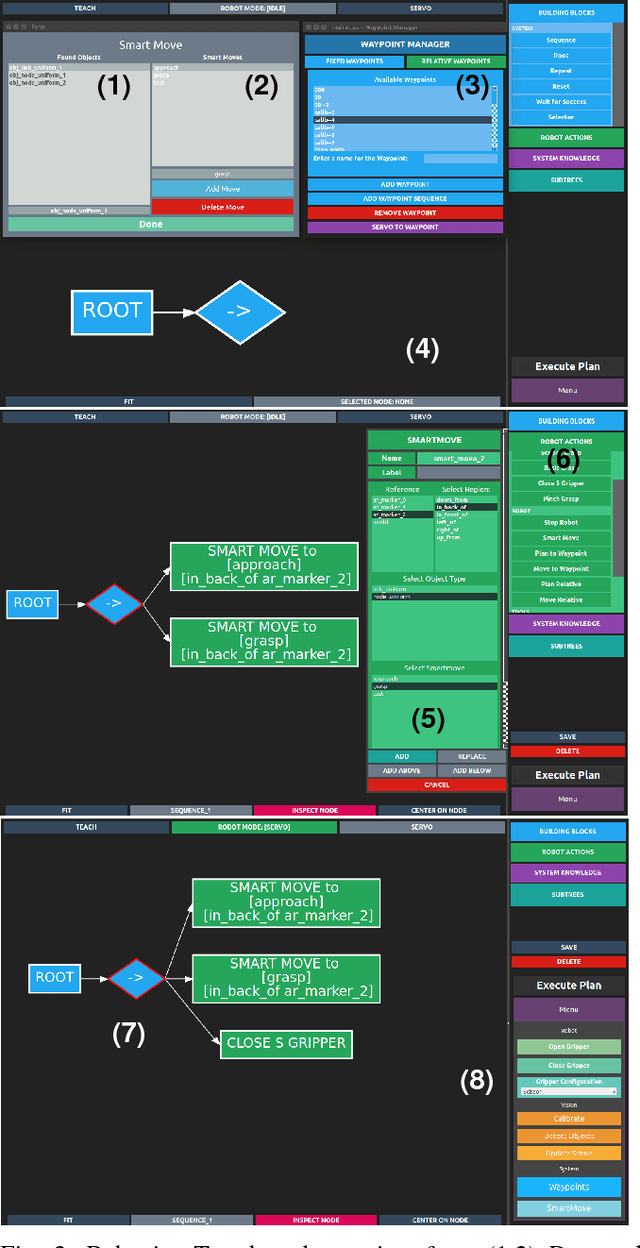

Evaluating Methods for End-User Creation of Robot Task Plans

Nov 06, 2018

Abstract:How can we enable users to create effective, perception-driven task plans for collaborative robots? We conducted a 35-person user study with the Behavior Tree-based CoSTAR system to determine which strategies for end-user creation of generalizable robot task plans are most usable and effective. CoSTAR allows domain experts to author complex, perceptually grounded task plans for collaborative robots. As a part of CoSTAR's wide range of capabilities, it allows users to specify SmartMoves: abstract goals such as "pick up component A from the right side of the table." Users were asked to perform pick-and-place assembly tasks with either SmartMoves or one of three simpler baseline versions of CoSTAR. Overall, participants found CoSTAR to be highly usable, with an average System Usability Scale score of 73.4 out of 100. SmartMoves also helped users perform tasks faster and more effectively; all SmartMove users completed the first two tasks, while not all users completed the tasks using the other strategies. SmartMove users also performed better at incorporating perception across all three tasks.

* 7 pages; IROS 2018

Temporal and Physical Reasoning for Perception-Based Robotic Manipulation

Oct 11, 2017

Abstract:Accurate knowledge of object poses is crucial to successful robotic manipulation tasks, and yet most current approaches only work in laboratory settings. Noisy sensors and cluttered scenes interfere with accurate pose recognition, which is problematic especially when performing complex tasks involving object interactions. This is because most pose estimation algorithms focus only on estimating objects from a single frame, which means they lack continuity between frames. Further, they often do not consider resulting physical properties of the predicted scene such as intersecting objects or objects in unstable positions. In this work, we enhance the accuracy and stability of estimated poses for a whole scene by enforcing these physical constraints over time through the integration of a physics simulation. This allows us to accurately determine relationships between objects for a construction task. Scene parsing performance was evaluated on both simulated and real-world data. We apply our method to a real-world block stacking task, where the robot must build a tall tower of colored blocks.

User Experience of the CoSTAR System for Instruction of Collaborative Robots

Mar 23, 2017

Abstract:How can we enable novice users to create effective task plans for collaborative robots? Must there be a tradeoff between generalizability and ease of use? To answer these questions, we conducted a user study with the CoSTAR system, which integrates perception and reasoning into a Behavior Tree-based task plan editor. In our study, we asked novice users to perform simple pick-and-place assembly tasks under varying perception and planning capabilities. Our study shows that users found Behavior Trees to be an effective way of specifying task plans. Furthermore, with the addition of CoSTAR's planning, perception, and reasoning capabilities, users were able to author task plans more quickly, more effectively, and in a more general way. Despite these improvements, concepts associated with these capabilities were rated by users as less usable, and our results suggest a direction for further refinement.

Do What I Want, Not What I Did: Imitation of Skills by Planning Sequences of Actions

Dec 05, 2016

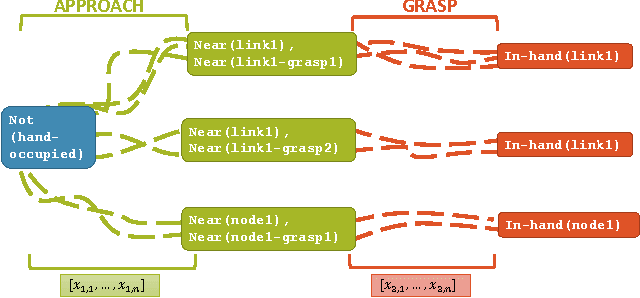

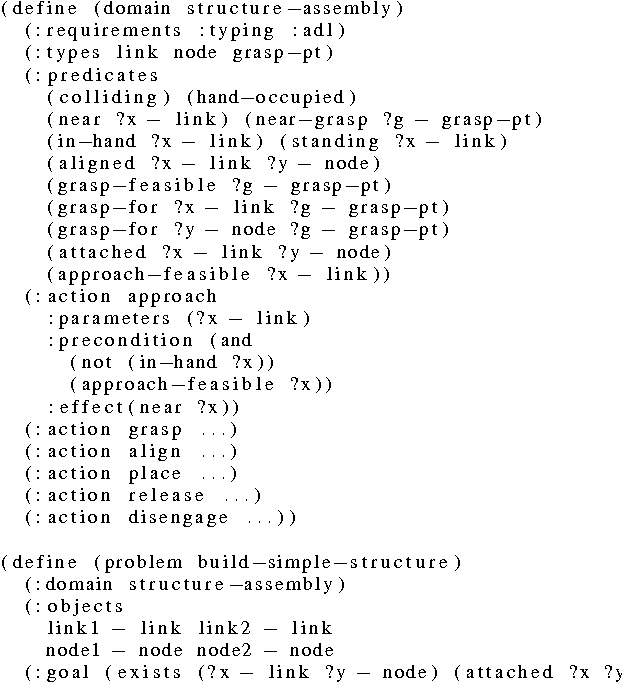

Abstract:We propose a learning-from-demonstration approach for grounding actions from expert data and an algorithm for using these actions to perform a task in new environments. Our approach is based on an application of sampling-based motion planning to search through the tree of discrete, high-level actions constructed from a symbolic representation of a task. Recursive sampling-based planning is used to explore the space of possible continuous-space instantiations of these actions. We demonstrate the utility of our approach with a magnetic structure assembly task, showing that the robot can intelligently select a sequence of actions in different parts of the workspace and in the presence of obstacles. This approach can better adapt to new environments by selecting the correct high-level actions for the particular environment while taking human preferences into account.

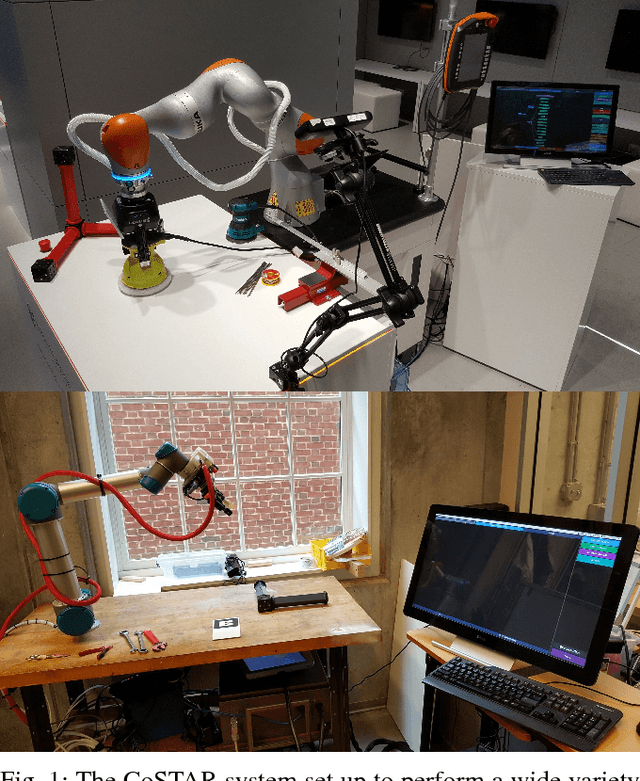

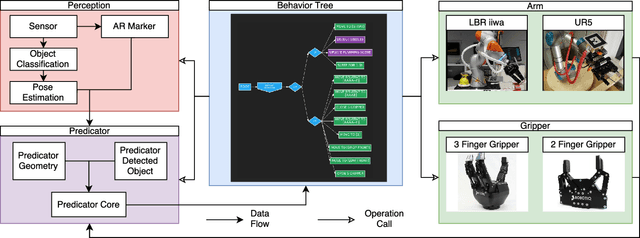

CoSTAR: Instructing Collaborative Robots with Behavior Trees and Vision

Nov 18, 2016

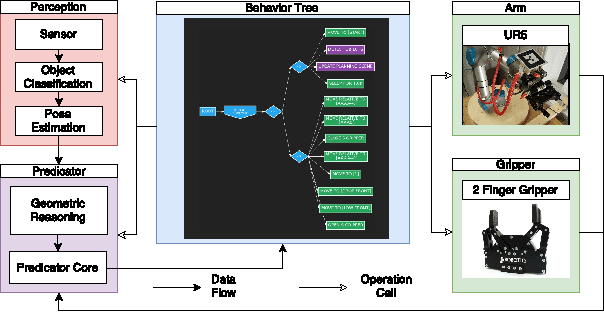

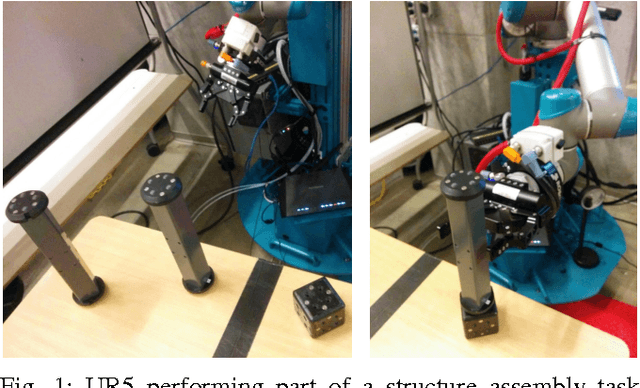

Abstract:For collaborative robots to become useful, end users who are not robotics experts must be able to instruct them to perform a variety of tasks. With this goal in mind, we developed a system for end-user creation of robust task plans with a broad range of capabilities. CoSTAR, the Collaborative System for Task Automation and Recognition, is our winning entry in the 2016 KUKA Innovation Award competition at the Hannover Messe trade show, which this year focused on Flexible Manufacturing. CoSTAR is unique in how it creates natural abstractions that use perception to represent the world in a way users can both understand and utilize to author capable and robust task plans. Our Behavior Tree-based task editor integrates high-level information from known object segmentation and pose estimation with spatial reasoning and robot actions to create robust task plans. We describe the cross-platform design and implementation of this system on multiple industrial robots and evaluate its suitability for a wide variety of use cases.
