Fatma Güney

Mapping like a Skeptic: Probabilistic BEV Projection for Online HD Mapping

Aug 29, 2025

Abstract:Constructing high-definition (HD) maps from sensory input requires accurately mapping the road elements in image space to the Bird's Eye View (BEV) space. The precision of this mapping directly impacts the quality of the final vectorized HD map. Existing HD mapping approaches outsource the projection to standard mapping techniques, such as attention-based ones. However, these methods struggle with accuracy due to generalization problems, often hallucinating non-existent road elements. Our key idea is to start with a geometric mapping based on camera parameters and adapt it to the scene to extract relevant map information from camera images. To implement this, we propose a novel probabilistic projection mechanism with confidence scores to (i) refine the mapping to better align with the scene and (ii) filter out irrelevant elements that should not influence HD map generation. In addition, we improve temporal processing by using confidence scores to selectively accumulate reliable information over time. Experiments on new splits of the nuScenes and Argoverse2 datasets demonstrate improved performance over state-of-the-art approaches, indicating better generalization. The improvements are particularly pronounced on nuScenes and in the challenging long perception range. Our code and model checkpoints are available at https://github.com/Fatih-Erdogan/mapping-like-skeptic.
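
The "geometric mapping based on camera parameters" mentioned in the abstract can be illustrated with the standard pinhole camera model. The following is only a minimal sketch of that generic starting point, not the paper's probabilistic projection: the function name `project_to_image`, the argument layout, and the example calibration values are all hypothetical.

```python
def project_to_image(point_ego, rotation, translation, intrinsics):
    """Project a 3D point from the ego frame to pixel coordinates.

    Assumed convention (hypothetical, for illustration only):
    rotation (3x3) and translation (length 3) map ego coordinates to
    camera coordinates, and intrinsics is the 3x3 matrix K.
    Returns (u, v, depth), or None if the point is behind the camera.
    """
    # Ego frame -> camera frame: X_cam = R @ X_ego + t
    cam = [
        sum(rotation[i][j] * point_ego[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]
    if cam[2] <= 0.0:
        return None  # not visible: the point lies behind the image plane

    # Camera frame -> homogeneous pixel coordinates: p = K @ X_cam
    pix = [sum(intrinsics[i][j] * cam[j] for j in range(3)) for i in range(3)]

    # Perspective division gives the pixel location.
    return pix[0] / pix[2], pix[1] / pix[2], cam[2]


# Illustrative calibration values only (not taken from any dataset).
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
K = [[1000.0, 0.0, 800.0], [0.0, 1000.0, 450.0], [0.0, 0.0, 1.0]]

print(project_to_image([1.0, 0.5, 10.0], IDENTITY, [0.0, 0.0, 0.0], K))
```

In a BEV setting, each grid cell centre would be projected this way to find the image location it samples from; per the abstract, the proposed method then adapts this fixed mapping to the scene and attaches confidence scores, which this sketch does not attempt to reproduce.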

ETA: Efficiency through Thinking Ahead, A Dual Approach to Self-Driving with Large Models

Jun 09, 2025

Abstract:How can we benefit from large models without sacrificing inference speed, a common dilemma in self-driving systems? A prevalent solution is a dual-system architecture, employing a small model for rapid, reactive decisions and a larger model for slower but more informative analyses. Existing dual-system designs often implement parallel architectures where inference is either directly conducted using the large model at each current frame or retrieved from previously stored inference results. However, these works still struggle to enable large models for a timely response to every online frame. Our key insight is to shift intensive computations of the current frame to previous time steps and perform a batch inference of multiple time steps to make large models respond promptly to each time step. To achieve the shifting, we introduce Efficiency through Thinking Ahead (ETA), an asynchronous system designed to: (1) propagate informative features from the past to the current frame using future predictions from the large model, (2) extract current frame features using a small model for real-time responsiveness, and (3) integrate these dual features via an action mask mechanism that emphasizes action-critical image regions. Evaluated on the Bench2Drive CARLA Leaderboard-v2 benchmark, ETA advances state-of-the-art performance by 8% with a driving score of 69.53 while maintaining a near-real-time inference speed at 50 ms.

Track-On: Transformer-based Online Point Tracking with Memory

Jan 30, 2025

Abstract:In this paper, we consider the problem of long-term point tracking, which requires consistent identification of points across multiple frames in a video, despite changes in appearance, lighting, perspective, and occlusions. We target online tracking on a frame-by-frame basis, making it suitable for real-world, streaming scenarios. Specifically, we introduce Track-On, a simple transformer-based model designed for online long-term point tracking. Unlike prior methods that depend on full temporal modeling, our model processes video frames causally without access to future frames, leveraging two memory modules -- spatial memory and context memory -- to capture temporal information and maintain reliable point tracking over long time horizons. At inference time, it employs patch classification and refinement to identify correspondences and track points with high accuracy. Through extensive experiments, we demonstrate that Track-On sets a new state-of-the-art for online models and delivers superior or competitive results compared to offline approaches on seven datasets, including the TAP-Vid benchmark. Our method offers a robust and scalable solution for real-time tracking in diverse applications. Project page: https://kuis-ai.github.io/track_on

Segment-Level Road Obstacle Detection Using Visual Foundation Model Priors and Likelihood Ratios

Dec 07, 2024

Abstract:Detecting road obstacles is essential for autonomous vehicles to navigate dynamic and complex traffic environments safely. Current road obstacle detection methods typically assign a score to each pixel and apply a threshold to generate final predictions. However, selecting an appropriate threshold is challenging, and the per-pixel classification approach often leads to fragmented predictions with numerous false positives. In this work, we propose a novel method that leverages segment-level features from visual foundation models and likelihood ratios to predict road obstacles directly. By focusing on segments rather than individual pixels, our approach enhances detection accuracy, reduces false positives, and offers increased robustness to scene variability. We benchmark our approach against existing methods on the RoadObstacle and LostAndFound datasets, achieving state-of-the-art performance without needing a predefined threshold.

O1O: Grouping of Known Classes to Identify Unknown Objects as Odd-One-Out

Oct 10, 2024

Abstract:Object detection methods trained on a fixed set of known classes struggle to detect objects of unknown classes in the open-world setting. Current fixes involve adding approximate supervision with pseudo-labels corresponding to candidate locations of objects, typically obtained in a class-agnostic manner. While previous approaches mainly rely on the appearance of objects, we find that geometric cues improve unknown recall. Although additional supervision from pseudo-labels helps to detect unknown objects, it also introduces confusion for known classes. We observed a notable decline in the model's performance for detecting known objects in the presence of noisy pseudo-labels. Drawing inspiration from studies on human cognition, we propose to group known classes into superclasses. By identifying similarities between classes within a superclass, we can identify unknown classes through an odd-one-out scoring mechanism. Our experiments on open-world detection benchmarks demonstrate significant improvements in unknown recall, consistently across all tasks. Crucially, we achieve this without compromising known performance, thanks to better partitioning of the feature space with superclasses.

Robust Bird's Eye View Segmentation by Adapting DINOv2

Sep 16, 2024

Abstract:Extracting a Bird's Eye View (BEV) representation from multiple camera images offers a cost-effective, scalable alternative to LIDAR-based solutions in autonomous driving. However, the performance of the existing BEV methods drops significantly under various corruptions such as brightness and weather changes or camera failures. To improve the robustness of BEV perception, we propose to adapt a large vision foundational model, DINOv2, to BEV estimation using Low Rank Adaptation (LoRA). Our approach builds on the strong representation space of DINOv2 by adapting it to the BEV task in a state-of-the-art framework, SimpleBEV. Our experiments show increased robustness of BEV perception under various corruptions, with increasing gains from scaling up the model and the input resolution. We also showcase the effectiveness of the adapted representations in terms of fewer learnable parameters and faster convergence during training.
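
For readers unfamiliar with Low Rank Adaptation, the standard LoRA update for a single linear layer can be written as below. This is the generic formulation, not a description of how the paper wires it into DINOv2 or SimpleBEV; the symbols $W_0$, $A$, $B$, $r$, $\alpha$, $d$, and $k$ are introduced here for illustration.

```latex
h = W_0 x + \frac{\alpha}{r}\, B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)
```

The pretrained weight $W_0$ stays frozen and only $A$ and $B$ are trained, so the layer has $r(d + k)$ learnable parameters instead of $dk$. This is consistent with the abstract's remark about fewer learnable parameters.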

Self-Evolving Depth-Supervised 3D Gaussian Splatting from Rendered Stereo Pairs

Sep 11, 2024

Abstract:3D Gaussian Splatting (GS) significantly struggles to accurately represent the underlying 3D scene geometry, resulting in inaccuracies and floating artifacts when rendering depth maps. In this paper, we address this limitation, undertaking a comprehensive analysis of the integration of depth priors throughout the optimization process of Gaussian primitives, and present a novel strategy for this purpose. This latter dynamically exploits depth cues from a readily available stereo network, processing virtual stereo pairs rendered by the GS model itself during training and achieving consistent self-improvement of the scene representation. Experimental results on three popular datasets, breaking ground as the first to assess depth accuracy for these models, validate our findings.

A Likelihood Ratio-Based Approach to Segmenting Unknown Objects

Sep 10, 2024

Abstract:Addressing the Out-of-Distribution (OoD) segmentation task is a prerequisite for perception systems operating in an open-world environment. Large foundational models are frequently used in downstream tasks; however, their potential for OoD remains mostly unexplored. We seek to leverage a large foundational model to achieve robust representation. Outlier supervision is a widely used strategy for improving OoD detection of the existing segmentation networks. However, current approaches for outlier supervision involve retraining parts of the original network, which is typically disruptive to the model's learned feature representation. Furthermore, retraining becomes infeasible in the case of large foundational models. Our goal is to retrain for outlier segmentation without compromising the strong representation space of the foundational model. To this end, we propose an adaptive, lightweight unknown estimation module (UEM) for outlier supervision that significantly enhances the OoD segmentation performance without affecting the learned feature representation of the original network. UEM learns a distribution for outliers and a generic distribution for known classes. Using the learned distributions, we propose a likelihood-ratio-based outlier scoring function that fuses the confidence of UEM with that of the pixel-wise segmentation inlier network to detect unknown objects. We also propose an objective to optimize this score directly. Our approach achieves a new state-of-the-art across multiple datasets, outperforming the previous best method by 5.74% average precision points while having a lower false-positive rate. Importantly, strong inlier performance remains unaffected.
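
The idea of scoring with a likelihood ratio between an outlier distribution and a known-class distribution can be sketched in a toy setting. The example below assumes one-dimensional Gaussian densities over a scalar feature purely for illustration; the abstract does not specify the form of the learned distributions, and the fusion with the segmentation network's confidence is not shown. All function names and parameter values are hypothetical.

```python
import math


def gaussian_log_pdf(x, mean, std):
    """Log density of a 1D Gaussian N(mean, std^2) evaluated at x."""
    var = std * std
    return -0.5 * math.log(2.0 * math.pi * var) - (x - mean) ** 2 / (2.0 * var)


def log_likelihood_ratio(x, outlier_params, inlier_params):
    """Outlier score: log p_out(x) - log p_in(x).

    Positive values mean x is better explained by the outlier
    distribution; negative values favour the known-class distribution.
    """
    return gaussian_log_pdf(x, *outlier_params) - gaussian_log_pdf(x, *inlier_params)


# Toy (mean, std) parameters chosen only for illustration.
INLIER = (0.0, 1.0)
OUTLIER = (3.0, 1.0)

for feature in (0.0, 1.5, 3.0):
    print(feature, log_likelihood_ratio(feature, OUTLIER, INLIER))
```

With equal variances, as in this toy setup, the score is linear in the feature and crosses zero midway between the two means, so the ratio itself supplies a natural decision boundary.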

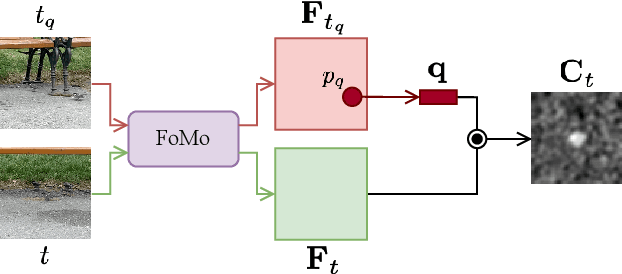

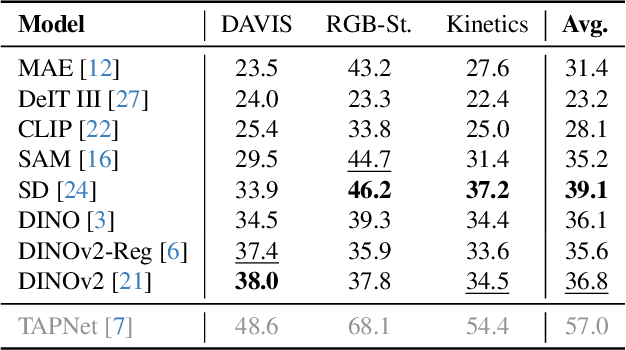

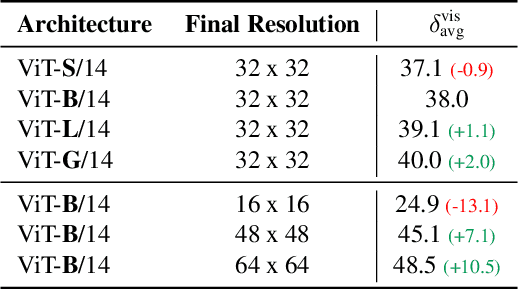

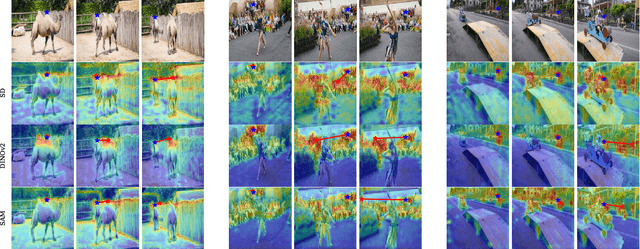

Can Visual Foundation Models Achieve Long-term Point Tracking?

Aug 24, 2024

Abstract:Large-scale vision foundation models have demonstrated remarkable success across various tasks, underscoring their robust generalization capabilities. While their proficiency in two-view correspondence has been explored, their effectiveness in long-term correspondence within complex environments remains unexplored. To address this, we evaluate the geometric awareness of visual foundation models in the context of point tracking: (i) in zero-shot settings, without any training; (ii) by probing with low-capacity layers; (iii) by fine-tuning with Low Rank Adaptation (LoRA). Our findings indicate that features from Stable Diffusion and DINOv2 exhibit superior geometric correspondence abilities in zero-shot settings. Furthermore, DINOv2 achieves performance comparable to supervised models in adaptation settings, demonstrating its potential as a strong initialization for correspondence learning.

CarFormer: Self-Driving with Learned Object-Centric Representations

Jul 22, 2024

Abstract:The choice of representation plays a key role in self-driving. Bird's eye view (BEV) representations have shown remarkable performance in recent years. In this paper, we propose to learn object-centric representations in BEV to distill a complex scene into more actionable information for self-driving. We first learn to place objects into slots with a slot attention model on BEV sequences. Based on these object-centric representations, we then train a transformer to learn to drive as well as reason about the future of other vehicles. We found that object-centric slot representations outperform both scene-level and object-level approaches that use the exact attributes of objects. Slot representations naturally incorporate information about objects from their spatial and temporal context such as position, heading, and speed without explicitly providing it. Our model with slots achieves an increased completion rate of the provided routes and, consequently, a higher driving score, with a lower variance across multiple runs, affirming slots as a reliable alternative in object-centric approaches. Additionally, we validate our model's performance as a world model through forecasting experiments, demonstrating its capability to predict future slot representations accurately. The code and the pre-trained models can be found at https://kuis-ai.github.io/CarFormer/.
