Fangde Liu

The Deep Poincaré Map: A Novel Approach for Left Ventricle Segmentation

Oct 30, 2018

Abstract: Precise segmentation of the left ventricle (LV) within cardiac MRI images is a prerequisite for the quantitative measurement of heart function. However, this task is challenging due to the limited availability of labeled data and motion artifacts from cardiac imaging. In this work, we present an iterative segmentation algorithm for LV delineation. By coupling deep learning with a novel dynamic-based labeling scheme, we present a new methodology where a policy model is learned to guide an agent to travel over the image, tracing out a boundary of the ROI, using the magnitude difference of the Poincaré map as a stopping criterion. Our method is evaluated on two datasets, namely the Sunnybrook Cardiac Dataset (SCD) and data from the STACOM 2011 LV segmentation challenge. Our method outperforms previous research on many metrics. To demonstrate the transferability of our method, we present encouraging results on the STACOM 2011 data when using a model trained on the SCD dataset.

TensorLayer: A Versatile Library for Efficient Deep Learning Development

Aug 03, 2017

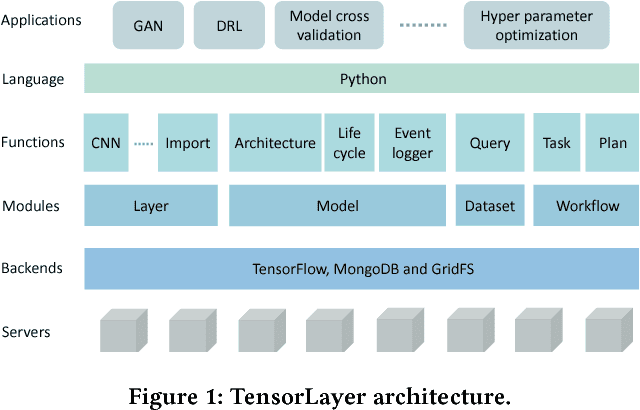

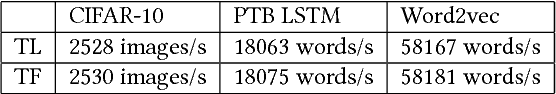

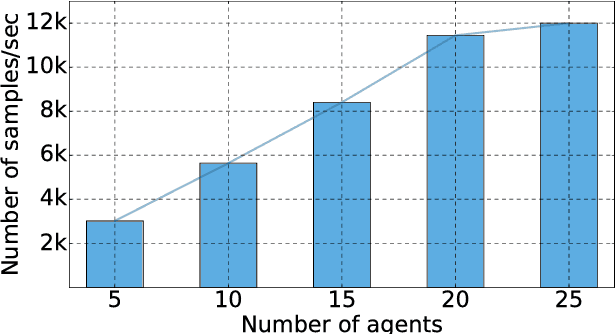

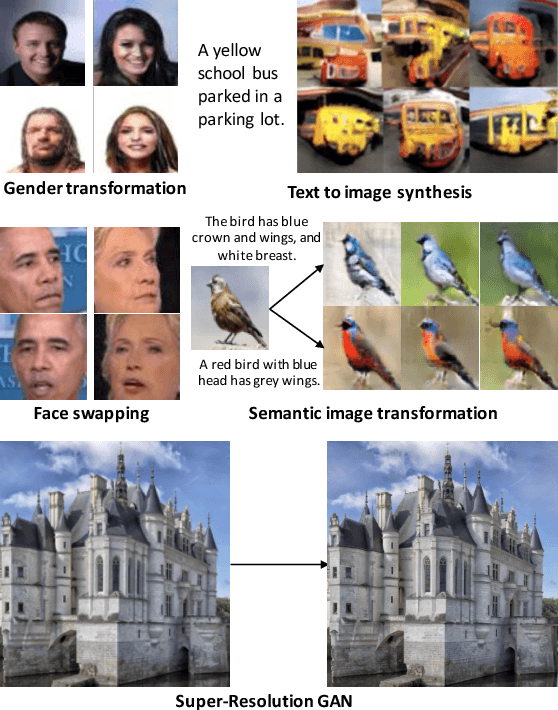

Abstract: Deep learning has enabled major advances in the fields of computer vision, natural language processing, and multimedia, among many others. Developing a deep learning system is arduous and complex, as it involves constructing neural network architectures, managing training and trained models, tuning the optimization process, preprocessing and organizing data, etc. TensorLayer is a versatile Python library that aims to help researchers and engineers efficiently develop deep learning systems. It offers rich abstractions for neural networks, model and data management, and parallel workflow mechanisms. While boosting efficiency, TensorLayer maintains both performance and scalability. TensorLayer was released in September 2016 on GitHub, and has helped people from academia and industry develop real-world applications of deep learning.

Automatic Brain Tumor Detection and Segmentation Using U-Net Based Fully Convolutional Networks

Jun 03, 2017

Abstract: A major challenge in brain tumor treatment planning and quantitative evaluation is determination of the tumor extent. The noninvasive magnetic resonance imaging (MRI) technique has emerged as a front-line diagnostic tool for brain tumors without ionizing radiation. Manual segmentation of brain tumor extent from 3D MRI volumes is a very time-consuming task, and the performance relies heavily on the operator's experience. In this context, a reliable, fully automatic method for brain tumor segmentation is necessary for an efficient measurement of the tumor extent. In this study, we propose a fully automatic method for brain tumor segmentation, which is developed using U-Net based deep convolutional networks. Our method was evaluated on Multimodal Brain Tumor Image Segmentation (BRATS 2015) datasets, which contain 220 high-grade brain tumor and 54 low-grade tumor cases. Cross-validation has shown that our method can obtain promising segmentation efficiently.

Deep De-Aliasing for Fast Compressive Sensing MRI

May 19, 2017

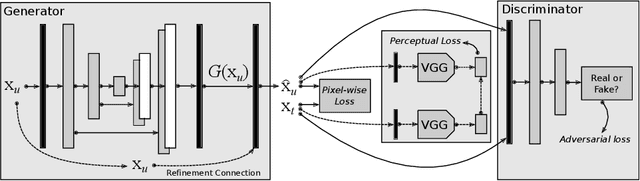

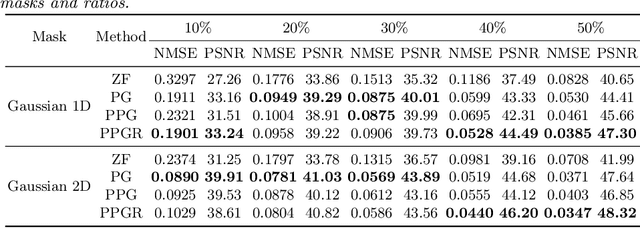

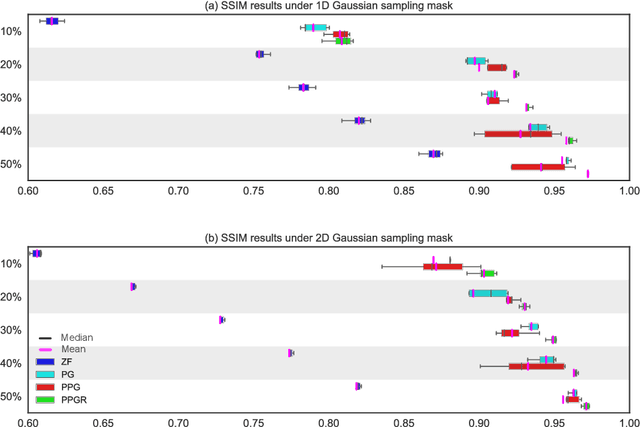

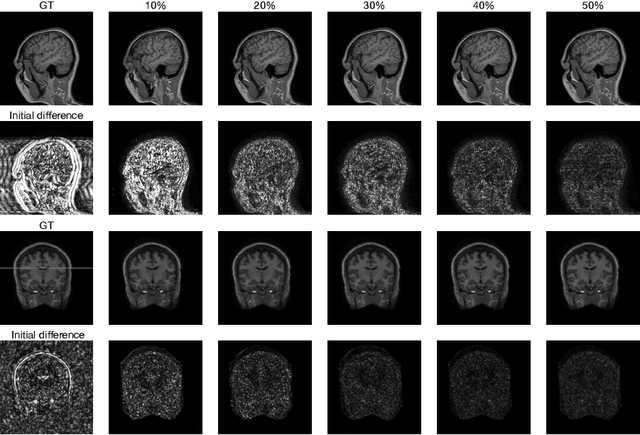

Abstract: Fast Magnetic Resonance Imaging (MRI) is in high demand for many clinical applications in order to reduce the scanning cost and improve the patient experience. This can also potentially increase the image quality by reducing motion artefacts and contrast washout. However, once an image field of view and the desired resolution are chosen, the minimum scanning time is normally determined by the requirement of acquiring sufficient raw data to meet the Nyquist-Shannon sampling criterion. Compressive Sensing (CS) theory has been perfectly matched to the MRI scanning sequence design, with much less raw data required for the image reconstruction. Inspired by recent advances in deep learning for solving various inverse problems, we propose a conditional Generative Adversarial Networks-based deep learning framework for de-aliasing and reconstructing MRI images from highly undersampled data, with great promise to accelerate the data acquisition process. By coupling an innovative content loss with the adversarial loss, our de-aliasing results are more realistic. Furthermore, we propose a refinement learning procedure for training the generator network, which can stabilise the training with fast convergence and less parameter tuning. We demonstrate that the proposed framework outperforms state-of-the-art CS-MRI methods in terms of reconstruction error and perceptual image quality. In addition, our method can reconstruct each image in 0.22 ms to 0.37 ms, which is promising for real-time applications.
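
To make the undersampling problem in the abstract above concrete, here is a minimal NumPy sketch of a zero-filled reconstruction from randomly undersampled k-space. It is not the authors' GAN framework. It only illustrates the aliased input that a de-aliasing method would start from. The function name `zero_filled_recon`, the row-wise random mask, the `keep_fraction` parameter, and the rectangular phantom are all assumptions made for illustration.

```python
import numpy as np


def zero_filled_recon(image, keep_fraction=0.3, seed=0):
    """Undersample the 2D k-space of `image` and reconstruct by zero-filling.

    Hypothetical helper: whole k-space rows (phase-encode lines) are kept
    at random with probability `keep_fraction`; missing rows are set to zero.
    Returns the magnitude reconstruction and the boolean sampling mask.
    """
    rng = np.random.default_rng(seed)
    kspace = np.fft.fft2(image)

    # Random row mask; the zero-frequency row (index 0 in the unshifted
    # FFT layout) is always kept so the mean intensity is preserved.
    keep_rows = rng.random(image.shape[0]) < keep_fraction
    keep_rows[0] = True
    mask = np.repeat(keep_rows[:, None], image.shape[1], axis=1)

    # Zero-filling: the inverse FFT treats unsampled coefficients as zero,
    # which produces aliasing artefacts in the image domain.
    recon = np.abs(np.fft.ifft2(kspace * mask))
    return recon, mask


# Simple rectangular phantom standing in for an MR image (assumption).
phantom = np.zeros((64, 64))
phantom[10:37, 20:45] = 1.0

aliased, mask = zero_filled_recon(phantom, keep_fraction=0.3)
error = np.linalg.norm(aliased - phantom) / np.linalg.norm(phantom)
print(f"sampled fraction: {mask.mean():.2f}, relative error: {error:.3f}")
```

With `keep_fraction=1.0` the mask keeps every row and the phantom is recovered up to floating-point error. Lower fractions trade acquisition time for aliasing, which is the gap that a learned de-aliasing model aims to close.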
