Axel Oehmichen

MOAI: A methodology for evaluating the impact of indoor airflow in the transmission of COVID-19

Mar 31, 2021

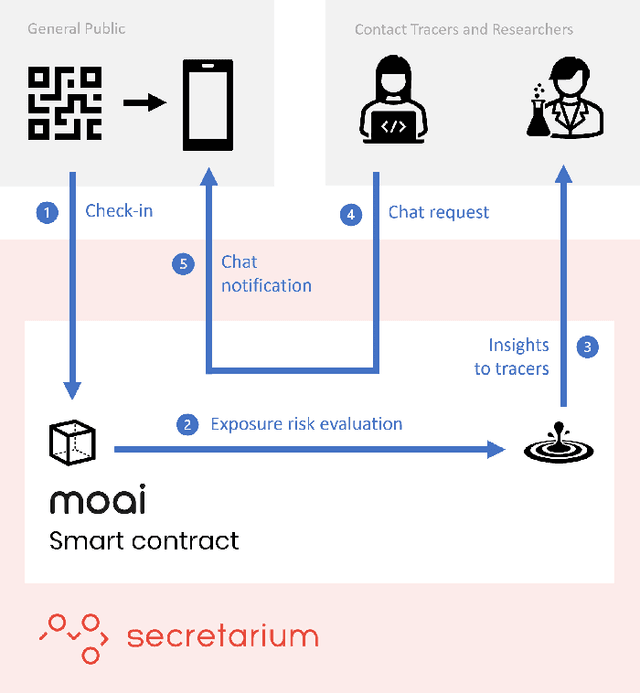

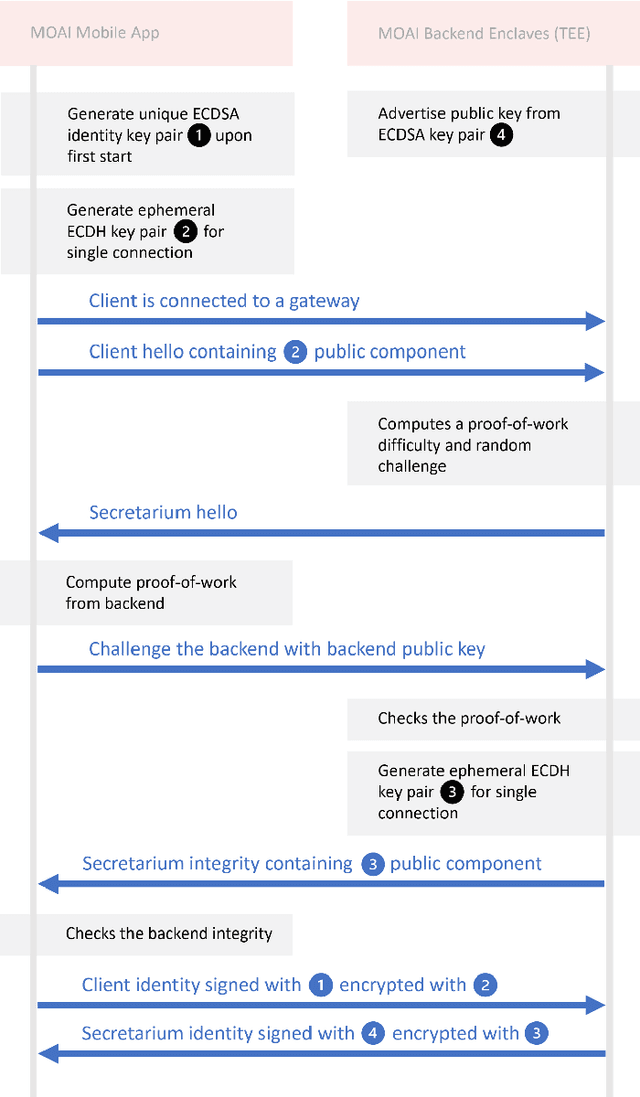

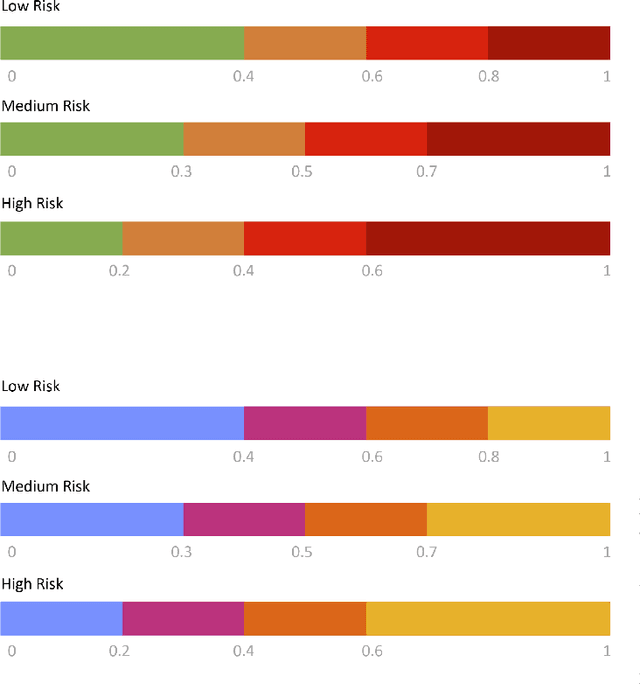

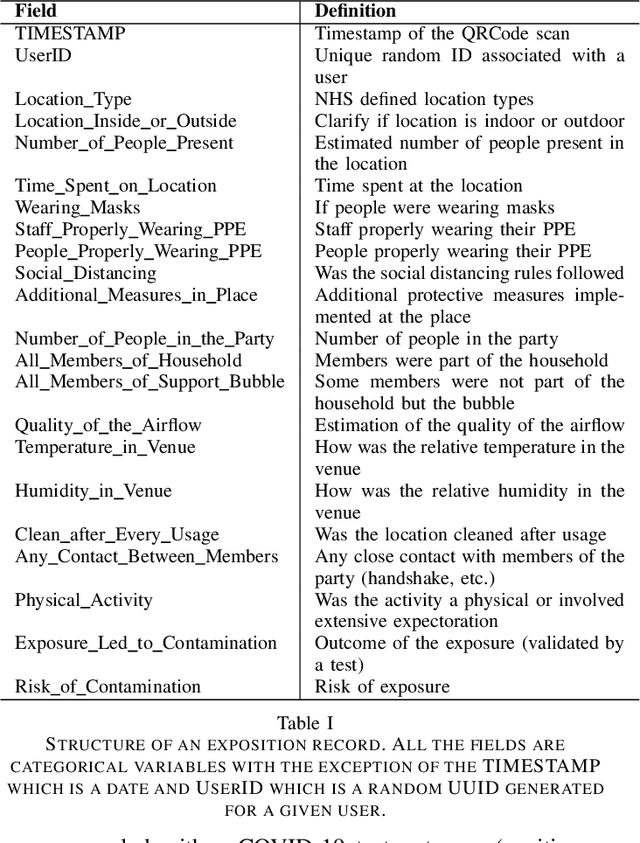

Abstract: Epidemiology models play a key role in understanding and responding to the COVID-19 pandemic. To build those models, scientists need to understand the contributing factors and their relative importance. A large strand of literature has identified the importance of airflow in mitigating droplet and far-field aerosol transmission risks. However, the specific factors contributing to higher or lower contamination in various settings have not been clearly defined and quantified. As part of the MOAI project (https://moaiapp.com), we are developing a privacy-preserving test and trace app that enables infection cluster investigators to get in touch with patients without having to know their identity. This approach involves users in the fight against the pandemic by letting them contribute additional information in the form of anonymous research questionnaires. We first describe how the questionnaire was designed and how the synthetic data was generated, based on a review we carried out of the latest available literature. We then present a model to evaluate a user's risk exposure in a given setting. Finally, we propose a temporal addition to the model to evaluate a given user's risk exposure over time.

Characterizing Political Fake News in Twitter by its Meta-Data

Dec 16, 2017

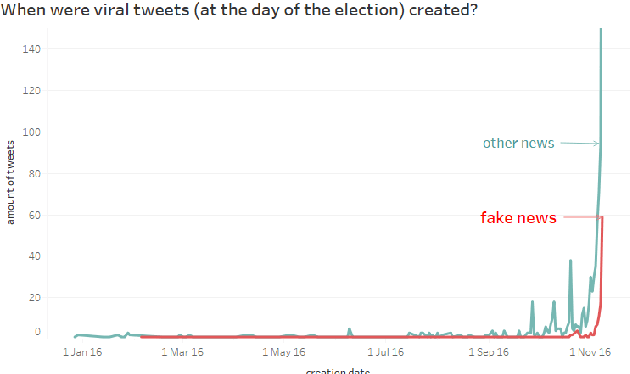

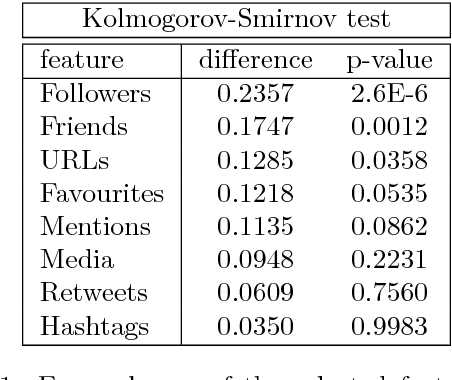

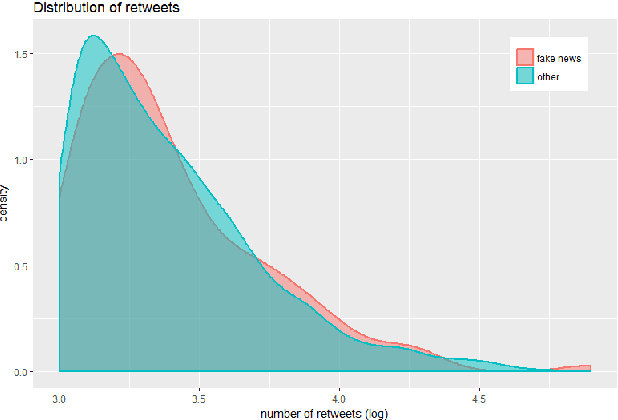

Abstract: This article presents a preliminary approach towards characterizing political fake news on Twitter through the analysis of its meta-data. In particular, we focus on more than 1.5M tweets collected on the day of the election of Donald Trump as 45th president of the United States of America. We use the meta-data embedded within those tweets to look for differences between tweets containing fake news and tweets not containing it. Specifically, we perform our analysis only on tweets that went viral, by studying proxies for users' exposure to the tweets, by characterizing accounts spreading fake news, and by looking at their polarization. We found significant differences in the distribution of followers, the number of URLs in tweets, and the verification of the users.

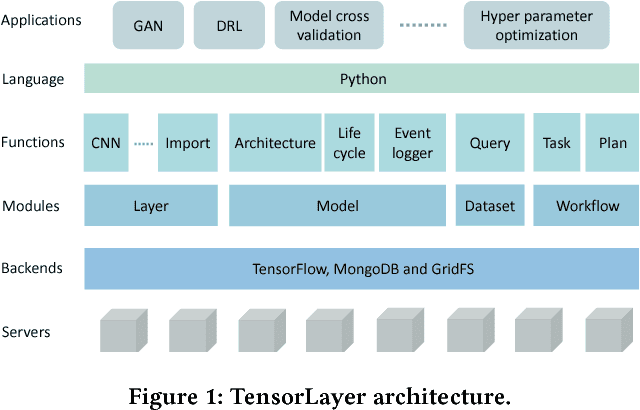

TensorLayer: A Versatile Library for Efficient Deep Learning Development

Aug 03, 2017

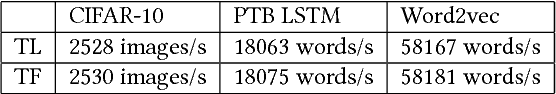

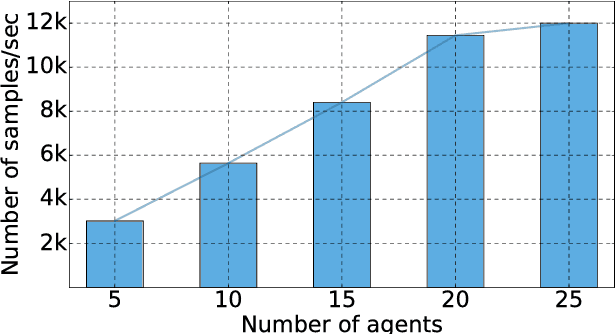

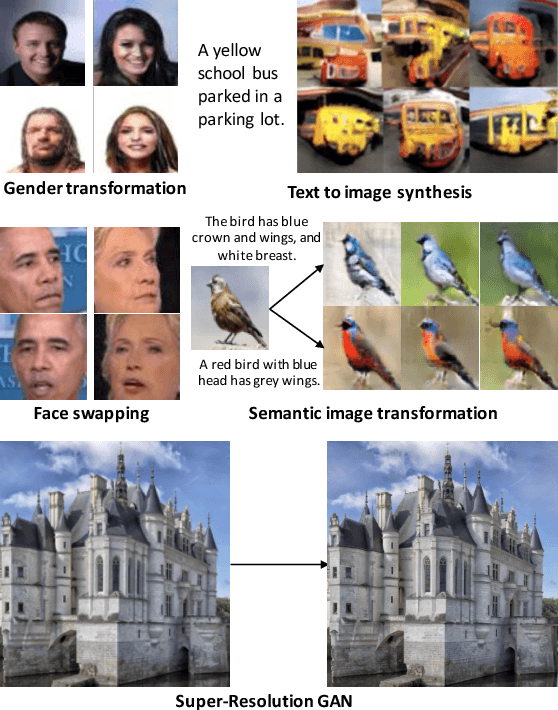

Abstract: Deep learning has enabled major advances in computer vision, natural language processing, and multimedia, among many other fields. Developing a deep learning system is arduous and complex, as it involves constructing neural network architectures, managing models during and after training, tuning the optimization process, preprocessing and organizing data, and so on. TensorLayer is a versatile Python library that aims to help researchers and engineers develop deep learning systems efficiently. It offers rich abstractions for neural networks, model and data management, and parallel workflow mechanisms. While boosting efficiency, TensorLayer maintains both performance and scalability. TensorLayer was released in September 2016 on GitHub, and has helped people from academia and industry develop real-world applications of deep learning.
