Akara Supratak

Quantifying the Impact of Data Characteristics on the Transferability of Sleep Stage Scoring Models

Mar 28, 2023

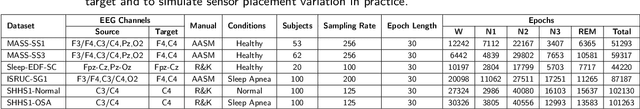

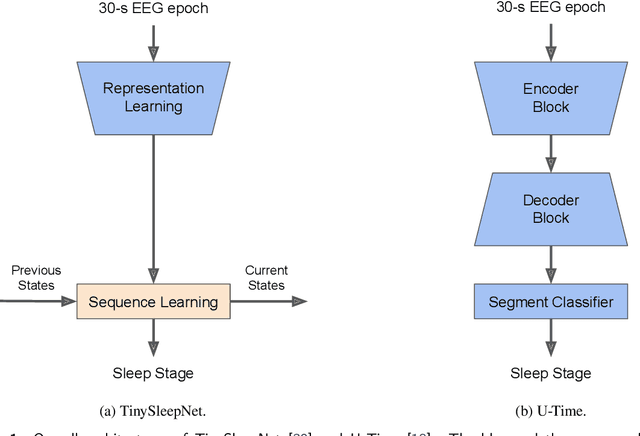

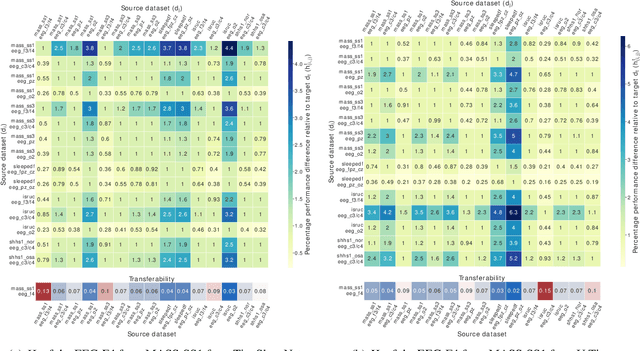

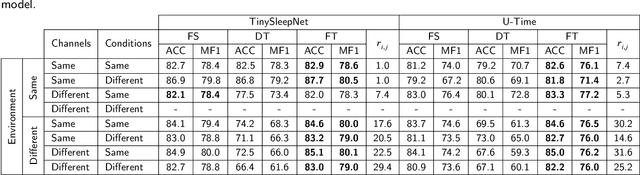

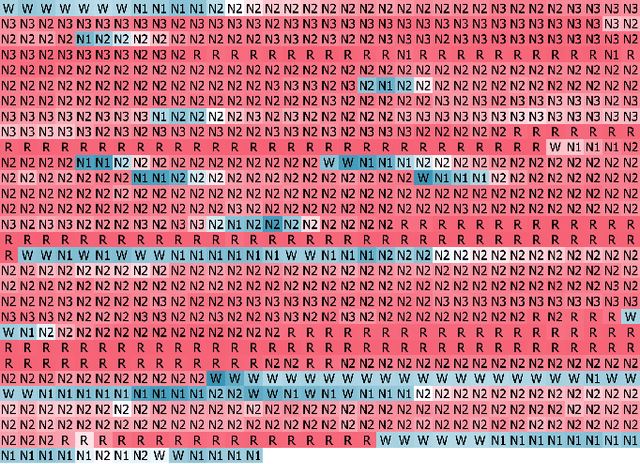

Abstract: Deep learning models for scoring sleep stages based on single-channel EEG have been proposed as a promising method for remote sleep monitoring. However, applying these models to new datasets, particularly from wearable devices, raises two questions. First, when annotations on a target dataset are unavailable, which different data characteristics affect the sleep stage scoring performance the most and by how much? Second, when annotations are available, which dataset should be used as the source of transfer learning to optimize performance? In this paper, we propose a novel method for computationally quantifying the impact of different data characteristics on the transferability of deep learning models. Quantification is accomplished by training and evaluating two models with significant architectural differences, TinySleepNet and U-Time, under various transfer configurations in which the source and target datasets have different recording channels, recording environments, and subject conditions. For the first question, the environment had the highest impact on sleep stage scoring performance, with performance degrading by over 14% when sleep annotations were unavailable. For the second question, the most useful transfer sources for TinySleepNet and the U-Time models were MASS-SS1 and ISRUC-SG1, containing a high percentage of N1 (the rarest sleep stage) relative to the others. The frontal and central EEGs were preferred for TinySleepNet. The proposed approach enables full utilization of existing sleep datasets for training and planning model transfer to maximize the sleep stage scoring performance on a target problem when sleep annotations are limited or unavailable, supporting the realization of remote sleep monitoring.

DeepSleepNet: a Model for Automatic Sleep Stage Scoring based on Raw Single-Channel EEG

Aug 03, 2017

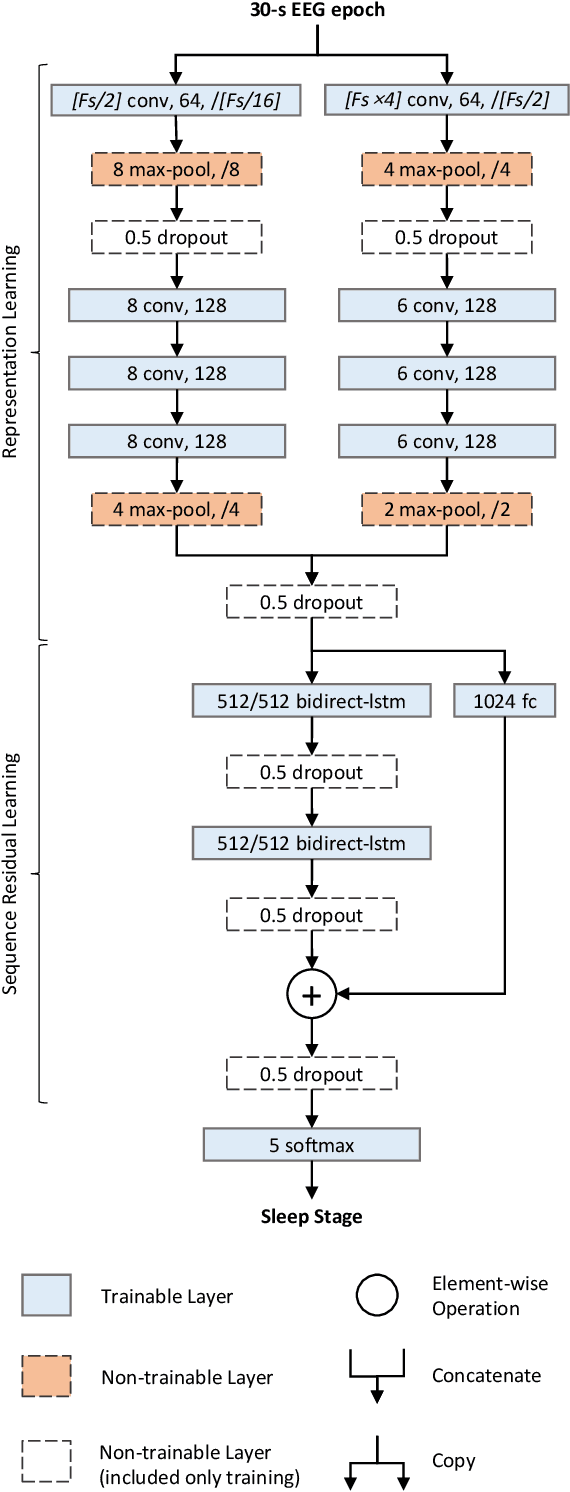

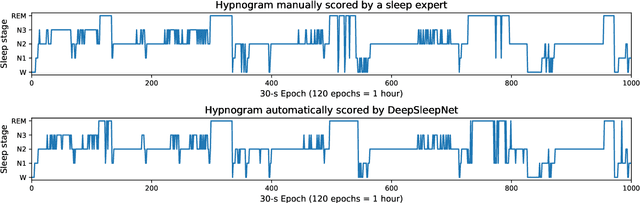

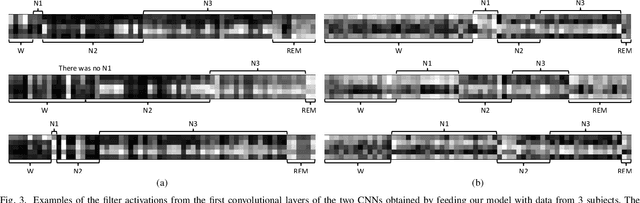

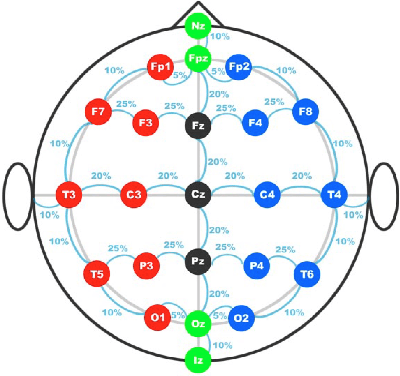

Abstract: The present study proposes a deep learning model, named DeepSleepNet, for automatic sleep stage scoring based on raw single-channel EEG. Most of the existing methods rely on hand-engineered features, which require prior knowledge of sleep analysis. Only a few of them encode temporal information, such as transition rules, which is important for identifying the next sleep stages, into the extracted features. In the proposed model, we utilize Convolutional Neural Networks to extract time-invariant features, and bidirectional Long Short-Term Memory to learn transition rules among sleep stages automatically from EEG epochs. We implement a two-step training algorithm to train our model efficiently. We evaluated our model using different single-channel EEGs (F4-EOG(Left), Fpz-Cz and Pz-Oz) from two public sleep datasets that have different properties (e.g., sampling rate) and scoring standards (AASM and R&K). The results showed that our model achieved similar overall accuracy and macro F1-score (MASS: 86.2%-81.7, Sleep-EDF: 82.0%-76.9) compared to the state-of-the-art methods (MASS: 85.9%-80.5, Sleep-EDF: 78.9%-73.7) on both datasets. This demonstrated that, without changing the model architecture and the training algorithm, our model could automatically learn features for sleep stage scoring from different raw single-channel EEGs from different datasets without utilizing any hand-engineered features.

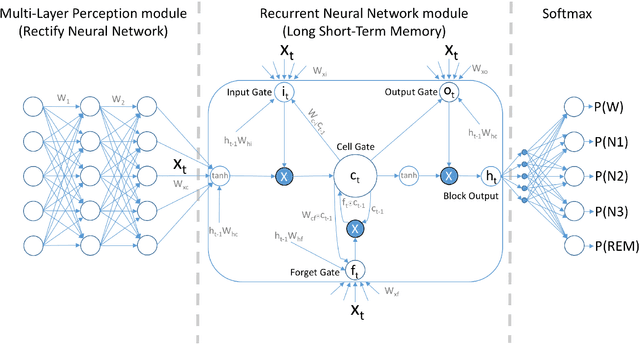

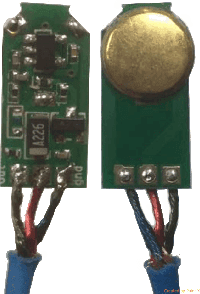

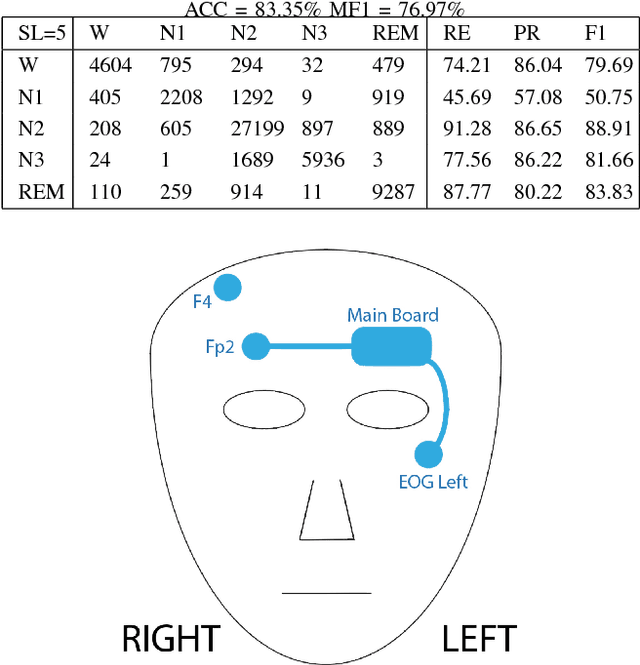

Mixed Neural Network Approach for Temporal Sleep Stage Classification

Aug 03, 2017

Abstract: This paper proposes a practical approach to addressing limitations posed by the use of single active electrodes in applications for sleep stage classification. Electroencephalography (EEG)-based characterizations of sleep stage progression contribute to the diagnosis and monitoring of the many pathologies of sleep. Several prior reports have explored ways of automating the analysis of sleep EEG and of reducing the complexity of the data needed for reliable discrimination of sleep stages, in order to make it possible to perform sleep studies at lower cost in the home (rather than only in specialized clinical facilities). However, these reports have involved recordings from electrodes placed on the cranial vertex or occiput, which can be uncomfortable or difficult for subjects to position. Those that have utilized single EEG channels, which contain less sleep information, have shown poor classification performance. We have taken advantage of a Rectifier Neural Network for feature detection and a Long Short-Term Memory (LSTM) network for sequential data learning to optimize classification performance with single-electrode recordings. After exploring alternative electrode placements, we found a comfortable configuration of a single-channel EEG on the forehead and have shown that it can be integrated with additional electrodes for simultaneous recording of the electrooculogram (EOG). Evaluation of data from 62 people (with 494 hours of sleep) demonstrated better performance of our analytical algorithm for automated sleep classification than existing approaches using vertex or occipital electrode placements. Use of this recording configuration with neural network deconvolution promises to make clinically indicated home sleep studies practical.

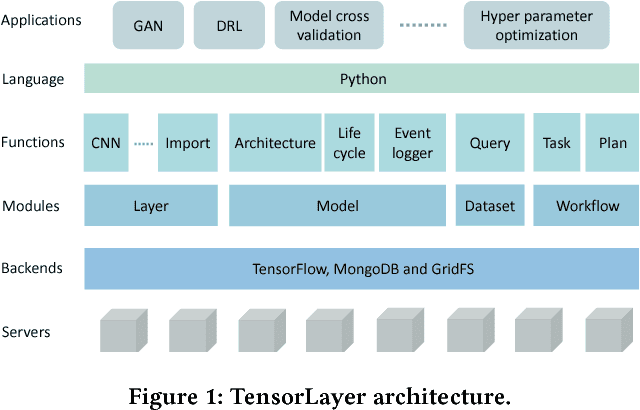

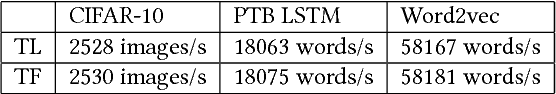

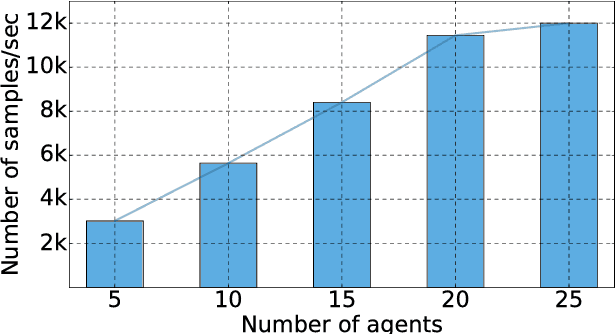

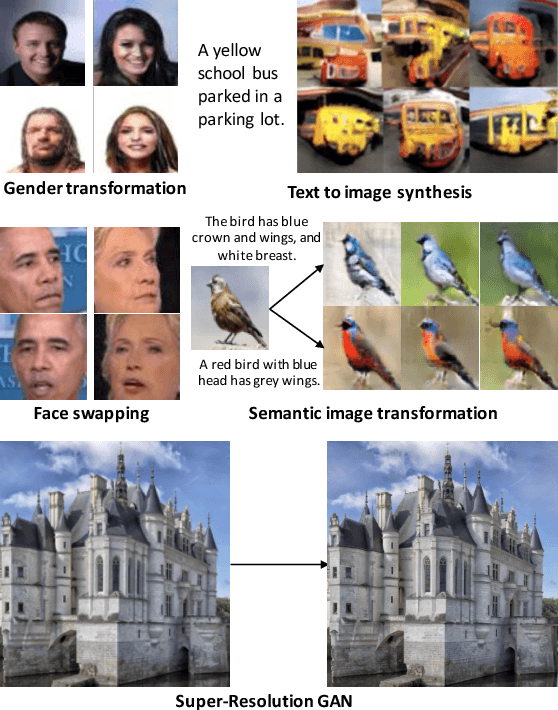

TensorLayer: A Versatile Library for Efficient Deep Learning Development

Aug 03, 2017

Abstract: Deep learning has enabled major advances in the fields of computer vision, natural language processing, and multimedia, among many others. Developing a deep learning system is arduous and complex, as it involves constructing neural network architectures, managing training and trained models, tuning the optimization process, preprocessing and organizing data, etc. TensorLayer is a versatile Python library that aims to help researchers and engineers efficiently develop deep learning systems. It offers rich abstractions for neural networks, model and data management, and a parallel workflow mechanism. While boosting efficiency, TensorLayer maintains both performance and scalability. TensorLayer was released in September 2016 on GitHub, and has helped people from academia and industry develop real-world applications of deep learning.
