Peter Haddawy

Quantifying the Impact of Data Characteristics on the Transferability of Sleep Stage Scoring Models

Mar 28, 2023

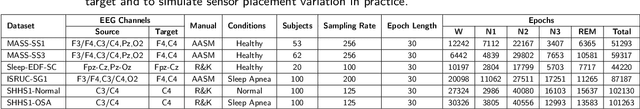

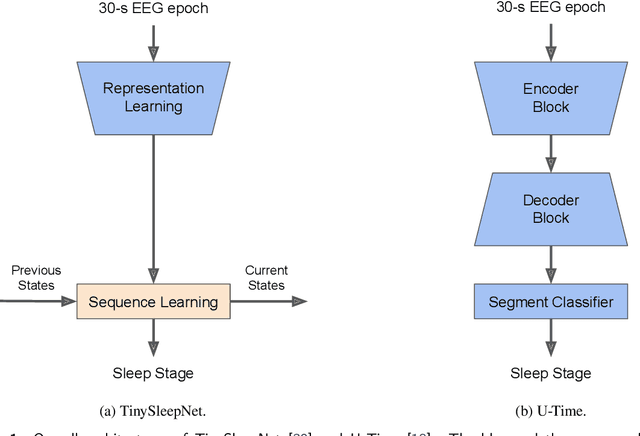

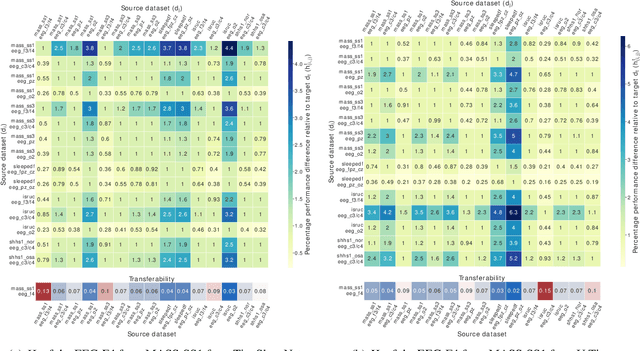

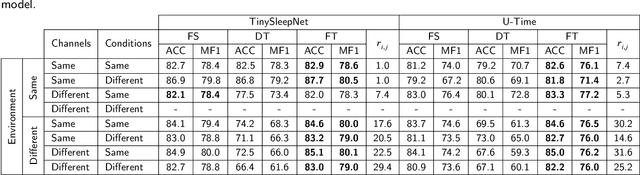

Abstract:Deep learning models for scoring sleep stages based on single-channel EEG have been proposed as a promising method for remote sleep monitoring. However, applying these models to new datasets, particularly from wearable devices, raises two questions. First, when annotations on a target dataset are unavailable, which different data characteristics affect the sleep stage scoring performance the most and by how much? Second, when annotations are available, which dataset should be used as the source of transfer learning to optimize performance? In this paper, we propose a novel method for computationally quantifying the impact of different data characteristics on the transferability of deep learning models. Quantification is accomplished by training and evaluating two models with significant architectural differences, TinySleepNet and U-Time, under various transfer configurations in which the source and target datasets have different recording channels, recording environments, and subject conditions. For the first question, the environment had the highest impact on sleep stage scoring performance, with performance degrading by over 14% when sleep annotations were unavailable. For the second question, the most useful transfer sources for TinySleepNet and the U-Time models were MASS-SS1 and ISRUC-SG1, containing a high percentage of N1 (the rarest sleep stage) relative to the others. The frontal and central EEGs were preferred for TinySleepNet. The proposed approach enables full utilization of existing sleep datasets for training and planning model transfer to maximize the sleep stage scoring performance on a target problem when sleep annotations are limited or unavailable, supporting the realization of remote sleep monitoring.

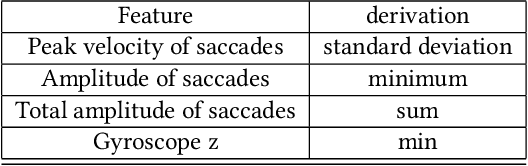

Differentiating Surgeon Expertise Solely by Eye Movement Features

Feb 11, 2021

Abstract:Developments in computer science in recent years are moving into hospitals. Surgeons are faced with ever new technical challenges. Visual perception plays a key role in most of these. Diagnostic and training models are needed to optimize the training of young surgeons. In this study, we present a model for classifying experts, 4th-year residents and 3rd-year residents, using only eye movements. We show a model that uses a minimal set of features and still achieves a robust accuracy of 76.46% in classifying eye movements into the correct class. Likewise, in this study, we address the evolutionary steps of visual perception between three expertise classes, forming a first step towards a diagnostic model for expertise.

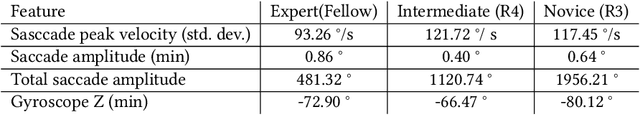

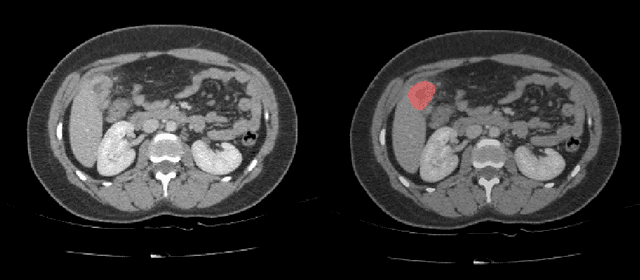

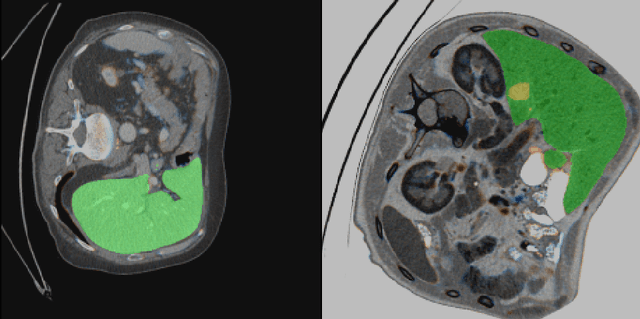

Automatic segmentation of kidney and liver tumors in CT images

Sep 16, 2019

Abstract:Automatic segmentation of hepatic lesions in computed tomography (CT) images is a challenging task due to the heterogeneous, diffuse shape of tumors and the complex background. To address the problem, more and more researchers rely on deep convolutional neural networks (CNNs) with 2D or 3D architectures, which have proven effective in a wide range of computer vision tasks, including medical image processing. In this technical report, we focus on a more careful approach to the learning process rather than on a complex CNN architecture. We chose the MICCAI 2017 LiTS dataset for training and the public 3DIRCADb dataset for validation of our method. The proposed algorithm reached a Dice score of 78.8% on the 3DIRCADb dataset. The described method was then applied to the 2019 Kidney Tumor Segmentation (KiTS-2019) challenge, where our single submission achieved Dice scores of 96.38% for kidney and 67.38% for tumor.
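
For readers unfamiliar with the metric reported above, the Dice score between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch of that formula on flattened binary masks, not the authors' evaluation code; the function name `dice_score` and the choice to return 1.0 when both masks are empty are assumptions made for illustration.

```python
def dice_score(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flattened binary masks.

    Illustrative helper (hypothetical name), not taken from the report.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labelled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * intersection / total


# Toy example: 3 predicted voxels, 3 reference voxels, 2 in common.
predicted = [1, 1, 0, 0, 1, 0]
reference = [1, 0, 0, 0, 1, 1]
score = dice_score(predicted, reference)  # 2 * 2 / (3 + 3)
```

Challenge leaderboards typically average such scores over cases or classes; how the reported percentages were aggregated is not stated in the abstract.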

Convergent Deduction for Probabilistic Logic

Mar 27, 2013

Abstract:This paper discusses the semantics and proof theory of Nilsson's probabilistic logic, outlining both the benefits of its well-defined model theory and the drawbacks of its proof theory. Within Nilsson's semantic framework, we derive a set of inference rules which are provably sound. The resulting proof system, in contrast to Nilsson's approach, has the important feature of convergence - that is, the inference process proceeds by computing increasingly narrow probability intervals which converge from above and below on the smallest entailed probability interval. Thus the procedure can be stopped at any time to yield partial information concerning the smallest entailed interval.
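
The idea of narrowing intervals that can be read off at any time can be illustrated with a small sketch. It does not reproduce the paper's inference rules; it uses only the well-known probabilistic modus ponens bound, P(A) + P(A → B) - 1 ≤ P(B) ≤ P(A → B) with → read as material implication, and intersects the bounds obtained from successive premises. The function names and the numeric premises are hypothetical.

```python
def modus_ponens_bounds(p_a, p_impl):
    """Sound bounds on P(B) given intervals for P(A) and P(A -> B).

    Follows from P(A -> B) = 1 - P(A) + P(A and B) and
    P(A and B) <= P(B) <= P(not A or B).
    """
    a_lo, _a_hi = p_a
    impl_lo, impl_hi = p_impl
    return (max(0.0, a_lo + impl_lo - 1.0), impl_hi)


def intersect(current, new):
    """Keep only the values allowed by both intervals."""
    lo, hi = max(current[0], new[0]), min(current[1], new[1])
    if lo > hi:
        raise ValueError("premises are probabilistically inconsistent")
    return (lo, hi)


# Hypothetical premises: each pair is (interval for P(A_i), interval for P(A_i -> B)).
premises = [
    ((0.7, 0.9), (0.8, 0.95)),
    ((0.9, 1.0), (0.85, 0.9)),
]

bounds = (0.0, 1.0)  # Before any inference, P(B) is unconstrained.
history = []
for p_a, p_impl in premises:
    bounds = intersect(bounds, modus_ponens_bounds(p_a, p_impl))
    history.append(bounds)  # Every intermediate interval is already a sound answer.
```

Because each step only intersects intervals, the bounds never widen, so stopping after any iteration still yields valid, if looser, information about P(B).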

Probability as a Modal Operator

Mar 27, 2013

Abstract:This paper argues for a modal view of probability. The syntax and semantics of one particularly strong probability logic are discussed and some examples of the use of the logic are provided. We show that it is both natural and useful to think of probability as a modal operator. Contrary to popular belief in AI, a probability ranging between 0 and 1 represents a continuum between impossibility and necessity, not between simple falsity and truth. The present work provides a clear semantics for quantification into the scope of the probability operator and for higher-order probabilities. Probability logic is a language for expressing both probabilistic and logical concepts.

Time, Chance, and Action

Mar 27, 2013

Abstract:To operate intelligently in the world, an agent must reason about its actions. The consequences of an action are a function of both the state of the world and the action itself. Many aspects of the world are inherently stochastic, so a representation for reasoning about actions must be able to express chances of world states as well as indeterminacy in the effects of actions and other events. This paper presents a propositional temporal probability logic for representing and reasoning about actions. The logic can represent the probability that facts hold and events occur at various times. It can represent the probability that actions and other events affect the future. It can represent concurrent actions and conditions that hold or change during execution of an action. The model of probability relates probabilities over time. The logical language integrates both modal and probabilistic constructs and can thus represent and distinguish between possibility, probability, and truth. Several examples illustrating the use of the logic are given.

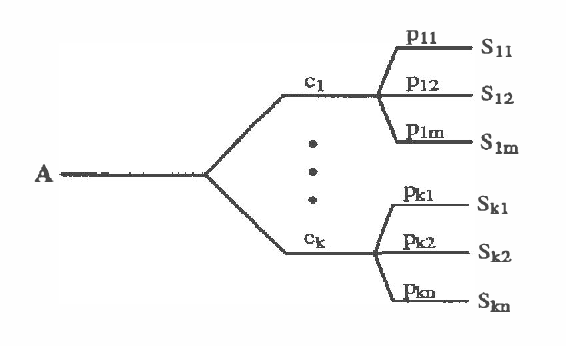

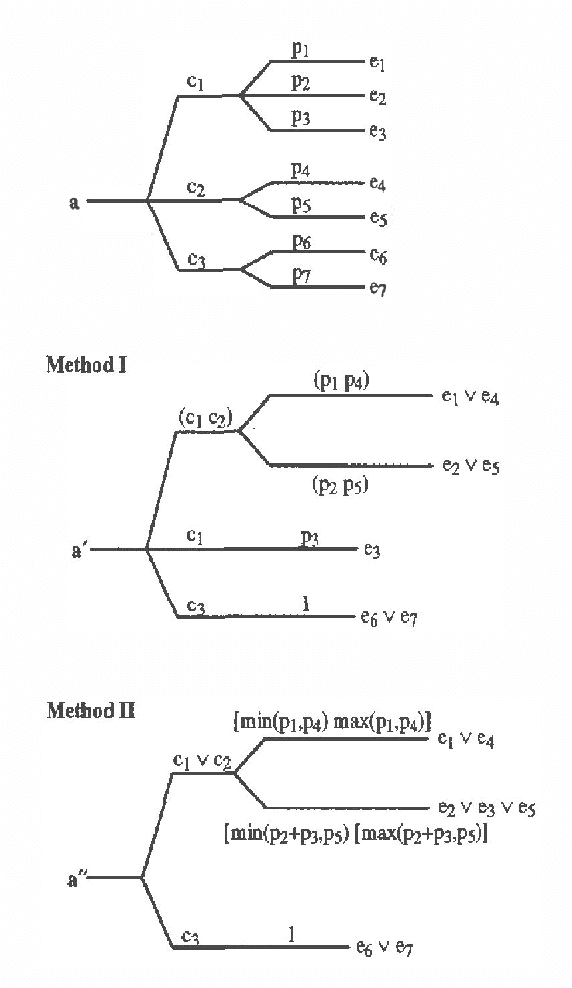

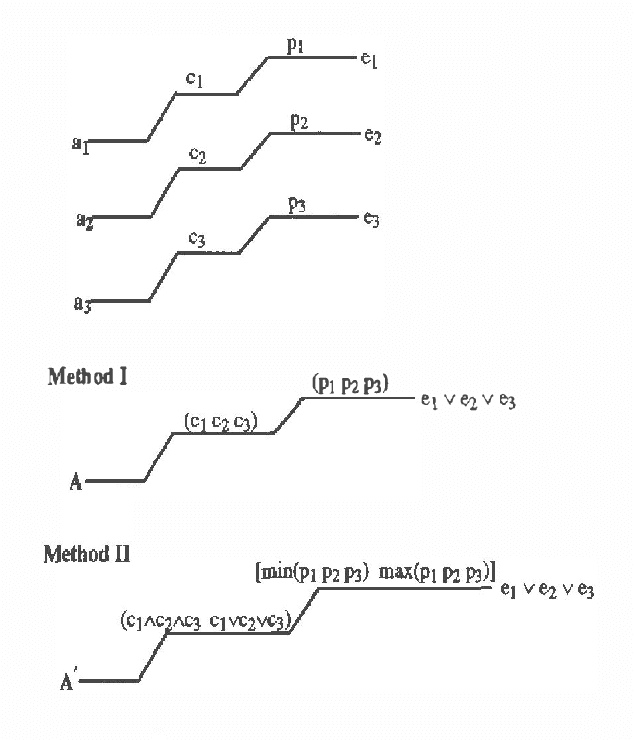

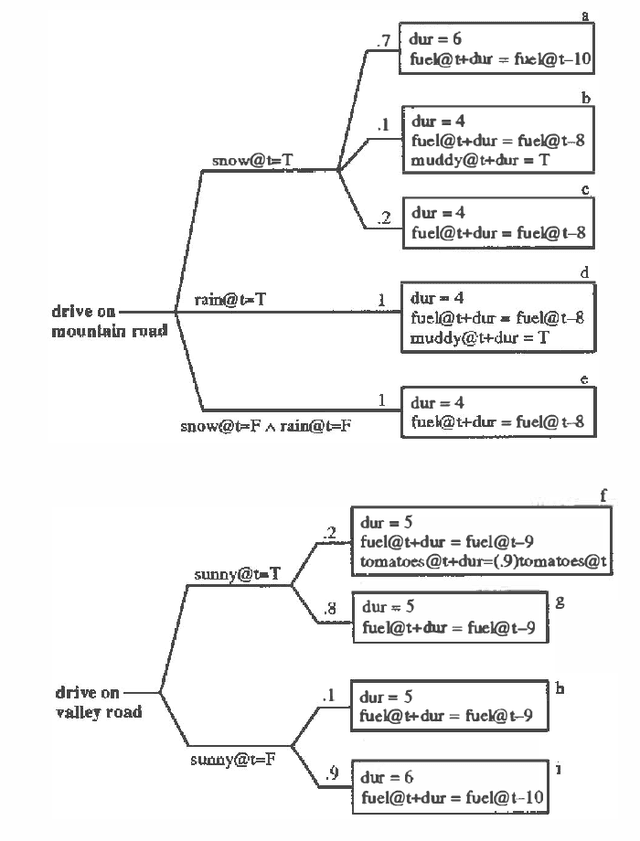

Abstracting Probabilistic Actions

Feb 27, 2013

Abstract:This paper discusses the problem of abstracting conditional probabilistic actions. We identify two distinct types of abstraction: intra-action abstraction and inter-action abstraction. We define what it means for the abstraction of an action to be correct and then derive two methods of intra-action abstraction and two methods of inter-action abstraction which are correct according to this criterion. We illustrate the developed techniques by applying them to actions described with the temporal action representation used in the DRIPS decision-theoretic planner and we describe how the planner uses abstraction to reduce the complexity of planning.

Generating Bayesian Networks from Probability Logic Knowledge Bases

Feb 27, 2013

Abstract:We present a method for dynamically generating Bayesian networks from knowledge bases consisting of first-order probability logic sentences. We present a subset of probability logic sufficient for representing the class of Bayesian networks with discrete-valued nodes. We impose constraints on the form of the sentences that guarantee that the knowledge base contains all the probabilistic information necessary to generate a network. We define the concept of d-separation for knowledge bases and prove that a knowledge base with independence conditions defined by d-separation is a complete specification of a probability distribution. We present a network generation algorithm that, given an inference problem in the form of a query Q and a set of evidence E, generates a network to compute P(Q|E). We prove the algorithm to be correct.

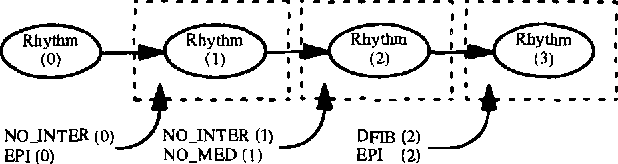

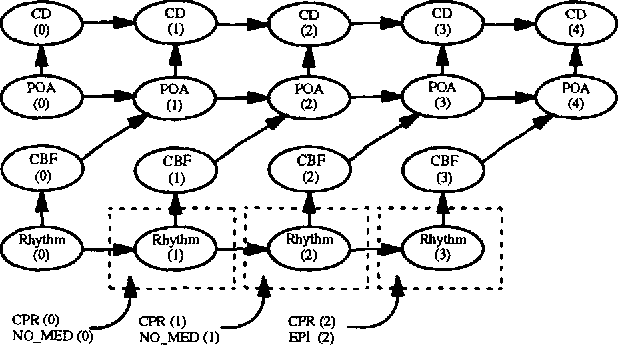

A Theoretical Framework for Context-Sensitive Temporal Probability Model Construction with Application to Plan Projection

Feb 20, 2013

Abstract:We define a context-sensitive temporal probability logic for representing classes of discrete-time temporal Bayesian networks. Context constraints allow inference to be focused on only the relevant portions of the probabilistic knowledge. We provide a declarative semantics for our language. We present a Bayesian network construction algorithm whose generated networks give sound and complete answers to queries. We use related concepts in logic programming to justify our approach. We have implemented a Bayesian network construction algorithm for a subset of the theory and demonstrate its application to the problem of evaluating the effectiveness of treatments for acute cardiac conditions.

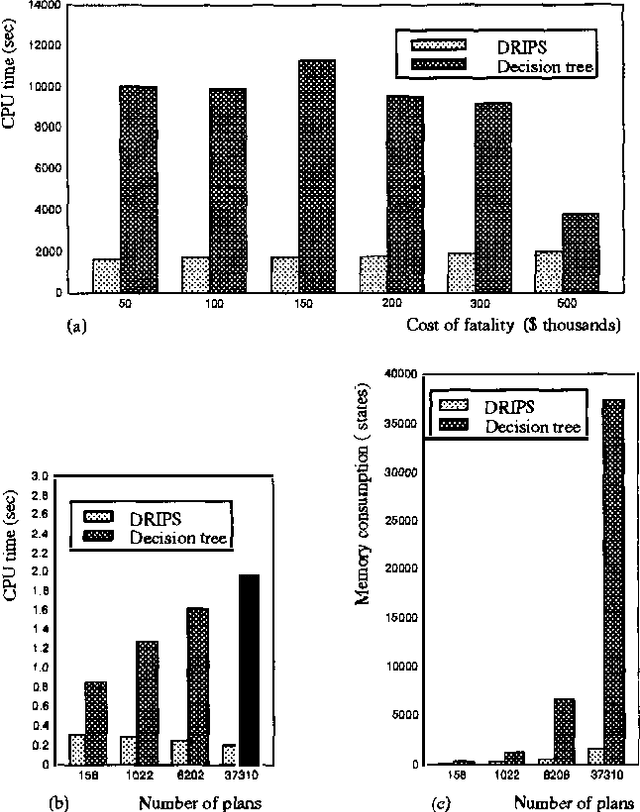

Efficient Decision-Theoretic Planning: Techniques and Empirical Analysis

Feb 20, 2013

Abstract:This paper discusses techniques for performing efficient decision-theoretic planning. We give an overview of the DRIPS decision-theoretic refinement planning system, which uses abstraction to efficiently identify optimal plans. We present techniques for automatically generating search control information, which can significantly improve the planner's performance. We evaluate the efficiency of DRIPS both with and without the search control rules on a complex medical planning problem and compare its performance to that of a branch-and-bound decision tree algorithm.