AnHai Doan

Deep Entity Matching with Pre-Trained Language Models

Apr 01, 2020

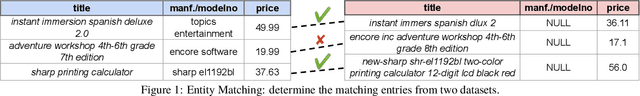

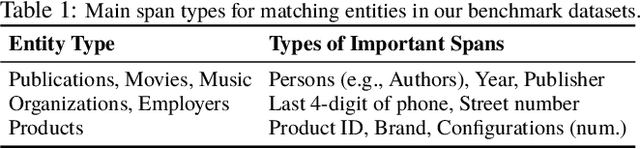

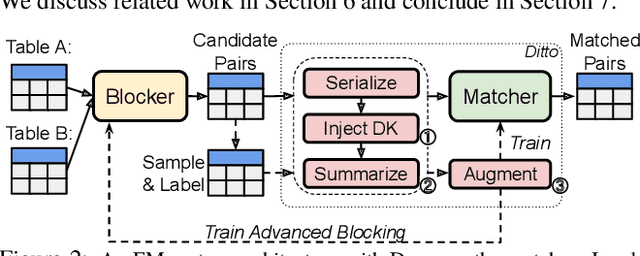

Abstract: We present Ditto, a novel entity matching (EM) system based on pre-trained Transformer-based language models. We cast EM as a sequence-pair classification problem and fine-tune such models with a simple architecture. Our experiments show that a straightforward application of language models such as BERT, DistilBERT, or ALBERT pre-trained on large text corpora already significantly improves matching quality and outperforms the previous state of the art (SOTA) by up to 19% in F1 score on benchmark datasets. We also developed three optimization techniques to further improve Ditto's matching capability. First, Ditto allows domain knowledge to be injected by highlighting important pieces of input information that may be of interest when making matching decisions. Second, Ditto summarizes strings that are too long so that only the essential information is retained and used for EM. Third, Ditto adapts a SOTA data augmentation technique for text to EM, augmenting the training data with (difficult) examples. This way, Ditto is forced to learn "harder" to improve the model's matching capability. These optimizations further boost the performance of Ditto by up to 8.5%. Perhaps more surprisingly, we establish that Ditto can achieve the previous SOTA results with at most half the amount of labeled data. Finally, we demonstrate Ditto's effectiveness on a real-world large-scale EM task. On matching two company datasets consisting of 789K and 412K records, Ditto achieves a high F1 score of 96.5%.
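To make the sequence-pair framing concrete, the sketch below shows one way two entity records might be flattened into a pair of strings for a sequence-pair classifier, along with the F1 metric the abstract reports. The `[COL]`/`[VAL]` marker scheme, the function names, and the sample records are illustrative assumptions, not details stated in the abstract, and the language model itself is omitted.

```python
from typing import Dict, List, Tuple


def serialize_record(record: Dict[str, str]) -> str:
    """Flatten an attribute -> value record into a single string.

    The [COL]/[VAL] markers are an assumed serialization scheme.
    """
    return " ".join(f"[COL] {attr} [VAL] {value}" for attr, value in record.items())


def to_sequence_pair(left: Dict[str, str], right: Dict[str, str]) -> Tuple[str, str]:
    """Build the (sequence A, sequence B) input for a pair classifier."""
    return serialize_record(left), serialize_record(right)


def f1_score(predicted: List[bool], actual: List[bool]) -> float:
    """F1 for the positive (match) class; returns 0.0 when undefined."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical records from two product tables.
left = {"title": "iPhone 11", "price": "699"}
right = {"title": "Apple iPhone 11 64GB", "price": "699.00"}
seq_a, seq_b = to_sequence_pair(left, right)
```

The resulting pair would then be passed to a tokenizer that accepts two text segments, and the fine-tuned model would output a match or no-match label for the pair.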

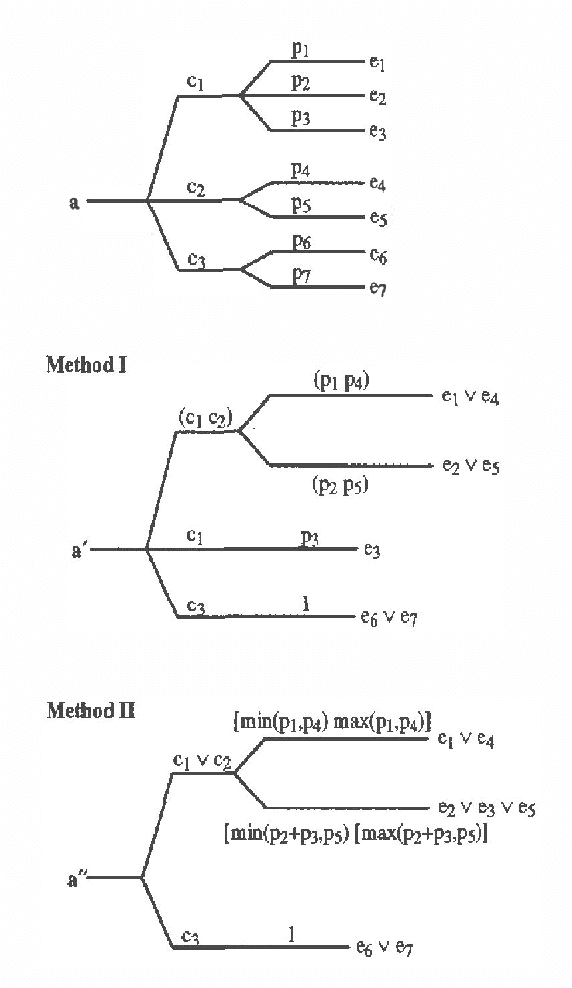

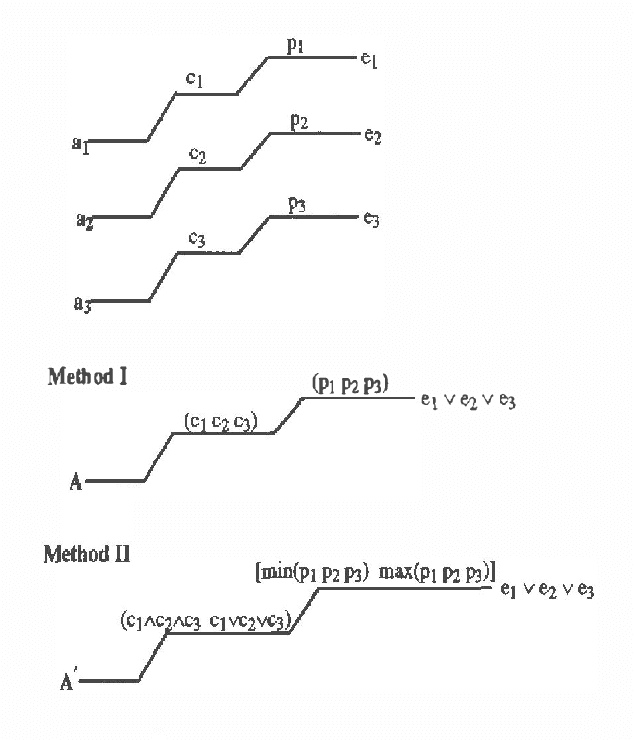

Abstracting Probabilistic Actions

Feb 27, 2013

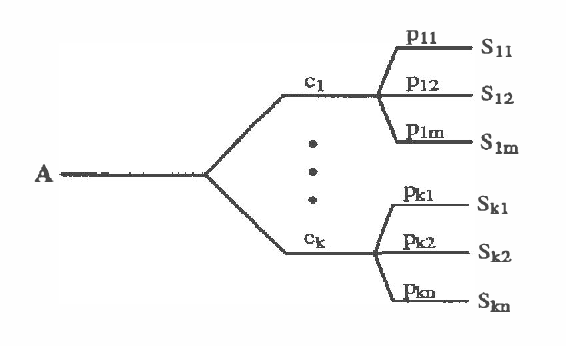

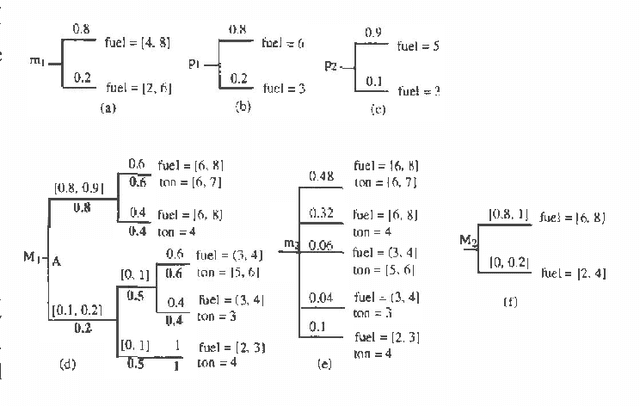

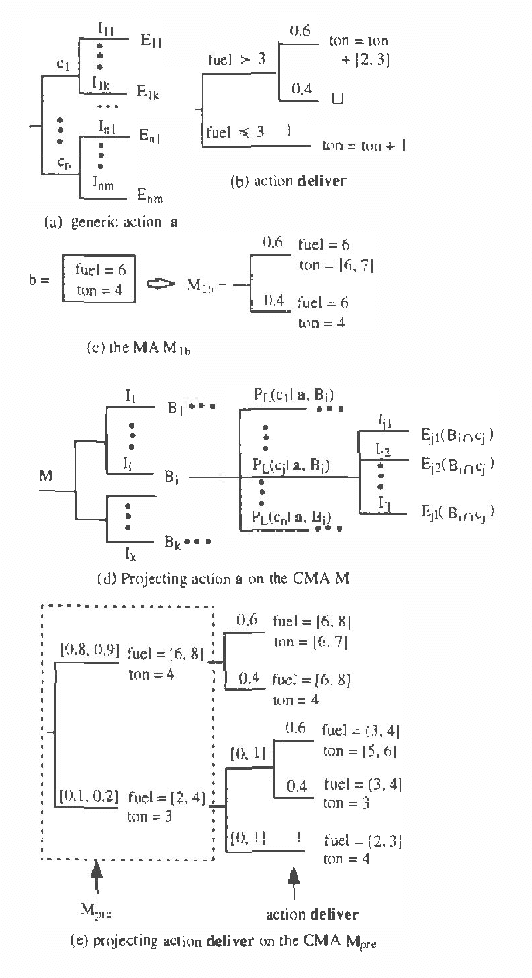

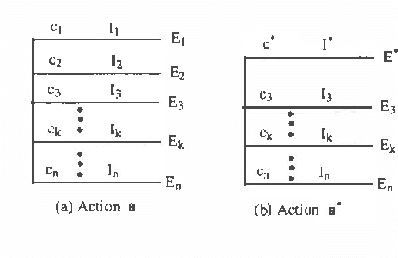

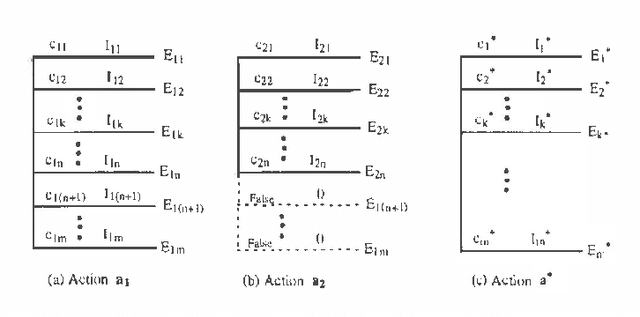

Abstract: This paper discusses the problem of abstracting conditional probabilistic actions. We identify two distinct types of abstraction: intra-action abstraction and inter-action abstraction. We define what it means for the abstraction of an action to be correct and then derive two methods of intra-action abstraction and two methods of inter-action abstraction that are correct according to this criterion. We illustrate the developed techniques by applying them to actions described with the temporal action representation used in the DRIPS decision-theoretic planner, and we describe how the planner uses abstraction to reduce the complexity of planning.

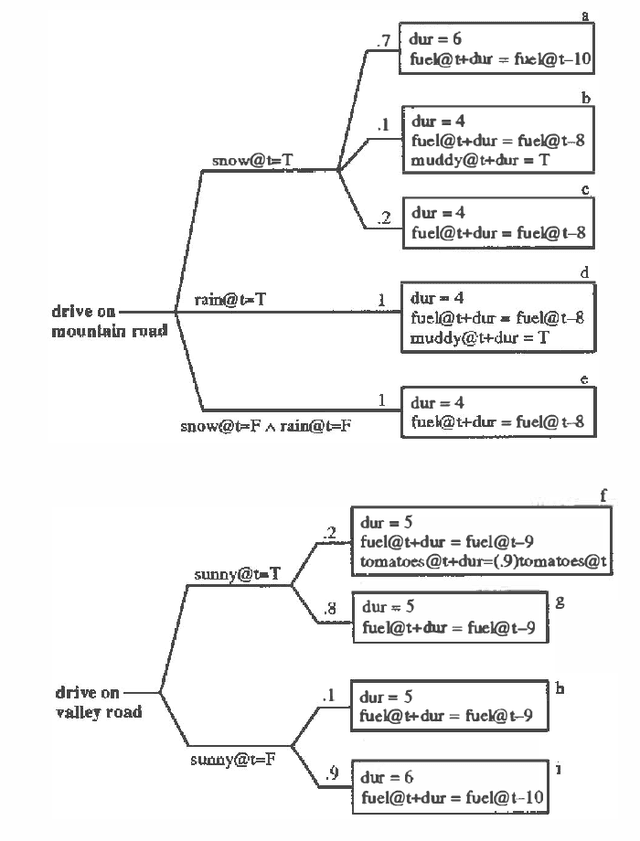

Efficient Decision-Theoretic Planning: Techniques and Empirical Analysis

Feb 20, 2013

Abstract: This paper discusses techniques for performing efficient decision-theoretic planning. We give an overview of the DRIPS decision-theoretic refinement planning system, which uses abstraction to efficiently identify optimal plans. We present techniques for automatically generating search control information, which can significantly improve the planner's performance. We evaluate the efficiency of DRIPS both with and without the search control rules on a complex medical planning problem and compare its performance to that of a branch-and-bound decision tree algorithm.
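One way abstraction can narrow the search for an optimal plan is by interval dominance: if each abstract plan is evaluated to a range of expected utility that bounds all of its concrete refinements, any plan whose upper bound falls below another plan's lower bound can be discarded without refining it. The sketch below illustrates that idea only. The interval representation, the function name `prune_dominated`, and the sample values are assumptions for illustration and are not taken from the abstract.

```python
from typing import Dict, List, Tuple

# (lower bound, upper bound) on expected utility; an assumed representation.
Interval = Tuple[float, float]


def prune_dominated(plans: Dict[str, Interval]) -> List[str]:
    """Return the names of plans that are not dominated.

    A plan is dominated when its upper bound is strictly below the best
    lower bound among all candidate plans.
    """
    best_lower = max(lower for lower, _ in plans.values())
    return [name for name, (_, upper) in plans.items() if upper >= best_lower]


# Hypothetical abstract plans with expected-utility ranges.
candidates = {
    "treat_all": (0.20, 0.50),
    "test_then_treat": (0.60, 0.90),
    "wait_and_see": (0.55, 0.70),
}
survivors = prune_dominated(candidates)
```

Here `treat_all` is dropped because its best case (0.50) is worse than the guaranteed value of `test_then_treat` (0.60); the two survivors would need further refinement before one could be chosen.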

Sound Abstraction of Probabilistic Actions in The Constraint Mass Assignment Framework

Feb 13, 2013

Abstract: This paper provides a formal and practical framework for sound abstraction of probabilistic actions. We start by precisely defining the concept of sound abstraction within the context of finite-horizon planning (where each plan is a finite sequence of actions). Next we show that such abstraction cannot be performed within the traditional probabilistic action representation, which models a world with a single probability distribution over the state space. We then present the constraint mass assignment representation, which models the world with a set of probability distributions and is a generalization of mass assignment representations. Within this framework, we present sound abstraction procedures for three types of action abstraction. We end the paper with discussions and related work on sound and approximate abstraction. We give pointers to papers in which we discuss other sound abstraction-related issues, including applications, estimating loss due to abstraction, and automatically generating abstraction hierarchies.
