Erik Altman

Graph Feature Preprocessor: Real-time Extraction of Subgraph-based Features from Transaction Graphs

Feb 13, 2024

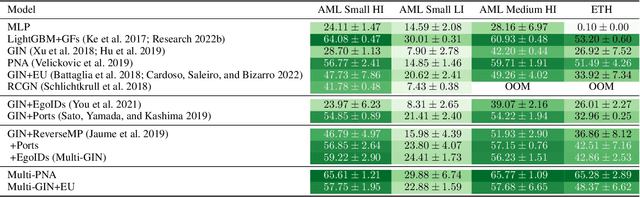

Abstract: In this paper, we present "Graph Feature Preprocessor", a software library for detecting typical money laundering and fraud patterns in financial transaction graphs in real time. These patterns are used to produce a rich set of transaction features for downstream machine learning training and inference tasks such as money laundering detection. We show that our enriched transaction features dramatically improve the prediction accuracy of gradient-boosting-based machine learning models. Our library exploits multicore parallelism, maintains a dynamic in-memory graph, and efficiently mines subgraph patterns in the incoming transaction stream, which enables it to be operated in a streaming manner. We evaluate our library using highly imbalanced synthetic anti-money laundering (AML) and real-life Ethereum phishing datasets. In these datasets, the proportion of illicit transactions is very small, which makes the learning process challenging. Our solution, which combines our Graph Feature Preprocessor and gradient-boosting-based machine learning models, is able to detect these illicit transactions with higher minority-class F1 scores than standard graph neural networks. In addition, the end-to-end throughput rate of our solution executed on a multicore CPU outperforms the graph neural network baselines executed on a powerful V100 GPU. Overall, the combination of high accuracy, a high throughput rate, and low latency of our solution demonstrates the practical value of our library in real-world applications. Graph Feature Preprocessor has been integrated into IBM mainframe software products, namely "IBM Cloud Pak for Data on Z" and "AI Toolkit for IBM Z and LinuxONE".

Realistic Synthetic Financial Transactions for Anti-Money Laundering Models

Jun 22, 2023

Abstract: With the widespread digitization of finance and the increasing popularity of cryptocurrencies, the sophistication of fraud schemes devised by cybercriminals is growing. Money laundering -- the movement of illicit funds to conceal their origins -- can cross bank and national boundaries, producing complex transaction patterns. The UN estimates that 2-5% of global GDP, or $0.8-2.0 trillion, is laundered globally each year. Unfortunately, real data to train machine learning models to detect laundering is generally not available, and previous synthetic data generators have had significant shortcomings. A realistic, standardized, publicly available benchmark is needed for comparing models and for the advancement of the area. To this end, this paper contributes a synthetic financial transaction dataset generator and a set of synthetically generated AML (Anti-Money Laundering) datasets. We have calibrated this agent-based generator to match real transactions as closely as possible and made the datasets public. We describe the generator in detail and demonstrate how the datasets generated can help compare different Graph Neural Networks in terms of their AML abilities. In a key way, using synthetic data in these comparisons can be even better than using real data: the ground truth labels are complete, whilst many laundering transactions in real data are never detected.

Provably Powerful Graph Neural Networks for Directed Multigraphs

Jun 20, 2023

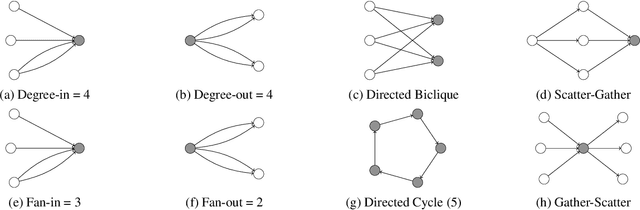

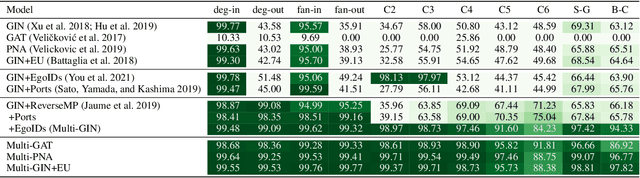

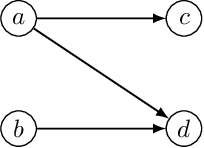

Abstract: This paper proposes a set of simple adaptations to transform standard message-passing Graph Neural Networks (GNN) into provably powerful directed multigraph neural networks. The adaptations include multigraph port numbering, ego IDs, and reverse message passing. We prove that the combination of these theoretically enables the detection of any directed subgraph pattern. To validate the effectiveness of our proposed adaptations in practice, we conduct experiments on synthetic subgraph detection tasks, which demonstrate outstanding performance with almost perfect results. Moreover, we apply our proposed adaptations to two financial crime analysis tasks. We observe dramatic improvements in detecting money laundering transactions, improving the minority-class F1 score of a standard message-passing GNN by up to 45%, and clearly outperforming tree-based and GNN baselines. Similarly impressive results are observed on a real-world phishing detection dataset, boosting a standard GNN's F1 score by over 15% and outperforming all baselines.

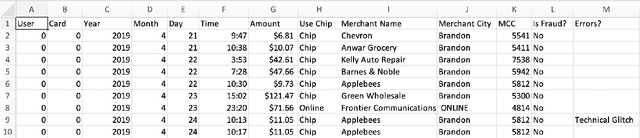

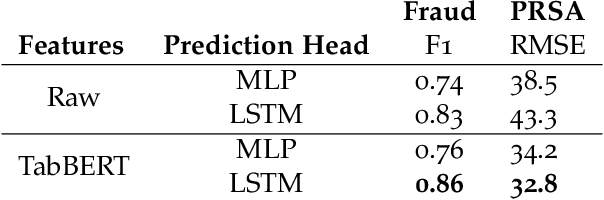

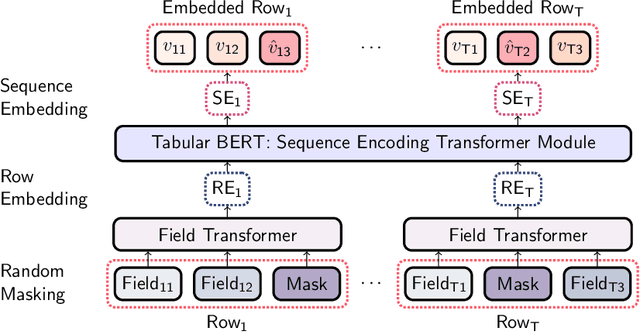

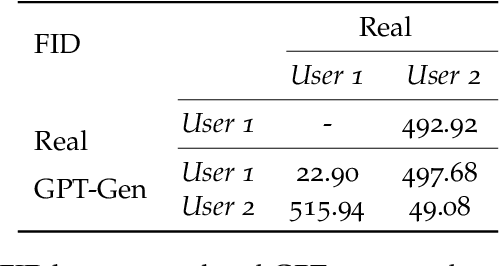

Tabular Transformers for Modeling Multivariate Time Series

Nov 03, 2020

Abstract: Tabular datasets are ubiquitous in data science applications. Given their importance, it seems natural to apply state-of-the-art deep learning algorithms in order to fully unlock their potential. Here we propose neural network models that represent tabular time series that can optionally leverage their hierarchical structure. This results in two architectures for tabular time series: one for learning representations that is analogous to BERT and can be pre-trained end-to-end and used in downstream tasks, and one that is akin to GPT and can be used for generation of realistic synthetic tabular sequences. We demonstrate our models on two datasets: a synthetic credit card transaction dataset, where the learned representations are used for fraud detection and synthetic data generation, and on a real pollution dataset, where the learned encodings are used to predict atmospheric pollutant concentrations. Code and data are available at https://github.com/IBM/TabFormer.

Understanding AI Data Repositories with Automatic Query Generation

Apr 20, 2018

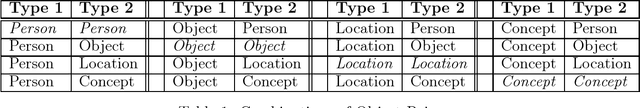

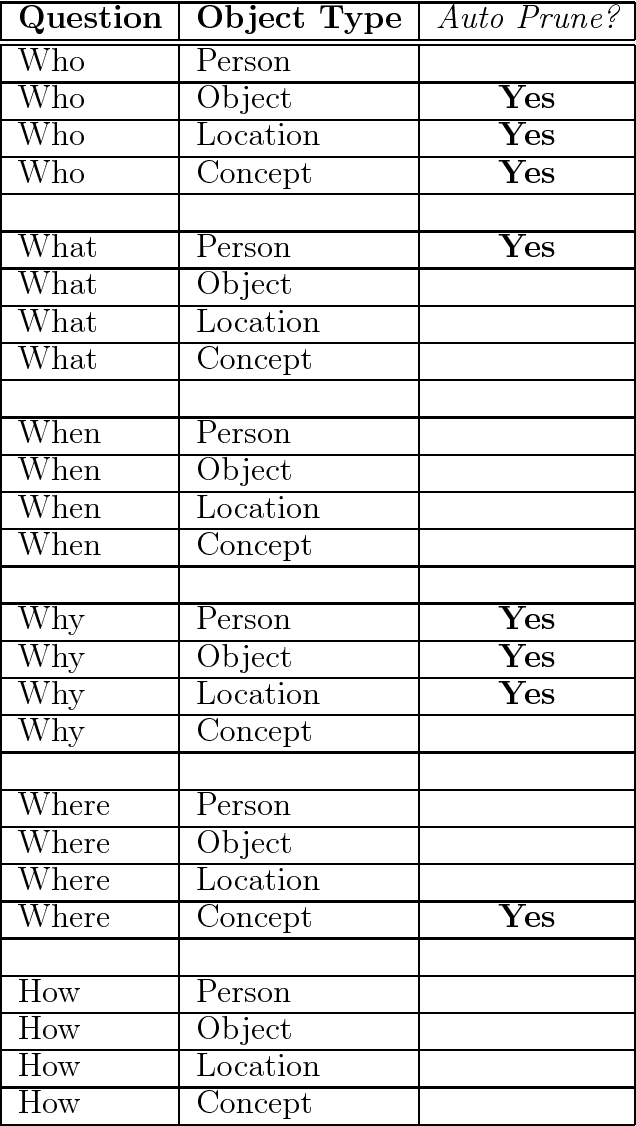

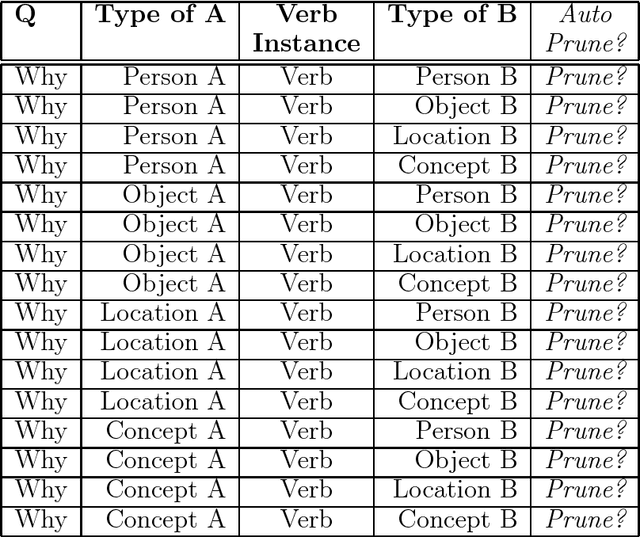

Abstract: We describe a set of techniques to generate queries automatically based on one or more ingested, input corpuses. These queries require no a priori domain knowledge, and hence no human domain experts. Thus, these auto-generated queries help address the epistemological question of how we know what we know, or more precisely in this case, how an AI system with ingested data knows what it knows. These auto-generated queries can also be used to identify and remedy problem areas in ingested material -- areas for which the knowledge of the AI system is incomplete or even erroneous. Similarly, the proposed techniques facilitate tests of AI capability -- both in terms of coverage and accuracy. By removing humans from the main learning loop, our approach also allows more effective scaling of AI and cognitive capabilities to provide (1) broader coverage in a single domain such as health or geology; and (2) more rapid deployment to new domains. The proposed techniques also allow ingested knowledge to be extended naturally. Our investigations are early, and this paper provides a description of the techniques. Assessment of their efficacy is our next step for future work.
