Erica Tevere

AI Space Cortex: An Experimental System for Future Era Space Exploration

Jul 09, 2025

Abstract: Our Robust, Explainable Autonomy for Scientific Icy Moon Operations (REASIMO) effort contributes to NASA's Concepts for Ocean worlds Life Detection Technology (COLDTech) program, which explores science platform technologies for ocean worlds such as Europa and Enceladus. Ocean world missions pose significant operational challenges. These include long communication lags, limited power, and lifetime limitations caused by radiation damage and hostile conditions. Given these operational limitations, onboard autonomy will be vital for future Ocean world missions. Besides the management of nominal lander operations, onboard autonomy must react appropriately in the event of anomalies. Traditional spacecraft rely on a transition into 'safe-mode' in which non-essential components and subsystems are powered off to preserve safety and maintain communication with Earth. For a severely time-limited Ocean world mission, resolutions to these anomalies that can be executed without Earth-in-the-loop communication and associated delays are paramount for completion of the mission objectives and science goals. To address these challenges, the REASIMO effort aims to demonstrate a robust level of AI-assisted autonomy for such missions, including the ability to detect and recover from anomalies, and to perform missions based on pre-trained behaviors rather than hard-coded, predetermined logic like all prior space missions. We developed an AI-assisted, personality-driven, intelligent framework for control of an Ocean world mission by combining a mix of advanced technologies. To demonstrate the capabilities of the framework, we perform tests of autonomous sampling operations on a lander-manipulator testbed at the NASA Jet Propulsion Laboratory, approximating possible surface conditions such a mission might encounter.

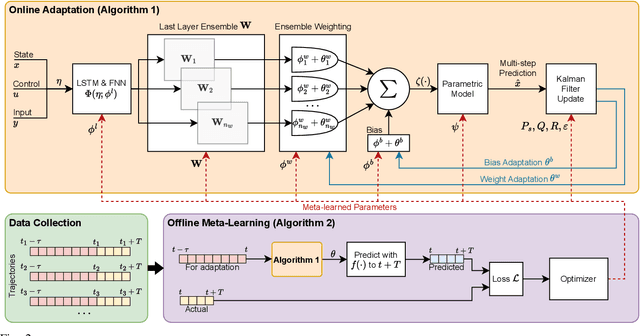

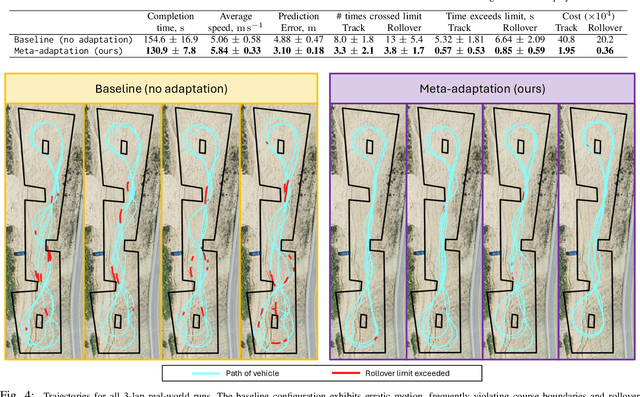

Meta-Learning Online Dynamics Model Adaptation in Off-Road Autonomous Driving

Apr 23, 2025

Abstract: High-speed off-road autonomous driving presents unique challenges due to complex, evolving terrain characteristics and the difficulty of accurately modeling terrain-vehicle interactions. While dynamics models used in model-based control can be learned from real-world data, they often struggle to generalize to unseen terrain, making real-time adaptation essential. We propose a novel framework that combines a Kalman filter-based online adaptation scheme with meta-learned parameters to address these challenges. Offline meta-learning optimizes the basis functions along which adaptation occurs, as well as the adaptation parameters, while online adaptation dynamically adjusts the onboard dynamics model in real time for model-based control. We validate our approach through extensive experiments, including real-world testing on a full-scale autonomous off-road vehicle, demonstrating that our method outperforms baseline approaches in prediction accuracy, performance, and safety metrics, particularly in safety-critical scenarios. Our results underscore the effectiveness of meta-learned dynamics model adaptation, advancing the development of reliable autonomous systems capable of navigating diverse and unseen environments. Video is available at: https://youtu.be/cCKHHrDRQEA
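The online half of this idea can be illustrated with a minimal sketch, assuming a scalar measurement that is linear in a small weight vector over fixed basis functions. This is not the authors' implementation: the hand-picked `basis` function stands in for the meta-learned basis, and the names `kalman_update` and `run_demo`, the noise values `q` and `r`, and the toy "terrain" weights are all hypothetical choices made for illustration.

```python
# Hypothetical sketch: Kalman-filter adaptation of weights on fixed basis functions.
# The basis, noise values, and all names are illustrative assumptions, not the paper's.

def basis(x):
    """Stand-in for meta-learned basis functions: a bias term and a linear term."""
    return [1.0, x]

def kalman_update(w, P, phi, y, q=1e-4, r=1e-2):
    """One predict/update step for the model y = phi . w + noise.

    w: weight estimate, P: weight covariance (list of rows),
    q: random-walk process noise, r: measurement noise variance.
    """
    n = len(w)
    # Predict: weights follow a random walk, so only the covariance grows.
    P = [[P[i][j] + (q if i == j else 0.0) for j in range(n)] for i in range(n)]
    # Innovation: measured value minus the current prediction.
    innovation = y - sum(phi[i] * w[i] for i in range(n))
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    s = sum(phi[i] * P_phi[i] for i in range(n)) + r
    gain = [P_phi[i] / s for i in range(n)]
    w_new = [w[i] + gain[i] * innovation for i in range(n)]
    # P is symmetric, so the j-th entry of phi^T P equals P_phi[j].
    P_new = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]
    return w_new, P_new

def run_demo():
    true_w = [2.0, -1.0]  # toy "terrain" parameters the filter should recover
    w = [0.0, 0.0]
    P = [[1.0, 0.0], [0.0, 1.0]]
    xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
    for step in range(50):
        phi = basis(xs[step % len(xs)])
        y = sum(p * t for p, t in zip(phi, true_w))  # noiseless measurement
        w, P = kalman_update(w, P, phi, y)
    return w

estimate = run_demo()
```

In this toy setting the estimate moves from zero toward the true weights as measurements arrive. The process noise `q` keeps the covariance from collapsing, which is what would let such a filter keep adapting if the underlying weights changed, for example when the terrain changes.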

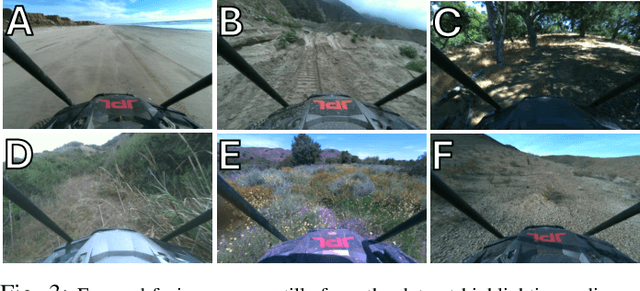

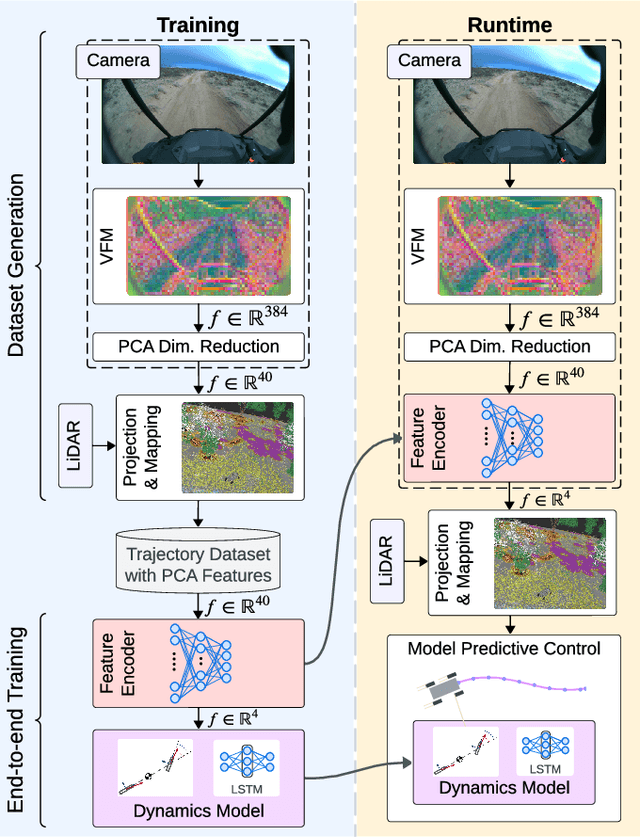

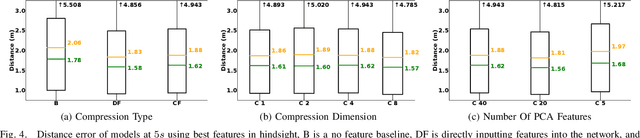

Dynamics Modeling using Visual Terrain Features for High-Speed Autonomous Off-Road Driving

Nov 30, 2024

Abstract: Rapid autonomous traversal of unstructured terrain is essential for scenarios such as disaster response, search and rescue, or planetary exploration. As a vehicle navigates at the limit of its capabilities over extreme terrain, its dynamics can change suddenly and dramatically. For example, high-speed and varying terrain can affect parameters such as traction, tire slip, and rolling resistance. To achieve effective planning in such environments, it is crucial to have a dynamics model that can accurately anticipate these conditions. In this work, we present a hybrid model that predicts the changing dynamics induced by the terrain as a function of visual inputs. We leverage a pre-trained visual foundation model (VFM) DINOv2, which provides rich features that encode fine-grained semantic information. To use this dynamics model for planning, we propose an end-to-end training architecture for a projection distance independent feature encoder that compresses the information from the VFM, enabling the creation of a lightweight map of the environment at runtime. We validate our architecture on an extensive dataset (hundreds of kilometers of aggressive off-road driving) collected across multiple locations as part of the DARPA Robotic Autonomy in Complex Environments with Resiliency (RACER) program. https://www.youtube.com/watch?v=dycTXxEosMk

Learning and Autonomy for Extraterrestrial Terrain Sampling: An Experience Report from OWLAT Deployment

Dec 04, 2023

Abstract: Extraterrestrial autonomous lander missions increasingly demand adaptive capabilities to handle the unpredictable and diverse nature of the terrain. This paper discusses the deployment of a Deep Meta-Learning with Controlled Deployment Gaps (CoDeGa) trained model for terrain scooping tasks in the Ocean Worlds Lander Autonomy Testbed (OWLAT) at the NASA Jet Propulsion Laboratory. The CoDeGa-powered scooping strategy is designed to adapt to novel terrains, selecting scooping actions based on the available RGB-D image data and limited experience. The paper presents our experiences with transferring the scooping framework with a CoDeGa-trained model from a low-fidelity testbed to the high-fidelity OWLAT testbed. Additionally, it validates the method's performance in novel, realistic environments, and shares the lessons learned from deploying learning-based autonomy algorithms for space exploration. Experimental results from OWLAT substantiate the efficacy of CoDeGa in rapidly adapting to unfamiliar terrains and effectively making autonomous decisions under considerable domain shifts, thereby endorsing its potential utility in future extraterrestrial missions.
