Enmeng Lu

Redefining Superalignment: From Weak-to-Strong Alignment to Human-AI Co-Alignment to Sustainable Symbiotic Society

Apr 24, 2025

Abstract: Artificial Intelligence (AI) systems are becoming increasingly powerful and autonomous, and may progress to surpass human intelligence levels, namely Artificial Superintelligence (ASI). During the progression from AI to ASI, AI may exceed human control, violate human values, and even lead to irreversible catastrophic consequences in extreme cases. This gives rise to a pressing issue that needs to be addressed: superalignment, ensuring that AI systems much smarter than humans remain aligned with human (compatible) intentions and values. Existing scalable oversight and weak-to-strong generalization methods may prove substantially infeasible and inadequate when facing ASI. We must explore safer and more pluralistic frameworks and approaches for superalignment. In this paper, we redefine superalignment as human-AI co-alignment towards a sustainable symbiotic society, and highlight a framework that integrates external oversight and intrinsic proactive alignment. External oversight superalignment should be grounded in human-centered ultimate decision, supplemented by interpretable automated evaluation and correction, to achieve continuous alignment with humanity's evolving values. Intrinsic proactive superalignment is rooted in a profound understanding of the self, others, and society, integrating self-awareness, self-reflection, and empathy to spontaneously infer human intentions, distinguish good from evil, and proactively consider human well-being, ultimately attaining human-AI co-alignment through iterative interaction. The integration of externally-driven oversight with intrinsically-driven proactive alignment empowers sustainable symbiotic societies through human-AI co-alignment, paving the way for achieving safe and beneficial AGI and ASI for good, for humanity, and for a symbiotic ecology.

AI Governance InternationaL Evaluation Index (AGILE Index)

Feb 26, 2025

Abstract: The rapid advancement of Artificial Intelligence (AI) technology is profoundly transforming human society and concurrently presenting a series of ethical, legal, and social issues. The effective governance of AI has become a crucial global concern. Since 2022, the extensive deployment of generative AI, particularly large language models, has marked a new phase in AI governance. The international community is making continuous efforts to address the novel challenges posed by these AI developments. As consensus on international governance continues to be established and put into action, the practical importance of conducting a global assessment of the state of AI governance is progressively coming to light. In this context, we initiated the development of the AI Governance InternationaL Evaluation Index (AGILE Index). Adhering to the design principle, "the level of governance should match the level of development," the inaugural evaluation of the AGILE Index commences with an exploration of four foundational pillars: the development level of AI, the AI governance environment, the AI governance instruments, and the AI governance effectiveness. It covers 39 indicators across 18 dimensions to comprehensively assess the AI governance level of 14 representative countries, which form the first batch of evaluation. The aim is to depict the current state of AI governance in these countries through data scoring, assist them in identifying their governance stage and uncovering governance issues, and ultimately offer insights for the enhancement of their AI governance systems.

MTDP: Modulated Transformer Diffusion Policy Model

Feb 13, 2025

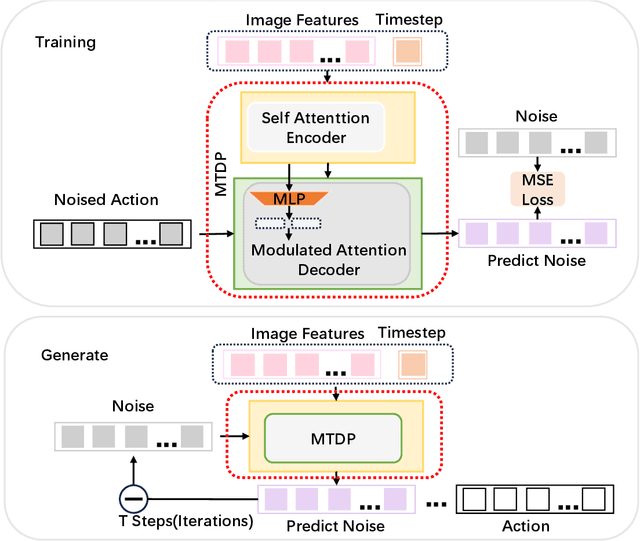

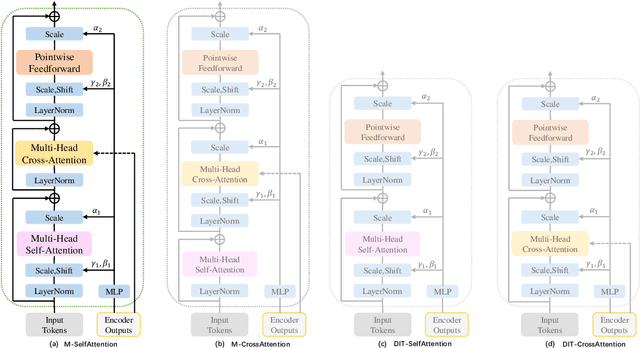

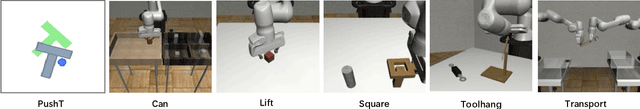

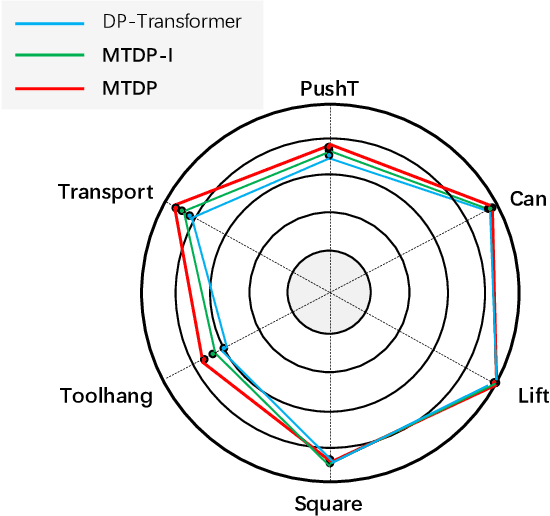

Abstract: Recent research on robot manipulation based on Behavior Cloning (BC) has made significant progress. By combining diffusion models with BC, diffusion policy has been proposed, enabling robots to quickly learn manipulation tasks with high success rates. However, integrating diffusion policy with a high-capacity Transformer presents challenges: traditional Transformer architectures struggle to effectively integrate guiding conditions, resulting in poor performance in manipulation tasks when using Transformer-based models. In this paper, we investigate key architectural designs of Transformers and improve the traditional Transformer architecture by proposing the Modulated Transformer Diffusion Policy (MTDP) model for diffusion policy. The core of this model is our proposed Modulated Attention module, which more effectively integrates the guiding conditions with the main input, improving the generative model's output quality and, consequently, increasing the robot's task success rate. In six experimental tasks, MTDP outperformed existing Transformer model architectures, particularly in the Toolhang experiment, where the success rate increased by 12%. To verify the generality of Modulated Attention, we applied it to the UNet architecture to construct the Modulated UNet Diffusion Policy model (MUDP), which also achieved higher success rates than existing UNet architectures across all six experiments. The Diffusion Policy uses Denoising Diffusion Probabilistic Models (DDPM) as the diffusion model. Building on this, we also explored Denoising Diffusion Implicit Models (DDIM) as the diffusion model, constructing the MTDP-I and MUDP-I models, which nearly doubled the generation speed while maintaining performance.
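As a rough illustration of why swapping DDPM for DDIM can speed up generation, the sketch below builds the descending timestep schedule a sampler would walk through. DDIM can denoise over an evenly strided subsequence of the training timesteps, so each skipped timestep saves one network evaluation. The function name `ddim_timesteps` and the step counts (100 training steps, 50 inference steps) are assumptions chosen for illustration; the abstract does not state the schedules actually used.

```python
def ddim_timesteps(num_train_steps: int, num_inference_steps: int) -> list[int]:
    """Return a descending, evenly strided subsequence of training timesteps.

    Hypothetical helper for illustration only; not taken from the paper.
    """
    if not 0 < num_inference_steps <= num_train_steps:
        raise ValueError("need 0 < num_inference_steps <= num_train_steps")
    stride = num_train_steps // num_inference_steps
    # Ascending strided steps, truncated to the requested count.
    steps = list(range(0, num_train_steps, stride))[:num_inference_steps]
    # Sampling runs from the noisiest timestep down to timestep 0.
    return steps[::-1]


# Assumed step counts: a full schedule versus a half-length strided schedule.
ddpm_schedule = ddim_timesteps(100, 100)
ddim_schedule = ddim_timesteps(100, 50)
print(len(ddpm_schedule), len(ddim_schedule))
```

With these assumed numbers the strided schedule needs half as many denoising network evaluations, which is one plausible source of a roughly twofold speedup.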

Autonomous Alignment with Human Value on Altruism through Considerate Self-imagination and Theory of Mind

Jan 07, 2025

Abstract: With the widespread application of Artificial Intelligence (AI) in human society, enabling AI to autonomously align with human values has become a pressing issue to ensure its sustainable development and benefit to humanity. One of the most important aspects of aligning with human values is the necessity for agents to autonomously make altruistic, safe, and ethical decisions, considering and caring for human well-being. Current AI single-mindedly pursues superiority in certain tasks while remaining indifferent to the surrounding environment and other agents, which has led to numerous safety risks. Altruistic behavior in human society originates from humans' capacity to empathize with others, known as Theory of Mind (ToM), combined with predictive imaginative interactions before taking action to produce thoughtful and altruistic behaviors. Inspired by this, we aim to endow agents with considerate self-imagination and ToM capabilities, driving them through implicit intrinsic motivations to autonomously align with human altruistic values. By integrating ToM within the imaginative space, agents keep an eye on the well-being of other agents in real time, proactively anticipate potential risks to themselves and others, and make thoughtful altruistic decisions that balance negative effects on the environment. The ancient Chinese story of Sima Guang Smashes the Vat, in which the young Sima Guang smashes a vat to save a child who had accidentally fallen into it, illustrates such moral behavior and is an excellent reference scenario for this paper. We design an experimental scenario similar to Sima Guang Smashes the Vat and its variants with different complexities, which reflect the trade-offs and comprehensive considerations between self-goals, altruistic rescue, and avoiding negative side effects.

Brain-inspired Action Generation with Spiking Transformer Diffusion Policy Model

Nov 15, 2024

Abstract: Spiking Neural Networks (SNNs) have the ability to extract spatio-temporal features due to their spiking sequences. However, previous research has primarily focused on image classification and reinforcement learning. In this paper, we put forward a novel diffusion policy model based on Spiking Transformer Neural Networks and the Denoising Diffusion Probabilistic Model (DDPM): the Spiking Transformer Modulate Diffusion Policy Model (STMDP), a new brain-inspired model for generating robot action trajectories. To improve the performance of this model, we develop a novel decoder module, the Spiking Modulate Decoder (SMD), which replaces the traditional Decoder module within the Transformer architecture. Additionally, we explored the substitution of DDPM with Denoising Diffusion Implicit Models (DDIM) in our framework. We conducted experiments across four robotic manipulation tasks and performed ablation studies on the modulate block. Our model consistently outperforms existing Transformer-based diffusion policy methods. In particular, in the Can task we achieved an improvement of 8%. The proposed STMDP method integrates SNNs, diffusion models, and the Transformer architecture, offering new perspectives and promising directions for exploration in brain-inspired robotics.

Building Altruistic and Moral AI Agent with Brain-inspired Affective Empathy Mechanisms

Oct 29, 2024

Abstract: As AI closely interacts with human society, it is crucial to ensure that its decision-making is safe, altruistic, and aligned with human ethical and moral values. However, existing research on embedding ethical and moral considerations into AI remains insufficient, and previous external constraints based on principles and rules are inadequate to provide AI with long-term stability and generalization capabilities. In contrast, intrinsic altruistic motivation based on empathy is more willing, spontaneous, and robust. Therefore, this paper is dedicated to autonomously driving intelligent agents to acquire moral behaviors through human-like affective empathy mechanisms. We draw inspiration from the neural mechanism of the human brain's moral intuitive decision-making, and simulate the mirror neuron system to construct a brain-inspired affective empathy-driven altruistic decision-making model. Here, empathy directly impacts dopamine release to form intrinsic altruistic motivation. Based on the principle of moral utilitarianism, we design a moral reward function that integrates intrinsic empathy and extrinsic self-task goals. A comprehensive experimental scenario incorporating empathetic processes, personal objectives, and altruistic goals is developed. The proposed model enables the agent to make consistent moral decisions (prioritizing altruism) by balancing self-interest with the well-being of others. We further introduce inhibitory neurons to regulate different levels of empathy and verify the positive correlation between empathy levels and altruistic preferences, yielding conclusions consistent with findings from psychological behavioral experiments. This work provides a feasible solution for the development of ethical AI by leveraging intrinsic human-like empathy mechanisms, and contributes to the harmonious coexistence between humans and AI.
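The idea of a reward that integrates intrinsic empathy with extrinsic self-task goals can be illustrated with a toy weighted sum. The sketch below is only an assumption about the general shape of such a function, not the paper's model: the names `moral_reward`, `preferred_action`, and `ACTIONS`, the additive form, and all reward values are hypothetical. It shows how raising an empathy weight can shift the preferred action from self-interest to helping.

```python
def moral_reward(task_reward: float, empathy_reward: float,
                 empathy_level: float = 1.0) -> float:
    """Toy combined reward: extrinsic task term plus weighted empathy term.

    The additive form and the weight are illustrative assumptions.
    """
    return task_reward + empathy_level * empathy_reward


def preferred_action(actions: dict[str, tuple[float, float]],
                     empathy_level: float) -> str:
    """Pick the action with the highest combined reward."""
    return max(actions,
               key=lambda name: moral_reward(*actions[name], empathy_level))


# Hypothetical (task_reward, empathy_reward) pairs for two candidate actions.
ACTIONS = {
    "pursue_own_goal": (1.0, 0.0),
    "help_other": (0.2, 1.5),
}

for level in (0.0, 1.0):
    print(level, preferred_action(ACTIONS, level))
```

In this toy setup the agent switches to the altruistic action once the empathy weight is large enough, mirroring the reported positive correlation between empathy level and altruistic preference only in spirit.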

Brain-inspired and Self-based Artificial Intelligence

Feb 29, 2024

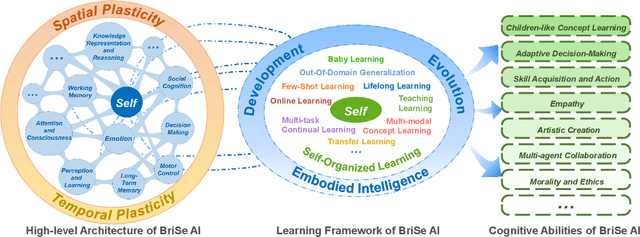

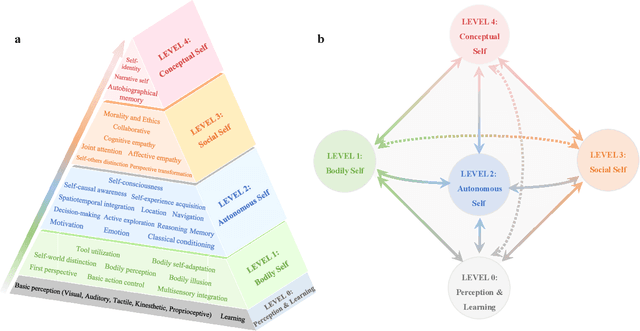

Abstract: The question "Can machines think?" and the Turing Test, which assesses whether machines could achieve human-level intelligence, are among the roots of AI. With the philosophical argument "I think, therefore I am", this paper challenges the idea of a "thinking machine" supported by current AIs, since there is no sense of self in them. Current artificial intelligence is only seemingly intelligent information processing: it does not truly understand, is not subjectively aware of itself, and does not perceive the world through a self as human intelligence does. In this paper, we introduce a Brain-inspired and Self-based Artificial Intelligence (BriSe AI) paradigm. This BriSe AI paradigm is dedicated to coordinating various cognitive functions and learning strategies in a self-organized manner to build human-level AI models and robotic applications. Specifically, BriSe AI emphasizes the crucial role of the Self in shaping future AI, rooted in a practical hierarchical Self framework, including Perception and Learning, Bodily Self, Autonomous Self, Social Self, and Conceptual Self. The hierarchical framework of the Self highlights self-based environment perception, self-bodily modeling, autonomous interaction with the environment, social interaction and collaboration with others, and even more abstract understanding of the Self. Furthermore, the positive mutual promotion and support among multiple levels of Self, as well as between Self and learning, enhance BriSe AI's conscious understanding of information and flexible adaptation to complex environments, serving as a driving force propelling BriSe AI towards real Artificial General Intelligence.

STREAM: Social data and knowledge collective intelligence platform for TRaining Ethical AI Models

Oct 09, 2023

Abstract: This paper presents the Social data and knowledge collective intelligence platform for TRaining Ethical AI Models (STREAM) to address the challenge of aligning AI models with human moral values, and to provide ethics datasets and knowledge bases that help AI models "follow good advice as naturally as a stream follows its course". By creating a comprehensive and representative platform that accurately mirrors the moral judgments of diverse groups including humans and AIs, we hope to effectively portray cultural and group variations, and capture the dynamic evolution of moral judgments over time, which in turn will facilitate the Establishment, Evaluation, Embedding, Embodiment, Ensemble, and Evolvement (6Es) of the moral capabilities of AI models. Currently, STREAM has already furnished a comprehensive collection of ethical scenarios, and amassed substantial moral judgment data annotated by volunteers and various popular Large Language Models (LLMs), collectively portraying the moral preferences and performances of both humans and AIs across a range of moral contexts. This paper will outline the current structure and construction of STREAM, explore its potential applications, and discuss its future prospects.

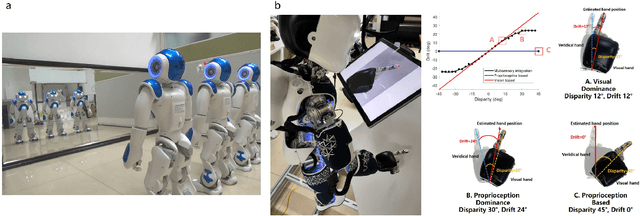

Brain-inspired bodily self-perception model that replicates the rubber hand illusion

Mar 28, 2023

Abstract: At the core of bodily self-consciousness is the perception of the ownership of one's body. Recent efforts to gain a deeper understanding of the mechanisms behind the brain's encoding of the self-body have led to various attempts to develop a unified theoretical framework to explain related behavioral and neurophysiological phenomena. A central question to be explained is how body illusions such as the rubber hand illusion actually occur. Despite the conceptual descriptions of the mechanisms of bodily self-consciousness and the possible relevant brain areas, the existing theoretical models still lack an explanation of the computational mechanisms by which the brain encodes the perception of one's body and how our subjectively perceived body illusions can be generated by neural networks. Here we integrate the biological findings of bodily self-consciousness to propose a Brain-inspired bodily self-perception model, by which perceptions of bodily self can be autonomously constructed without any supervision signals. We successfully validated our computational model with six rubber hand illusion experiments on platforms including an iCub humanoid robot and simulated environments. The experimental results show that our model can not only replicate well the behavioral and neural data of monkeys in biological experiments, but also reasonably explain the causes and results of the rubber hand illusion at the neuronal level thanks to its biological interpretability, thus helping to reveal the computational and neural mechanisms underlying the occurrence of the rubber hand illusion.

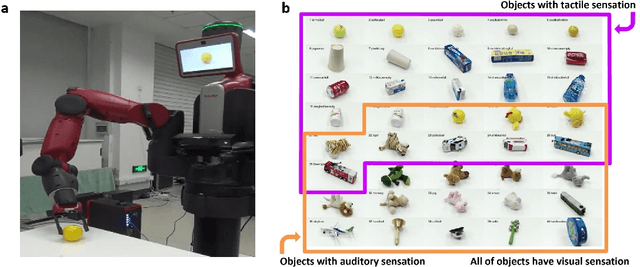

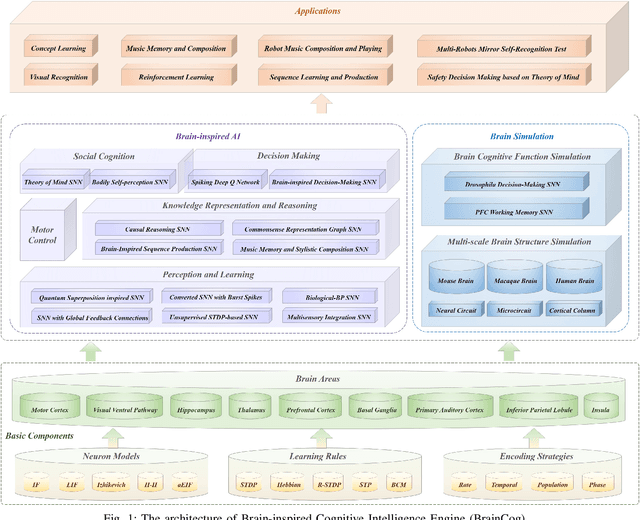

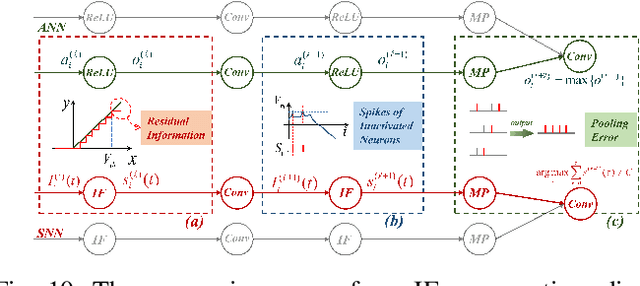

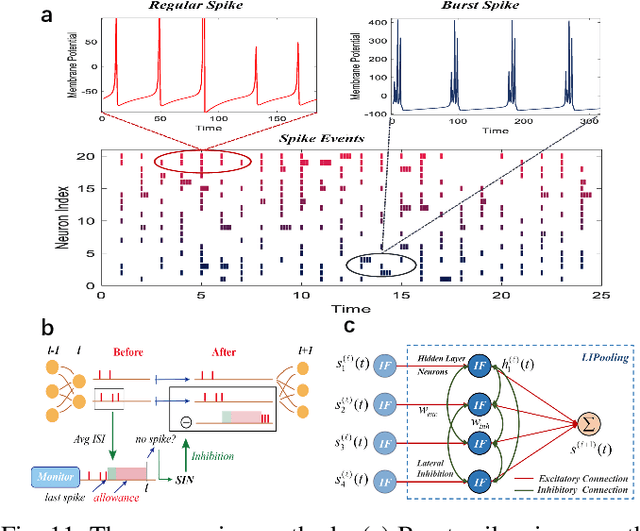

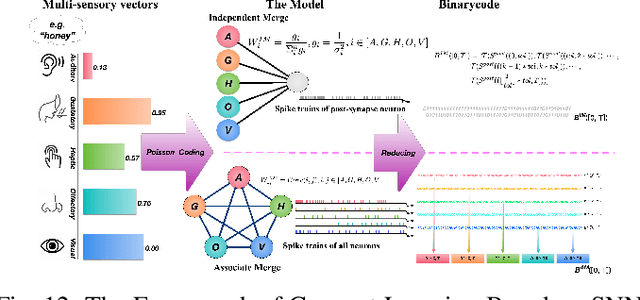

BrainCog: A Spiking Neural Network based Brain-inspired Cognitive Intelligence Engine for Brain-inspired AI and Brain Simulation

Jul 18, 2022

Abstract: Spiking neural networks (SNNs) have attracted extensive attention in Brain-inspired Artificial Intelligence and computational neuroscience. They can be used to simulate biological information processing in the brain at multiple scales. More importantly, SNNs serve as an appropriate level of abstraction to bring inspiration from brain and cognition to Artificial Intelligence. In this paper, we present the Brain-inspired Cognitive Intelligence Engine (BrainCog) for creating brain-inspired AI and brain simulation models. BrainCog incorporates different types of spiking neuron models, learning rules, brain areas, etc., as essential modules provided by the platform. Based on these easy-to-use modules, BrainCog supports various brain-inspired cognitive functions, including Perception and Learning, Decision Making, Knowledge Representation and Reasoning, Motor Control, and Social Cognition. These brain-inspired AI models have been effectively validated on various supervised, unsupervised, and reinforcement learning tasks, and they can be used to equip AI models with multiple brain-inspired cognitive functions. For brain simulation, BrainCog realizes the function simulation of decision-making and working memory, the structure simulation of the Neural Circuit, and whole brain structure simulation of the Mouse brain, Macaque brain, and Human brain. An AI engine named BORN is developed based on BrainCog, and it demonstrates how the components of BrainCog can be integrated and used to build AI models and applications. To enable the scientific quest to decode the nature of biological intelligence and create AI, BrainCog aims to provide essential and easy-to-use building blocks and infrastructural support to develop brain-inspired spiking neural network based AI, and to simulate the cognitive brains at multiple scales. The online repository of BrainCog can be found at https://github.com/braincog-x.
