Ehsan Nowroozi

Federated Learning Under Attack: Exposing Vulnerabilities through Data Poisoning Attacks in Computer Networks

Mar 05, 2024

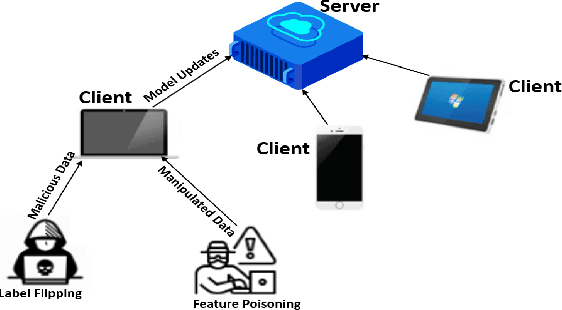

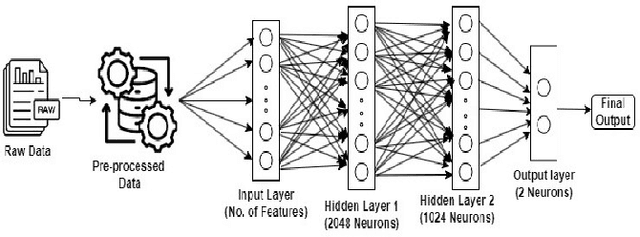

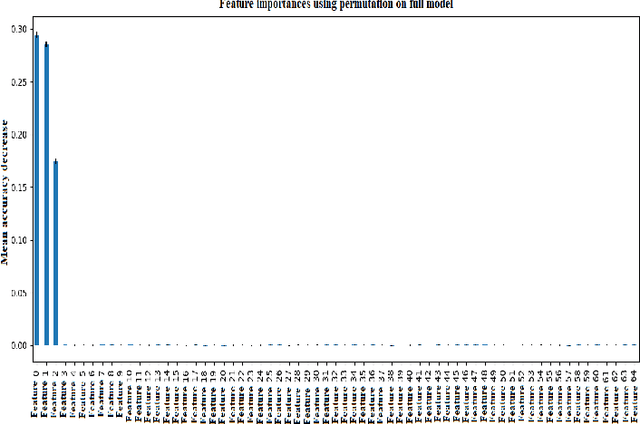

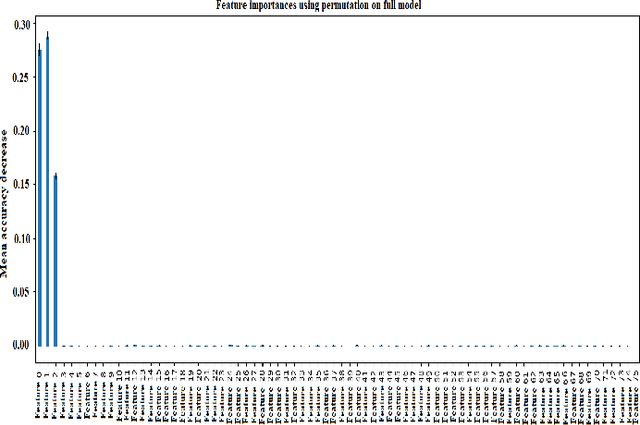

Abstract: Federated Learning (FL) is a machine learning (ML) approach that enables multiple decentralized devices or edge servers to collaboratively train a shared model without exchanging raw data. During the training and sharing of model updates between clients and servers, data and models are susceptible to different data-poisoning attacks. In this study, our motivation is to explore the severity of data-poisoning attacks in the computer network domain because they are easy to implement but difficult to detect. We considered two types of data-poisoning attacks, label flipping (LF) and feature poisoning (FP), and applied them with a novel approach. In LF, we randomly flipped the labels of benign data and trained the model on the manipulated data. For FP, we randomly manipulated the highly contributing features determined using the Random Forest algorithm. The datasets used in this experiment were the CIC and UNSW computer network datasets. We generated adversarial samples using the two attacks mentioned above, which were applied to a small percentage of each dataset. Subsequently, we trained and tested the accuracy of the model on the adversarial datasets. We recorded the results for both benign and manipulated datasets and observed significant differences between the accuracy of the models on different datasets. From the experimental results, it is evident that the LF attack failed, whereas the FP attack showed effective results, which proved its significance in fooling a server. With a 1% LF attack on the CIC dataset, the accuracy was approximately 0.0428 and the ASR was 0.9564; hence, the attack is easily detectable. With a 1% FP attack, the accuracy and ASR were both approximately 0.9600; hence, FP attacks are difficult to detect. We repeated the experiment with different poisoning percentages.
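
The two poisoning attacks named in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the toy data, the multiplicative-noise perturbation, and the `top_features` indices are all assumptions made for illustration. In the paper the important features are ranked with a Random Forest; here they are simply passed in as a list.

```python
import random

def flip_labels(labels, fraction, rng):
    """Return a copy of binary labels with `fraction` of them flipped (0 <-> 1)."""
    poisoned = list(labels)
    n_flip = int(round(fraction * len(poisoned)))
    for i in rng.sample(range(len(poisoned)), n_flip):
        poisoned[i] = 1 - poisoned[i]
    return poisoned

def poison_features(rows, fraction, top_features, rng, scale=0.5):
    """Return a copy of rows where `fraction` of the samples have their
    top-ranked feature columns perturbed by multiplicative noise.
    The noise model is an assumption, not the paper's method."""
    poisoned = [list(r) for r in rows]
    n_poison = int(round(fraction * len(poisoned)))
    for i in rng.sample(range(len(poisoned)), n_poison):
        for j in top_features:
            poisoned[i][j] *= 1.0 + rng.uniform(-scale, scale)
    return poisoned

rng = random.Random(0)

# Hypothetical toy dataset: 100 samples, 3 features, balanced binary labels.
labels = [0] * 50 + [1] * 50
rows = [[float(i), float(i % 7 + 1), 1.0] for i in range(100)]

flipped = flip_labels(labels, 0.10, rng)
poisoned_rows = poison_features(rows, 0.10, top_features=[0, 1], rng=rng)

n_changed_labels = sum(a != b for a, b in zip(labels, flipped))
n_changed_rows = sum(a != b for a, b in zip(rows, poisoned_rows))
print(n_changed_labels, n_changed_rows)
```

Both functions return copies, so the benign data stays available for the side-by-side comparison of benign and manipulated datasets that the abstract describes.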

Mitigating Label Flipping Attacks in Malicious URL Detectors Using Ensemble Trees

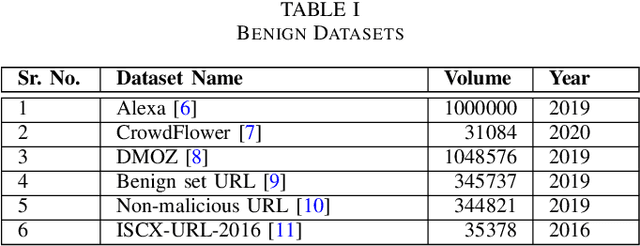

Mar 05, 2024

Abstract: Malicious URLs provide adversarial opportunities across various industries, including transportation, healthcare, energy, and banking, which could be detrimental to business operations. Consequently, the detection of these URLs is of crucial importance; however, current Machine Learning (ML) models are susceptible to backdoor attacks. These attacks involve manipulating a small percentage of training data labels, such as Label Flipping (LF), which changes benign labels to malicious ones and vice versa. This manipulation results in misclassification and leads to incorrect model behavior. Therefore, integrating defense mechanisms into the architecture of ML models is imperative to fortify them against potential attacks. The focus of this study is on backdoor attacks in the context of URL detection using ensemble trees. By illuminating the motivations behind such attacks, highlighting the roles of attackers, and emphasizing the critical importance of effective defense strategies, this paper contributes to the ongoing efforts to fortify ML models against adversarial threats within the ML domain in network security. We propose an innovative alarm system that detects the presence of poisoned labels and a defense mechanism designed to uncover the original class labels, with the aim of mitigating backdoor attacks on ensemble tree classifiers. We conducted a case study using the Alexa and Phishing Site URL datasets and showed that LF attacks can be addressed using our proposed defense mechanism. Our experimental results show that the LF attack achieved an Attack Success Rate (ASR) between 50-65% within 2-5%, and the innovative defense method successfully detected poisoned labels with an accuracy of up to 100%.

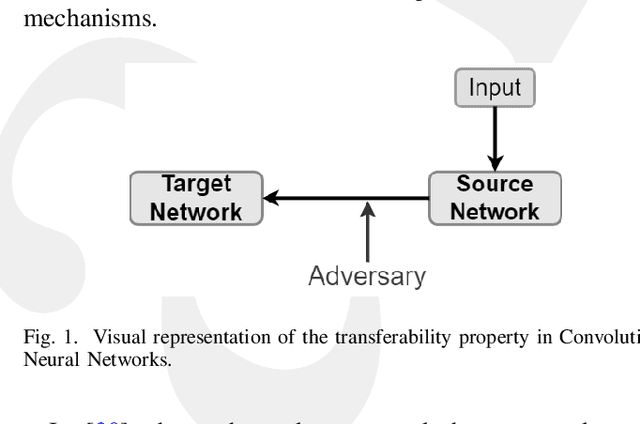

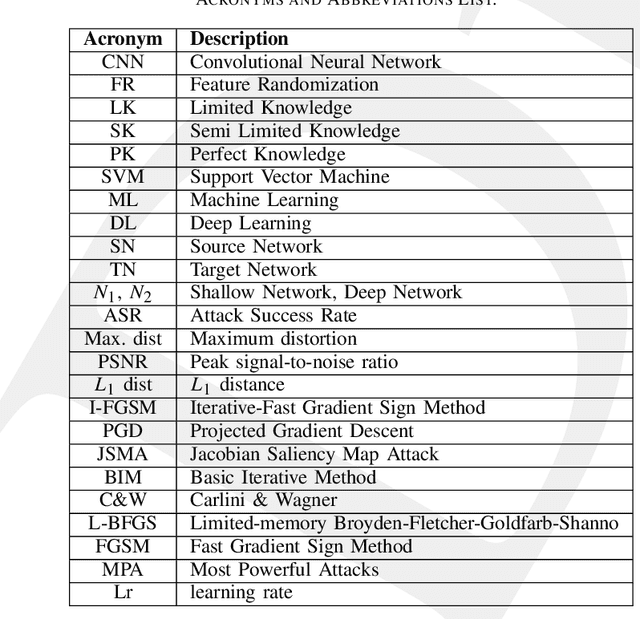

Unscrambling the Rectification of Adversarial Attacks Transferability across Computer Networks

Oct 26, 2023

Abstract: Convolutional neural network (CNN) models play a vital role in achieving state-of-the-art performance in various technological fields. CNNs are not limited to Natural Language Processing (NLP) or Computer Vision (CV) but also have substantial applications in other technological domains, particularly in cybersecurity. The reliability of CNN models can be compromised because of their susceptibility to adversarial attacks, which can be generated effortlessly, easily applied, and transferred in real-world scenarios. In this paper, we present a novel and comprehensive method to improve the strength of attacks and assess the transferability of adversarial examples in CNNs when such strength changes, as well as whether the transferability property issue exists in computer network applications. In the context of our study, we initially examined six distinct modes of attack: the Carlini and Wagner (C&W), Fast Gradient Sign Method (FGSM), Iterative Fast Gradient Sign Method (I-FGSM), Jacobian-based Saliency Map (JSMA), Limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS), and Projected Gradient Descent (PGD) attacks. We applied these attack techniques to two popular datasets: the CIC and UNSW datasets. The outcomes of our experiment demonstrate that an improvement in transferability occurs in the targeted scenarios for FGSM, JSMA, L-BFGS, and other attacks. Our findings further indicate that the threats to security posed by adversarial examples, even in computer network applications, necessitate the development of novel defense mechanisms to enhance the security of DL-based techniques.
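
As a rough illustration of the simplest attack in this list, the sketch below applies one FGSM step, `x_adv = x + eps * sign(dLoss/dx)`, to a toy logistic-regression model. The paper attacks CNNs; logistic regression is swapped in here only so the gradient can be written in closed form without a deep learning library. The weights, the sample, and `eps` are hypothetical values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """One-step FGSM against logistic regression.
    For cross-entropy loss the input gradient is (p - y) * w."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and a sample whose true label is 1.
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.4, 0.2, 0.3], 1

x_adv = fgsm(w, b, x, y, eps=0.3)
p_clean = predict_proba(w, b, x)
p_adv = predict_proba(w, b, x_adv)
print(p_clean > 0.5, p_adv > 0.5)
```

I-FGSM and PGD repeat this step with a smaller step size and, for PGD, project the result back into the allowed perturbation range after each iteration.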

Spritz-PS: Validation of Synthetic Face Images Using a Large Dataset of Printed Documents

Apr 06, 2023

Abstract: The capability of doing effective forensic analysis on printed and scanned (PS) images is essential in many applications. PS documents may be used to conceal image artifacts that stem from the synthetic nature of images, since these artifacts are typically present in manipulated images and the main artifacts in synthetic images can be removed by the PS process. Due to the appeal of Generative Adversarial Networks (GANs), synthetic face images generated with GAN models are difficult to differentiate from genuine human faces and may be used to create counterfeit identities. Additionally, since GAN models do not account for physiological constraints when generating human faces and their impact on human IRISes, distinguishing genuine from synthetic IRISes in the PS scenario becomes extremely difficult. Given the lack of large-scale reference IRIS datasets in the PS scenario, we aim to develop a novel dataset to become a standard for Multimedia Forensics (MFs) investigation, which is available at [45]. In this paper, we provide a novel dataset made up of a large number of synthetic and natural printed IRISes taken from VIPPrint Printed and Scanned face images. We extracted irises from face images; because of eyelid occlusion, the extracted irises may be incomplete. To fill the missing pixels of the extracted irises, we applied techniques to discover the complex link between the iris images. To highlight the problems involved with the evaluation of the dataset's IRIS images, we conducted a large number of analyses employing Siamese Neural Networks, such as ResNet50, Xception, VGG16, and MobileNet-v2, to assess the similarities between genuine and synthetic human IRISes. For instance, using the Xception network, we achieved 56.76% similarity of IRISes for synthetic images and 92.77% similarity of IRISes for real images.

SPRITZ-1.5C: Employing Deep Ensemble Learning for Improving the Security of Computer Networks against Adversarial Attacks

Sep 25, 2022

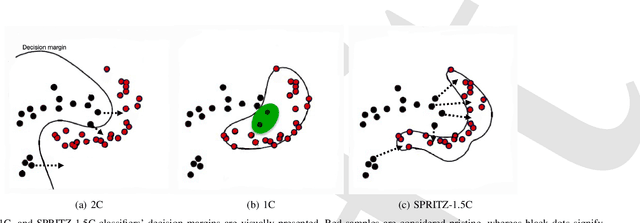

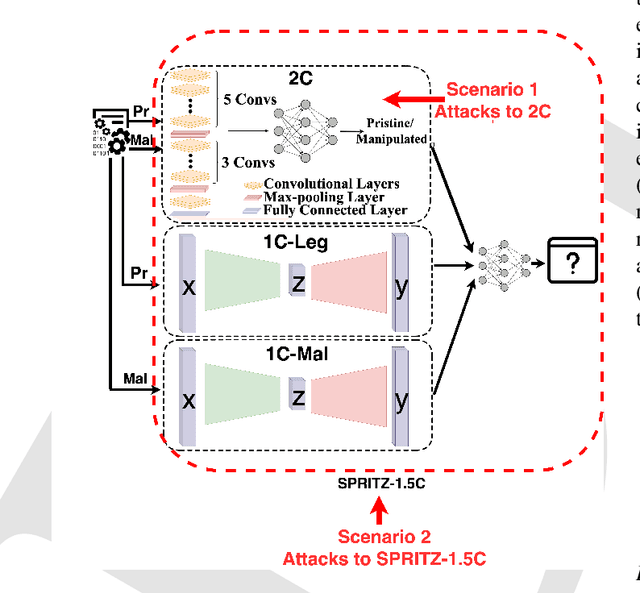

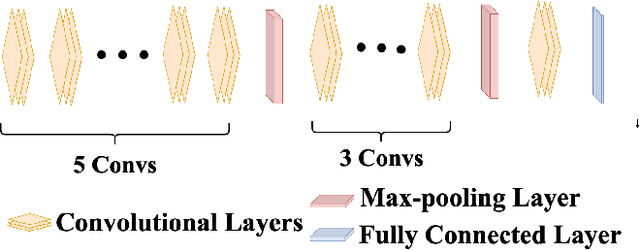

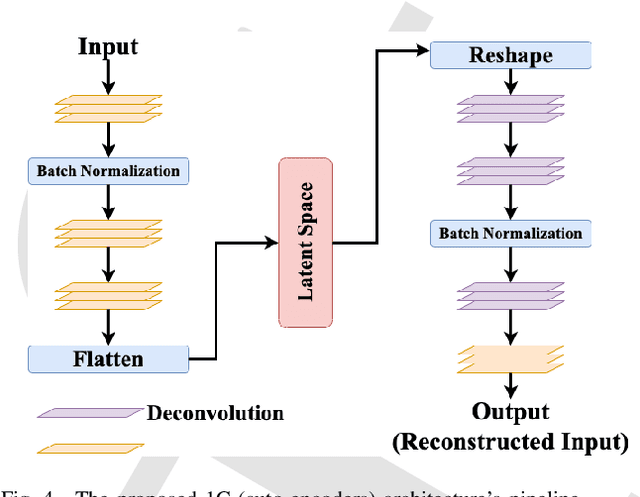

Abstract: In the past few years, Convolutional Neural Networks (CNNs) have demonstrated promising performance in various real-world cybersecurity applications, such as network and multimedia security. However, the underlying fragility of CNN structures poses major security problems, making them inappropriate for use in security-oriented applications such as computer networks. Protecting these architectures from adversarial attacks necessitates using security-wise architectures that are challenging to attack. In this study, we present a novel architecture based on an ensemble classifier that combines the enhanced security of 1-Class classification (known as 1C) with the high performance of conventional 2-Class classification (known as 2C) in the absence of attacks. Our architecture is referred to as the 1.5-Class (SPRITZ-1.5C) classifier and is constructed using a final dense classifier, one 2C classifier (i.e., CNNs), and two parallel 1C classifiers (i.e., auto-encoders). In our experiments, we evaluated the robustness of our proposed architecture by considering eight possible adversarial attacks in various scenarios. We performed these attacks on the 2C and SPRITZ-1.5C architectures separately. The experimental results of our study showed that the Attack Success Rate (ASR) of the I-FGSM attack against a 2C classifier trained with the N-BaIoT dataset is 0.9900. In contrast, the ASR is 0.0000 for the SPRITZ-1.5C classifier.

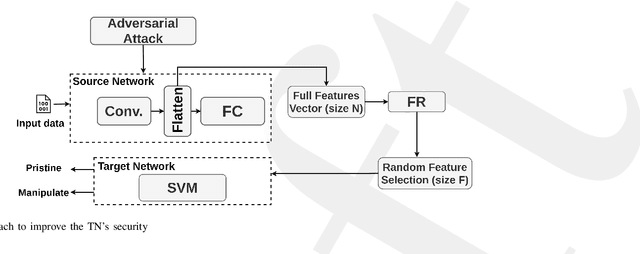

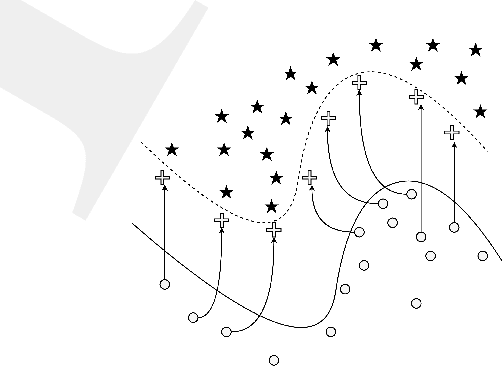

Resisting Deep Learning Models Against Adversarial Attack Transferability via Feature Randomization

Sep 11, 2022

Abstract: In the past decades, the rise of artificial intelligence has given us the capabilities to solve the most challenging problems in our day-to-day lives, such as cancer prediction and autonomous navigation. However, these applications might not be reliable if not secured against adversarial attacks. In addition, recent works demonstrated that some adversarial examples are transferable across different models. Therefore, it is crucial to avoid such transferability via robust models that resist adversarial manipulations. In this paper, we propose a feature randomization-based approach that resists eight adversarial attacks targeting deep learning models in the testing phase. Our novel approach consists of changing the training strategy in the target network classifier and selecting random feature samples. We consider an attacker under Limited-Knowledge and Semi-Knowledge conditions who undertakes the most prevalent types of adversarial attacks. We evaluate the robustness of our approach using the well-known UNSW-NB15 dataset, which includes realistic and synthetic attacks. Afterward, we demonstrate that our strategy outperforms existing state-of-the-art approaches, such as the Most Powerful Attack, which consists of fine-tuning the network model against specific adversarial attacks. Finally, our experimental results show that our methodology can secure the target network and resists adversarial attack transferability by over 60%.

An Adversarial Attack Analysis on Malicious Advertisement URL Detection Framework

Apr 27, 2022

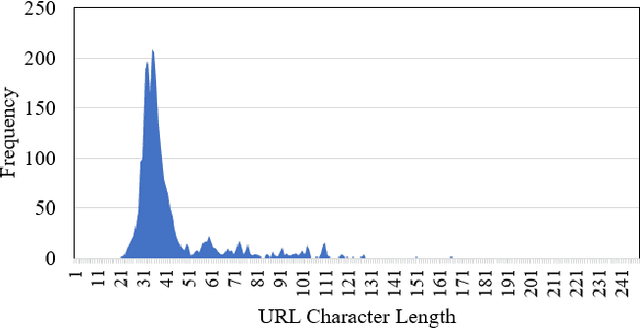

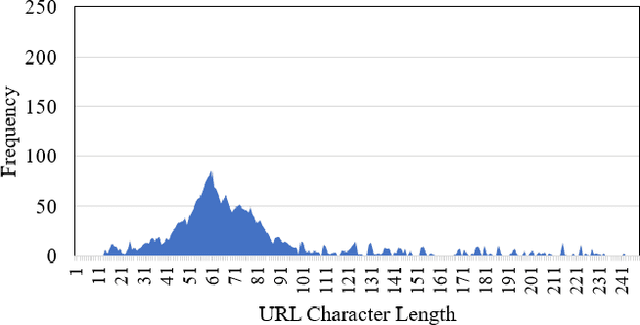

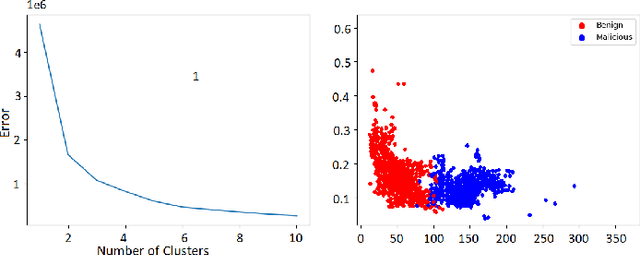

Abstract: Malicious advertisement URLs pose a security risk since they are the source of cyber-attacks, and the need to address this issue is growing in both industry and academia. Generally, the attacker delivers an attack vector to the user by means of an email, an advertisement link, or any other means of communication and directs them to a malicious website to steal sensitive information and to defraud them. Existing malicious URL detection techniques are limited in their ability to handle unseen features and to generalize to test data. In this study, we extract a novel set of lexical and web-scraped features and employ machine learning techniques to build a system for detecting fraudulent advertisement URLs. The combined set of six different kinds of features precisely overcomes the obfuscation in fraudulent URL classification. Based on different statistical properties, we use twelve differently formatted datasets for detection, prediction, and classification tasks. We extend our prediction analysis to mismatched and unlabelled datasets. For this framework, we analyze the performance of four machine learning techniques in the detection part: Random Forest, Gradient Boost, XGBoost, and AdaBoost. With our proposed method, we can achieve a false negative rate as low as 0.0037 while maintaining a high accuracy of 99.63%. Moreover, we devise a novel unsupervised technique for data clustering using the K-Means algorithm for visual analysis. This paper analyses the vulnerability of decision tree-based models using the limited-knowledge attack scenario. We considered the exploratory attack and implemented the Zeroth Order Optimization adversarial attack on the detection models.

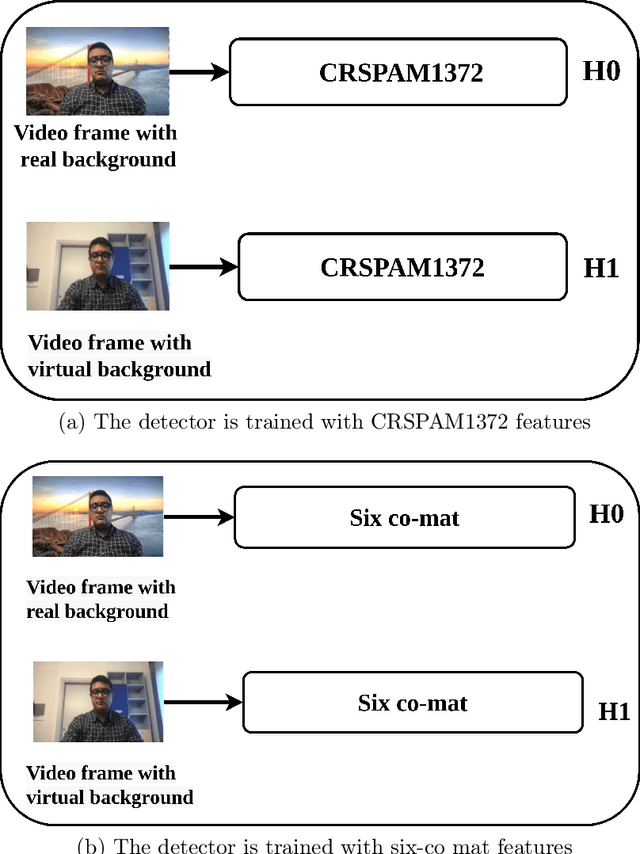

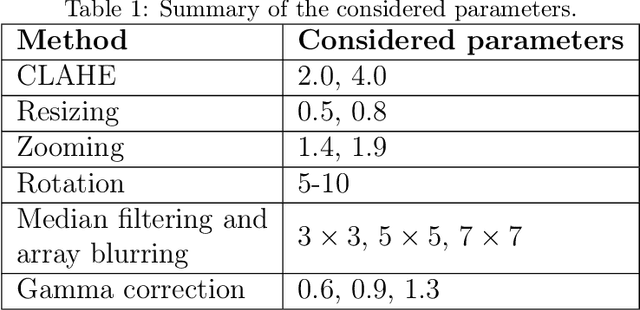

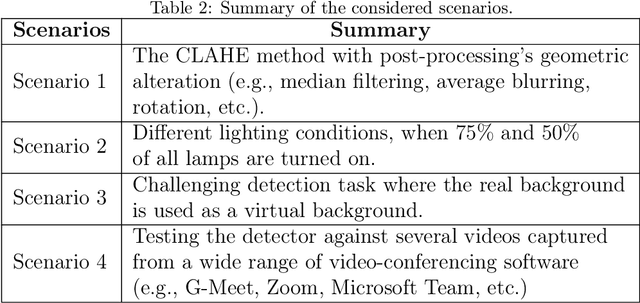

Real or Virtual: A Video Conferencing Background Manipulation-Detection System

Apr 25, 2022

Abstract: Recently, the popularity and wide use of the last-generation video conferencing technologies created an exponential growth in their market size. Such technology allows participants in different geographic regions to have a virtual face-to-face meeting. Additionally, it enables users to employ a virtual background to conceal their own environment due to privacy concerns or to reduce distractions, particularly in professional settings. Nevertheless, in scenarios where the users should not hide their actual locations, they may mislead other participants by claiming their virtual background as a real one. Therefore, it is crucial to develop tools and strategies to detect the authenticity of the considered virtual background. In this paper, we present a detection strategy to distinguish between real and virtual video conferencing user backgrounds. We demonstrate that our detector is robust against two attack scenarios. The first scenario considers the case where the detector is unaware of the attacks. In the second scenario, we make the detector aware of the adversarial attacks, which we refer to as Adversarial Multimedia Forensics (i.e., the forensically-edited frames are included in the training set). Given the lack of a publicly available dataset of virtual and real backgrounds for video conferencing, we created our own dataset and made it publicly available [1]. Then, we demonstrate the robustness of our detector against different adversarial attacks that the adversary considers. Ultimately, our detector's performance is significant against the CRSPAM1372 [2] features and post-processing operations, such as geometric transformations with different quality factors, that the attacker may choose. Moreover, our performance results show that we can distinguish a real from a virtual background with an accuracy of 99.80%.

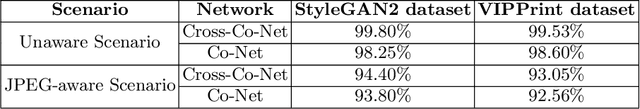

Detecting High-Quality GAN-Generated Face Images using Neural Networks

Mar 03, 2022

Abstract: In the past decades, the excessive use of the last-generation GAN (Generative Adversarial Network) models in computer vision has enabled the creation of artificial face images that are visually indistinguishable from genuine ones. These images are particularly used in adversarial settings to create fake social media accounts and other fake online profiles. Such malicious activities can negatively impact the trustworthiness of users' identities. On the other hand, the recent development of GAN models may create high-quality face images without evidence of spatial artifacts. Therefore, reassembling uniform color channel correlations is a challenging research problem. To face these challenges, we need to develop efficient tools able to differentiate between fake and authentic face images. In this chapter, we propose a new strategy to differentiate GAN-generated images from authentic images by leveraging spectral band discrepancies, focusing on artificial face image synthesis. In particular, we enable the digital preservation of face images using the cross-band co-occurrence matrix and the spatial co-occurrence matrix. Then, we implement these techniques and feed them to a Convolutional Neural Network (CNN) architecture to distinguish real from artificial faces. Additionally, we show that the performance boost is particularly significant and achieves more than 92% in different post-processing environments. Finally, we provide several research observations demonstrating that this strategy improves on a comparable detection method based only on intra-band spatial co-occurrences.
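
The two co-occurrence matrices mentioned in this abstract can be sketched as follows. This is an illustrative toy, not the chapter's pipeline: the function name, the 3x3 bands with four gray levels, and the chosen offsets are assumptions. A spatial co-occurrence matrix counts value pairs inside one band at a fixed pixel offset, while a cross-band matrix counts value pairs taken from two different color bands.

```python
def cooccurrence(band_a, band_b, offset=(0, 0), levels=4):
    """Count pairs (band_a[r][c], band_b[r + dr][c + dc]) into a
    levels x levels matrix. The same band twice with a non-zero offset
    gives a spatial co-occurrence matrix; two different bands give a
    cross-band co-occurrence matrix."""
    dr, dc = offset
    rows, cols = len(band_a), len(band_a[0])
    matrix = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                matrix[band_a[r][c]][band_b[r2][c2]] += 1
    return matrix

# Hypothetical 3x3 color bands quantized to 4 levels (0..3).
red = [[0, 1, 2],
       [1, 2, 3],
       [2, 3, 3]]
green = [[0, 1, 1],
         [1, 2, 2],
         [2, 3, 3]]

# Intra-band: each red pixel paired with its right-hand neighbor.
spatial_red = cooccurrence(red, red, offset=(0, 1))
# Cross-band: red and green values at the same pixel location.
cross_rg = cooccurrence(red, green, offset=(0, 0))

for row in cross_rg:
    print(row)
```

For 8-bit images each matrix would be 256 x 256, and a set of such matrices could be stacked as input channels for a CNN; the exact offsets and band pairs used in the chapter are not stated in the abstract.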

Demystifying the Transferability of Adversarial Attacks in Computer Networks

Oct 09, 2021

Abstract: Deep Convolutional Neural Network (CNN) models are among the most popular networks in deep learning. With their large fields of application in different areas, they are extensively used in both academia and industry. CNN-based models include several exciting implementations such as early breast cancer detection or detecting developmental delays in children (e.g., autism, speech disorders, etc.). However, previous studies demonstrate that these models are subject to various adversarial attacks. Interestingly, some adversarial examples could potentially still be effective against different unknown models. This particular property is known as adversarial transferability, and prior works only briefly analyzed this characteristic in a very limited application domain. In this paper, we aim to demystify the transferability threats in computer networks by studying the possibility of transferring adversarial examples. In particular, we provide the first comprehensive study which assesses the robustness of CNN-based models for computer networks against adversarial transferability. In our experiments, we consider five different attacks: (1) the Iterative Fast Gradient Sign Method (I-FGSM), (2) the Jacobian-based Saliency Map attack (JSMA), (3) the L-BFGS attack, (4) the Projected Gradient Descent attack (PGD), and (5) the DeepFool attack. These attacks are performed against two well-known datasets: the N-BaIoT dataset and the Domain Generating Algorithms (DGA) dataset. Our results show that transferability happens in specific use cases where the adversary can easily compromise the victim's network with very little knowledge of the targeted model.
