Selcuk Uluagac

Resisting Deep Learning Models Against Adversarial Attack Transferability via Feature Randomization

Sep 11, 2022

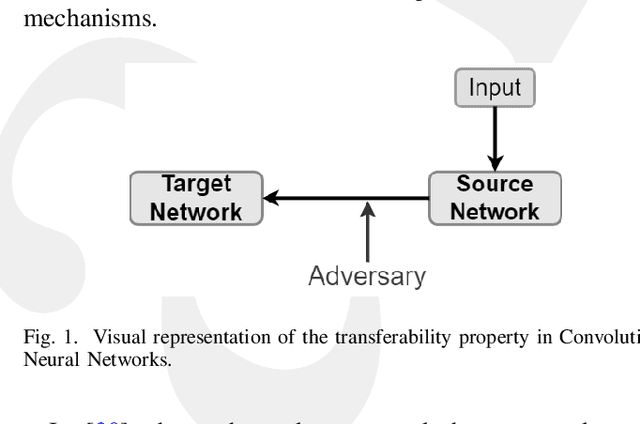

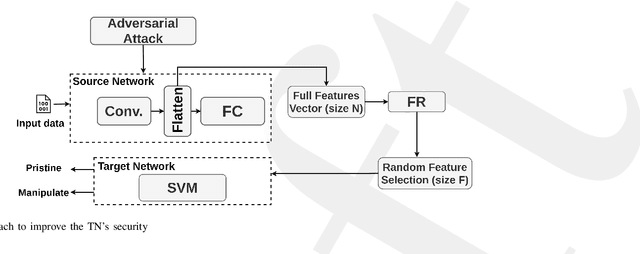

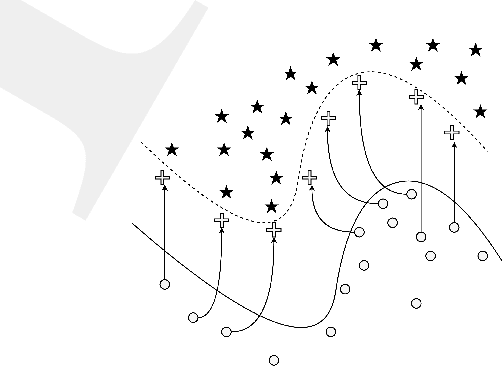

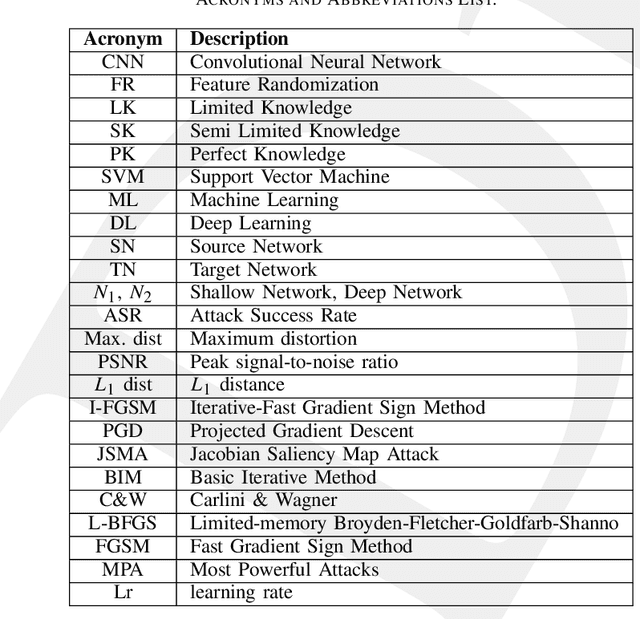

Abstract: In the past decades, the rise of artificial intelligence has given us the capabilities to solve the most challenging problems in our day-to-day lives, such as cancer prediction and autonomous navigation. However, these applications might not be reliable if they are not secured against adversarial attacks. In addition, recent works have demonstrated that some adversarial examples are transferable across different models. Therefore, it is crucial to avoid such transferability via robust models that resist adversarial manipulations. In this paper, we propose a feature randomization-based approach that resists eight adversarial attacks targeting deep learning models in the testing phase. Our novel approach consists of changing the training strategy in the target network classifier and selecting random feature samples. We consider attackers under Limited-Knowledge and Semi-Knowledge conditions who undertake the most prevalent types of adversarial attacks. We evaluate the robustness of our approach using the well-known UNSW-NB15 dataset, which includes realistic and synthetic attacks. Afterward, we demonstrate that our strategy outperforms existing state-of-the-art approaches, such as the Most Powerful Attack, which consists of fine-tuning the network model against specific adversarial attacks. Finally, our experimental results show that our methodology can secure the target network and resist adversarial attack transferability by over 60%.

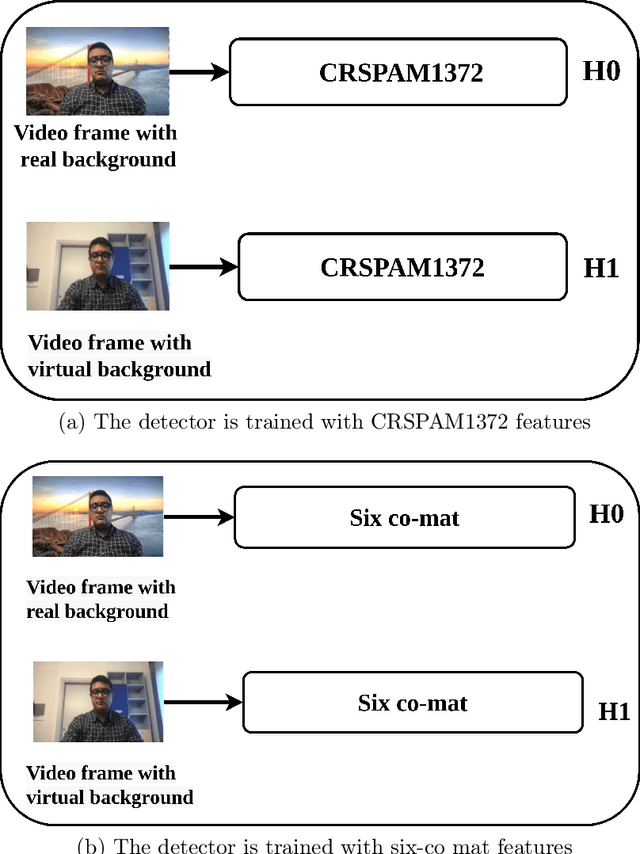

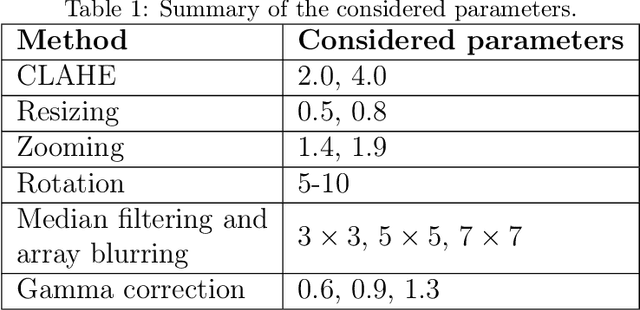

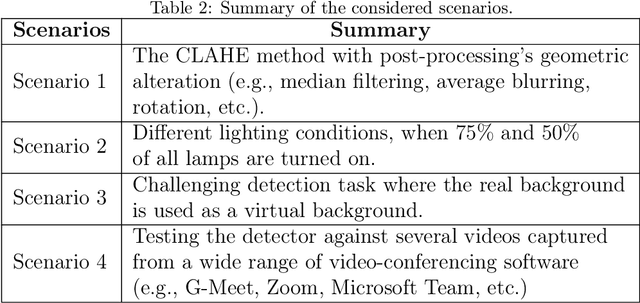

Real or Virtual: A Video Conferencing Background Manipulation-Detection System

Apr 25, 2022

Abstract: Recently, the popularity and wide use of last-generation video conferencing technologies have created exponential growth in their market size. Such technology allows participants in different geographic regions to have a virtual face-to-face meeting. Additionally, it enables users to employ a virtual background to conceal their own environment due to privacy concerns or to reduce distractions, particularly in professional settings. Nevertheless, in scenarios where users should not hide their actual locations, they may mislead other participants by claiming that their virtual background is a real one. Therefore, it is crucial to develop tools and strategies to verify the authenticity of the background in question. In this paper, we present a detection strategy to distinguish between real and virtual video conferencing user backgrounds. We demonstrate that our detector is robust against two attack scenarios. The first scenario considers the case where the detector is unaware of the attacks, and in the second scenario we make the detector aware of the adversarial attacks, which we refer to as Adversarial Multimedia Forensics (i.e., the forensically-edited frames are included in the training set). Given the lack of a publicly available dataset of virtual and real backgrounds for video conferencing, we created our own dataset and made it publicly available [1]. Then, we demonstrate the robustness of our detector against different adversarial attacks that the adversary considers. Ultimately, our detector's performance is significant against the CRSPAM1372 [2] features and against post-processing operations, such as geometric transformations with different quality factors, that the attacker may choose. Moreover, our performance results show that we can distinguish a real from a virtual background with an accuracy of 99.80%.

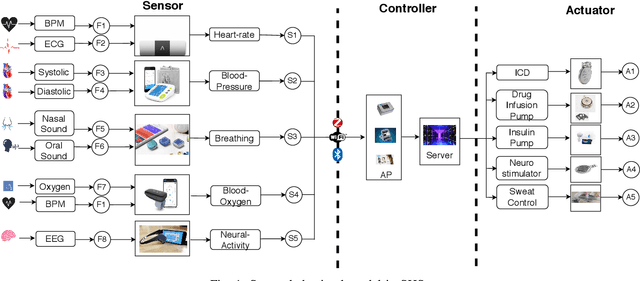

A Novel Framework for Threat Analysis of Machine Learning-based Smart Healthcare Systems

Mar 05, 2021

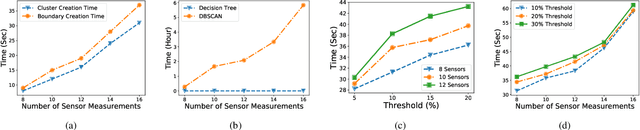

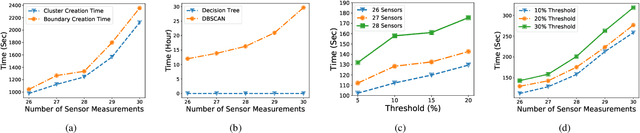

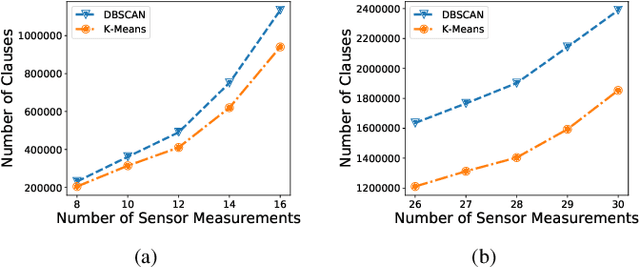

Abstract: Smart healthcare systems (SHSs) are providing fast and efficient disease treatment by leveraging wireless body sensor networks (WBSNs) and implantable medical devices (IMDs)-based internet of medical things (IoMT). In addition, IoMT-based SHSs are enabling automated medication, allowing communication among myriad healthcare sensor devices. However, adversaries can launch various attacks on the communication network and the hardware/firmware to introduce false data or cause data unavailability in the automatic medication system, endangering the patient's life. In this paper, we propose SHChecker, a novel threat analysis framework that integrates machine learning and formal analysis capabilities to identify potential attacks and their corresponding effects on an IoMT-based SHS. Given a specific set of attack attributes, our framework can provide all potential attack vectors for an SHS, each representing a set of sensor measurements to be altered. This allows us to assess the system's resiliency and thus gain insight into enhancing the robustness of the model. We implement SHChecker on a synthetic and a real dataset, which affirms that our framework can reveal potential attack vectors in an IoMT system. This is a novel effort to formally analyze supervised and unsupervised machine learning models for black-box SHS threat analysis.
