Mohammadreza Mohammadi

CrossNAS: A Cross-Layer Neural Architecture Search Framework for PIM Systems

May 28, 2025

Abstract: In this paper, we propose the CrossNAS framework, an automated approach for exploring a vast, multidimensional search space that spans various design abstraction layers (circuits, architecture, and systems) to optimize the deployment of machine learning workloads on analog processing-in-memory (PIM) systems. CrossNAS leverages the single-path one-shot weight-sharing strategy combined with evolutionary search for the first time in the context of PIM system mapping and optimization. CrossNAS sets a new benchmark for PIM neural architecture search (NAS), outperforming previous methods in both accuracy and energy efficiency while maintaining comparable or shorter search times.

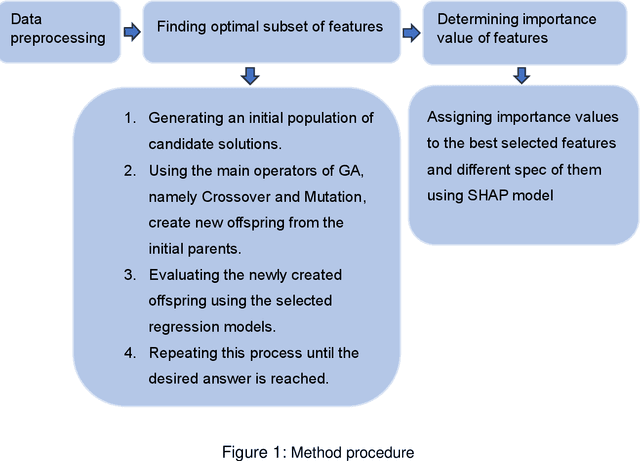

An explainable machine learning-based approach for analyzing customers' online data to identify the importance of product attributes

Feb 03, 2024

Abstract: Online customer data provides valuable information for product design and marketing research, as it can reveal the preferences of customers. However, analyzing these data using artificial intelligence (AI) for data-driven design is a challenging task due to potentially concealed patterns. Moreover, in these research areas, most studies are limited to finding customers' needs. In this study, we propose a game-theoretic machine learning (ML) method that extracts comprehensive design implications for product development. The method first uses a genetic algorithm to select, rank, and combine product features that can maximize customer satisfaction based on online ratings. Then, we use SHAP (SHapley Additive exPlanations), a game theory method that assigns a value to each feature based on its contribution to the prediction, to provide a guideline for assessing the importance of each feature for the total satisfaction. We apply our method to a real-world dataset of laptops from Kaggle and derive design implications based on the results. Our approach tackles a major challenge in the field of multi-criteria decision making and can help product designers and marketers understand customer preferences better with less data and effort. The proposed method outperforms benchmark methods in terms of relevant performance metrics.
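As a rough illustration of the idea behind SHAP, the sketch below computes exact Shapley values for a tiny, made-up satisfaction model by averaging each feature's marginal contribution over all feature orderings. The feature names, the `toy_rating` function, and its numbers are hypothetical and are not taken from the paper or the Kaggle dataset; the SHAP library itself uses faster approximations rather than this brute-force enumeration.

```python
from itertools import permutations


def shapley_values(features, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        coalition = frozenset()
        for f in order:
            with_f = coalition | {f}
            # Marginal contribution of f when added to the current coalition.
            totals[f] += value(with_f) - value(coalition)
            coalition = with_f
    return {f: total / len(orderings) for f, total in totals.items()}


def toy_rating(present):
    """Hypothetical satisfaction score given the set of attributes present."""
    score = 2.0  # baseline rating with no attributes
    if "ram" in present:
        score += 1.0
    if "ssd" in present:
        score += 0.5
    if "ram" in present and "ssd" in present:
        score += 0.5  # interaction: the two attributes reinforce each other
    if "weight" in present:
        score += 0.25
    return score


FEATURES = ["ram", "ssd", "weight"]
phi = shapley_values(FEATURES, toy_rating)
for name in FEATURES:
    print(name, phi[name])
```

In this toy model the interaction bonus is split evenly between `ram` and `ssd`, and the values sum to the gap between the full-feature score and the baseline, which is the property that makes Shapley values usable as an importance ranking.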

Facial Expression Recognition at the Edge: CPU vs GPU vs VPU vs TPU

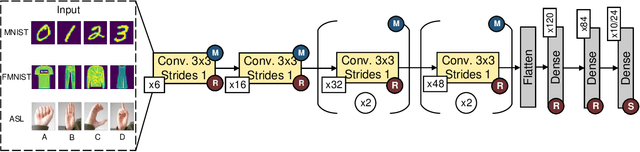

May 17, 2023

Abstract: Facial Expression Recognition (FER) plays an important role in human-computer interactions and is used in a wide range of applications. Convolutional Neural Networks (CNNs) have shown promise in their ability to classify human facial expressions; however, large CNNs are not well-suited to be implemented on resource- and energy-constrained IoT devices. In this work, we present a hierarchical framework for developing and optimizing hardware-aware CNNs tuned for deployment at the edge. We perform a comprehensive analysis across various edge AI accelerators including NVIDIA Jetson Nano, Intel Neural Compute Stick, and Coral TPU. Using the proposed strategy, we achieved a peak accuracy of 99.49% when testing on the CK+ facial expression recognition dataset. Additionally, we achieved a minimum inference latency of 0.39 milliseconds and a minimum power consumption of 0.52 Watts.

Energy-Efficient Deployment of Machine Learning Workloads on Neuromorphic Hardware

Oct 10, 2022

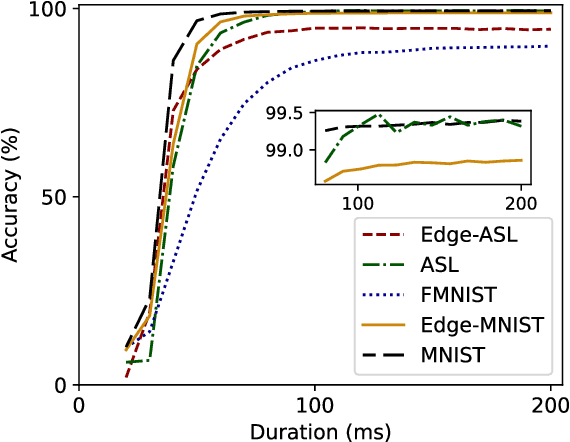

Abstract: As the technology industry moves towards implementing tasks such as natural language processing, path planning, image classification, and more on smaller edge computing devices, the demand for more efficient implementations of algorithms and hardware accelerators has become a significant area of research. In recent years, several edge deep learning hardware accelerators have been released that specifically focus on reducing the power and area consumed by deep neural networks (DNNs). On the other hand, spiking neural networks (SNNs), which operate on discrete time-series data, have been shown to achieve substantial power reductions over even the aforementioned edge DNN accelerators when deployed on specialized neuromorphic event-based/asynchronous hardware. While neuromorphic hardware has demonstrated great potential for accelerating deep learning tasks at the edge, the current space of algorithms and hardware is limited and still in rather early development. Thus, many hybrid approaches have been proposed which aim to convert pre-trained DNNs into SNNs. In this work, we provide a general guide to converting pre-trained DNNs into SNNs while also presenting techniques to improve the deployment of converted SNNs on neuromorphic hardware with respect to latency, power, and energy. Our experimental results show that when compared against the Intel Neural Compute Stick 2, Intel's neuromorphic processor, Loihi, consumes up to 27x less power and 5x less energy in the tested image classification tasks by using our SNN improvement techniques.
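One common intuition behind DNN-to-SNN conversion is rate coding: the firing rate of an integrate-and-fire (IF) neuron driven by a constant input approximates a ReLU activation. The sketch below is a generic textbook illustration of that idea in plain Python, not the conversion procedure from the paper and not a Loihi API; the function names, the threshold of 1.0, and the step count are assumptions chosen for the example.

```python
def if_neuron_rate(current, steps=1000, threshold=1.0):
    """Firing rate of an integrate-and-fire neuron under a constant input."""
    membrane = 0.0
    spikes = 0
    for _ in range(steps):
        membrane += current  # integrate the input at every time step
        if membrane >= threshold:
            membrane -= threshold  # reset by subtraction keeps residual charge
            spikes += 1
    return spikes / steps


def relu(x):
    """Reference activation that the firing rate should approximate."""
    return max(0.0, x)


INPUTS = [-0.5, 0.0, 0.25, 0.3, 0.75]
for a in INPUTS:
    print(a, relu(a), if_neuron_rate(a))
```

Negative inputs never reach the threshold, so the rate is zero, and inputs between 0 and the threshold produce a rate close to the input itself. Inputs above the threshold saturate at one spike per step, which is one reason conversion schemes typically normalize weights or thresholds; longer simulation windows tighten the approximation at the cost of latency.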

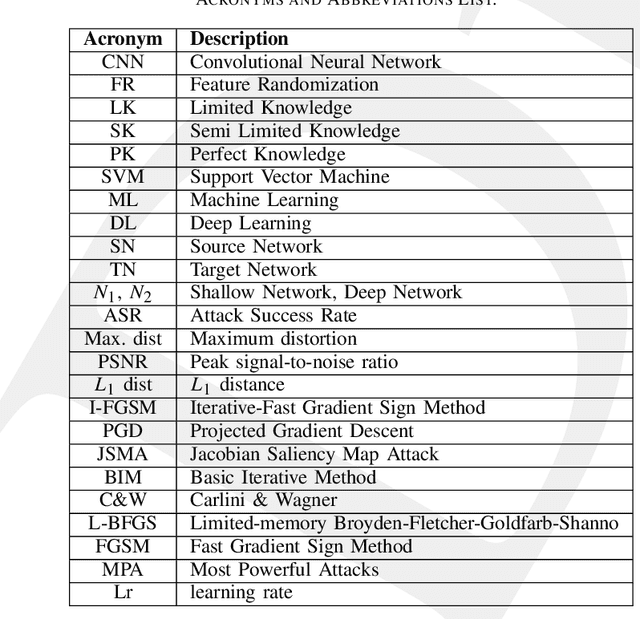

SPRITZ-1.5C: Employing Deep Ensemble Learning for Improving the Security of Computer Networks against Adversarial Attacks

Sep 25, 2022

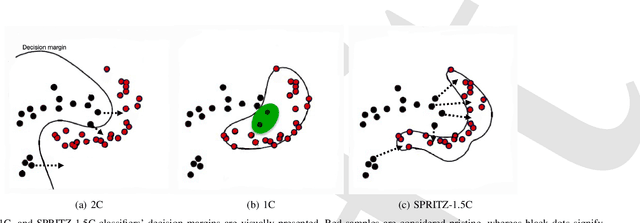

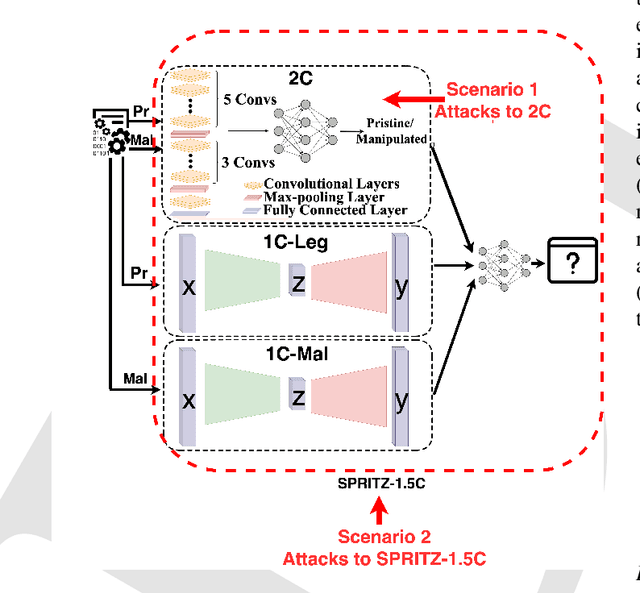

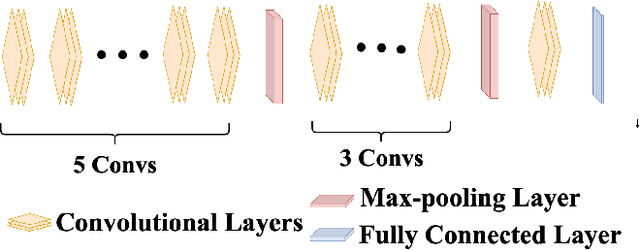

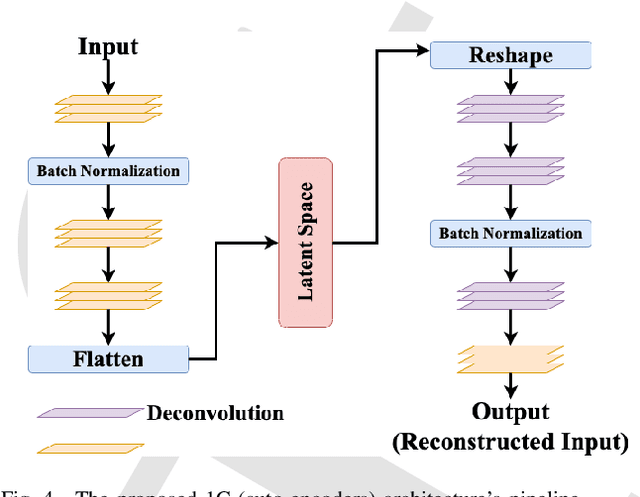

Abstract: In the past few years, Convolutional Neural Networks (CNNs) have demonstrated promising performance in various real-world cybersecurity applications, such as network and multimedia security. However, the underlying fragility of CNN structures poses major security problems, making them inappropriate for use in security-oriented applications such as computer networks. Protecting these architectures from adversarial attacks necessitates using security-wise architectures that are challenging to attack. In this study, we present a novel architecture based on an ensemble classifier that combines the enhanced security of 1-Class classification (known as 1C) with the high performance of conventional 2-Class classification (known as 2C) in the absence of attacks. Our architecture is referred to as the 1.5-Class (SPRITZ-1.5C) classifier and is constructed using a final dense classifier, one 2C classifier (i.e., CNNs), and two parallel 1C classifiers (i.e., auto-encoders). In our experiments, we evaluated the robustness of our proposed architecture by considering eight possible adversarial attacks in various scenarios. We performed these attacks on the 2C and SPRITZ-1.5C architectures separately. The experimental results of our study showed that the Attack Success Rate (ASR) of the I-FGSM attack against a 2C classifier trained with the N-BaIoT dataset is 0.9900. In contrast, the ASR is 0.0000 for the SPRITZ-1.5C classifier.
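To make the I-FGSM attack and the Attack Success Rate metric concrete, here is a minimal sketch against a tiny logistic-regression classifier in plain Python. The weights, samples, and attack parameters (`eps`, `alpha`, `steps`) are hypothetical, the model is not the CNN or SPRITZ-1.5C architecture from the paper, and ASR is assumed here to mean the fraction of initially correctly classified samples whose label flips after the attack.

```python
import math


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def predict_label(w, b, x):
    return 1 if predict_proba(w, b, x) >= 0.5 else 0


def sign(v):
    return (v > 0) - (v < 0)


def i_fgsm(w, b, x, y, eps=0.3, alpha=0.05, steps=10):
    """Iterative FGSM: repeated signed-gradient steps, clipped to an eps-ball."""
    x_adv = list(x)
    for _ in range(steps):
        p = predict_proba(w, b, x_adv)
        # Gradient of binary cross-entropy w.r.t. the input is (p - y) * w.
        grad = [(p - y) * wi for wi in w]
        x_adv = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        # Project back into the L-infinity ball of radius eps around x.
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv


def attack_success_rate(w, b, samples, **attack_kwargs):
    """Share of correctly classified samples that the attack misleads."""
    attacked = flipped = 0
    for x, y in samples:
        if predict_label(w, b, x) != y:
            continue  # skip samples the model already gets wrong
        attacked += 1
        if predict_label(w, b, i_fgsm(w, b, x, y, **attack_kwargs)) != y:
            flipped += 1
    return flipped / attacked if attacked else 0.0


# Hypothetical model and data, chosen only to exercise the code.
W, B = [2.0, -1.0], 0.0
SAMPLES = [([0.2, 0.1], 1), ([1.5, -1.0], 1), ([-0.1, 0.2], 0), ([-2.0, 1.0], 0)]
asr = attack_success_rate(W, B, SAMPLES)
print(asr)
```

With these toy values, the two samples near the decision boundary are flipped and the two far from it are not, so the script reports an ASR of 0.5. An ASR of 0.0000, as reported for SPRITZ-1.5C, would mean no attacked sample changed its predicted label.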

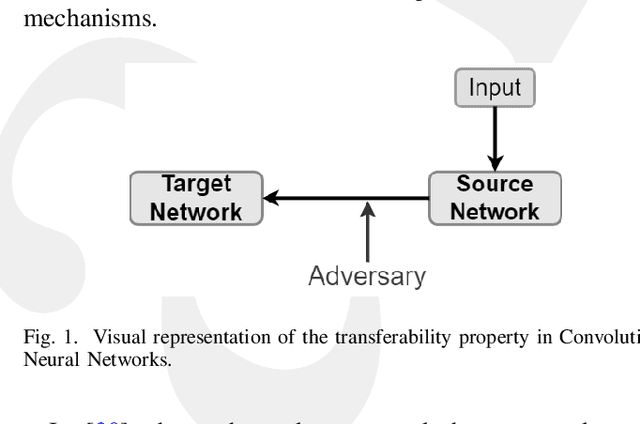

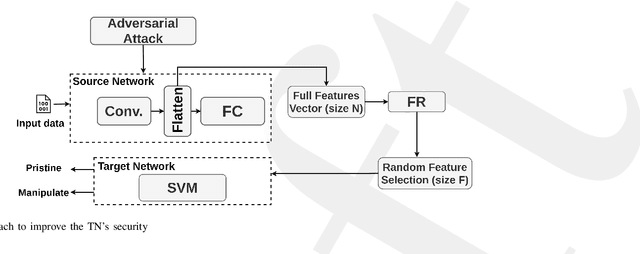

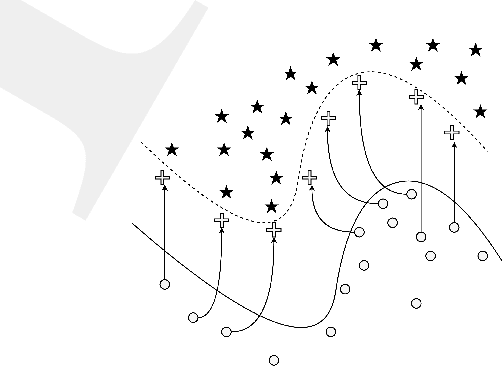

Resisting Deep Learning Models Against Adversarial Attack Transferability via Feature Randomization

Sep 11, 2022

Abstract: In the past decades, the rise of artificial intelligence has given us the capabilities to solve the most challenging problems in our day-to-day lives, such as cancer prediction and autonomous navigation. However, these applications might not be reliable if not secured against adversarial attacks. In addition, recent works demonstrated that some adversarial examples are transferable across different models. Therefore, it is crucial to avoid such transferability via robust models that resist adversarial manipulations. In this paper, we propose a feature randomization-based approach that resists eight adversarial attacks targeting deep learning models in the testing phase. Our novel approach consists of changing the training strategy in the target network classifier and selecting random feature samples. We consider an attacker under Limited-Knowledge and Semi-Knowledge conditions who undertakes the most prevalent types of adversarial attacks. We evaluate the robustness of our approach using the well-known UNSW-NB15 dataset, which includes realistic and synthetic attacks. Afterward, we demonstrate that our strategy outperforms existing state-of-the-art approaches, such as the Most Powerful Attack, which consists of fine-tuning the network model against specific adversarial attacks. Finally, our experimental results show that our methodology can secure the target network and resist adversarial attack transferability by over 60%.

An Adversarial Attack Analysis on Malicious Advertisement URL Detection Framework

Apr 27, 2022

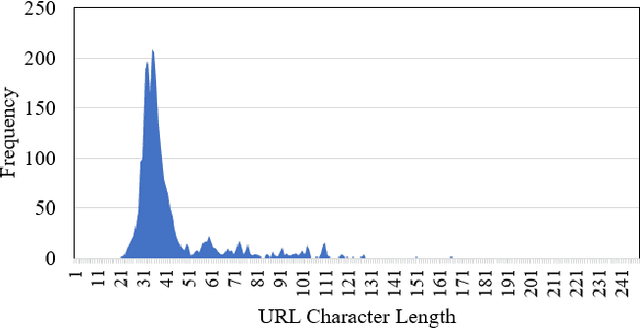

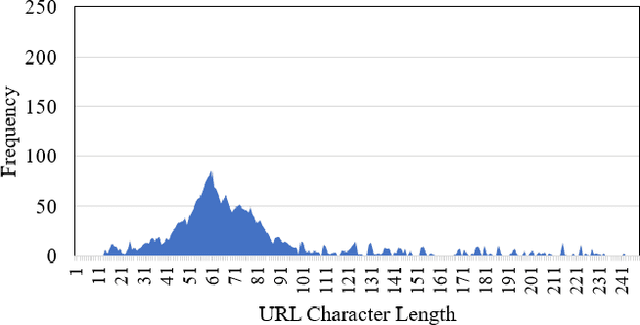

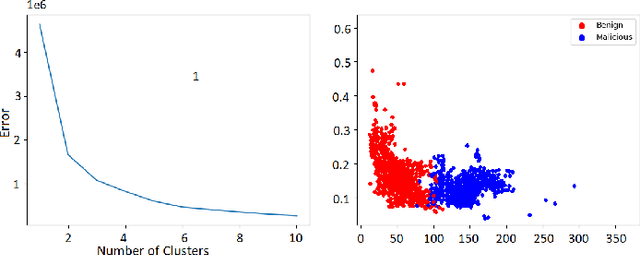

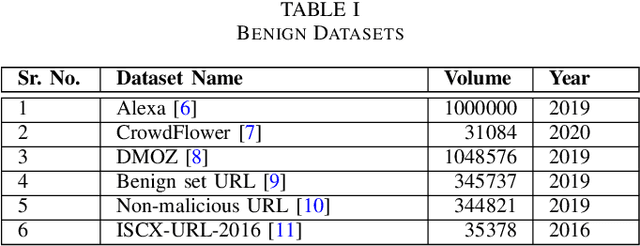

Abstract: Malicious advertisement URLs pose a security risk since they are the source of cyber-attacks, and the need to address this issue is growing in both industry and academia. Generally, the attacker delivers an attack vector to the user by means of an email, an advertisement link, or any other means of communication and directs them to a malicious website to steal sensitive information and to defraud them. Existing malicious URL detection techniques are limited in their ability to handle unseen features and to generalize to test data. In this study, we extract a novel set of lexical and web-scraped features and employ machine learning techniques to set up a system for fraudulent advertisement URL detection. The combined set of six different kinds of features overcomes the obfuscation in fraudulent URL classification. Based on different statistical properties, we use twelve differently formatted datasets for detection, prediction, and classification tasks. We extend our prediction analysis to mismatched and unlabelled datasets. For this framework, we analyze the performance of four machine learning techniques: Random Forest, Gradient Boost, XGBoost, and AdaBoost in the detection part. With our proposed method, we can achieve a false negative rate as low as 0.0037 while maintaining high accuracy of 99.63%. Moreover, we devise a novel unsupervised technique for data clustering using the K-Means algorithm for visual analysis. This paper analyses the vulnerability of decision tree-based models using the limited knowledge attack scenario. We considered the exploratory attack and implemented the Zeroth Order Optimization adversarial attack on the detection models.
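For readers unfamiliar with the two metrics quoted above, the sketch below shows how false negative rate and accuracy are derived from confusion-matrix counts. The counts are hypothetical and chosen only for easy arithmetic; they are not the paper's data and do not reproduce its reported figures.

```python
def false_negative_rate(tp, fn):
    """Share of truly malicious URLs that the detector missed."""
    return fn / (tp + fn)


def accuracy(tp, tn, fp, fn):
    """Share of all URLs (malicious and benign) classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical confusion-matrix counts for 1000 URLs (100 malicious, 900 benign).
tp, fn = 90, 10   # malicious URLs caught vs. missed
tn, fp = 880, 20  # benign URLs passed vs. wrongly flagged

fnr = false_negative_rate(tp, fn)
acc = accuracy(tp, tn, fp, fn)
print(fnr, acc)
```

The two metrics answer different questions: accuracy is dominated by the majority class when benign URLs far outnumber malicious ones, while the false negative rate isolates how often a malicious URL slips through.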

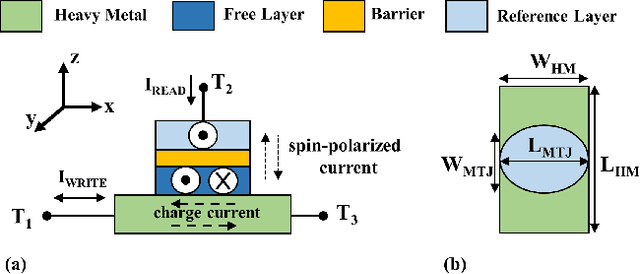

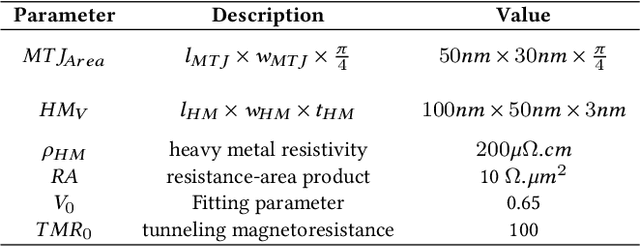

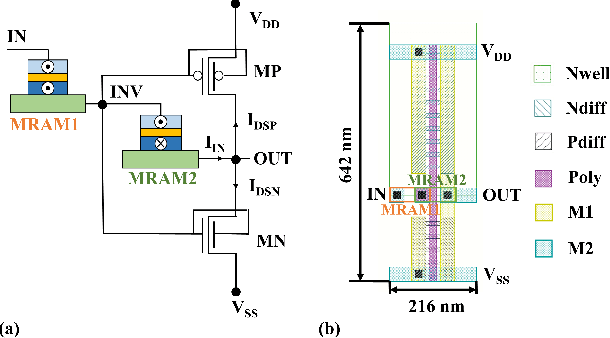

MRAM-based Analog Sigmoid Function for In-memory Computing

Apr 21, 2022

Abstract: We propose an analog implementation of the transcendental activation function leveraging two spin-orbit torque magnetoresistive random-access memory (SOT-MRAM) devices and a CMOS inverter. The proposed analog neuron circuit consumes 1.8-27x less power and occupies 2.5-4931x smaller area compared to the state-of-the-art analog and digital implementations. Moreover, the developed neuron can be readily integrated with memristive crossbars without requiring any intermediate signal conversion units. The architecture-level analyses show that a fully-analog in-memory computing (IMC) circuit that uses our SOT-MRAM neuron along with an SOT-MRAM based crossbar can achieve more than 1.1x, 12x, and 13.3x reduction in power, latency, and energy, respectively, compared to a mixed-signal implementation with analog memristive crossbars and digital neurons. Finally, through cross-layer analyses, we provide a guide on how varying the device-level parameters in our neuron can affect the accuracy of a multilayer perceptron (MLP) for MNIST classification.
