David Johnson

Beyond saliency: enhancing explanation of speech emotion recognition with expert-referenced acoustic cues

Nov 12, 2025

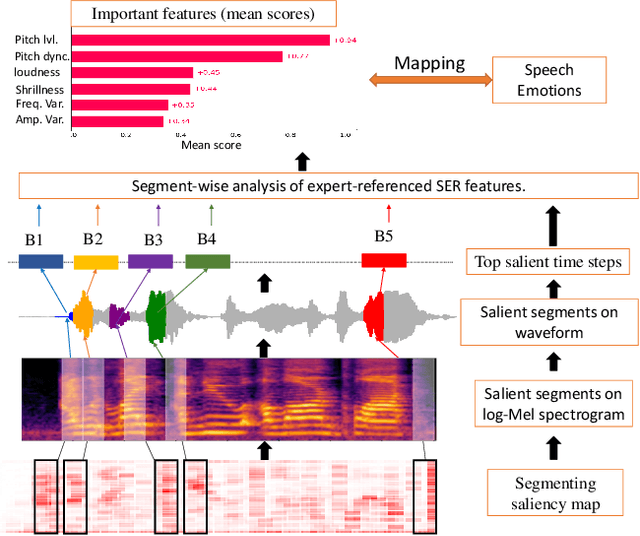

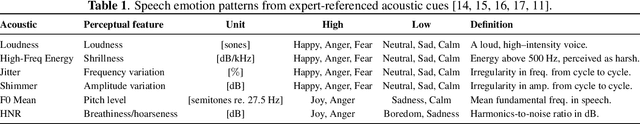

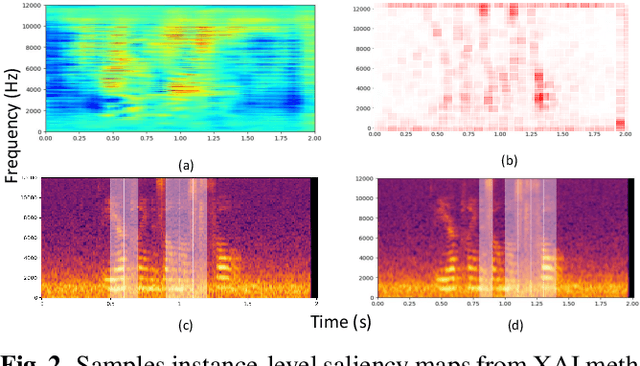

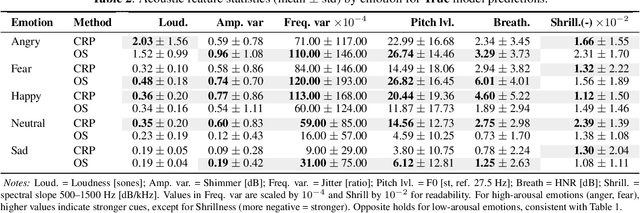

Abstract: Explainable AI (XAI) for Speech Emotion Recognition (SER) is critical for building transparent, trustworthy models. Current saliency-based methods, adapted from vision, highlight spectrogram regions but fail to show whether these regions correspond to meaningful acoustic markers of emotion, limiting faithfulness and interpretability. We propose a framework that overcomes these limitations by quantifying the magnitudes of cues within salient regions. This clarifies "what" is highlighted and connects it to "why" it matters, linking saliency to expert-referenced acoustic cues of speech emotions. Experiments on benchmark SER datasets show that our approach improves explanation quality by explicitly linking salient regions to theory-driven, expert-referenced acoustic cues of speech emotion. Compared to standard saliency methods, it provides more understandable and plausible explanations of SER models, offering a foundational step towards trustworthy speech-based affective computing.

OpenGERT: Open Source Automated Geometry Extraction with Geometric and Electromagnetic Sensitivity Analyses for Ray-Tracing Propagation Models

Jan 12, 2025

Abstract: Accurate RF propagation modeling in urban environments is critical for developing digital spectrum twins and optimizing wireless communication systems. We introduce OpenGERT, an open-source automated Geometry Extraction tool for Ray Tracing, which collects and processes terrain and building data from OpenStreetMap, Microsoft Global ML Building Footprints, and USGS elevation data. Using the Blender Python API, it creates detailed urban models for high-fidelity simulations with NVIDIA Sionna RT. We perform sensitivity analyses to examine how variations in building height, position, and electromagnetic material properties affect ray-tracing accuracy. Specifically, we present pairwise dispersion plots of channel statistics (path gain, mean excess delay, delay spread, link outage, and Rician K-factor) and investigate how their sensitivities change with distance from transmitters. We also visualize the variance of these statistics for selected transmitter locations to gain deeper insights. Our study covers Munich and Etoile scenes, each with 10 transmitter locations. For each location, we apply five types of perturbations: material, position, height, height-position, and all combined, with 50 perturbations each. Results show that small changes in permittivity and conductivity minimally affect channel statistics, whereas variations in building height and position significantly alter all statistics, even with noise standard deviations of 1 meter in height and 0.4 meters in position. These findings highlight the importance of precise environmental modeling for accurate propagation predictions, essential for digital spectrum twins and advanced communication networks. The code for geometry extraction and sensitivity analyses is available at github.com/serhatadik/OpenGERT/.
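The height and position perturbations described in this abstract can be illustrated with a minimal sketch. It is not OpenGERT's code: the dictionary-based building representation, the `perturb_buildings` helper, the Gaussian noise model, and the clamping of heights at zero are all assumptions made for illustration. Only the standard deviations (1 m in height, 0.4 m in position) and the count of 50 perturbations come from the abstract.

```python
import random

def perturb_buildings(buildings, height_sigma=1.0, position_sigma=0.4, seed=None):
    """Return a perturbed copy of `buildings` (hypothetical dict format).

    Zero-mean Gaussian noise is added to each building's x/y position and
    height. The input list is left unmodified.
    """
    rng = random.Random(seed)
    perturbed = []
    for b in buildings:
        perturbed.append({
            "x": b["x"] + rng.gauss(0.0, position_sigma),
            "y": b["y"] + rng.gauss(0.0, position_sigma),
            # Assumption: clamp so that noise never yields a negative height.
            "height": max(0.0, b["height"] + rng.gauss(0.0, height_sigma)),
        })
    return perturbed

# Toy scene with two buildings; coordinates and heights are in meters.
scene = [
    {"x": 0.0, "y": 0.0, "height": 20.0},
    {"x": 35.0, "y": 12.0, "height": 8.5},
]

# 50 perturbed variants of the scene, one per seed for reproducibility.
variants = [perturb_buildings(scene, seed=i) for i in range(50)]
```

Each variant would then be passed to the ray tracer, and the spread of the resulting channel statistics across variants gives the sensitivity estimate.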

Data Discovery for the SDGs: A Systematic Rule-based Approach

Jul 16, 2023

Abstract: In 2015, the United Nations put forward 17 Sustainable Development Goals (SDGs) to be achieved by 2030. Data has been promoted both as a focus for innovation in sustainable development and as a means of measuring progress towards achieving the SDGs. In this study, we propose a systematic approach towards discovering data types and sources that can be used for SDG research. The proposed method integrates a systematic mapping approach, using manual qualitative coding over a corpus of SDG-related research literature, followed by an automated process that applies rules to perform data entity extraction computationally. This approach is exemplified by an analysis of literature relating to SDG 7, the results of which are also presented in this paper. The paper concludes with a discussion of the approach and suggests future work to extend the method with more advanced NLP and machine learning techniques.
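As a rough illustration of what rule-based data entity extraction can look like, the sketch below applies a few regular-expression rules to a sentence. The rule set, the labels, and the `extract_data_entities` function are hypothetical and are not taken from the paper, whose rules are derived from manual qualitative coding.

```python
import re

# Hypothetical rules: each maps a data-type label to a pattern that signals it.
RULES = {
    "satellite imagery": re.compile(
        r"\b(satellite|remote[- ]sensing)\s+(imagery|images|data)\b", re.I
    ),
    "household survey": re.compile(r"\bhousehold\s+surveys?\b", re.I),
    "smart meter data": re.compile(r"\bsmart[- ]meter\s+(data|readings)\b", re.I),
}

def extract_data_entities(text):
    """Return (label, matched text) pairs, grouped in rule order."""
    found = []
    for label, pattern in RULES.items():
        for match in pattern.finditer(text):
            found.append((label, match.group(0)))
    return found

sentence = (
    "We combine household surveys with remote-sensing data "
    "to estimate electricity access."
)
entities = extract_data_entities(sentence)
```

Here `entities` holds one match for the satellite-imagery rule and one for the household-survey rule. A real pipeline would run such rules over a whole corpus and aggregate the matches per SDG.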

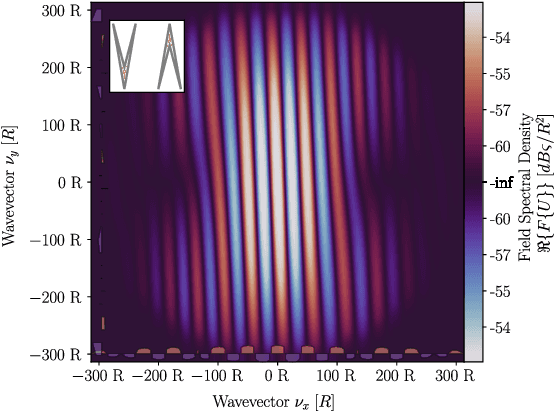

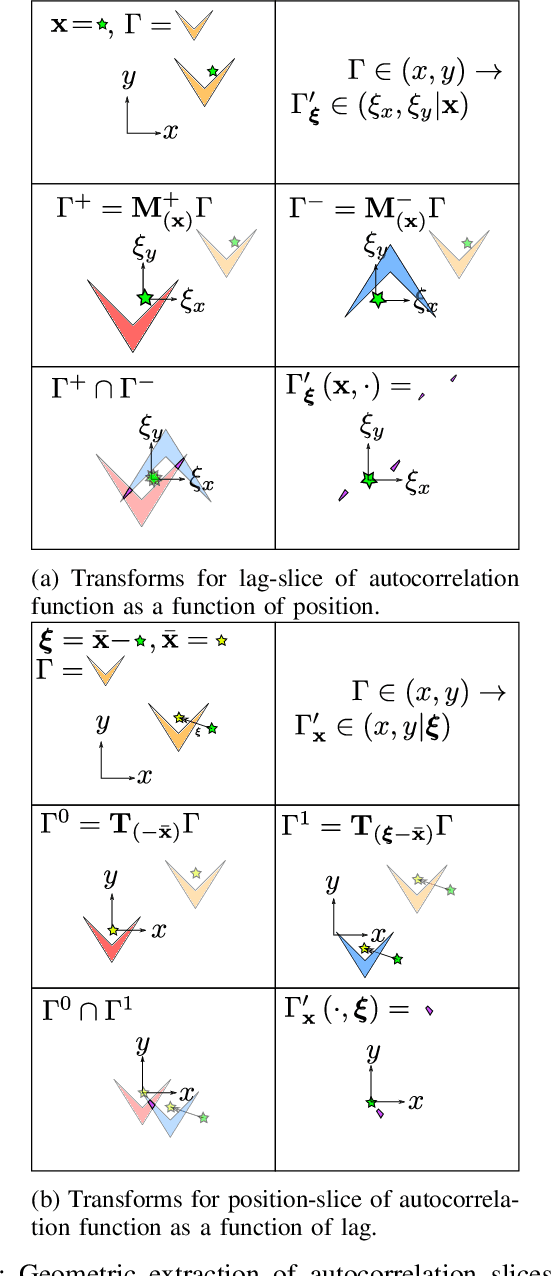

Autocorrelation, Wigner and Ambiguity Transforms on Polygons for Coherent Radiation Rendering

Feb 06, 2022

Abstract: Simulating the radar illumination of large scenes generally relies on a geometric model of light transport which largely ignores prominent wave effects. This can be remedied through coherence ray-tracing, but this requires the Wigner transform of the aperture. This diffraction function has been historically difficult to generate, and is relevant in the fields of optics, holography, synchrotron radiation, quantum systems and radar. In this paper we provide the Wigner transform of arbitrary polygons through geometric transforms and the Stokes Fourier transform, and demonstrate its use in Monte-Carlo rendering.
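For orientation, the three transforms named in the title are commonly defined as follows for a one-dimensional aperture function $f$. These are the standard textbook forms; sign and normalization conventions vary, and the paper's own conventions (for two-dimensional polygonal apertures) may differ.

$$
\begin{aligned}
R_f(x, s) &= f\!\left(x + \tfrac{s}{2}\right) f^{*}\!\left(x - \tfrac{s}{2}\right) && \text{(local autocorrelation)} \\
W_f(x, k) &= \int_{-\infty}^{\infty} R_f(x, s)\, e^{-i k s}\, \mathrm{d}s && \text{(Wigner transform)} \\
A_f(s, \kappa) &= \int_{-\infty}^{\infty} R_f(x, s)\, e^{-i \kappa x}\, \mathrm{d}x && \text{(ambiguity function)}
\end{aligned}
$$

The Wigner transform and the ambiguity function are both Fourier transforms of the same product $R_f$, taken over the shift $s$ and the position $x$ respectively.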

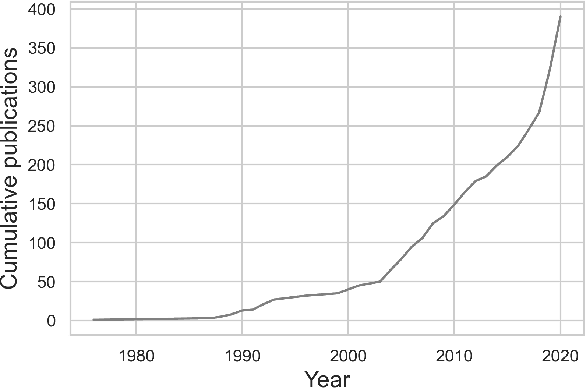

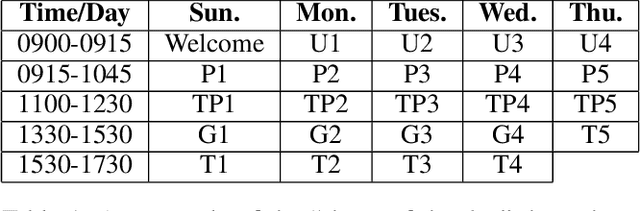

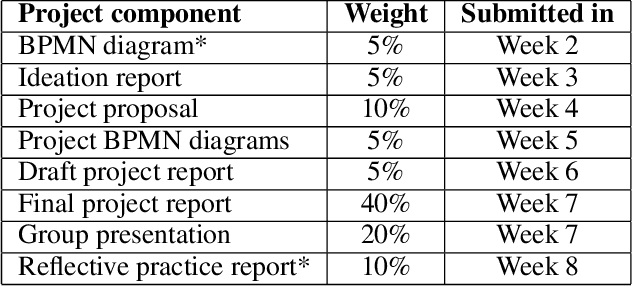

An Experience Report of Executive-Level Artificial Intelligence Education in the United Arab Emirates

Feb 02, 2022

Abstract: Teaching artificial intelligence (AI) is challenging. It is a fast-moving field, so it is difficult to keep people up to date with the state of the art. Educational offerings for students are ever increasing, beyond university degree programs where AI education traditionally lay. In this paper, we present an experience report of teaching an AI course to business executives in the United Arab Emirates (UAE). Rather than focusing only on theoretical and technical aspects, we developed a course that teaches AI with a view to enabling students to understand how to incorporate it into existing business processes. We present an overview of our course, curriculum and teaching methods, and we discuss our reflections on teaching adult learners and students in the UAE.

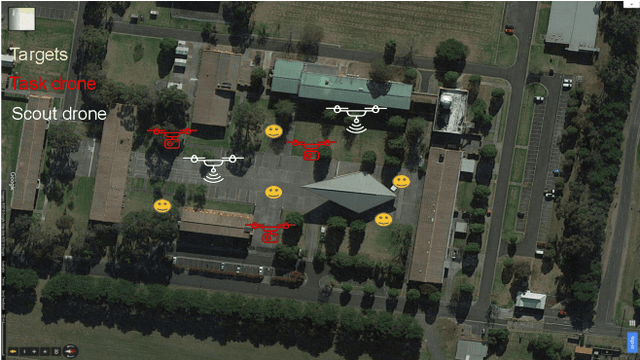

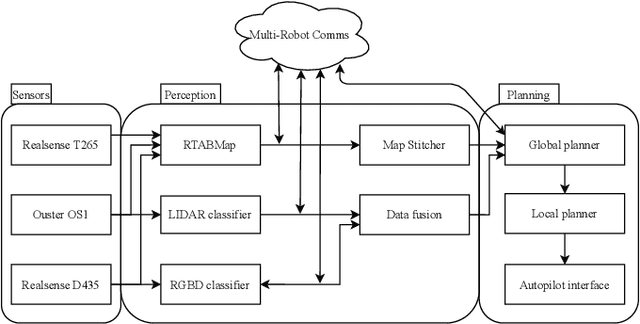

Decentralised Intelligence, Surveillance, and Reconnaissance in Unknown Environments with Heterogeneous Multi-Robot Systems

Jun 17, 2021

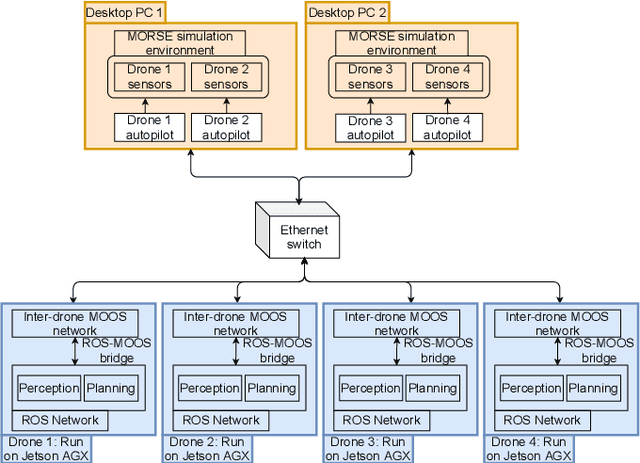

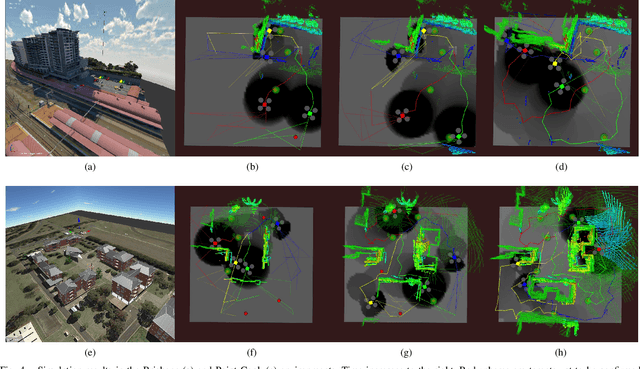

Abstract: We present the design and implementation of a decentralised, heterogeneous multi-robot system for performing intelligence, surveillance and reconnaissance (ISR) in an unknown environment. The team consists of functionally specialised robots that gather information and others that perform a mission-specific task, and is coordinated to achieve simultaneous exploration and exploitation in the unknown environment. We present a practical implementation of such a system, including decentralised inter-robot localisation, mapping, data fusion and coordination. The system is demonstrated in an efficient distributed simulation. We also describe a UAS platform for hardware experiments and report on ongoing progress.

An Upper Confidence Bound for Simultaneous Exploration and Exploitation in Heterogeneous Multi-Robot Systems

May 13, 2021

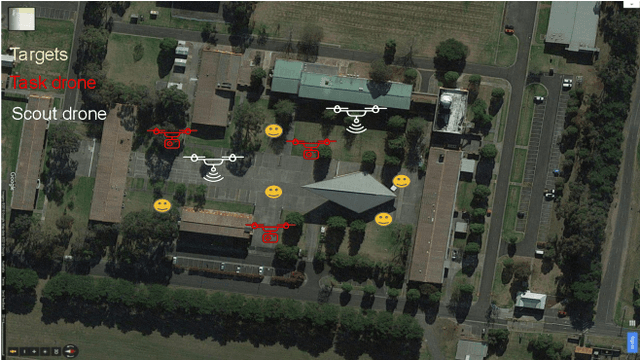

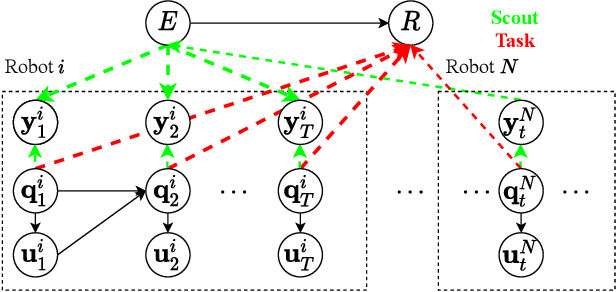

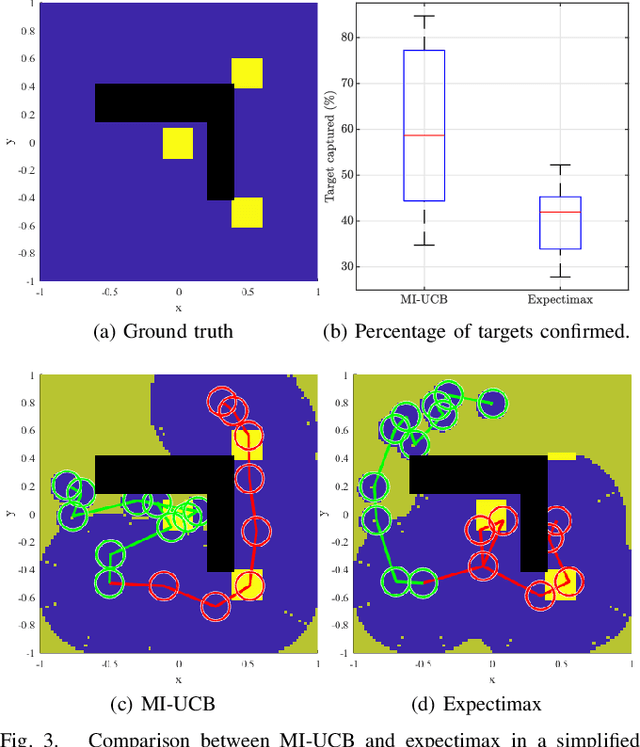

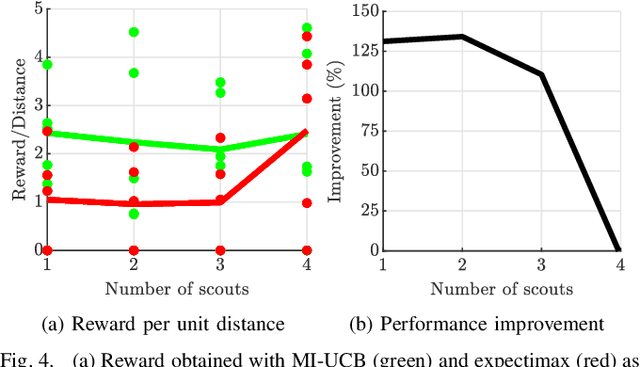

Abstract: Heterogeneous multi-robot systems are advantageous for operations in unknown environments because functionally specialised robots can gather environmental information, while others perform tasks. We define this decomposition as the scout-task robot architecture and show how it avoids the need to explicitly balance exploration and exploitation by permitting the system to do both simultaneously. The challenge is to guide exploration in a way that improves overall performance for time-limited tasks. We derive a novel upper confidence bound for simultaneous exploration and exploitation based on mutual information and present a general solution for scout-task coordination using decentralised Monte Carlo tree search. We evaluate the performance of our algorithms in a multi-drone surveillance scenario in which scout robots are equipped with low-resolution, long-range sensors and task robots capture detailed information using short-range sensors. The results address a new class of coordination problem for heterogeneous teams that has many practical applications.
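As background only, the sketch below shows the classic UCB1 rule that is widely used for action selection in Monte Carlo tree search. It is not the mutual-information bound derived in the paper. The function names, the `(mean_reward, visits)` statistics format, and the exploration constant are illustrative assumptions.

```python
import math

def ucb1_score(mean_reward, visits, total_visits, c=math.sqrt(2)):
    """Classic UCB1: empirical mean plus an exploration bonus."""
    if visits == 0:
        # Untried actions are always selected first.
        return math.inf
    return mean_reward + c * math.sqrt(math.log(total_visits) / visits)

def select_action(stats):
    """Pick the action with the highest UCB1 score.

    `stats` maps an action name to a (mean_reward, visits) tuple.
    """
    total = sum(visits for _, visits in stats.values())
    return max(stats, key=lambda a: ucb1_score(stats[a][0], stats[a][1], total))

# "a" has the best mean, but "b" has barely been tried, so its bonus dominates.
stats = {"a": (0.9, 100), "b": (0.5, 1)}
choice = select_action(stats)
```

The bonus term shrinks as an action is visited more often, which is the explicit exploration-exploitation balancing that the scout-task architecture aims to sidestep.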

"Why did you do that?": Explaining black box models with Inductive Synthesis

Apr 17, 2019

Abstract: By their nature, the composition of black box models is opaque. This makes it challenging to generate explanations for their responses to stimuli. Explaining black box models has become increasingly important given the prevalence of AI and ML systems and the need to build legal and regulatory frameworks around them. Such explanations can also increase trust in these uncertain systems. In our paper we present RICE, a method for generating explanations of the behaviour of black box models by (1) probing a model to extract model output examples using sensitivity analysis; (2) applying CNPInduce, a method for inductive logic program synthesis, to generate logic programs based on critical input-output pairs; and (3) interpreting the target program as a human-readable explanation. We demonstrate the application of our method by generating explanations of an artificial neural network trained to follow simple traffic rules in a hypothetical self-driving car simulation. We conclude with a discussion on the scalability and usability of our approach and its potential applications to explanation-critical scenarios.

Active Clustering with Model-Based Uncertainty Reduction

Feb 14, 2014

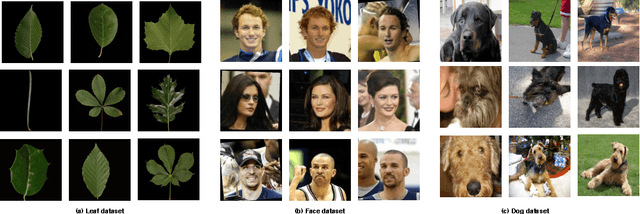

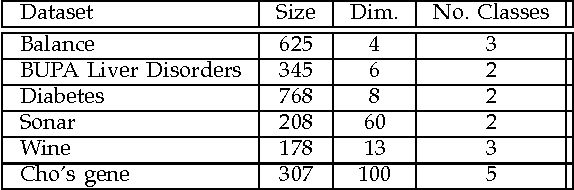

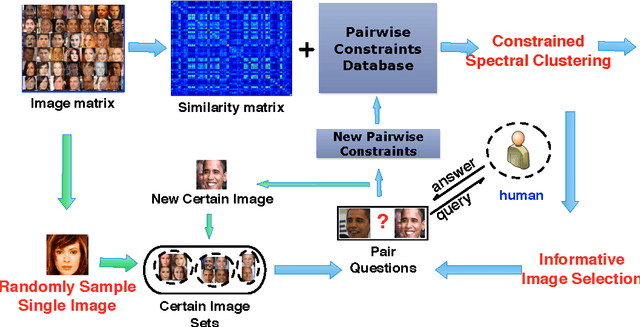

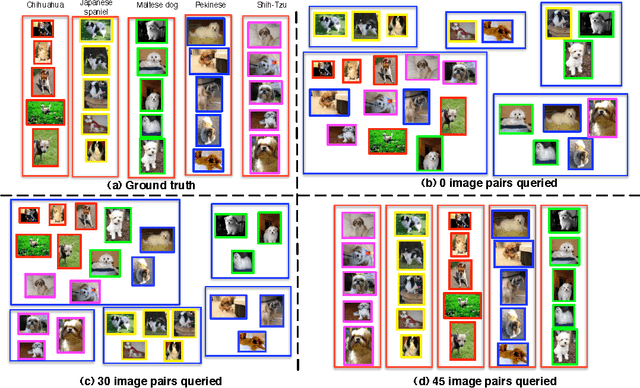

Abstract: Semi-supervised clustering seeks to augment traditional clustering methods by incorporating side information provided via human expertise in order to increase the semantic meaningfulness of the resulting clusters. However, most current methods are *passive* in the sense that the side information is provided beforehand and selected randomly. This may require a large number of constraints, some of which could be redundant, unnecessary, or even detrimental to the clustering results. Thus, in order to scale such semi-supervised algorithms to larger problems, it is desirable to pursue an *active* clustering method, i.e., an algorithm that maximizes the effectiveness of the available human labor by only requesting human input where it will have the greatest impact. Here, we propose a novel online framework for active semi-supervised spectral clustering that selects pairwise constraints as clustering proceeds, based on the principle of uncertainty reduction. Using a first-order Taylor expansion, we decompose the expected uncertainty reduction problem into a gradient and a step-scale, computed via an application of matrix perturbation theory and cluster-assignment entropy, respectively. The resulting model is used to estimate the uncertainty reduction potential of each sample in the dataset. We then present the human user with pairwise queries with respect to only the best candidate sample. We evaluate our method using three different image datasets (faces, leaves and dogs), a set of common UCI machine learning datasets and a gene dataset. The results validate our decomposition formulation and show that our method is consistently superior to existing state-of-the-art techniques, as well as being robust to noise and to unknown numbers of clusters.
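The cluster-assignment entropy mentioned in this abstract can be illustrated with a short sketch. It covers only the entropy term. The paper combines it with a gradient obtained from matrix perturbation theory, which is not reproduced here. The soft assignment probabilities and the helper names are hypothetical.

```python
import math

def assignment_entropy(probs):
    """Shannon entropy (in nats) of one sample's soft cluster assignment."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def most_uncertain(samples):
    """Return the name of the sample whose assignment has the highest entropy."""
    return max(samples, key=lambda name: assignment_entropy(samples[name]))

# Hypothetical soft assignments of three samples to two clusters.
samples = {
    "s1": [0.9, 0.1],
    "s2": [0.5, 0.5],
    "s3": [1.0, 0.0],
}
candidate = most_uncertain(samples)
```

A sample split evenly between clusters has maximal entropy, so it is a natural candidate for a pairwise query to the human user.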

Random Forests for Metric Learning with Implicit Pairwise Position Dependence

Jan 03, 2012

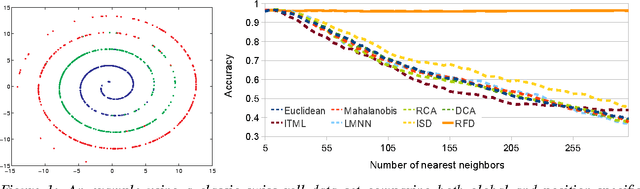

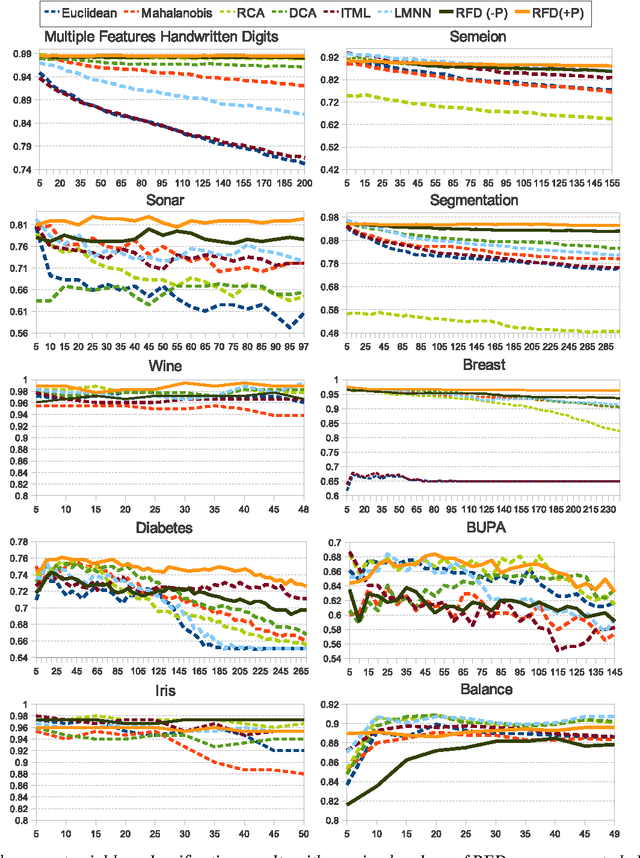

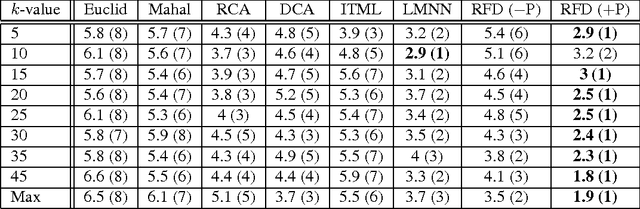

Abstract: Metric learning makes it plausible to learn distances for complex distributions of data from labeled data. However, to date, most metric learning methods are based on a single Mahalanobis metric, which cannot handle heterogeneous data well. Those that learn multiple metrics throughout the space have demonstrated superior accuracy, but at the cost of computational efficiency. Here, we take a new angle on the metric learning problem and learn a single metric that is able to implicitly adapt its distance function throughout the feature space. This metric adaptation is accomplished by using a random forest-based classifier to underpin the distance function and incorporate both absolute pairwise position and standard relative position into the representation. We have implemented and tested our method against state-of-the-art global and multi-metric methods on a variety of data sets. Overall, the proposed method outperforms both types of methods in terms of accuracy (consistently ranked first) and is an order of magnitude faster than state-of-the-art multi-metric methods (16x faster in the worst case).
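One plausible way to encode a pair of points with both relative and absolute position is sketched below. The specific choice of element-wise absolute difference for relative position and the midpoint for absolute position is an assumption made for illustration, and `pair_features` is a hypothetical helper rather than code from the paper.

```python
def pair_features(x_i, x_j):
    """Encode a pair of points as [relative position, absolute position].

    Relative position: element-wise absolute difference |x_i - x_j|.
    Absolute position: element-wise midpoint (x_i + x_j) / 2.
    Both parts are symmetric in the two points.
    """
    relative = [abs(a - b) for a, b in zip(x_i, x_j)]
    absolute = [(a + b) / 2 for a, b in zip(x_i, x_j)]
    return relative + absolute

features = pair_features([1.0, 4.0], [3.0, 0.0])
# features == [2.0, 4.0, 2.0, 2.0]
```

A classifier trained on such vectors to predict whether two points share a label can see where in the feature space the pair lies, which is what lets a single learned distance behave differently in different regions.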
