Ricardo Cannizzaro

Physics-Based Causal Reasoning for Safe & Robust Next-Best Action Selection in Robot Manipulation Tasks

Mar 21, 2024

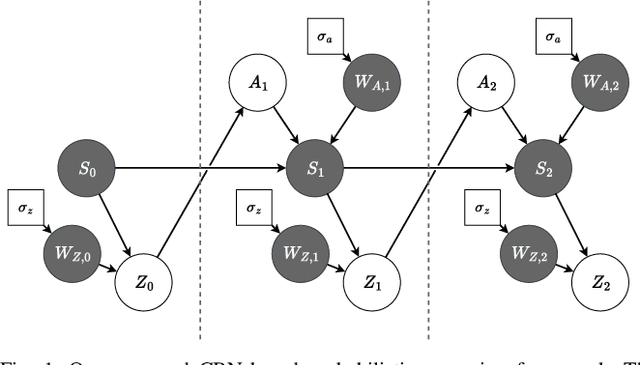

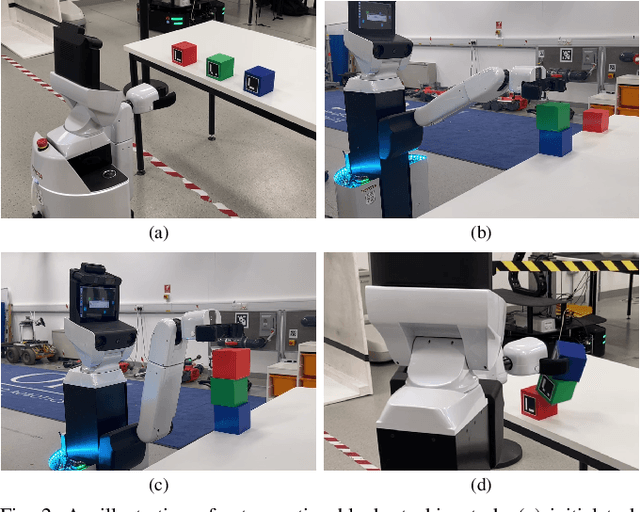

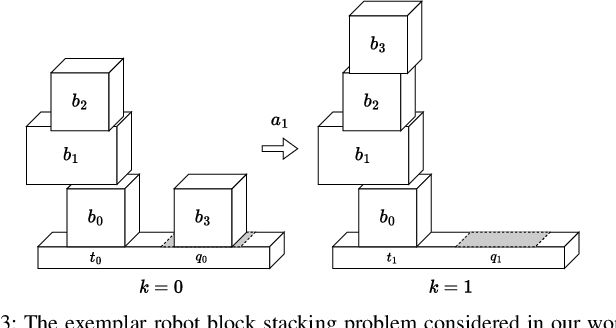

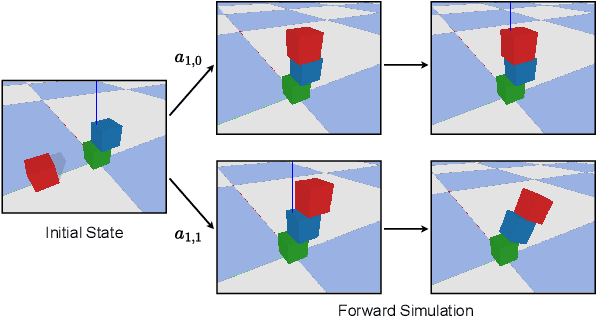

Abstract: Safe and efficient object manipulation is a key enabler of many real-world robot applications. However, this is challenging because robot operation must be robust to a range of sensor and actuator uncertainties. In this paper, we present a physics-informed causal-inference-based framework for a robot to probabilistically reason about candidate actions in a block stacking task in a partially observable setting. We integrate a physics-based simulation of the rigid-body system dynamics with a causal Bayesian network (CBN) formulation to define a causal generative probabilistic model of the robot decision-making process. Using simulation-based Monte Carlo experiments, we demonstrate our framework's ability to successfully: (1) predict block tower stability with high accuracy (prediction accuracy: 88.6%); and (2) select an approximate next-best action for the block stacking task, for execution by an integrated robot system, achieving a 94.2% task success rate. We also show our framework's suitability for real-world robot systems through successful task executions with a domestic support robot, with perception and manipulation sub-system integration. Hence, we show that by embedding physics-based causal reasoning into robots' decision-making processes, we can make robot task execution safer, more reliable, and more robust to various types of uncertainty.
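The idea of scoring candidate placements by Monte Carlo sampling can be illustrated with a minimal sketch. It is not the paper's framework: the physics simulation is replaced by a toy one-dimensional centre-of-mass check, and every name and parameter (`is_stable`, `stability_probability`, `select_next_best_action`, the Gaussian noise levels) is an assumption made for illustration.

```python
import random


def is_stable(top_centre, bottom_centre, bottom_width):
    """Toy stand-in for a physics simulation (assumption): the top block is
    stable if its centre of mass lies over the bottom block's support."""
    return abs(top_centre - bottom_centre) < bottom_width / 2.0


def stability_probability(candidate, bottom_estimate, bottom_width,
                          sensor_sigma, actuator_sigma, n_samples, rng):
    """Estimate P(stable | place at candidate) by sampling the uncertainties."""
    stable = 0
    for _ in range(n_samples):
        # Sensor uncertainty: the true bottom-block position is only estimated.
        bottom_true = rng.gauss(bottom_estimate, sensor_sigma)
        # Actuator uncertainty: the block lands near the commanded position.
        top_true = rng.gauss(candidate, actuator_sigma)
        if is_stable(top_true, bottom_true, bottom_width):
            stable += 1
    return stable / n_samples


def select_next_best_action(candidates, **model):
    """Return the candidate with the highest estimated stability probability."""
    scores = {c: stability_probability(c, **model) for c in candidates}
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    best, scores = select_next_best_action(
        [-0.04, 0.0, 0.04],          # hypothetical placement offsets in metres
        bottom_estimate=0.0, bottom_width=0.05,
        sensor_sigma=0.01, actuator_sigma=0.01,
        n_samples=2000, rng=random.Random(0),
    )
    print(best, scores)
```

The selection is approximate in the same sense as the abstract's wording: the estimate depends on the number of samples and on how well the noise model matches the real sensors and actuators.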

Towards Probabilistic Causal Discovery, Inference & Explanations for Autonomous Drones in Mine Surveying Tasks

Aug 19, 2023

Abstract: Causal modelling offers great potential to provide autonomous agents the ability to understand the data-generation process that governs their interactions with the world. Such models capture formal knowledge as well as probabilistic representations of noise and uncertainty typically encountered by autonomous robots in real-world environments. Thus, causality can aid autonomous agents in making decisions and explaining outcomes, but deploying causality in such a manner introduces new challenges. Here we identify challenges relating to causality in the context of a drone system operating in a salt mine. Such environments are challenging for autonomous agents because of the presence of confounders, non-stationarity, and the difficulty of building complete causal models ahead of time. To address these issues, we propose a probabilistic causal framework consisting of: causally-informed POMDP planning, online SCM adaptation, and post-hoc counterfactual explanations. Further, we outline planned experimentation to evaluate the framework integrated with a drone system in simulated mine environments and on a real-world mine dataset.

Towards a Causal Probabilistic Framework for Prediction, Action-Selection & Explanations for Robot Block-Stacking Tasks

Aug 11, 2023

Abstract: Uncertainties in the real world mean that it is impossible for system designers to anticipate and explicitly design for all scenarios that a robot might encounter. Thus, robots designed like this are fragile and fail outside of highly-controlled environments. Causal models provide a principled framework to encode formal knowledge of the causal relationships that govern the robot's interaction with its environment, in addition to probabilistic representations of noise and uncertainty typically encountered by real-world robots. Combined with causal inference, these models permit an autonomous agent to understand, reason about, and explain its environment. In this work, we focus on the problem of a robot block-stacking task due to the fundamental perception and manipulation capabilities it demonstrates, required by many applications including warehouse logistics and domestic human support robotics. We propose a novel causal probabilistic framework to embed a physics simulation capability into a structural causal model to permit robots to perceive and assess the current state of a block-stacking task, reason about the next-best action from placement candidates, and generate post-hoc counterfactual explanations. We provide exemplar next-best action selection results and outline planned experimentation in simulated and real-world robot block-stacking tasks.

CAR-DESPOT: Causally-Informed Online POMDP Planning for Robots in Confounded Environments

Apr 13, 2023

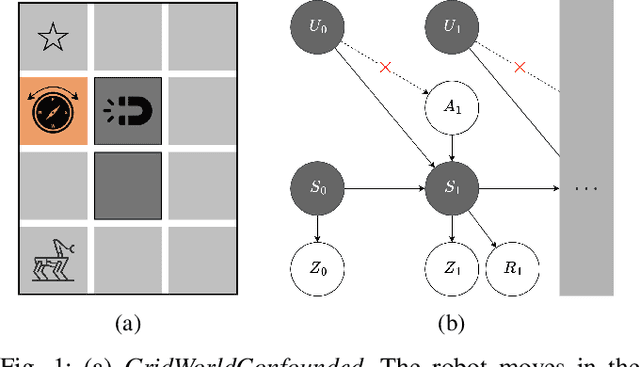

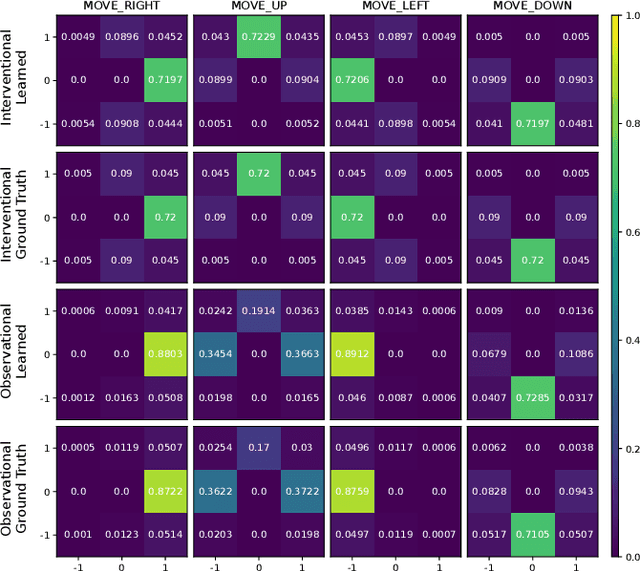

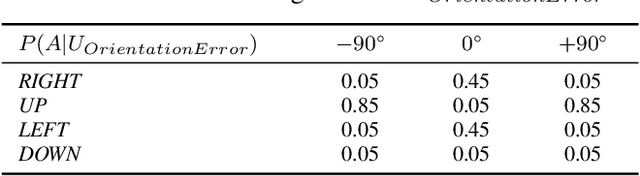

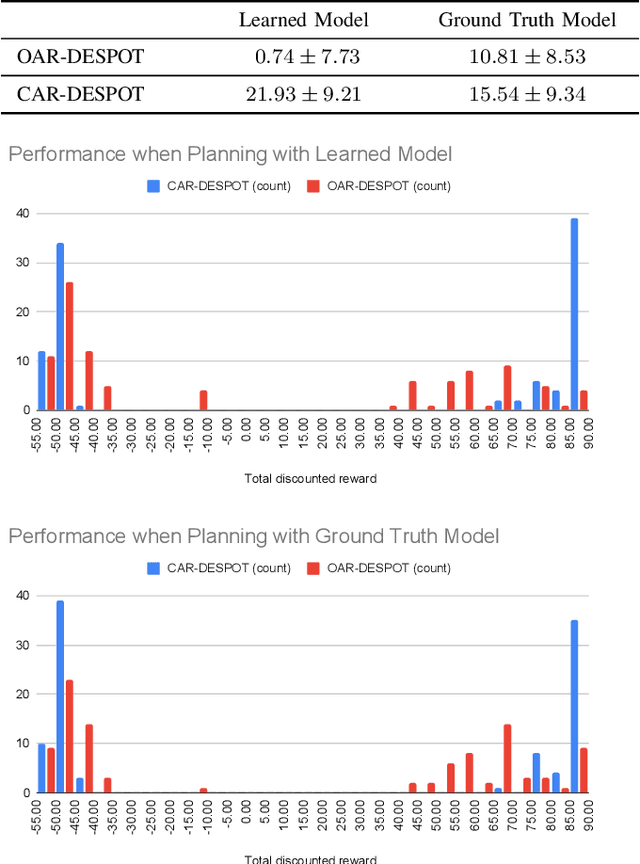

Abstract: Robots operating in real-world environments must reason about possible outcomes of stochastic actions and make decisions based on partial observations of the true world state. A major challenge for making accurate and robust action predictions is the problem of confounding, which if left untreated can lead to prediction errors. The partially observable Markov decision process (POMDP) is a widely used framework to model these stochastic and partially-observable decision-making problems. However, due to a lack of explicit causal semantics, POMDP planning methods are prone to confounding bias and thus in the presence of unobserved confounders may produce underperforming policies. This paper presents a novel causally-informed extension of "anytime regularized determinized sparse partially observable tree" (AR-DESPOT), a modern anytime online POMDP planner, using causal modelling and inference to eliminate errors caused by unmeasured confounder variables. We further propose a method to learn offline the partial parameterisation of the causal model for planning, from ground truth model data. We evaluate our methods on a toy problem with an unobserved confounder and show that the learned causal model is highly accurate, while our planning method is more robust to confounding and produces overall higher performing policies than AR-DESPOT.
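The confounding bias described above can be made concrete with a small worked example. The sketch below is not CAR-DESPOT and does not reproduce the paper's toy problem: it assumes a hypothetical binary confounder `U`, action `A`, and outcome `Y` with made-up probabilities, and compares the observational quantity P(Y=1 | A=a) with the interventional quantity P(Y=1 | do(A=a)) obtained by adjusting for `U`.

```python
# Hypothetical model parameters (assumptions for illustration only).
P_U = {0: 0.5, 1: 0.5}                      # prior over the confounder U
P_A1_GIVEN_U = {0: 0.2, 1: 0.8}             # logged behaviour: P(A=1 | U)
P_Y1_GIVEN_A_U = {                          # outcome model: P(Y=1 | A, U)
    (0, 0): 0.5, (0, 1): 0.5,
    (1, 0): 0.3, (1, 1): 0.9,
}


def p_a_given_u(a, u):
    """P(A=a | U=u) for a binary action."""
    p1 = P_A1_GIVEN_U[u]
    return p1 if a == 1 else 1.0 - p1


def observational(a):
    """P(Y=1 | A=a): what a planner would infer from logged data alone."""
    joint = sum(P_U[u] * p_a_given_u(a, u) * P_Y1_GIVEN_A_U[(a, u)] for u in P_U)
    marginal = sum(P_U[u] * p_a_given_u(a, u) for u in P_U)
    return joint / marginal


def interventional(a):
    """P(Y=1 | do(A=a)) via adjustment over U: sum_u P(u) * P(Y=1 | a, u)."""
    return sum(P_U[u] * P_Y1_GIVEN_A_U[(a, u)] for u in P_U)


if __name__ == "__main__":
    for a in (0, 1):
        print(a, observational(a), interventional(a))
```

With these numbers the observational estimate for action 1 is 0.78 while the interventional value is 0.60, so a planner trusting the logged data would overestimate that action. The adjustment needs the model over `U`, which is why the confounder being unobserved at planning time makes the problem hard.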

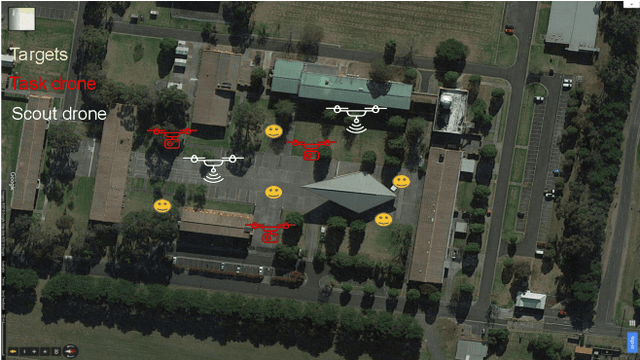

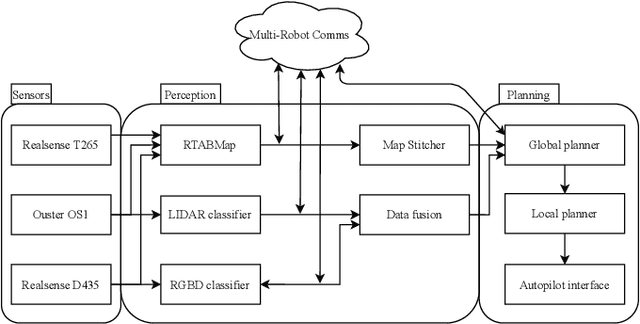

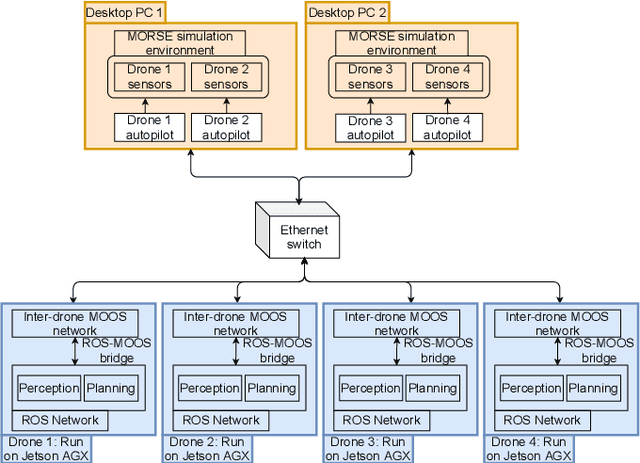

Decentralised Intelligence, Surveillance, and Reconnaissance in Unknown Environments with Heterogeneous Multi-Robot Systems

Jun 17, 2021

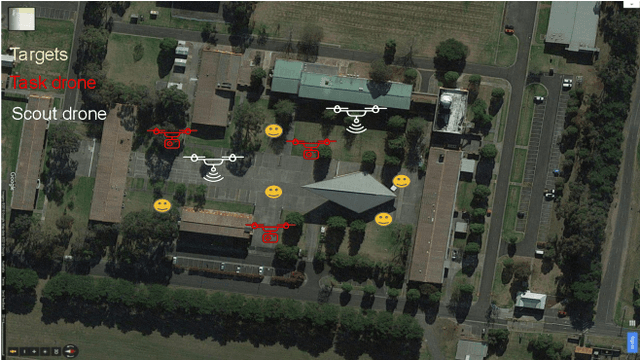

Abstract: We present the design and implementation of a decentralised, heterogeneous multi-robot system for performing intelligence, surveillance and reconnaissance (ISR) in an unknown environment. The team consists of functionally specialised robots that gather information and others that perform a mission-specific task, and is coordinated to achieve simultaneous exploration and exploitation in the unknown environment. We present a practical implementation of such a system, including decentralised inter-robot localisation, mapping, data fusion and coordination. The system is demonstrated in an efficient distributed simulation. We also describe a UAS platform for hardware experiments and report on ongoing progress.

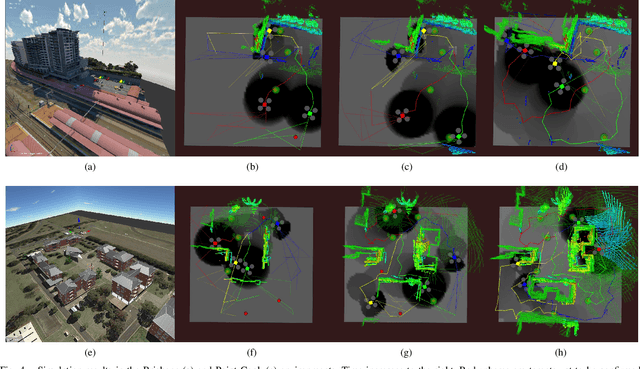

An Upper Confidence Bound for Simultaneous Exploration and Exploitation in Heterogeneous Multi-Robot Systems

May 13, 2021

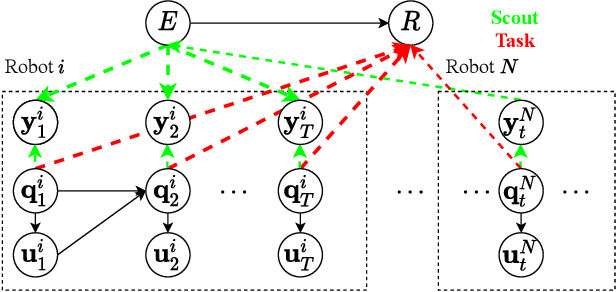

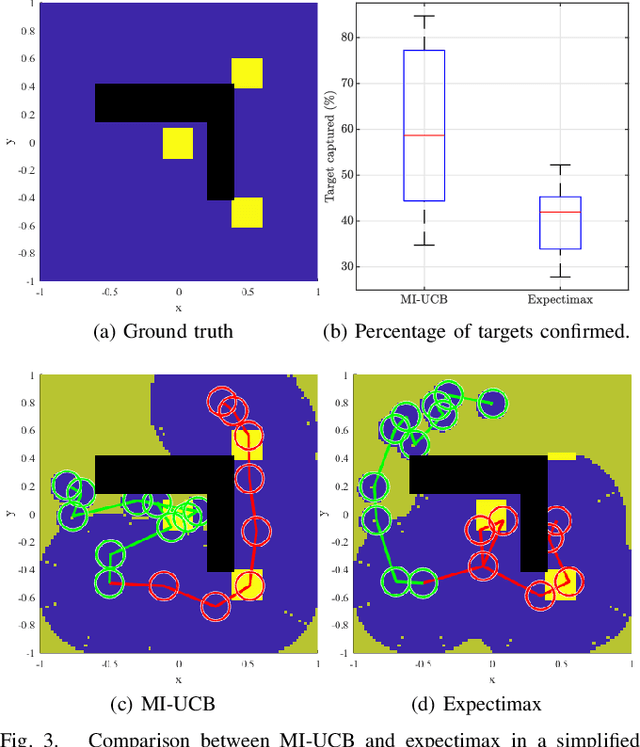

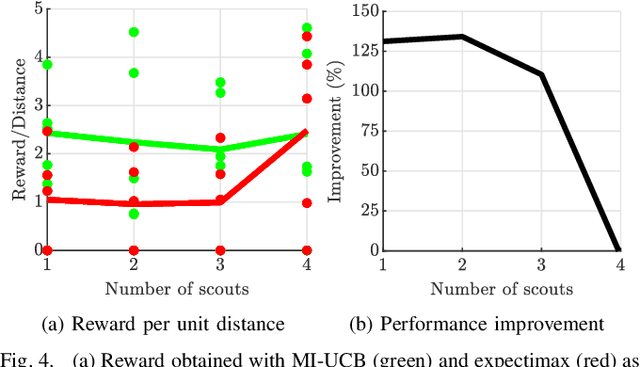

Abstract: Heterogeneous multi-robot systems are advantageous for operations in unknown environments because functionally specialised robots can gather environmental information, while others perform tasks. We define this decomposition as the scout-task robot architecture and show how it avoids the need to explicitly balance exploration and exploitation by permitting the system to do both simultaneously. The challenge is to guide exploration in a way that improves overall performance for time-limited tasks. We derive a novel upper confidence bound for simultaneous exploration and exploitation based on mutual information and present a general solution for scout-task coordination using decentralised Monte Carlo tree search. We evaluate the performance of our algorithms in a multi-drone surveillance scenario in which scout robots are equipped with low-resolution, long-range sensors and task robots capture detailed information using short-range sensors. The results address a new class of coordination problem for heterogeneous teams that has many practical applications.
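For readers unfamiliar with upper confidence bounds in tree search, the sketch below shows the standard UCB1 rule used for child selection in Monte Carlo tree search. It is a stand-in only: the paper's bound is based on mutual information and is not reproduced here, and the function names and the `stats` layout are assumptions for illustration.

```python
import math


def ucb1_score(mean_reward, n_visits, n_total, c=math.sqrt(2)):
    """Standard UCB1: mean reward plus an exploration bonus that shrinks
    as a child is visited more often. Unvisited children score infinity."""
    if n_visits == 0:
        return math.inf
    return mean_reward + c * math.sqrt(math.log(n_total) / n_visits)


def select_child(stats):
    """Pick the child with the highest UCB1 score.

    stats maps a child label to (mean_reward, n_visits); this layout is a
    hypothetical convenience, not taken from the paper."""
    n_total = sum(n for _, n in stats.values())
    return max(stats, key=lambda k: ucb1_score(stats[k][0], stats[k][1], n_total))


if __name__ == "__main__":
    # A rarely visited child can outrank one with a higher mean reward.
    print(select_child({"a": (0.6, 10), "b": (0.5, 1)}))
```

The exploration bonus is what lets the search trade off trying under-sampled options against exploiting the best-known one; the paper replaces this generic bonus with one tailored to the scout-task setting.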
