Rhys Howard

Generating Causal Explanations of Vehicular Agent Behavioural Interactions with Learnt Reward Profiles

Mar 18, 2025

Abstract: Transparency and explainability are important features that responsible autonomous vehicles should possess, particularly when interacting with humans, and causal reasoning offers a strong basis to provide these qualities. However, even if one assumes agents act to maximise some concept of reward, it is difficult to make accurate causal inferences of agent planning without capturing what is of importance to the agent. Thus our work aims to learn a weighting of reward metrics for agents such that explanations for agent interactions can be causally inferred. We validate our approach quantitatively and qualitatively across three real-world driving datasets, demonstrating a functional improvement over previous methods and competitive performance across evaluation metrics.

Extending Structural Causal Models for Use in Autonomous Embodied Systems

Jun 03, 2024

Abstract: Much work has been done to develop causal reasoning techniques across a number of domains; however, the utilisation of causality within autonomous systems is still in its infancy. Autonomous systems would greatly benefit from the integration of causality through the use of representations such as structural causal models (SCMs). Such integration would afford the system a higher level of transparency, enable post-hoc explanations of outcomes, and assist in the online inference of exogenous variables. These qualities are either directly beneficial to the autonomous system or a valuable step in building public trust and informing regulation. To this end we present a case study in which we describe a module-based autonomous driving system composed of SCMs. Approaching this task requires consideration of a number of challenges that arise when dealing with a system of great complexity and size that must operate by itself for extended periods of time. Here we describe these challenges and present solutions. The first of these is SCM contexts, with the remainder being three new variable categories, two of which are based upon functional programming monads. Finally, we conclude by presenting an example application of the causal capabilities of the autonomous driving system. In this example, we aim to attribute culpability between vehicular agents in a hypothetical road collision incident.

Generating and Explaining Corner Cases Using Learnt Probabilistic Lane Graphs

Aug 25, 2023

Abstract: Validating the safety of Autonomous Vehicles (AVs) operating in open-ended, dynamic environments is challenging as vehicles will eventually encounter safety-critical situations for which there is not representative training data. By increasing the coverage of different road and traffic conditions and by including corner cases in simulation-based scenario testing, the safety of AVs can be improved. However, the creation of corner case scenarios including multiple agents is non-trivial. Our approach allows engineers to generate novel, realistic corner cases based on historic traffic data and to explain why situations were safety-critical. In this paper, we introduce Probabilistic Lane Graphs (PLGs) to describe a finite set of lane positions and directions in which vehicles might travel. The structure of PLGs is learnt directly from spatio-temporal traffic data. The graph model represents the actions of the drivers in response to a given state in the form of a probabilistic policy. We use reinforcement learning techniques to modify this policy and to generate realistic and explainable corner case scenarios which can be used for assessing the safety of AVs.

Towards Probabilistic Causal Discovery, Inference & Explanations for Autonomous Drones in Mine Surveying Tasks

Aug 19, 2023

Abstract: Causal modelling offers great potential to provide autonomous agents the ability to understand the data-generation process that governs their interactions with the world. Such models capture formal knowledge as well as probabilistic representations of noise and uncertainty typically encountered by autonomous robots in real-world environments. Thus, causality can aid autonomous agents in making decisions and explaining outcomes, but deploying causality in such a manner introduces new challenges. Here we identify challenges relating to causality in the context of a drone system operating in a salt mine. Such environments are challenging for autonomous agents because of the presence of confounders, non-stationarity, and a difficulty in building complete causal models ahead of time. To address these issues, we propose a probabilistic causal framework consisting of: causally-informed POMDP planning, online SCM adaptation, and post-hoc counterfactual explanations. Further, we outline planned experimentation to evaluate the framework integrated with a drone system in simulated mine environments and on a real-world mine dataset.

Simulation-Based Counterfactual Causal Discovery on Real World Driver Behaviour

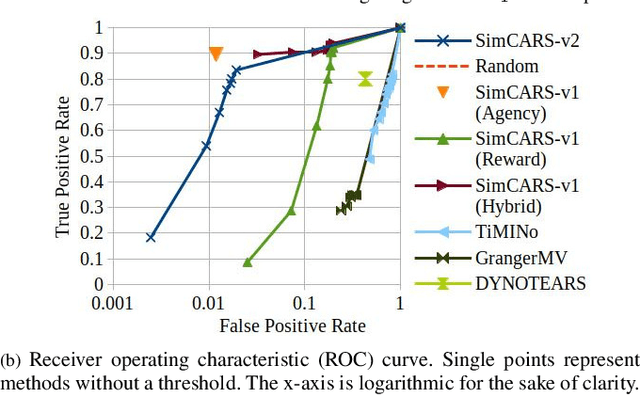

Jun 06, 2023

Abstract: Being able to reason about how one's behaviour can affect the behaviour of others is a core skill required of intelligent driving agents. Despite this, the state of the art struggles to meet the need of agents to discover causal links between themselves and others. Observational approaches struggle because of the non-stationarity of causal links in dynamic environments and the sparsity of causal interactions, all while being required to work in an online fashion. Meanwhile, interventional approaches are impractical as a vehicle cannot experiment with its actions on a public road. To counter the issue of non-stationarity we reformulate the problem in terms of extracted events, while the previously mentioned restriction upon interventions can be overcome with the use of counterfactual simulation. We present three variants of the proposed counterfactual causal discovery method and evaluate these against state-of-the-art observational temporal causal discovery methods across 3396 causal scenes extracted from a real-world driving dataset. We find that the proposed method significantly outperforms the state of the art on the proposed task quantitatively and can offer additional insights by comparing the outcome of an alternate series of decisions in a way that observational and interventional approaches cannot.

Evaluating Temporal Observation-Based Causal Discovery Techniques Applied to Road Driver Behaviour

Jan 31, 2023

Abstract: Autonomous robots are required to reason about the behaviour of dynamic agents in their environment. To this end, many approaches assume that causal models describing the interactions of agents are given a priori. However, in many application domains such models do not exist or cannot be engineered. Hence, the learning (or discovery) of high-level causal structures from low-level, temporal observations is a key problem in AI and robotics. However, the application of causal discovery methods to scenarios involving autonomous agents remains in the early stages of research. While a number of methods exist for performing causal discovery on time series data, these usually rely upon assumptions such as sufficiency and stationarity, which cannot be guaranteed in inter-agent behavioural interactions in the real world. In this paper we apply contemporary observation-based temporal causal discovery techniques to real-world and synthetic driving scenarios from multiple datasets. Our evaluation demonstrates and highlights the limitations of state-of-the-art approaches by comparing and contrasting the performance between real and synthetically generated data. Finally, based on our analysis, we discuss open issues related to causal discovery on autonomous robotics scenarios and propose future research directions for overcoming current limitations in the field.

Learning Transferable Push Manipulation Skills in Novel Contexts

Jul 29, 2020

Abstract: This paper is concerned with learning transferable forward models for push manipulation that can be applied to novel contexts, and with how to improve the quality of prediction when critical information is available. We propose to learn a parametric internal model for push interactions that, as in humans, enables a robot to predict the outcome of a physical interaction even in novel contexts. Given a desired push action, humans are capable of identifying where to place their finger on a new object so as to produce a predictable motion of the object. We achieve the same behaviour by factorising the learning into two parts. First, we learn a set of local contact models to represent the geometrical relations between the robot pusher, the object, and the environment. Then we learn a set of parametric local motion models to predict how these contacts change throughout a push. Together, the contact and motion models form our internal model. By adjusting the shapes of the distributions over the physical parameters, we modify the internal model's response. Uniform distributions yield coarse estimates when no information is available about the novel context (i.e. an unbiased predictor). A more accurate predictor can be learned for a specific environment/object pair (e.g. low friction/high mass), i.e. a biased predictor. The effectiveness of our approach is shown in a simulated environment in which a Pioneer 3-DX robot needs to predict a push outcome for a novel object, and we provide a proof of concept on a real robot. We train on 2 objects (a cube and a cylinder) for a total of 24,000 pushes in various conditions, and test on 6 objects encompassing a variety of shapes, sizes, and physical parameters for a total of 14,400 predicted push outcomes. Our results show that both biased and unbiased predictors can reliably produce predictions in line with the outcomes of a carefully tuned physics simulator.
