David Blaauw

University of Michigan

Memory-Efficient Acceleration of Block Low-Rank Foundation Models on Resource Constrained GPUs

Dec 24, 2025

Abstract: Recent advances in transformer-based foundation models have made them the default choice for many tasks, but their rapidly growing size makes fitting a full model on a single GPU increasingly difficult and their computational cost prohibitive. Block low-rank (BLR) compression techniques address this challenge by learning compact representations of weight matrices. While traditional low-rank (LR) methods often incur sharp accuracy drops, BLR approaches such as Monarch and BLAST can better capture the underlying structure, preserving accuracy while reducing computation and memory footprint. In this work, we use roofline analysis to show that, although BLR methods achieve theoretical savings and practical speedups for single-token inference, multi-token inference often becomes memory-bound in practice, increasing latency despite compiler-level optimizations in PyTorch. To address this, we introduce custom Triton kernels with partial fusion and memory layout optimizations for both Monarch and BLAST. On memory-constrained NVIDIA GPUs such as the Jetson Orin Nano and the A40, our kernels deliver up to $3.76\times$ speedups and $3\times$ model size compression over PyTorch dense baselines using the CUDA backend and compiler-level optimizations, while supporting various models including Llama-7/1B, GPT2-S, DiT-XL/2, and ViT-B. Our code is available at https://github.com/pabillam/mem-efficient-blr .
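The roofline argument above can be illustrated with simple FLOP and byte counts. The following is a hypothetical back-of-the-envelope model, not the paper's analysis: it assumes FP16 storage, a square $n \times n$ layer, and a Monarch-style layer built from two block-diagonal factors (permutations ignored) whose intermediate result either round-trips through off-chip memory (unfused, two kernels) or stays on-chip (an idealized `fused` case). The names `dense_cost` and `two_factor_blr_cost` are illustrative only.

```python
from dataclasses import dataclass

BYTES_PER_ELEM = 2  # assumption: FP16 activations and weights


@dataclass
class Cost:
    flops: int
    bytes_moved: int

    @property
    def intensity(self) -> float:
        """Arithmetic intensity: FLOPs per byte of off-chip traffic."""
        return self.flops / self.bytes_moved


def dense_cost(tokens: int, n: int) -> Cost:
    """Y = X @ W with X of shape (tokens, n) and W of shape (n, n)."""
    flops = 2 * tokens * n * n
    elems = tokens * n + n * n + tokens * n  # read X, read W, write Y
    return Cost(flops, elems * BYTES_PER_ELEM)


def two_factor_blr_cost(tokens: int, n: int, blocks: int, fused: bool = False) -> Cost:
    """Hypothetical Monarch-style layer: two block-diagonal factors, each with
    `blocks` dense blocks of shape (n // blocks, n // blocks); permutations ignored."""
    if n % blocks:
        raise ValueError("n must be divisible by blocks")
    factor_elems = n * n // blocks         # weights per factor
    flops = 2 * 2 * tokens * factor_elems  # two block-diagonal matmuls
    io = tokens * n                        # one (tokens, n) activation tensor
    if fused:
        # Idealized: the intermediate never leaves on-chip memory.
        elems = io + 2 * factor_elems + io
    else:
        # Two kernels: the intermediate is written by the first and re-read by the second.
        elems = 2 * (io + factor_elems + io)
    return Cost(flops, elems * BYTES_PER_ELEM)


if __name__ == "__main__":
    for t in (1, 256, 2048):
        d = dense_cost(t, 4096)
        u = two_factor_blr_cost(t, 4096, blocks=4)
        f = two_factor_blr_cost(t, 4096, blocks=4, fused=True)
        print(f"tokens={t}: bytes dense={d.bytes_moved} unfused={u.bytes_moved} fused={f.bytes_moved}")
```

With the hypothetical sizes $n = 4096$ and 4 blocks, the unfused two-factor layer moves roughly half the bytes of the dense layer for a single token, but at 2,048 tokens the intermediate round trip makes it move more bytes than the dense layer, while the idealized fused variant still moves fewer. This is one way a layer with fewer FLOPs can end up memory-bound and slower for multi-token inference.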

Microscopic Robots That Sense, Think, Act, and Compute

Mar 29, 2025

Abstract: While miniaturization has been a goal in robotics for nearly 40 years, roboticists have struggled to access sub-millimeter dimensions without making sacrifices to on-board information processing due to the unique physics of the microscale. Consequently, microrobots often lack the key features that distinguish their macroscopic cousins from other machines, namely on-robot systems for decision making, sensing, feedback, and programmable computation. Here, we take up the challenge of building a microrobot comparable in size to a single-celled paramecium that can sense, think, and act using onboard systems for computation, sensing, memory, locomotion, and communication. Built massively in parallel with fully lithographic processing, these microrobots can execute digitally defined algorithms and autonomously change behavior in response to their surroundings. Combined, these results pave the way for general purpose microrobots that can be programmed many times in a simple setup, cost under $0.01 per machine, and work together to carry out tasks without supervision in uncertain environments.

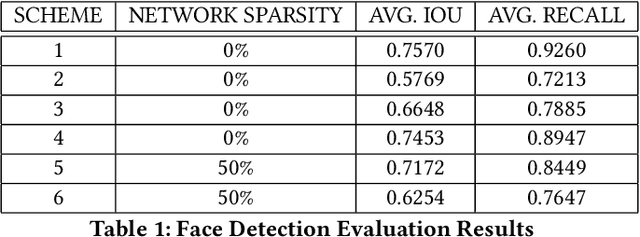

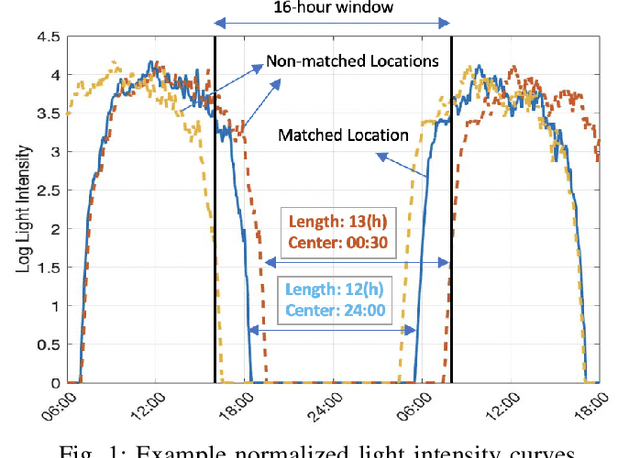

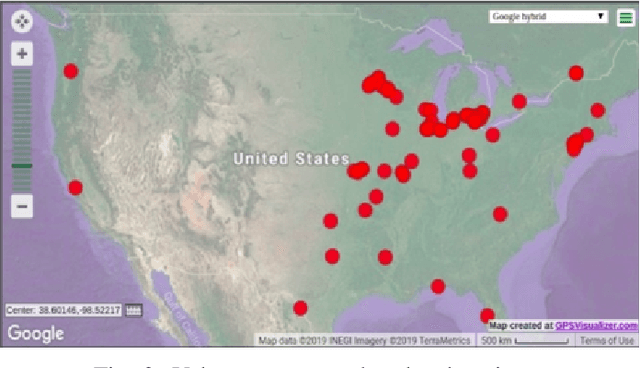

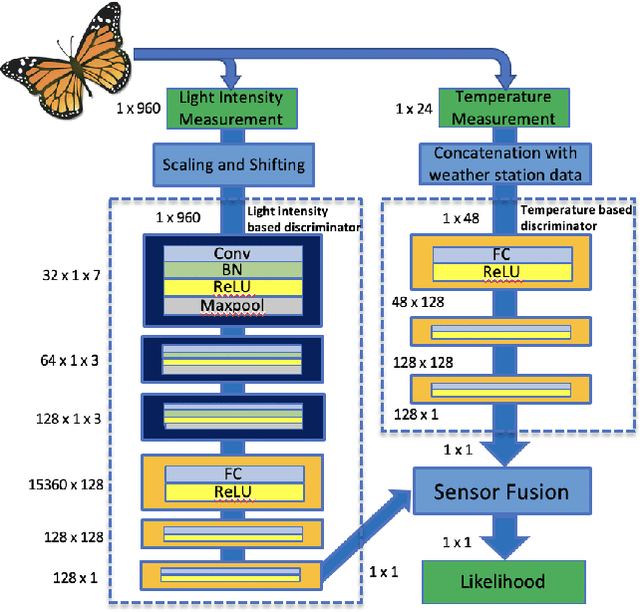

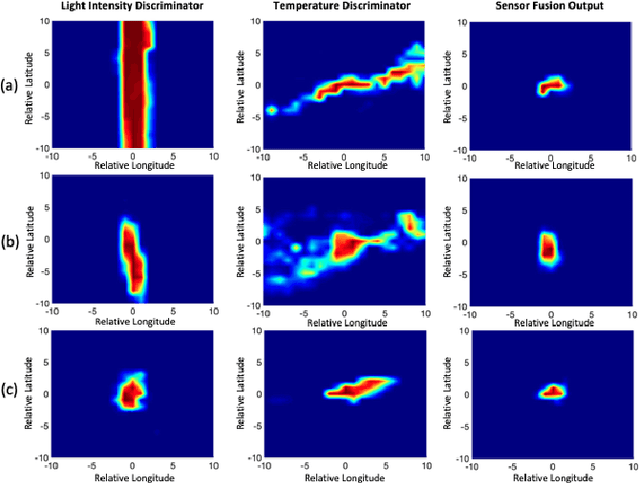

Siamese Learning-based Monarch Butterfly Localization

Jul 04, 2023

Abstract: A new GPS-less daily localization method for Monarch butterfly tracking is proposed, using deep-learning sensor fusion of daylight intensity and temperature data. Prior methods suffer from the location-independent day length around the equinox, which results in high localization errors near that date. This work proposes a new Siamese learning-based localization model that improves the accuracy and reduces the bias of daily Monarch butterfly localization from light and temperature measurements. To train and test the proposed algorithm, we use $5658$ daily measurement records collected through a data measurement campaign involving 306 volunteers across the U.S., Canada, and Mexico from 2018 to 2020. The model achieves a mean absolute error of $1.416^\circ$ in latitude and $0.393^\circ$ in longitude, outperforming the prior method.
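Why the equinox is hard for light-based localization can be seen from the textbook sunrise equation $\cos\omega_0 = -\tan\phi\,\tan\delta$, where $\phi$ is latitude, $\delta$ is solar declination, $\omega_0$ is the sunrise hour angle, and day length is $2\omega_0/15^\circ$ hours. At the equinox $\delta \approx 0$, so $\omega_0 = 90^\circ$ and every latitude sees about 12 hours of daylight. The sketch below is a purely geometric approximation (no refraction or solar-disc correction) for illustration; `day_length_hours` is a hypothetical helper, not part of the paper's model.

```python
import math


def day_length_hours(latitude_deg: float, declination_deg: float) -> float:
    """Geometric day length from the sunrise equation cos(w0) = -tan(phi) * tan(delta).

    Ignores atmospheric refraction and the solar disc radius, so it only
    approximates measured daylight duration.
    """
    x = -math.tan(math.radians(latitude_deg)) * math.tan(math.radians(declination_deg))
    x = max(-1.0, min(1.0, x))  # clamp: |x| > 1 means polar day or polar night
    w0 = math.degrees(math.acos(x))  # sunrise hour angle in degrees
    return 2.0 * w0 / 15.0  # the Earth turns 15 degrees per hour


if __name__ == "__main__":
    for decl, label in ((0.0, "equinox"), (23.44, "June solstice")):
        lengths = [round(day_length_hours(lat, decl), 2) for lat in (15.0, 30.0, 45.0)]
        print(label, lengths)
```

At zero declination all three latitudes return the same day length, whereas near the June solstice day length grows with latitude; this loss of latitude information around the equinox is the failure mode of prior methods described above.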

Variational Mixtures of ODEs for Inferring Cellular Gene Expression Dynamics

Jul 09, 2022

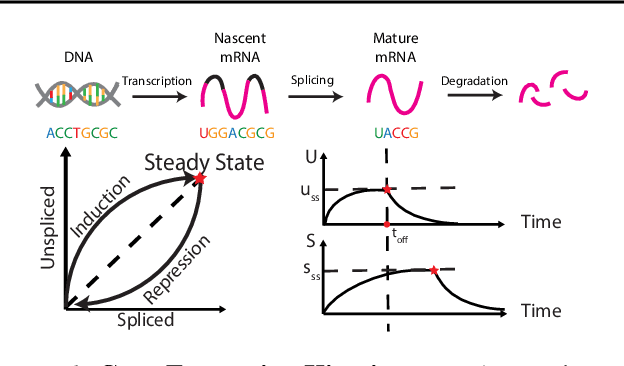

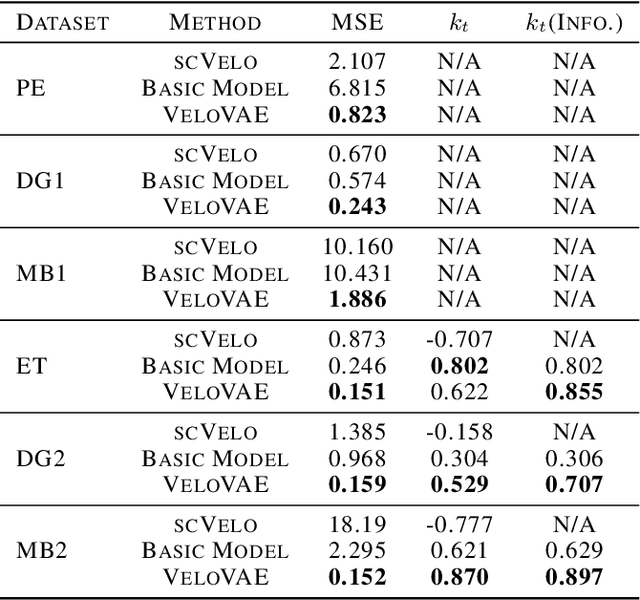

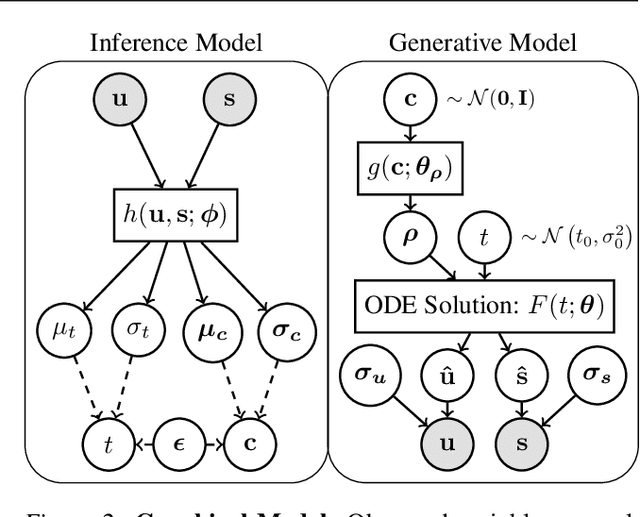

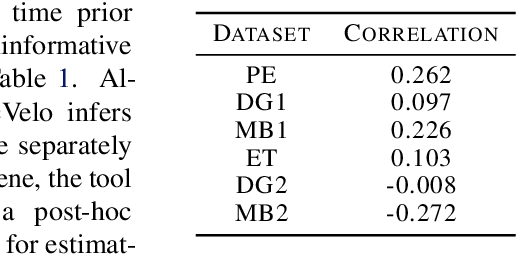

Abstract: A key problem in computational biology is discovering the gene expression changes that regulate cell fate transitions, in which one cell type turns into another. However, each individual cell cannot be tracked longitudinally, and cells at the same point in real time may be at different stages of the transition process. This can be viewed as a problem of learning the behavior of a dynamical system from observations whose times are unknown. Additionally, a single progenitor cell type often bifurcates into multiple child cell types, further complicating the problem of modeling the dynamics. To address this problem, we developed an approach called variational mixtures of ordinary differential equations. By using a simple family of ODEs informed by the biochemistry of gene expression to constrain the likelihood of a deep generative model, we can simultaneously infer the latent time and latent state of each cell and predict its future gene expression state. The model can be interpreted as a mixture of ODEs whose parameters vary continuously across a latent space of cell states. Our approach dramatically improves data fit, latent time inference, and future cell state estimation of single-cell gene expression data compared to previous approaches.

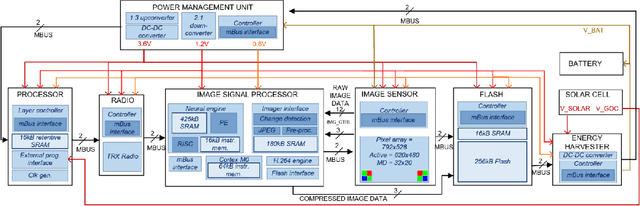

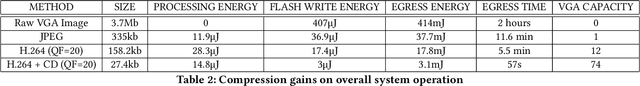

Millimeter-Scale Ultra-Low-Power Imaging System for Intelligent Edge Monitoring

Mar 09, 2022

Abstract: Millimeter-scale embedded sensing systems have unique advantages over larger devices because they can capture, analyze, store, and transmit data at the source while remaining unobtrusive and covert. However, area-constrained systems pose several challenges, including tight energy and peak-power budgets, limited data storage, costly wireless communication, and physical integration at a miniature scale. This paper proposes a novel 6.7$\times$7$\times$5 mm imaging system with deep-learning and image-processing capabilities for intelligent edge applications, demonstrated in a home-surveillance scenario. The system is implemented by vertically stacking custom ultra-low-power (ULP) ICs and uses techniques such as dynamic behavior-specific power management, hierarchical event detection, and a combination of data compression methods. It also demonstrates a new image-correcting neural network that compensates for non-idealities caused by a mm-scale lens and ULP front-end. The system can store 74 frames or offload data wirelessly, consuming 49.6 $\mu$W on average for an expected battery lifetime of 7 days.

Migrating Monarch Butterfly Localization Using Multi-Sensor Fusion Neural Networks

Dec 14, 2019

Abstract: Details of Monarch butterfly migration from the U.S. to Mexico remain a mystery due to the lack of a localization technology able to accurately localize and track migrating butterflies. In this paper, we propose a deep-learning-based butterfly localization algorithm that estimates a butterfly's daily location by analyzing light and temperature sensor logs continuously recorded by an ultra-low-power, mm-scale sensor attached to the butterfly. To train and test the proposed neural-network-based multi-sensor fusion localization algorithm, we collected over 1,500 days of real-world sensor measurements with 82 volunteers across the U.S. The proposed algorithm achieves a mean absolute error of $<1.5^\circ$ in latitude and $<0.5^\circ$ in longitude, meeting our target for the Monarch butterfly migration study.

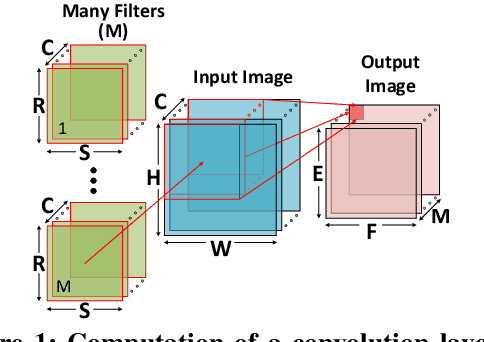

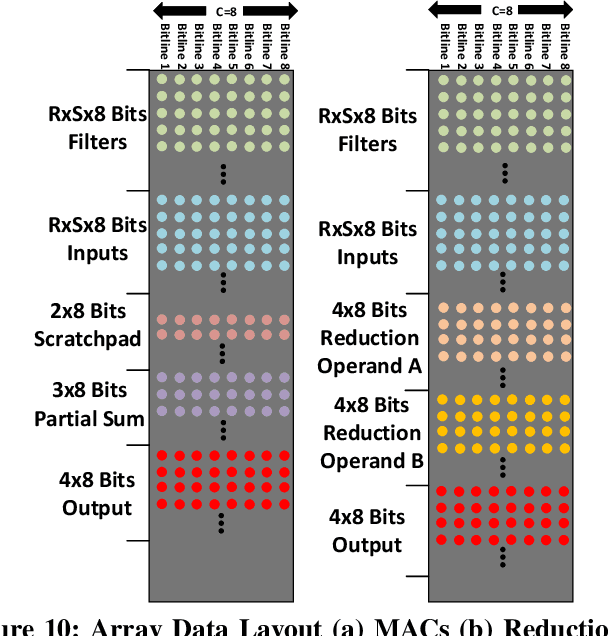

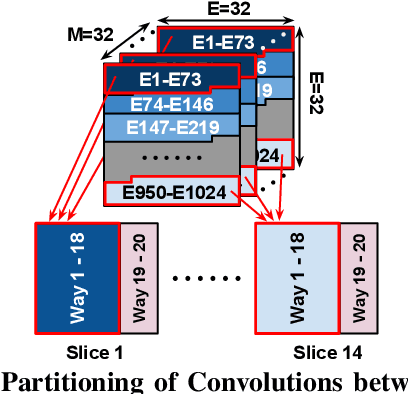

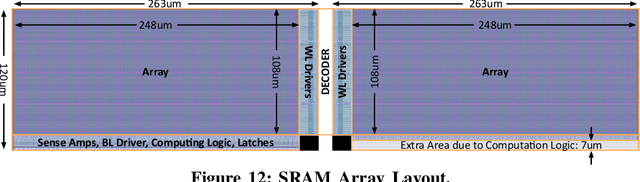

Neural Cache: Bit-Serial In-Cache Acceleration of Deep Neural Networks

May 09, 2018

Abstract: This paper presents the Neural Cache architecture, which repurposes cache structures into massively parallel compute units capable of running Deep Neural Network inference. Techniques are proposed to perform in-situ arithmetic in SRAM arrays, create efficient data mappings, and reduce data movement. The Neural Cache architecture can fully execute convolutional, fully connected, and pooling layers in-cache, and it also supports quantization in-cache. Our experimental results show that, for the Inception v3 model, the proposed architecture improves inference latency by 18.3x over a state-of-the-art multi-core CPU (Xeon E5) and by 7.7x over a server-class GPU (Titan Xp). Neural Cache improves inference throughput by 12.4x over the CPU (2.2x over the GPU), while reducing power consumption by 50% relative to the CPU (53% relative to the GPU).
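Bit-serial in-cache computing, as the title describes it, handles operands one bit position per step while many SRAM columns work in parallel. As a rough software analogy, assuming a transposed layout in which each bit-plane (one row) holds bit $k$ of every lane, the sketch below adds all lanes at once, using a Python int as a row of bit cells. It is a hypothetical emulation of the general technique, not the Neural Cache circuit or data mapping; `to_bit_planes`, `from_bit_planes`, and `bit_serial_add` are illustrative names.

```python
def to_bit_planes(values: list[int], width: int) -> list[int]:
    """Transpose lane values into bit-planes: plane k holds bit k of every lane,
    with lane i stored at bit position i of a Python int (one 'row' of cells)."""
    planes = []
    for k in range(width):
        plane = 0
        for lane, v in enumerate(values):
            plane |= ((v >> k) & 1) << lane
        planes.append(plane)
    return planes


def from_bit_planes(planes: list[int], lanes: int) -> list[int]:
    """Inverse transpose: rebuild each lane's integer from the bit-planes."""
    return [
        sum(((planes[k] >> lane) & 1) << k for k in range(len(planes)))
        for lane in range(lanes)
    ]


def bit_serial_add(a_planes: list[int], b_planes: list[int]) -> list[int]:
    """Full-adder logic applied to whole rows, LSB first: every lane advances
    one bit per step. Returns width + 1 planes; the last is the carry-out."""
    carry = 0  # one carry bit per lane, packed into a single int
    out = []
    for a, b in zip(a_planes, b_planes):
        out.append(a ^ b ^ carry)
        carry = (a & b) | (carry & (a ^ b))
    out.append(carry)
    return out


if __name__ == "__main__":
    a, b = [3, 200, 255, 0], [5, 100, 1, 0]
    s = bit_serial_add(to_bit_planes(a, 8), to_bit_planes(b, 8))
    print(from_bit_planes(s, len(a)))
```

The loop runs once per bit position, so its step count depends on operand width rather than on the number of lanes; in this analogy, wider rows add parallelism without adding steps.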
