Dario Fuoli

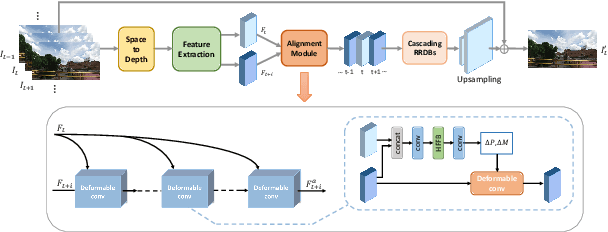

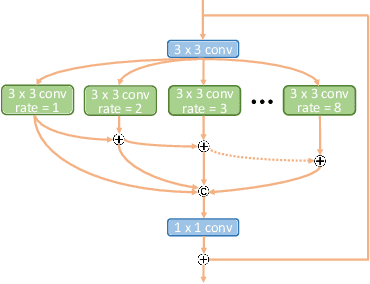

Fast Online Video Super-Resolution with Deformable Attention Pyramid

Feb 03, 2022

Abstract: Video super-resolution (VSR) has many applications that pose strict causal, real-time, and latency constraints, including video streaming and TV. We address the VSR problem under these settings, which pose additional important challenges since information from future frames is unavailable. Importantly, designing efficient yet effective frame alignment and fusion modules remains a central problem. In this work, we propose a recurrent VSR architecture based on a deformable attention pyramid (DAP). Our DAP aligns and integrates information from the recurrent state into the current frame prediction. To circumvent the computational cost of traditional attention-based methods, we only attend to a limited number of spatial locations, which are dynamically predicted by the DAP. Comprehensive experiments and analysis of the proposed key innovations show the effectiveness of our approach. We significantly reduce processing time in comparison to state-of-the-art methods, while maintaining high performance. We surpass the state-of-the-art method EDVR-M on two standard benchmarks with a speed-up of over 3x.

Fourier Space Losses for Efficient Perceptual Image Super-Resolution

Jun 01, 2021

Abstract: Many super-resolution (SR) models are optimized for high performance only and therefore lack efficiency due to large model complexity. As large models are often not practical in real-world applications, we investigate and propose novel loss functions to enable SR with high perceptual quality from much more efficient models. The representative power of a given low-complexity generator network can only be fully leveraged by strong guidance towards the optimal set of parameters. We show that it is possible to improve the performance of a recently introduced efficient generator architecture solely with the application of our proposed loss functions. In particular, we use a Fourier space supervision loss for improved restoration of missing high-frequency (HF) content from the ground truth image and design a discriminator architecture working directly in the Fourier domain to better match the target HF distribution. We show that our losses' direct emphasis on the frequencies in Fourier space significantly boosts the perceptual image quality, while at the same time retaining high restoration quality in comparison to previously proposed loss functions for this task. The performance is further improved by utilizing a combination of spatial and frequency domain losses, as both representations provide complementary information during training. On top of that, the trained generator achieves results comparable to the state-of-the-art perceptual SR methods RankSRGAN and SRFlow while being 2.4x and 48x faster, respectively.
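
The idea of combining a spatial loss with a Fourier-space loss can be illustrated with a small NumPy sketch. The abstract does not give the exact formulation, so everything here is an assumption: the function names `fourier_amplitude_l1` and `combined_loss`, the choice of an L1 distance between amplitude spectra, and the `weight` parameter are hypothetical and are not taken from the paper.

```python
import numpy as np


def fourier_amplitude_l1(pred, target):
    """Mean absolute difference between the 2D FFT amplitude spectra.

    Assumption: an L1 distance on amplitudes; the paper's loss may differ.
    """
    pred_f = np.fft.fft2(pred, norm="ortho")
    target_f = np.fft.fft2(target, norm="ortho")
    return float(np.mean(np.abs(np.abs(pred_f) - np.abs(target_f))))


def combined_loss(pred, target, weight=0.5):
    """Spatial L1 loss plus a weighted Fourier amplitude loss.

    `weight` is a hypothetical balancing factor, not a value from the paper.
    """
    spatial = float(np.mean(np.abs(pred - target)))
    return spatial + weight * fourier_amplitude_l1(pred, target)


# Toy data: a random "ground truth" and a flat prediction that keeps only
# the mean intensity, i.e. it has lost all non-DC frequency content.
rng = np.random.default_rng(0)
target = rng.random((8, 8))
pred = np.full_like(target, target.mean())

print("Fourier loss, flat prediction:", fourier_amplitude_l1(pred, target))
print("Combined loss, flat prediction:", combined_loss(pred, target))
print("Combined loss, perfect prediction:", combined_loss(target, target))
```

The flat prediction matches the target's mean, yet the Fourier term still penalizes it because every frequency other than the DC component is missing. This is the kind of high-frequency emphasis the abstract describes.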

An Efficient Recurrent Adversarial Framework for Unsupervised Real-Time Video Enhancement

Dec 24, 2020

Abstract: Video enhancement is a challenging problem, more so than still-image enhancement, mainly due to high computational cost, larger data volumes, and the difficulty of achieving consistency in the spatio-temporal domain. In practice, these challenges are often coupled with the lack of example pairs, which inhibits the application of supervised learning strategies. To address these challenges, we propose an efficient adversarial video enhancement framework that learns directly from unpaired video examples. In particular, our framework introduces new recurrent cells that consist of interleaved local and global modules for implicit integration of spatial and temporal information. The proposed design allows our recurrent cells to efficiently propagate spatio-temporal information across frames and reduces the need for high-complexity networks. Our setting enables learning from unpaired videos in a cyclic adversarial manner, where the proposed recurrent units are employed in all architectures. Efficient training is accomplished by introducing a single discriminator that learns the joint distribution of the source and target domains simultaneously. The enhancement results demonstrate clear superiority of the proposed video enhancer over state-of-the-art methods in terms of visual quality, quantitative metrics, and inference speed. Notably, our video enhancer is capable of enhancing over 35 frames per second of FullHD video (1080x1920).

AIM 2020 Challenge on Video Extreme Super-Resolution: Methods and Results

Sep 14, 2020

Abstract: This paper reviews the video extreme super-resolution challenge associated with the AIM 2020 workshop at ECCV 2020. Common scaling factors for learned video super-resolution (VSR) do not go beyond factor 4. Missing information can be restored well in this region, especially in HR videos, where the high-frequency content mostly consists of texture details. The task in this challenge is to upscale videos with an extreme factor of 16, which results in more serious degradations that also affect the structural integrity of the videos. A single pixel in the low-resolution (LR) domain corresponds to 256 pixels in the high-resolution (HR) domain. Due to this massive information loss, it is hard to accurately restore the missing information. Track 1 is set up to gauge the state of the art for such a demanding task, where fidelity to the ground truth is measured by PSNR and SSIM. Perceptually higher quality can be achieved at the expense of fidelity by generating plausible high-frequency content. Track 2 therefore aims at generating visually pleasing results, which are ranked according to human perception, evaluated by a user study. In contrast to single image super-resolution (SISR), VSR can benefit from additional information in the temporal domain. However, this also imposes an additional requirement, as the generated frames need to be consistent over time.
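
The arithmetic behind the factor-16 setting, along with the PSNR fidelity metric named for Track 1, can be sketched in a few lines of Python. The helper names `pixels_per_lr_pixel`, `hr_shape`, and `psnr` and the example frame size are illustrative assumptions; PSNR is computed with its standard definition, 10 * log10(peak^2 / MSE), and this is not the challenge's evaluation code.

```python
import math


def pixels_per_lr_pixel(scale):
    """Number of HR pixels covered by one LR pixel for a given scale factor."""
    return scale * scale


def hr_shape(lr_height, lr_width, scale):
    """HR frame size obtained by upscaling an LR frame by `scale`."""
    return lr_height * scale, lr_width * scale


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB over two equal-length pixel sequences."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(peak ** 2 / mse)


print(pixels_per_lr_pixel(16))   # 256, matching the figure in the abstract
print(pixels_per_lr_pixel(4))    # 16, the common upper limit for learned VSR
print(hr_shape(60, 120, 16))     # hypothetical 60x120 LR frame
print(psnr([0.0, 1.0], [0.1, 0.9]))
```

Going from factor 4 to factor 16 increases the number of HR pixels to be inferred per LR pixel from 16 to 256, which is why the abstract describes the information loss as massive.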

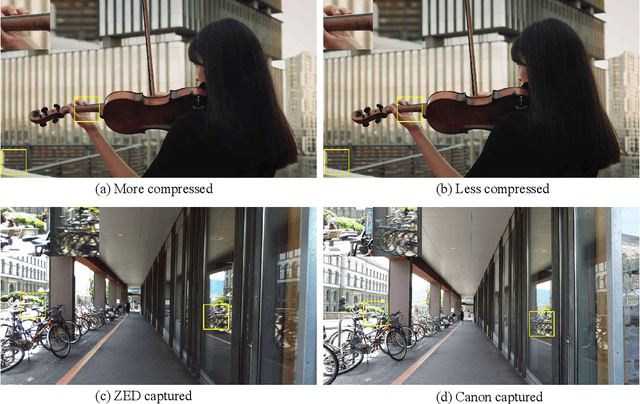

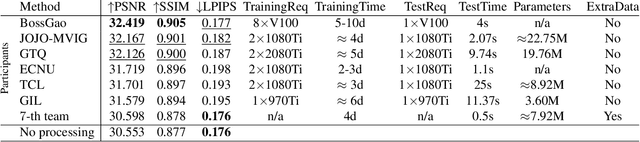

NTIRE 2020 Challenge on Video Quality Mapping: Methods and Results

May 06, 2020

Abstract: This paper reviews the NTIRE 2020 challenge on video quality mapping (VQM), which addresses the problem of quality mapping from a source video domain to a target video domain. The challenge includes both a supervised track (track 1) and a weakly-supervised track (track 2) for two benchmark datasets. In particular, track 1 offers a new Internet video benchmark, requiring algorithms to learn the mapping from more compressed videos to less compressed videos in a supervised manner. In track 2, algorithms are required to learn the quality mapping from one device to another when their quality varies substantially and weakly-aligned video pairs are available. For track 1, a total of 7 teams competed in the final test phase, demonstrating novel and effective solutions to the problem. For track 2, some existing methods are evaluated, showing promising solutions to the weakly-supervised video quality mapping problem.
