Danda B. Rawat

Digital-Twin Empowered Deep Reinforcement Learning For Site-Specific Radio Resource Management in NextG Wireless Aerial Corridor

Feb 03, 2026

Abstract:Joint base station (BS) association and beam selection in multi-UAV aerial corridors constitutes a challenging radio resource management (RRM) problem. It is driven by high-dimensional action spaces, the substantial overhead needed to acquire global channel state information (CSI), rapidly varying propagation channels, and stringent latency requirements. Conventional combinatorial optimization methods, while near-optimal, are computationally prohibitive for real-time operation in such dynamic environments. While learning-based approaches can mitigate computational complexity and CSI overhead, the need for extensive site-specific (SS) datasets for model training remains a key challenge. To address these challenges, we develop a Digital Twin (DT)-enabled two-stage optimization framework that couples physics-based beam gain modeling with deep reinforcement learning (DRL) for scalable online decision-making. In the first stage, a channel twin (CT) is constructed using a high-fidelity ray-tracing solver with geo-spatial context and network information to capture SS propagation characteristics, and a dual annealing algorithm is employed to precompute optimal transmission beam directions. In the second stage, a Multi-Head Proximal Policy Optimization (MH-PPO) agent, equipped with a scalable multi-head actor-critic architecture, is trained on the DT-generated channel dataset to map complex channel and beam states directly to joint UAV-BS-beam association decisions. The proposed PPO agent achieves a 44%-121% improvement over DQN and a 249%-807% gain over traditional heuristic-based optimization schemes in a dense UAV scenario, while reducing inference latency by several orders of magnitude. These results demonstrate that DT-driven training pipelines can deliver high-performance, low-latency RRM policies tailored to SS deployments and suitable for real-time resource management in next-generation aerial corridor networks.
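To make the idea of precomputing a beam direction from a physics-based gain model concrete, here is a minimal sketch. It is not the paper's pipeline: it assumes a free-space uniform linear array (ULA) instead of a ray-traced channel twin, and it swaps the dual annealing search for an exhaustive search over a small candidate set. The names `ula_beam_gain` and `best_beam`, the element count, the half-wavelength spacing, and the 5-degree codebook are all illustrative assumptions.

```python
import cmath
import math


def ula_beam_gain(steer_deg, target_deg, n_elements=8, spacing_wavelengths=0.5):
    """Normalized gain of a ULA steered to steer_deg, seen from target_deg.

    Angles are measured from broadside. This is a free-space array-factor
    model (an assumption), not a ray-traced channel.
    """
    psi = 2 * math.pi * spacing_wavelengths * (
        math.sin(math.radians(target_deg)) - math.sin(math.radians(steer_deg))
    )
    array_factor = sum(cmath.exp(1j * n * psi) for n in range(n_elements))
    return abs(array_factor) ** 2 / n_elements ** 2  # equals 1.0 when aligned


def best_beam(target_deg, candidates):
    """Exhaustive search stand-in for the dual annealing step (assumption)."""
    return max(candidates, key=lambda angle: ula_beam_gain(angle, target_deg))


# Hypothetical codebook of steering angles and a UAV seen at 20 degrees.
codebook = range(-60, 61, 5)
chosen = best_beam(20, codebook)
print(chosen, ula_beam_gain(chosen, 20))
```

A global optimizer such as dual annealing becomes useful when the gain surface comes from a site-specific channel with many local maxima and the search space is continuous; the grid search here only works because the toy problem is one-dimensional and small.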

Think Like a Person Before Responding: A Multi-Faceted Evaluation of Persona-Guided LLMs for Countering Hate

Jun 04, 2025

Abstract:Automated counter-narratives (CN) offer a promising strategy for mitigating online hate speech, yet concerns about their affective tone, accessibility, and ethical risks remain. We propose a framework for evaluating Large Language Model (LLM)-generated CNs across four dimensions: persona framing, verbosity and readability, affective tone, and ethical robustness. Using GPT-4o-Mini, Cohere's CommandR-7B, and Meta's LLaMA 3.1-70B, we assess three prompting strategies on the MT-Conan and HatEval datasets. Our findings reveal that LLM-generated CNs are often verbose and adapted for people with college-level literacy, limiting their accessibility. While emotionally guided prompts yield more empathetic and readable responses, there remain concerns surrounding safety and effectiveness.

Digital Twin Enabled Site Specific Channel Precoding: Over the Air CIR Inference

Jan 27, 2025

Abstract:This paper investigates the significance of designing a reliable, intelligent, and true physical environment-aware precoding scheme by leveraging an accurately designed channel twin model to obtain realistic channel state information (CSI) for cellular communication systems. Specifically, we propose a fine-tuned multi-step channel twin design process that can render CSI very close to the CSI of the actual environment. After generating precise CSI, we execute precoding at the transmitter end using the obtained CSI. We demonstrate that a two-step parameter tuning approach, which first designs the channel twin by ray tracing (RT) emulation and then fine-tunes the CSI with an artificial intelligence (AI) based algorithm, can significantly reduce the gap between the actual CSI and the fine-tuned digital twin (DT) rendered CSI. The simulation results show the effectiveness of the proposed novel approach in designing a true physical environment-aware channel twin model.

Deep Learning Model-Based Channel Estimation for THz Band Massive MIMO with RF Impairments

Sep 24, 2024

Abstract:THz band enabled large-scale massive MIMO (M-MIMO) is considered a key enabler for 6G technology, given its enormous bandwidth and low-latency connectivity. In the large-scale M-MIMO configuration, the enlarged array aperture and small THz wavelengths result in an amalgamation of both far-field and near-field paths, which makes tasks such as channel estimation for THz M-MIMO highly challenging. Moreover, at the THz transceiver, radio frequency (RF) impairments such as phase noise (PN) of the analog devices also lead to degradation in channel estimation performance. Classical estimators as well as traditional deep learning (DL) based algorithms struggle to maintain their robustness when operating on large-scale antenna arrays, i.e., M-MIMO, and when RF impairments are considered for practical usage. To effectively address this issue, it is crucial to utilize a neural network (NN) that has the ability to study the behaviors of the channel and RF impairment correlations, such as a recurrent neural network (RNN). The RF impairments act as sequential noise data that is subsequently incorporated with the channel data, which motivates the choice of a specific type of RNN known as bidirectional long short-term memory (BiLSTM), followed by gated recurrent units (GRU), to process the sequential data. Simulation results demonstrate that our proposed model outperforms other benchmark approaches at various signal-to-noise ratio (SNR) levels.

Reinforcement Learning-enabled Satellite Constellation Reconfiguration and Retasking for Mission-Critical Applications

Sep 03, 2024

Abstract:The development of satellite constellation applications is rapidly advancing due to increasing user demands, reduced operational costs, and technological advancements. However, a significant gap in the existing literature concerns reconfiguration and retasking issues within satellite constellations, which is the primary focus of our research. In this work, we critically assess the impact of satellite failures on constellation performance and the associated task requirements. To facilitate this analysis, we introduce a system modeling approach for GPS satellite constellations, enabling an investigation into performance dynamics and task distribution strategies, particularly in scenarios where satellite failures occur during mission-critical operations. Additionally, we introduce reinforcement learning (RL) techniques, specifically Q-learning, Policy Gradient, Deep Q-Network (DQN), and Proximal Policy Optimization (PPO), for managing satellite constellations, addressing the challenges posed by reconfiguration and retasking following satellite failures. Our results demonstrate that DQN and PPO achieve effective outcomes in terms of average rewards, task completion rates, and response times.
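As an illustration of the simplest of the listed techniques, the sketch below runs tabular Q-learning on a toy retasking problem. The environment is entirely hypothetical and is not the paper's GPS constellation model: the state is the number of tasks left uncovered after a failure, and the two actions (a slow reconfiguration covering one task at cost 1.0, a fast retasking covering two tasks at cost 1.4) are invented for the example.

```python
import random

N_TASKS = 4  # hypothetical: tasks left uncovered right after a satellite failure

# action -> (tasks covered, reward); both entries are illustrative assumptions
ACTIONS = {
    0: (1, -1.0),  # slow reconfiguration
    1: (2, -1.4),  # fast retasking
}


def step(state, action):
    """Deterministic toy transition: cover some tasks and pay a cost."""
    covered, reward = ACTIONS[action]
    return max(state - covered, 0), reward


def train(episodes=5000, alpha=0.5, gamma=1.0, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {s: [0.0, 0.0] for s in range(N_TASKS + 1)}  # q[0] is terminal
    for _ in range(episodes):
        state = rng.randint(1, N_TASKS)
        while state > 0:
            # epsilon-greedy behaviour policy
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((0, 1), key=lambda a: q[state][a])
            nxt, reward = step(state, action)
            # Q-learning update: bootstrap from the best next-state value
            target = reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q_table = train()
policy = {s: max((0, 1), key=lambda a: q_table[s][a]) for s in range(1, N_TASKS + 1)}
print(policy)
```

With these made-up costs the learned policy uses the slow action when one task remains and the fast action when two or four remain; DQN and PPO replace the table with neural networks so that the same idea scales to larger state spaces.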

Neuro-Symbolic AI for Military Applications

Aug 17, 2024

Abstract:Artificial Intelligence (AI) plays a significant role in enhancing the capabilities of defense systems, revolutionizing strategic decision-making, and shaping the future landscape of military operations. Neuro-Symbolic AI is an emerging approach that leverages and augments the strengths of neural networks and symbolic reasoning. These systems have the potential to be more impactful and flexible than traditional AI systems, making them well-suited for military applications. This paper comprehensively explores the diverse dimensions and capabilities of Neuro-Symbolic AI, aiming to shed light on its potential applications in military contexts. We investigate its capacity to improve decision-making, automate complex intelligence analysis, and strengthen autonomous systems. We further explore its potential to solve complex tasks in various domains, in addition to its applications in military contexts. Through this exploration, we address ethical, strategic, and technical considerations crucial to the development and deployment of Neuro-Symbolic AI in military and civilian applications. Contributing to the growing body of research, this study represents a comprehensive exploration of the extensive possibilities offered by Neuro-Symbolic AI.

Recent Advances in Generative AI and Large Language Models: Current Status, Challenges, and Perspectives

Jul 23, 2024

Abstract:The emergence of Generative Artificial Intelligence (AI) and Large Language Models (LLMs) has marked a new era of Natural Language Processing (NLP), introducing unprecedented capabilities that are revolutionizing various domains. This paper explores the current state of these cutting-edge technologies, demonstrating their remarkable advancements and wide-ranging applications. Our paper contributes to providing a holistic perspective on the technical foundations, practical applications, and emerging challenges within the evolving landscape of Generative AI and LLMs. We believe that understanding the generative capabilities of AI systems and the specific context of LLMs is crucial for researchers, practitioners, and policymakers to collaboratively shape the responsible and ethical integration of these technologies into various domains. Furthermore, we identify and address main research gaps, providing valuable insights to guide future research endeavors within the AI research community.

Metaverse Survey & Tutorial: Exploring Key Requirements, Technologies, Standards, Applications, Challenges, and Perspectives

May 07, 2024

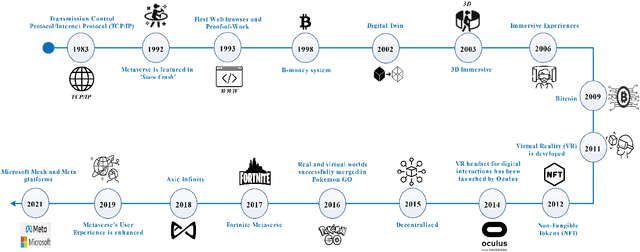

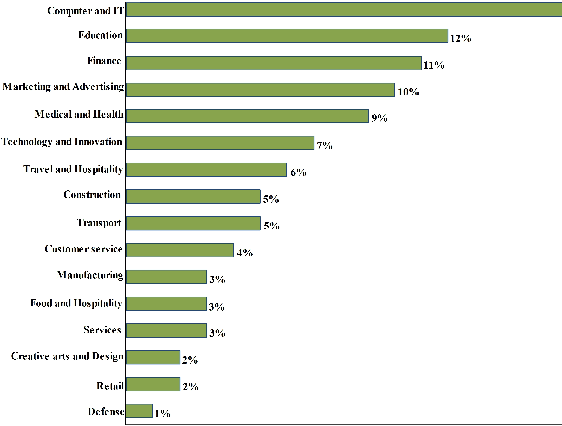

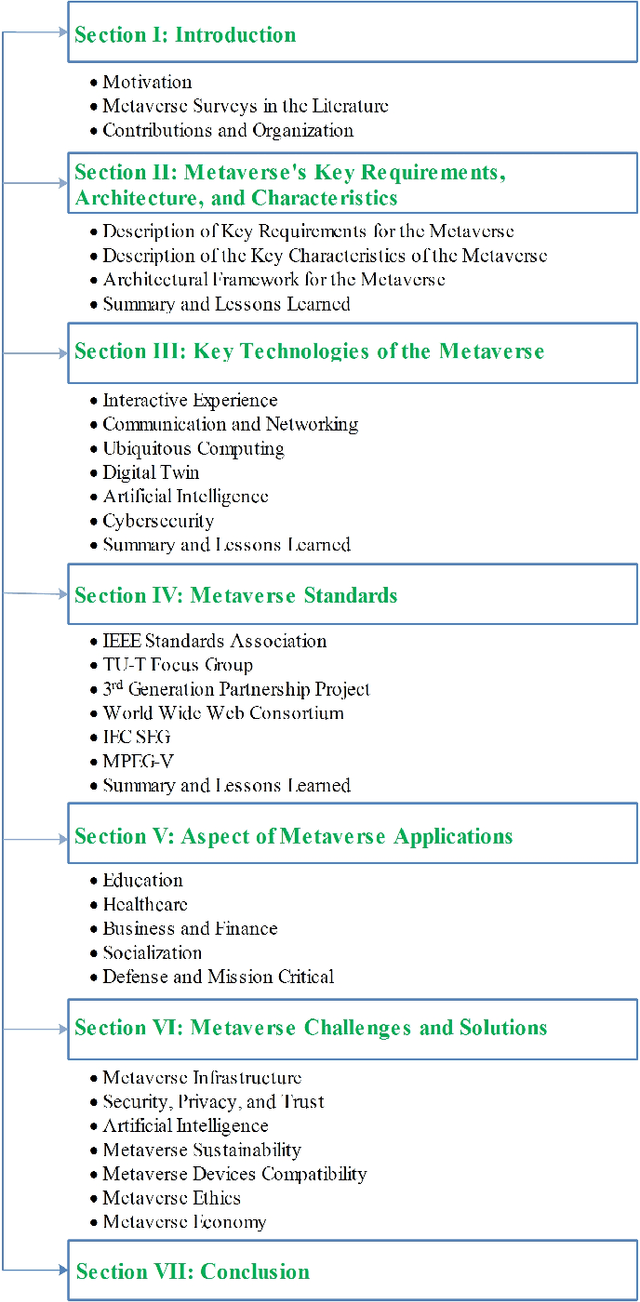

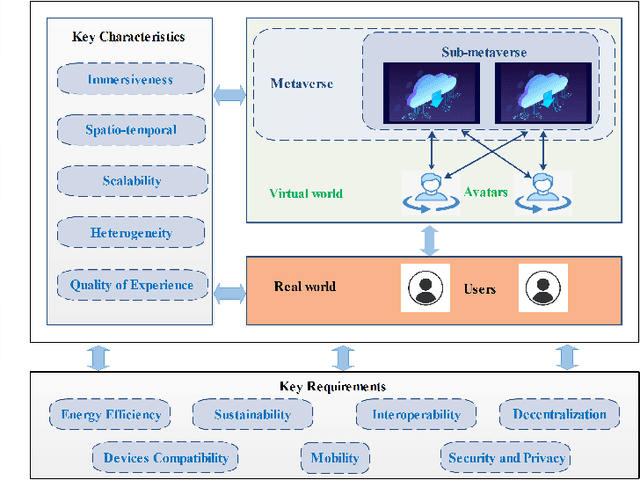

Abstract:In this paper, we present a comprehensive survey of the metaverse, envisioned as a transformative dimension of next-generation Internet technologies. This study not only outlines the structural components of our survey but also makes a substantial scientific contribution by elucidating the foundational concepts underlying the emergence of the metaverse. We analyze its architecture by defining key characteristics and requirements, thereby illuminating the nascent reality set to revolutionize digital interactions. Our analysis emphasizes the importance of collaborative efforts in developing metaverse standards, thereby fostering a unified understanding among industry stakeholders, organizations, and regulatory bodies. We extend our scrutiny to critical technologies integral to the metaverse, including interactive experiences, communication technologies, ubiquitous computing, digital twins, artificial intelligence, and cybersecurity measures. For each technological domain, we rigorously assess current contributions, principal techniques, and representative use cases, providing a nuanced perspective on their potential impacts. Furthermore, we delve into the metaverse's diverse applications across education, healthcare, business, social interactions, industrial sectors, defense, and mission-critical operations, highlighting its extensive utility. Each application is thoroughly analyzed, demonstrating its value and addressing associated challenges. The survey concludes with an overview of persistent challenges and future directions, offering insights into essential considerations and strategies necessary to harness the full potential of the metaverse. Through this detailed investigation, our goal is to articulate the scientific contributions of this survey paper, transcending a mere structural overview to highlight the transformative implications of the metaverse.

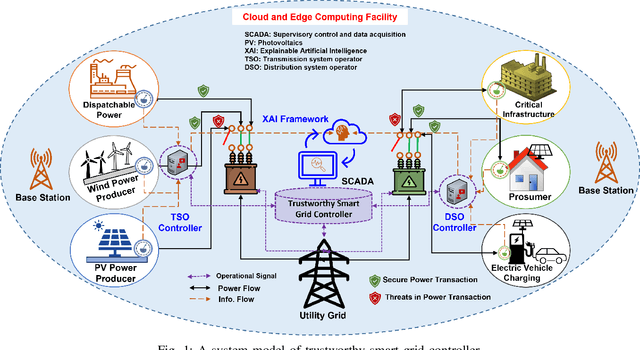

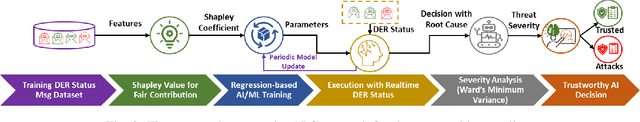

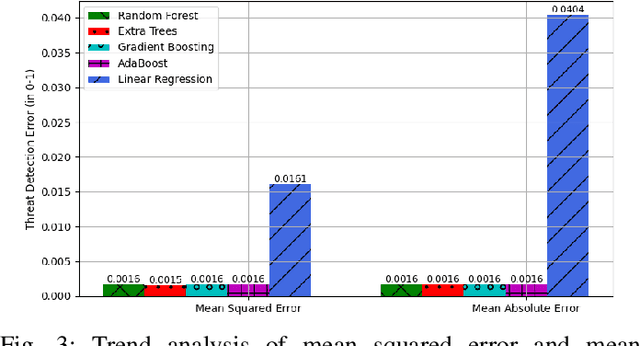

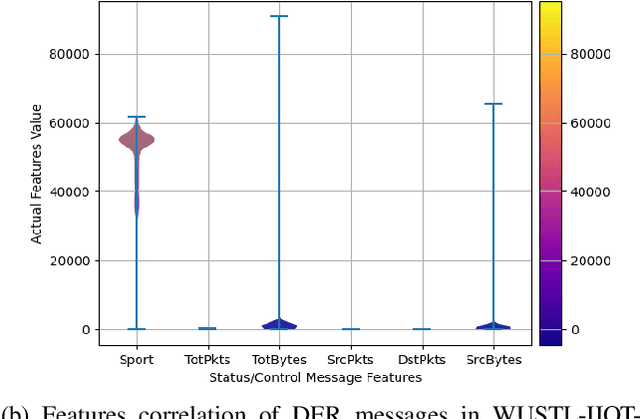

Trustworthy Artificial Intelligence Framework for Proactive Detection and Risk Explanation of Cyber Attacks in Smart Grid

Jun 12, 2023

Abstract:The rapid growth of distributed energy resources (DERs), such as renewable energy sources, generators, consumers, and prosumers in the smart grid infrastructure, poses significant cybersecurity and trust challenges to the grid controller. Consequently, it is crucial to identify adversarial tactics and measure the strength of the attacker's DER. To enable a trustworthy smart grid controller, this work investigates a trustworthy artificial intelligence (AI) mechanism for proactive identification and explanation of the cyber risk caused by the control/status messages of DERs. We therefore propose and develop a trustworthy AI framework that facilitates the deployment of any AI algorithm for detecting potential cyber threats, analyzes root causes based on Shapley value interpretation, and dynamically quantifies the risk of an attack based on Ward's minimum variance formula. The experiment with a state-of-the-art dataset establishes the proposed framework as a trustworthy AI by fulfilling the capabilities of reliability, fairness, explainability, transparency, reproducibility, and accountability.
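For readers unfamiliar with Ward's minimum variance formula, the sketch below computes the standard Ward merge cost between two clusters, i.e. the increase in within-cluster sum of squares if they were merged: |A||B| / (|A| + |B|) times the squared distance between centroids. How the paper turns this quantity into a risk score is not stated in the abstract, so the feature vectors and the reading of the result as a "risk" value are assumptions made only for illustration.

```python
def centroid(points):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def ward_distance(cluster_a, cluster_b):
    """Increase in within-cluster sum of squares if the two clusters merge."""
    ca, cb = centroid(cluster_a), centroid(cluster_b)
    sq_dist = sum((x - y) ** 2 for x, y in zip(ca, cb))
    na, nb = len(cluster_a), len(cluster_b)
    return na * nb / (na + nb) * sq_dist


# Hypothetical 2-D feature vectors for DER control/status messages.
benign = [[0.0, 0.0], [2.0, 0.0]]
suspect = [[4.0, 4.0]]

score = ward_distance(benign, suspect)
print(round(score, 3))  # 16.667
```

A larger value means the suspect messages sit further from the benign cluster relative to the cluster sizes, which is one plausible way such a formula could feed a dynamic risk measure.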

Intrusion Detection Systems Using Support Vector Machines on the KDDCUP'99 and NSL-KDD Datasets: A Comprehensive Survey

Sep 12, 2022

Abstract:With the growing rates of cyber-attacks and cyber espionage, the need for better and more powerful intrusion detection systems (IDS) is even more warranted nowadays. The basic task of an IDS is to act as the first line of defense in detecting attacks on the internet. As intrusion tactics become more sophisticated and difficult to detect, researchers have started to apply novel Machine Learning (ML) techniques to effectively detect intruders and hence preserve internet users' information and overall trust in the security of the entire internet network. Over the last decade, there has been an explosion of research on intrusion detection techniques based on ML and Deep Learning (DL) architectures on various cybersecurity datasets such as DARPA, KDDCUP'99, NSL-KDD, CAIDA, CTU-13, and UNSW-NB15. In this research, we review contemporary literature and provide a comprehensive survey of the different types of intrusion detection techniques that apply Support Vector Machine (SVM) algorithms as a classifier. We focus only on studies that have been evaluated on the two most widely used datasets in cybersecurity, namely the KDDCUP'99 and NSL-KDD datasets. We provide a summary of each method, identifying the role of the SVM classifier and all other algorithms involved in the studies. Furthermore, we present a critical review of each method, in tabular form, highlighting the performance measures, strengths, and limitations of each of the methods surveyed.
