Md. Shirajum Munir

A Zero Trust Framework for Realization and Defense Against Generative AI Attacks in Power Grid

Mar 11, 2024

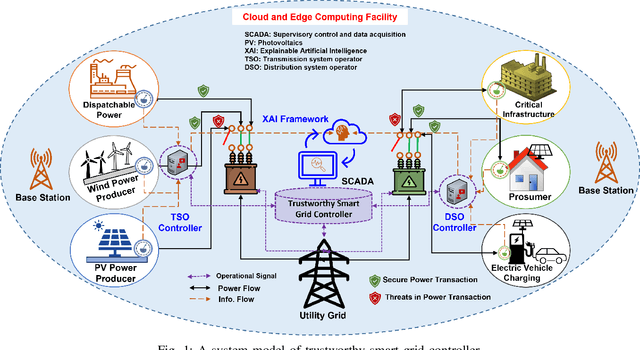

Abstract: Understanding the potential of generative AI (GenAI)-based attacks on the power grid is a fundamental challenge that must be addressed in order to protect the power grid by realizing and validating risk in new attack vectors. In this paper, a novel zero trust framework for a power grid supply chain (PGSC) is proposed. This framework facilitates early detection of potential GenAI-driven attack vectors (e.g., replay and protocol-type attacks), assessment of tail risk-based stability measures, and mitigation of such threats. First, a new zero trust system model of PGSC is designed and formulated as a zero-trust problem that seeks to guarantee a stable PGSC by realizing and defending against GenAI-driven cyber attacks. Second, a domain-specific generative adversarial network (GAN)-based attack generation mechanism is developed to create a new vulnerability cyberspace for further understanding of that threat. Third, tail-based risk realization metrics are developed and implemented for quantifying the extreme risk of a potential attack while leveraging a trust measurement approach for continuous validation. Fourth, an ensemble learning-based bootstrap aggregation scheme is devised to detect attacks that generate synthetic identities with convincing user and distributed energy resources device profiles. Experimental results show the efficacy of the proposed zero trust framework, which achieves an accuracy of 95.7% on attack vector generation, a risk measure of 9.61% for a 95% stable PGSC, and a 99% confidence in defense against GenAI-driven attacks.

Trustworthy Artificial Intelligence Framework for Proactive Detection and Risk Explanation of Cyber Attacks in Smart Grid

Jun 12, 2023

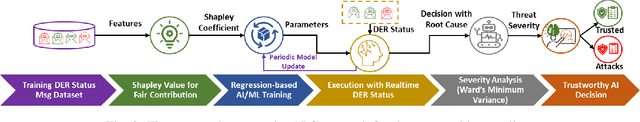

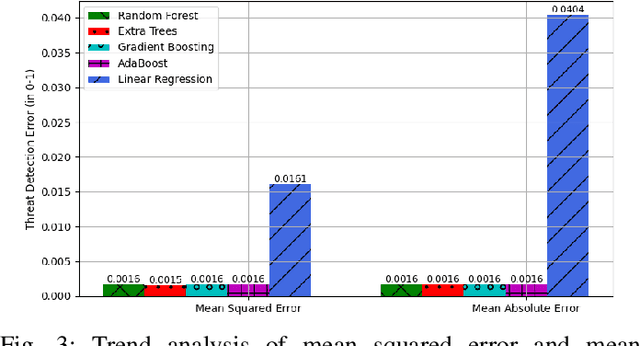

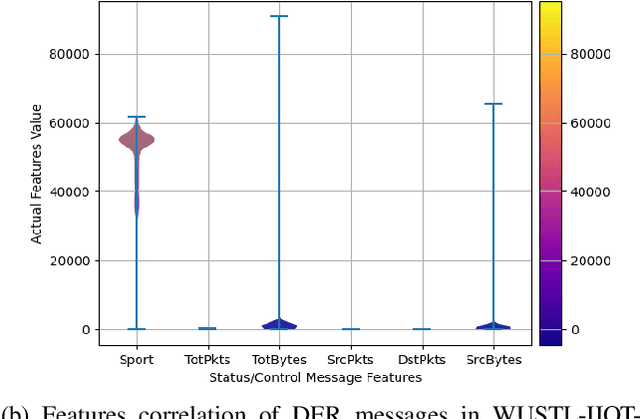

Abstract: The rapid growth of distributed energy resources (DERs), such as renewable energy sources, generators, consumers, and prosumers in the smart grid infrastructure, poses significant cybersecurity and trust challenges to the grid controller. Consequently, it is crucial to identify adversarial tactics and measure the strength of the attacker's DER. To enable a trustworthy smart grid controller, this work investigates a trustworthy artificial intelligence (AI) mechanism for proactive identification and explanation of the cyber risk caused by the control/status messages of DERs. To this end, a trustworthy AI framework is proposed and developed to facilitate the deployment of any AI algorithm for detecting potential cyber threats and analyzing root causes based on Shapley value interpretation, while dynamically quantifying the risk of an attack based on Ward's minimum variance formula. The experiment with a state-of-the-art dataset establishes the proposed framework as a trustworthy AI by fulfilling the capabilities of reliability, fairness, explainability, transparency, reproducibility, and accountability.
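As a rough illustration of the Ward's minimum variance idea mentioned in this abstract, the sketch below computes the standard Ward merge cost, i.e., the increase in within-cluster sum of squares when two clusters are merged. The abstract does not say how the paper maps this quantity to a risk score, so the function names, the two clusters, and their sample points are all hypothetical.

```python
def centroid(points):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(points)
    return [sum(p[d] for p in points) / n for d in range(len(points[0]))]


def ward_merge_cost(cluster_a, cluster_b):
    """Increase in within-cluster sum of squares if the two clusters merge.

    Standard Ward criterion:
        cost = |A| * |B| / (|A| + |B|) * ||mean(A) - mean(B)||^2
    """
    ca, cb = centroid(cluster_a), centroid(cluster_b)
    sq_dist = sum((x - y) ** 2 for x, y in zip(ca, cb))
    na, nb = len(cluster_a), len(cluster_b)
    return na * nb / (na + nb) * sq_dist


# Hypothetical 2-D feature vectors derived from DER control/status messages.
benign = [[0.0, 0.0], [2.0, 0.0]]
suspect = [[4.0, 3.0], [6.0, 3.0]]

cost = ward_merge_cost(benign, suspect)
print(cost)  # 25.0
```

A larger merge cost means the two groups of messages are further apart relative to their sizes; how such a distance would be turned into a dynamic risk value is left open here.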

MP-FedCL: Multi-Prototype Federated Contrastive Learning for Edge Intelligence

Apr 01, 2023

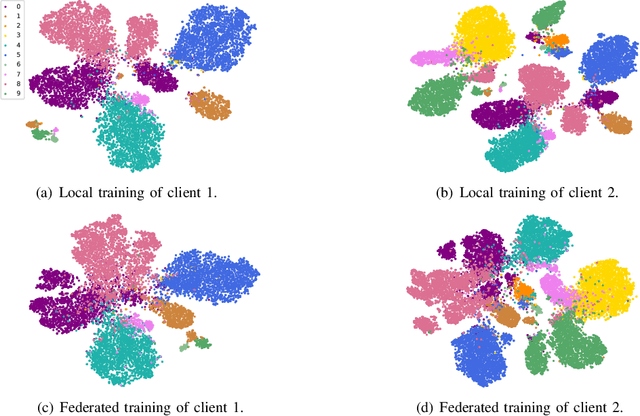

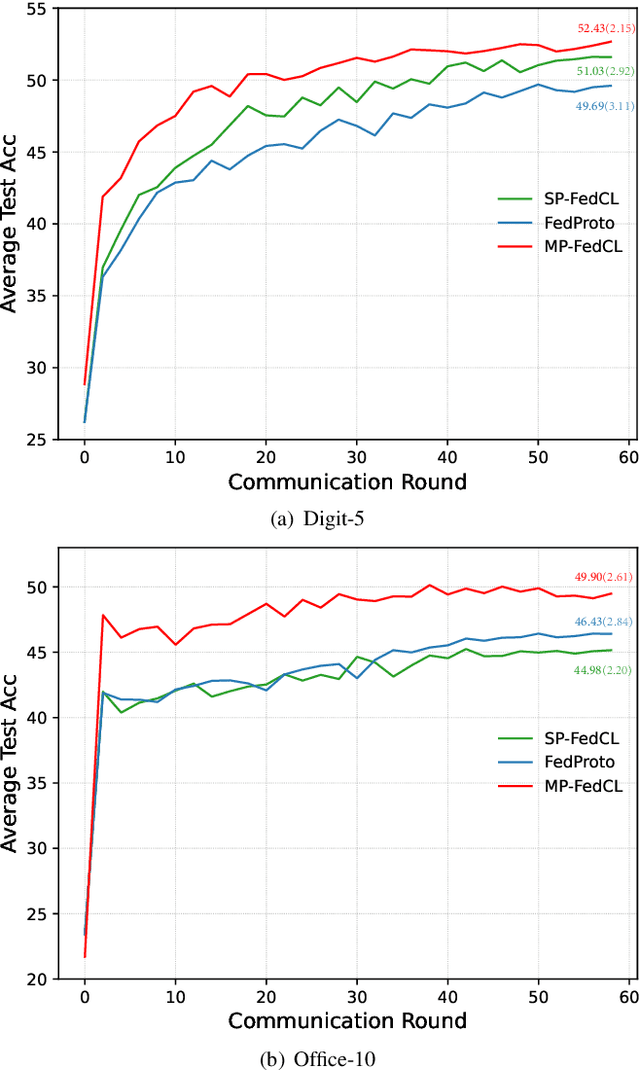

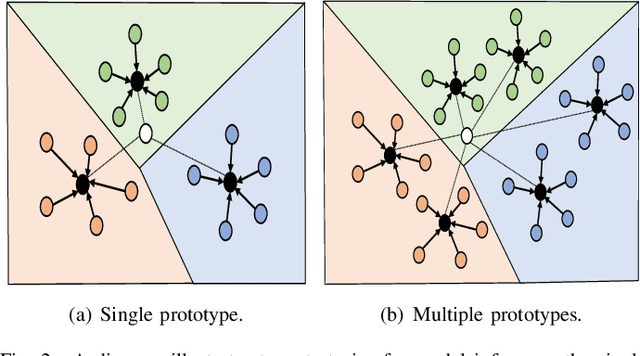

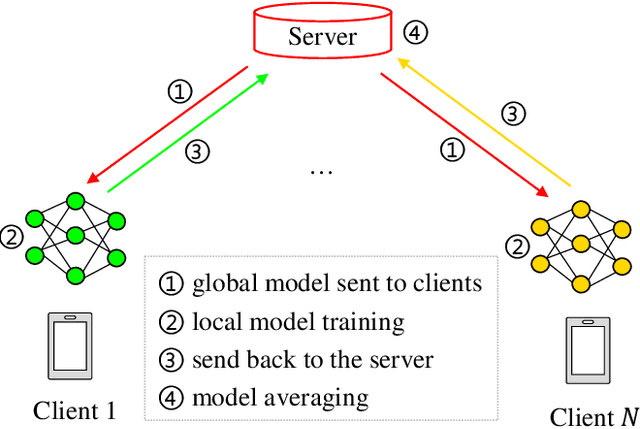

Abstract: Federated learning-assisted edge intelligence enables privacy protection in modern intelligent services. However, non-independent and identically distributed (non-IID) distributions among edge clients can impair local model performance. The existing single prototype-based strategy represents a sample by using the mean of the feature space. However, feature spaces are usually not clustered, and a single prototype may not represent a sample well. Motivated by this, this paper proposes a multi-prototype federated contrastive learning approach (MP-FedCL), which demonstrates the effectiveness of using a multi-prototype strategy over a single-prototype strategy under non-IID settings, including both label and feature skewness. Specifically, a multi-prototype computation strategy based on *k-means* is first proposed to capture different embedding representations for each class space, using multiple prototypes ($k$ centroids) to represent a class in the embedding space. In each global round, the computed multiple prototypes and their respective model parameters are sent to the edge server for aggregation into a global prototype pool, which is then sent back to all clients to guide their local training. Finally, local training for each client minimizes its own supervised learning loss and learns from shared prototypes in the global prototype pool through supervised contrastive learning, which encourages the client to learn knowledge related to its own class from others and reduces the absorption of unrelated knowledge in each global iteration. Experimental results on MNIST, Digit-5, Office-10, and DomainNet show that our method outperforms multiple baselines, with an average test accuracy improvement of about 4.6% and 10.4% under feature and label non-IID distributions, respectively.
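To make the multi-prototype idea concrete, here is a minimal sketch that runs a plain k-means per class and keeps the $k$ centroids as that class's prototypes. It is not the MP-FedCL implementation: the 2-D "embeddings", the label, the deterministic initialization from the first $k$ points, and all function names are assumptions chosen to keep the example small.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Component-wise mean of a non-empty list of vectors, as a tuple."""
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))


def kmeans(points, k, iters=20):
    """Plain Lloyd iterations; initial centroids are the first k points (a simplification)."""
    centroids = list(points[:k])
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            groups[nearest].append(p)
        # Keep the previous centroid if a group ends up empty.
        centroids = [mean(g) if g else centroids[i] for i, g in enumerate(groups)]
    return centroids


def class_prototypes(embeddings, labels, k):
    """Return {label: [k prototype vectors]} computed independently per class."""
    by_class = {}
    for e, y in zip(embeddings, labels):
        by_class.setdefault(y, []).append(e)
    return {y: kmeans(pts, k) for y, pts in by_class.items()}


# Hypothetical embeddings of one class whose samples form two separate modes.
embeddings = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
labels = ["7", "7", "7", "7"]

protos = class_prototypes(embeddings, labels, k=2)
print(sorted(protos["7"]))  # [(0.0, 0.5), (10.0, 10.5)]
print(mean(embeddings))     # (5.0, 5.5)
```

The single mean lies in neither mode, while the two centroids each sit inside one of them, which is the motivation the abstract gives for using several prototypes per class.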

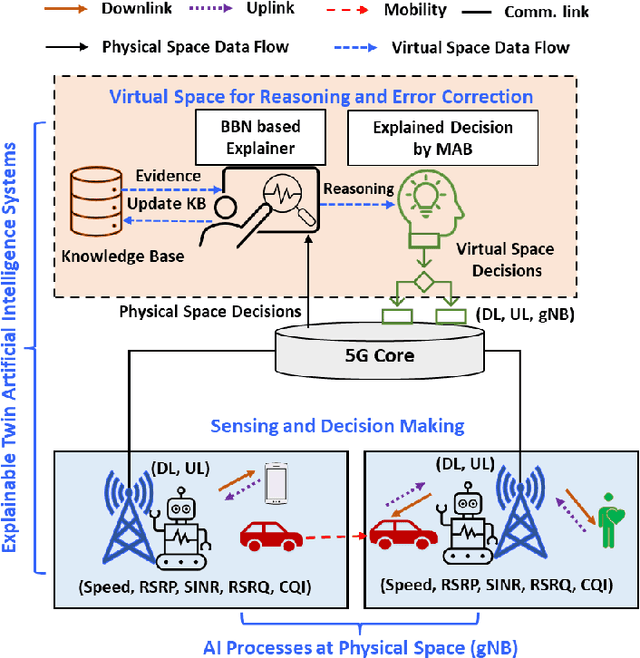

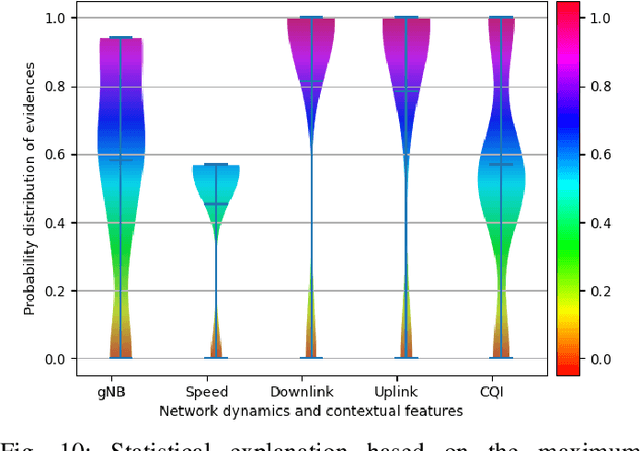

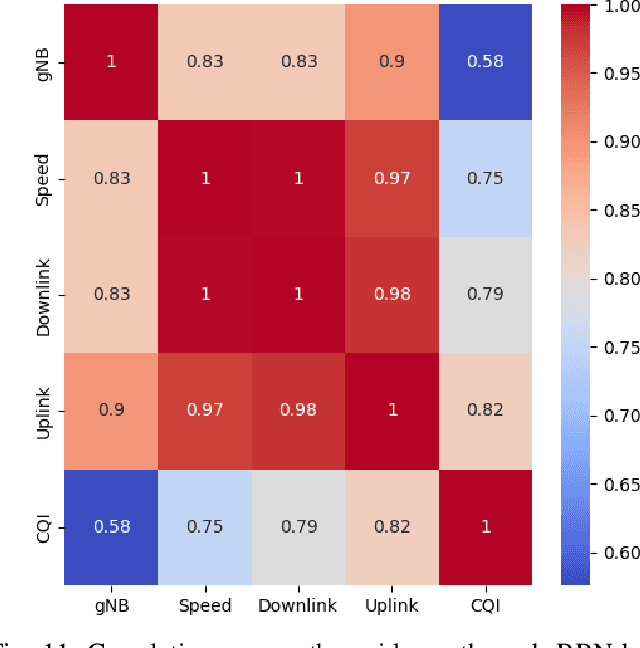

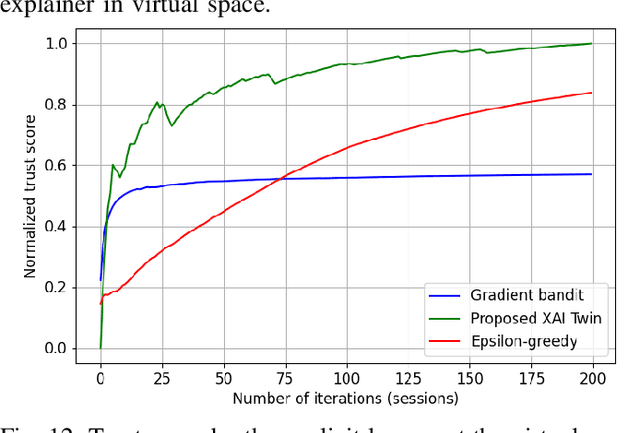

Neuro-symbolic Explainable Artificial Intelligence Twin for Zero-touch IoE in Wireless Network

Oct 13, 2022

Abstract: Explainable artificial intelligence (XAI) twin systems will be a fundamental enabler of zero-touch network and service management (ZSM) for sixth-generation (6G) wireless networks. A reliable XAI twin system for ZSM requires two components: an extreme analytical ability for discretizing the physical behavior of the Internet of Everything (IoE) and rigorous methods for characterizing the reasoning of such behavior. In this paper, a novel neuro-symbolic explainable artificial intelligence twin framework is proposed to enable trustworthy ZSM for a wireless IoE. The physical space of the XAI twin executes a neural-network-driven multivariate regression to capture the time-dependent wireless IoE environment while determining unconscious decisions of IoE service aggregation. Subsequently, the virtual space of the XAI twin constructs a directed acyclic graph (DAG)-based Bayesian network that can infer a symbolic reasoning score over unconscious decisions through a first-order probabilistic language model. Furthermore, a Bayesian multi-armed bandit-based learning problem is proposed for reducing the gap between the expected explained score and the currently obtained score of the proposed neuro-symbolic XAI twin. To address the challenges of extensible, modular, and stateless management functions in ZSM, the proposed neuro-symbolic XAI twin framework consists of two learning systems: 1) an implicit learner that acts as an unconscious learner in physical space, and 2) an explicit learner that can exploit symbolic reasoning based on implicit learner decisions and prior evidence. Experimental results show that the proposed neuro-symbolic XAI twin can achieve around 96.26% accuracy while guaranteeing an 18% to 44% higher trust score in terms of reasoning and closed-loop automation.

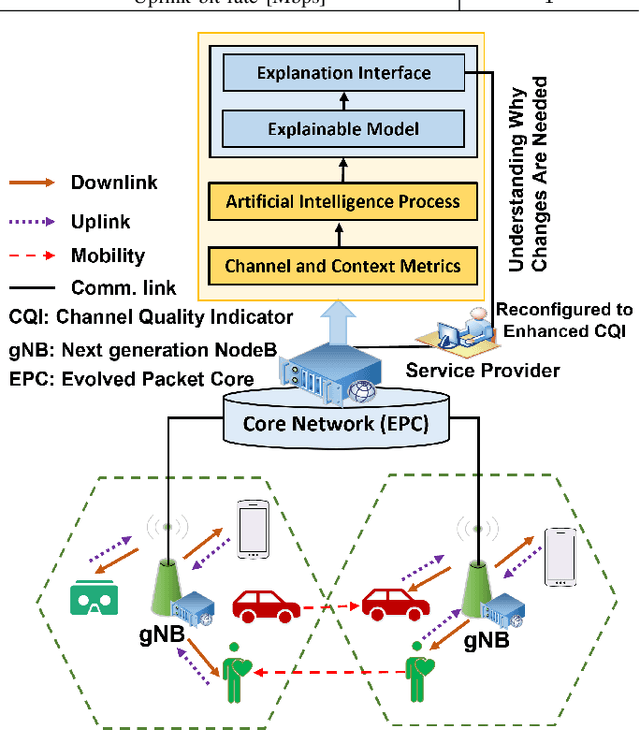

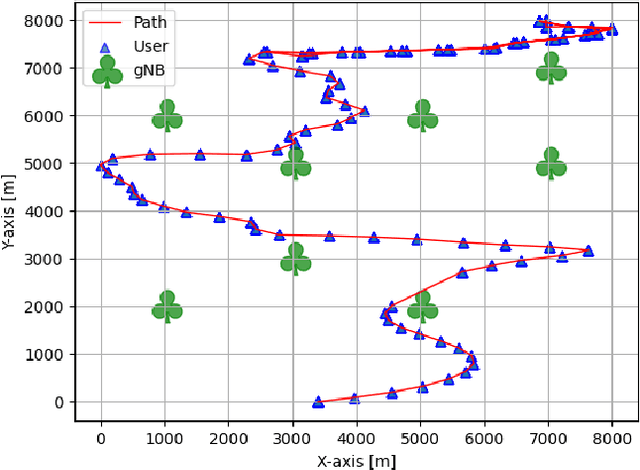

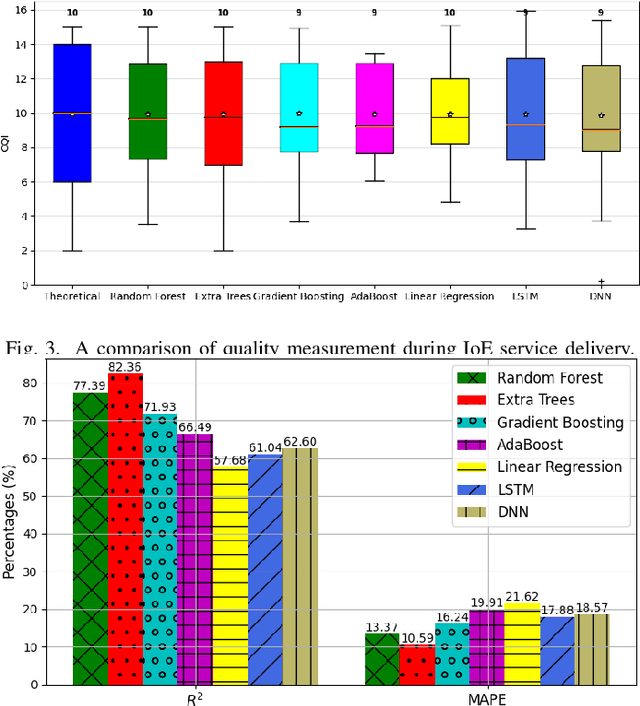

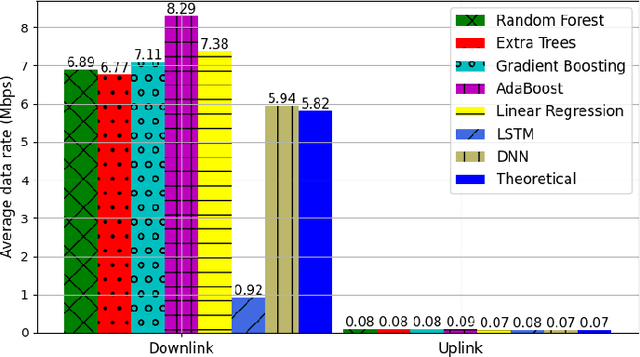

An Explainable Artificial Intelligence Framework for Quality-Aware IoE Service Delivery

Jan 26, 2022

Abstract: One of the core visions of sixth-generation (6G) wireless networks is to incorporate artificial intelligence (AI) for autonomous control of the Internet of Everything (IoE). In particular, the quality of IoE service delivery must be maintained by analyzing contextual metrics of IoE such as people, data, process, and things. However, challenges arise when the AI model lacks interpretability and intuition for the network service provider. Therefore, this paper provides an explainable artificial intelligence (XAI) framework for quality-aware IoE service delivery that enables both intelligence and interpretation. First, a problem of quality-aware IoE service delivery is formulated by taking into account network dynamics and contextual metrics of IoE, where the objective is to maximize the channel quality index (CQI) of each IoE service user. Second, a regression problem is devised to solve the formulated problem, where explainable coefficients of the contextual matrices are estimated by Shapley value interpretation. Third, the XAI-enabled quality-aware IoE service delivery algorithm is implemented by employing ensemble-based regression models to ensure the interpretation of contextual relationships among the matrices when reconfiguring network parameters. Finally, the experimental results show that the uplink improvement rate reaches 42.43% and 16.32% for AdaBoost and Extra Trees, respectively, while the downlink improvement rate reaches up to 28.57% and 14.29%. However, the AdaBoost-based approach cannot maintain the CQI of IoE service users. Therefore, the proposed Extra Trees-based regression model shows a significant performance gain over other baselines in mitigating the trade-off between accuracy and interpretability.
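For readers unfamiliar with Shapley value interpretation, the following sketch computes exact Shapley values by averaging each feature's marginal contribution over all orderings. It is only a toy: the three feature names are borrowed from the contextual metrics listed above, but the payoff table and the function name are invented for illustration, and the abstract does not state which Shapley estimator the paper uses.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings.

    `value` maps a frozenset of players to a number; cost grows factorially,
    so this is only practical for a handful of players.
    """
    totals = {p: 0.0 for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orders) for p, t in totals.items()}


# Hypothetical payoff table: predicted quality gain when only the listed
# contextual features are available to the model.
gain = {
    frozenset(): 0.0,
    frozenset({"people"}): 10.0,
    frozenset({"data"}): 20.0,
    frozenset({"things"}): 0.0,
    frozenset({"people", "data"}): 40.0,
    frozenset({"people", "things"}): 10.0,
    frozenset({"data", "things"}): 20.0,
    frozenset({"people", "data", "things"}): 40.0,
}

phi = shapley_values(["people", "data", "things"], lambda s: gain[frozenset(s)])
print(phi)  # {'people': 15.0, 'data': 25.0, 'things': 0.0}
```

The attributions sum to the payoff of the full feature set (40.0), and the feature that never changes the payoff receives zero, which are two of the properties that make Shapley values attractive for explanation.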

Risk Adversarial Learning System for Connected and Autonomous Vehicle Charging

Aug 02, 2021

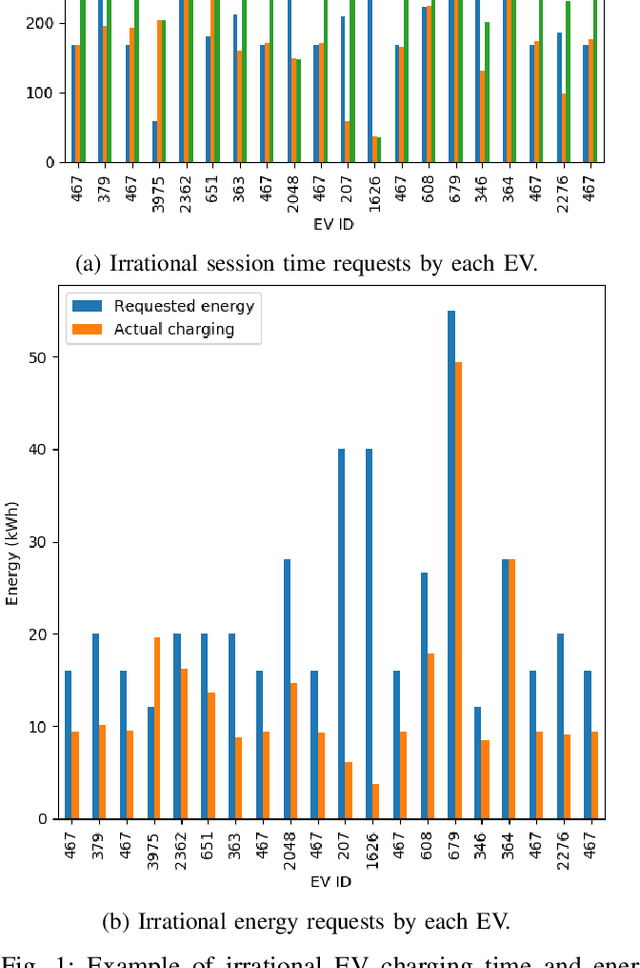

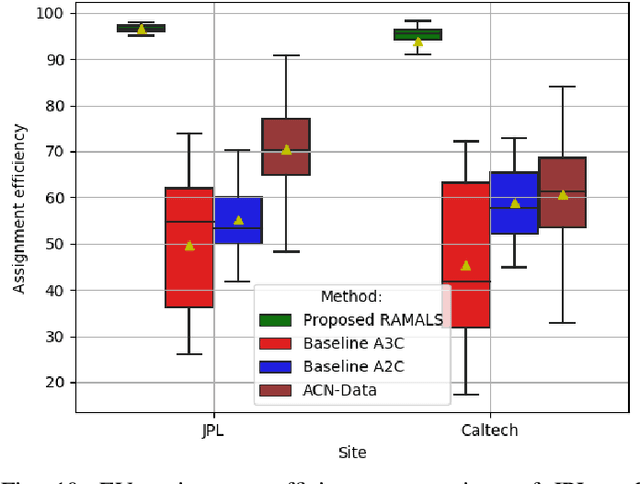

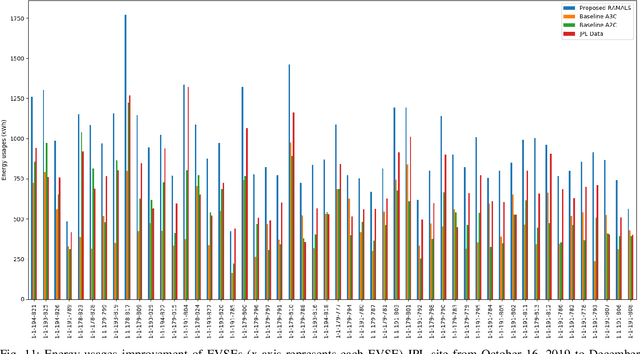

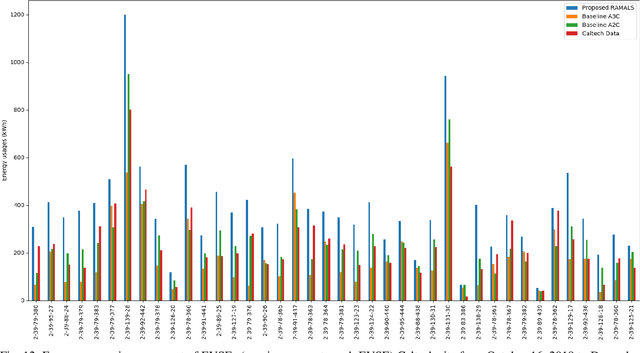

Abstract: In this paper, the design of a rational decision support system (RDSS) for a connected and autonomous vehicle charging infrastructure (CAV-CI) is studied. In the considered CAV-CI, the distribution system operator (DSO) deploys electric vehicle supply equipment (EVSE) to provide an EV charging facility for human-driven connected vehicles (CVs) and autonomous vehicles (AVs). The charging request by a human-driven EV becomes irrational when it demands more energy and a longer charging period than its actual need. Therefore, the scheduling policy of each EVSE must adaptively accommodate irrational charging requests to satisfy the charging demand of both CVs and AVs. To tackle this, we formulate an RDSS problem for the DSO, where the objective is to maximize the charging capacity utilization while satisfying the laxity risk of the DSO. Thus, we devise a rational reward maximization problem to adapt to the irrational behavior of CVs in a data-informed manner. We propose a novel risk adversarial multi-agent learning system (RAMALS) for CAV-CI to solve the formulated RDSS problem. In RAMALS, the DSO acts as a centralized risk adversarial agent (RAA) that informs each EVSE of the laxity risk. Subsequently, each EVSE plays the role of a self-learner agent that adaptively schedules its own EV sessions by taking advice from the RAA. Experimental results show that the proposed RAMALS affords around 46.6% improvement in charging rate, about 28.6% improvement in the EVSE's active charging time, and at least 33.3% more energy utilization, as compared to a currently deployed ACN EVSE system and other baselines.

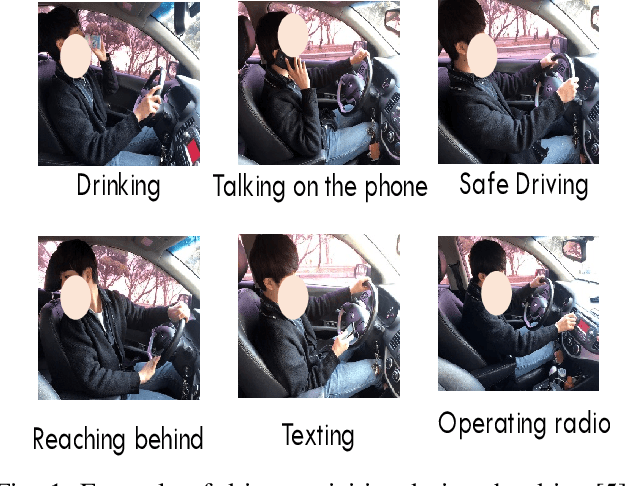

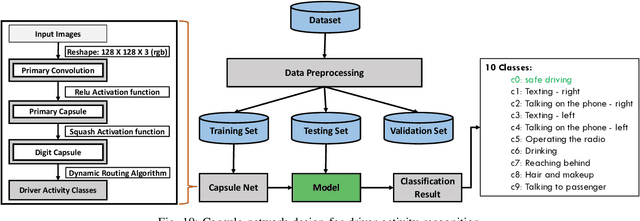

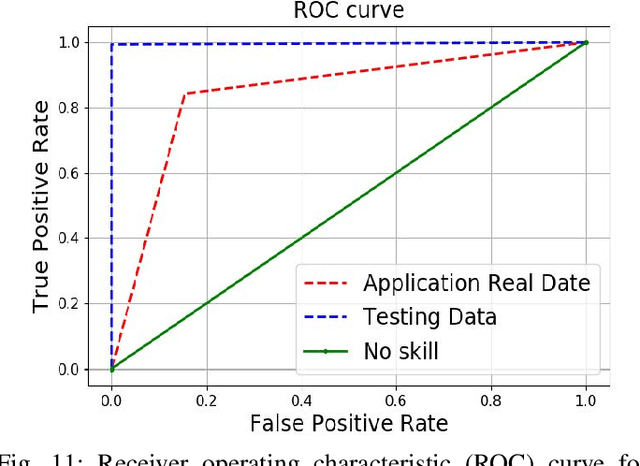

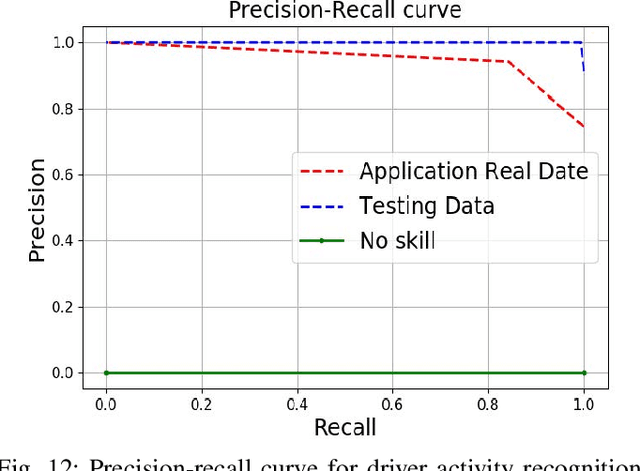

Drive Safe: Cognitive-Behavioral Mining for Intelligent Transportation Cyber-Physical System

Aug 24, 2020

Abstract: This paper presents a cognitive-behavioral driver mood repair platform in intelligent transportation cyber-physical systems (IT-CPS) for road safety. In particular, we propose a driving safety platform for distracted drivers, namely *drive safe*, in IT-CPS. The proposed platform recognizes the distracting activities of the drivers as well as their emotions for mood repair. Further, we develop a prototype of the proposed drive safe platform to establish a proof-of-concept (PoC) for road safety in IT-CPS. In the developed driving safety platform, we employ five AI and statistical models to perform cognitive-behavioral mining of a vehicle driver and ensure safe driving during the drive. Specifically, a capsule network (CN), maximum likelihood (ML), a convolutional neural network (CNN), the Apriori algorithm, and a Bayesian network (BN) are deployed for driver activity recognition, environmental feature extraction, mood recognition, sequential pattern mining, and content recommendation for affective mood repair of the driver, respectively. Besides, we develop a communication module to interact with the systems in IT-CPS asynchronously. Thus, the developed drive safe PoC can guide vehicle drivers when they are distracted from driving due to cognitive-behavioral factors. Finally, we have performed a qualitative evaluation to measure the usability and effectiveness of the developed drive safe platform. We observe that the p-value is 0.0041 (i.e., < 0.05) in the ANOVA test. Moreover, the confidence interval analysis also shows significant gains in prevalence value, which is around 0.93 for a 95% confidence level. The aforementioned statistical results indicate high reliability in terms of the driver's safety and mental state.

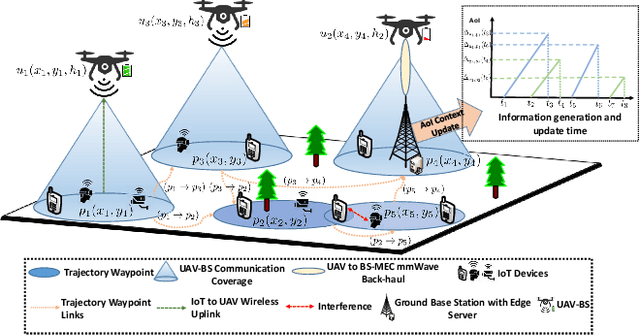

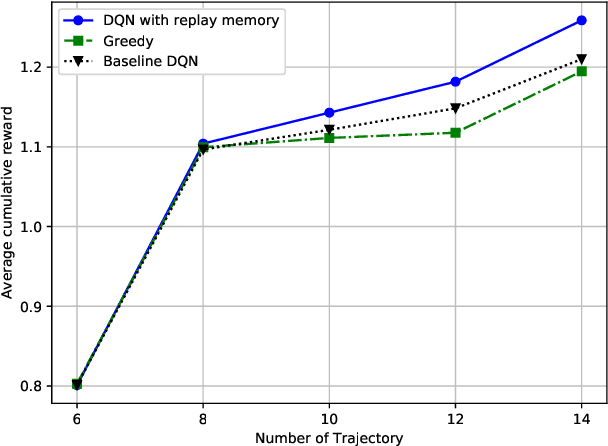

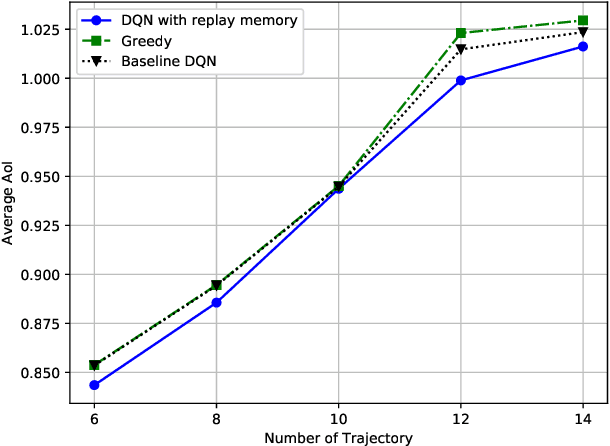

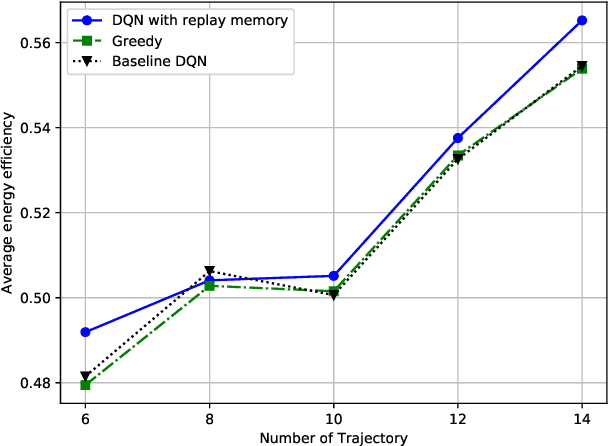

Data Freshness and Energy-Efficient UAV Navigation Optimization: A Deep Reinforcement Learning Approach

Feb 21, 2020

Abstract: In this paper, we design a navigation policy for multiple unmanned aerial vehicles (UAVs) where mobile base stations (BSs) are deployed to improve the data freshness and connectivity to the Internet of Things (IoT) devices. First, we formulate an energy-efficient trajectory optimization problem in which the objective is to maximize the energy efficiency by optimizing the UAV-BS trajectory policy. We also incorporate different contextual information such as energy and age of information (AoI) constraints to ensure the data freshness at the ground BS. Second, we propose an agile deep reinforcement learning with experience replay model to solve the formulated problem concerning the contextual constraints for the UAV-BS navigation. Moreover, the proposed approach is well-suited for solving the problem, since the state space of the problem is extremely large and finding the best trajectory policy with useful contextual features is too complex for the UAV-BSs. By applying the proposed trained model, an effective real-time trajectory policy for the UAV-BSs captures the observable network states over time. Finally, the simulation results show that the proposed approach is 3.6% and 3.13% more energy efficient than the greedy and baseline deep Q-network (DQN) approaches, respectively.
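The age of information (AoI) constraint can be illustrated with the usual definition: at any instant, AoI is the time elapsed since the freshest delivered update was generated. The sketch below applies that definition to a hypothetical list of updates; the function name, timestamps, and the freshness threshold are assumptions, and the paper's exact constraint formulation is not given in the abstract.

```python
def age_of_information(now, updates):
    """AoI at time `now`, given (generated_at, received_at) pairs.

    Only updates already received by `now` count. Returns None when
    nothing has been delivered yet.
    """
    delivered = [generated for generated, received in updates if received <= now]
    if not delivered:
        return None
    return now - max(delivered)


# Hypothetical IoT updates relayed by a UAV-BS, as (generated_at, received_at) in seconds.
updates = [(0, 2), (5, 6), (9, 12)]

aoi = age_of_information(10, updates)
print(aoi)  # 5

MAX_AGE = 6  # hypothetical freshness threshold in seconds
print(aoi <= MAX_AGE)  # True
```

At time 10 the update generated at time 9 is still in flight, so the freshest delivered sample is the one generated at time 5.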

Risk-Aware Energy Scheduling for Edge Computing with Microgrid: A Multi-Agent Deep Reinforcement Learning Approach

Feb 21, 2020

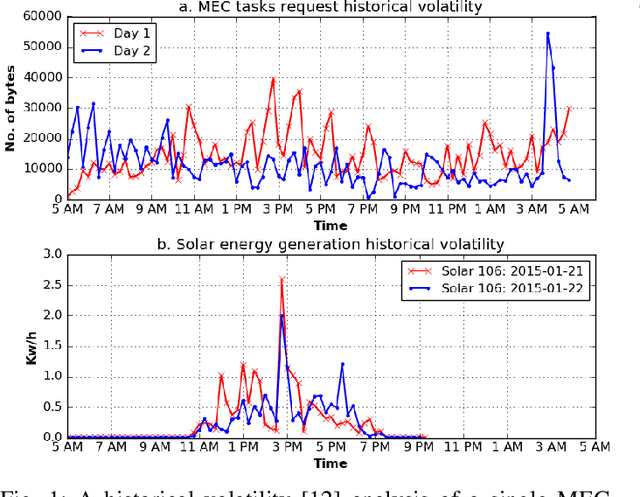

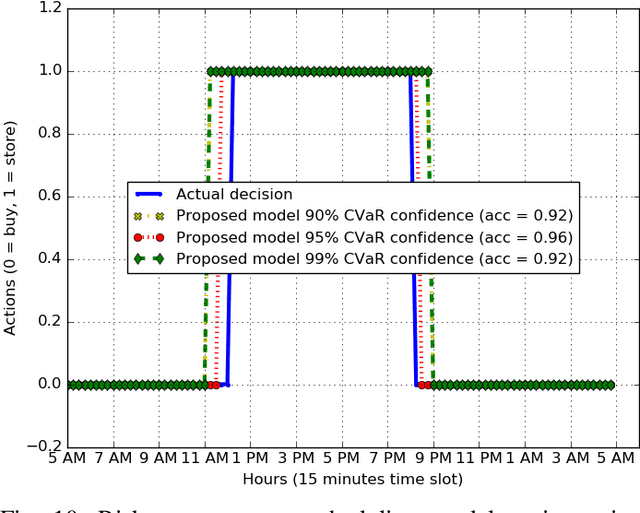

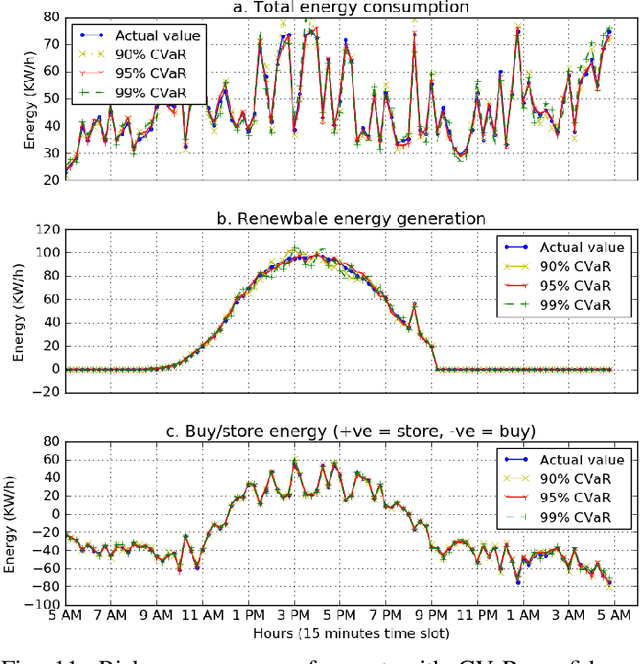

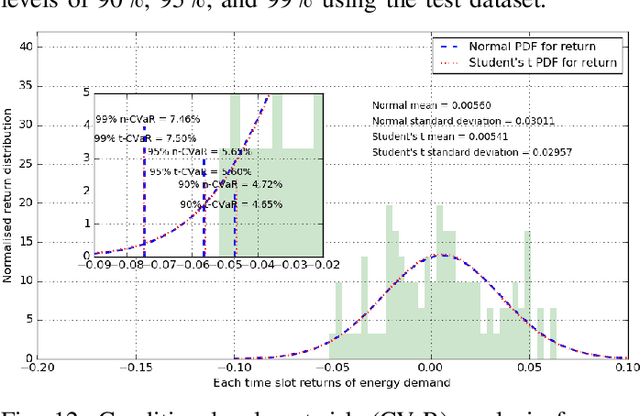

Abstract: In recent years, multi-access edge computing (MEC) has become a key enabler for handling the massive expansion of Internet of Things (IoT) applications and services. However, the energy consumption of a MEC network depends on volatile tasks that induce risk for energy demand estimations. As an energy supplier, a microgrid can facilitate seamless energy supply. However, the risk associated with energy supply is also increased due to unpredictable energy generation from renewable and non-renewable sources. In particular, the risk of energy shortfall involves uncertainties in both energy consumption and generation. In this paper, we study a risk-aware energy scheduling problem for a microgrid-powered MEC network. First, we formulate an optimization problem considering the conditional value-at-risk (CVaR) measurement for both energy consumption and generation, where the objective is to minimize the loss of energy shortfall of the MEC networks, and we show that this problem is NP-hard. Second, we analyze our formulated problem using a multi-agent stochastic game that ensures the joint policy Nash equilibrium, and show the convergence of the proposed model. Third, we derive the solution by applying a multi-agent deep reinforcement learning (MADRL)-based asynchronous advantage actor-critic (A3C) algorithm with shared neural networks. This method mitigates the curse of dimensionality of the state space and chooses the best policy among the agents for the proposed problem. Finally, the experimental results show that, by considering CVaR for high-accuracy energy scheduling, the proposed model achieves a significant performance gain over both the single-agent and random-agent models.
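A small numerical sketch may help with the CVaR measure used here. It uses one common empirical estimator: CVaR at level alpha is the average of the worst (1 - alpha) fraction of sampled losses, and VaR is reported as the smallest loss inside that tail. Other estimators differ slightly at the boundary. The demand and generation scenarios, the shortfall definition as `max(0, demand - generation)`, and the function name are assumptions for illustration, not values from the paper.

```python
def empirical_var_cvar(losses, alpha=0.95):
    """Return (VaR, CVaR) at level `alpha` from a list of sampled losses.

    CVaR is the mean of the worst (1 - alpha) fraction of samples;
    VaR is taken as the smallest loss in that tail.
    """
    if not losses:
        raise ValueError("losses must be non-empty")
    ordered = sorted(losses)
    # round() absorbs floating-point fuzz in (1 - alpha) * n.
    tail_count = max(1, round((1 - alpha) * len(ordered)))
    tail = ordered[-tail_count:]
    return tail[0], sum(tail) / len(tail)


# Hypothetical scenarios (kWh): MEC energy demand and microgrid generation.
demand = [50, 52, 48, 55, 60, 51, 49, 70, 53, 65]
generation = [55, 50, 50, 50, 45, 52, 49, 40, 53, 45]

# Energy shortfall per scenario; surplus counts as zero loss.
shortfall = [max(0, d - g) for d, g in zip(demand, generation)]

var, cvar = empirical_var_cvar(shortfall, alpha=0.8)
print(sum(shortfall) / len(shortfall))  # 7.2
print(var, cvar)                        # 20 25.0
```

The average shortfall is 7.2 kWh, while the CVaR at the 80% level is 25 kWh, which shows why a risk-aware scheduler that targets the tail behaves differently from one that targets the mean.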

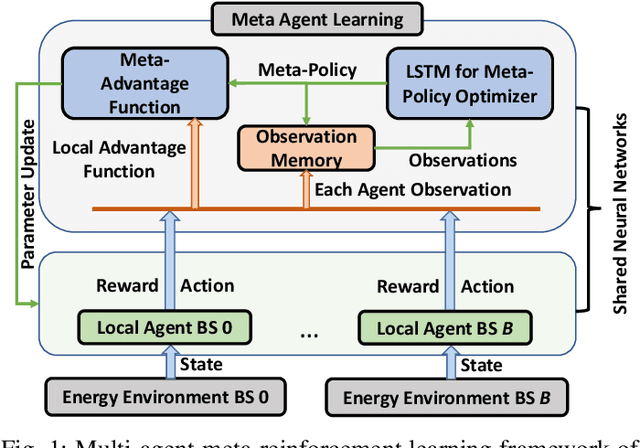

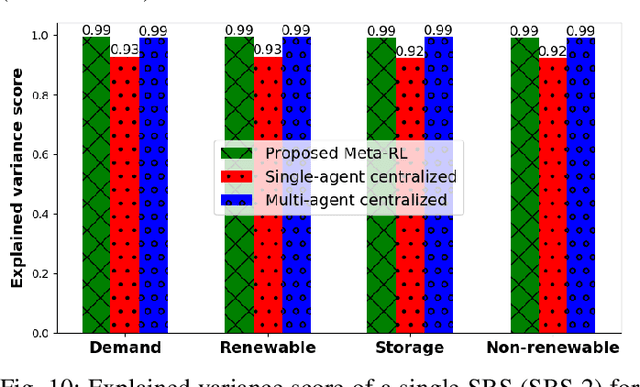

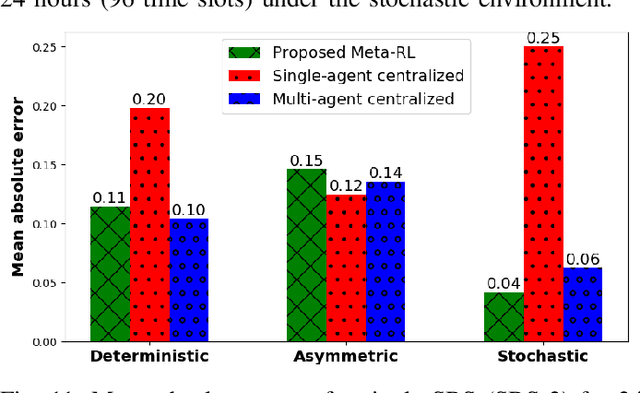

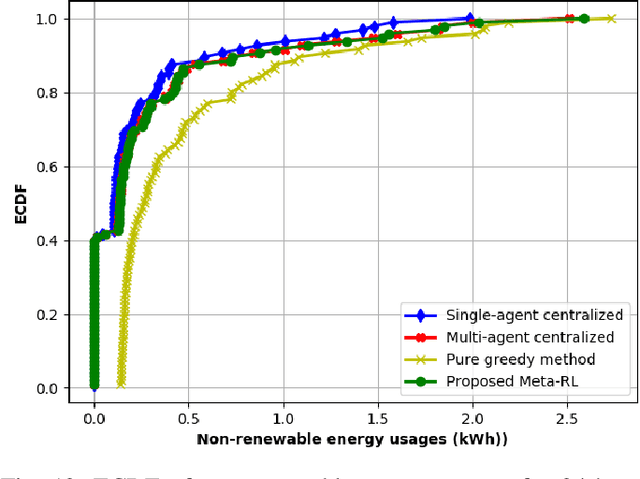

Multi-Agent Meta-Reinforcement Learning for Self-Powered and Sustainable Edge Computing Systems

Feb 20, 2020

Abstract: The stringent requirements of mobile edge computing (MEC) applications and functions call for the high-capacity and dense deployment of MEC hosts in upcoming wireless networks. However, operating such high-capacity MEC hosts can significantly increase energy consumption. Thus, a base station (BS) unit can act as a self-powered BS. In this paper, an effective energy dispatch mechanism for self-powered wireless networks with edge computing capabilities is studied. First, a two-stage linear stochastic programming problem is formulated with the goal of minimizing the total energy consumption cost of the system while fulfilling the energy demand. Second, a semi-distributed data-driven solution is proposed by developing a novel multi-agent meta-reinforcement learning (MAMRL) framework to solve the formulated problem. In particular, each BS plays the role of a local agent that explores a Markovian behavior for both energy consumption and generation while each BS transfers time-varying features to a meta-agent. Subsequently, the meta-agent optimizes (i.e., exploits) the energy dispatch decision by accepting only the observations from each local agent with its own state information. Meanwhile, each BS agent estimates its own energy dispatch policy by applying the learned parameters from the meta-agent. Finally, the proposed MAMRL framework is benchmarked by analyzing deterministic, asymmetric, and stochastic environments in terms of non-renewable energy usage, energy cost, and accuracy. Experimental results show that the proposed MAMRL model can reduce non-renewable energy usage by up to 11% and the energy cost by 22.4% (with 95.8% prediction accuracy), compared to other baseline methods.
