Clare Zhu

The NeurIPS 2022 Neural MMO Challenge: A Massively Multiagent Competition with Specialization and Trade

Nov 07, 2023

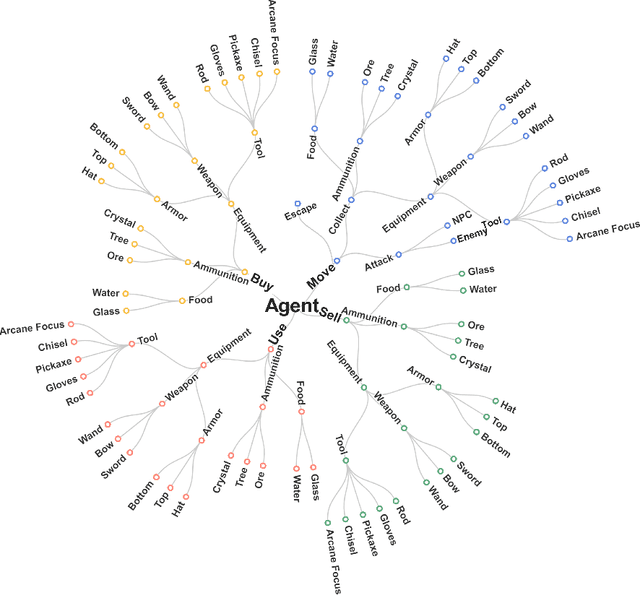

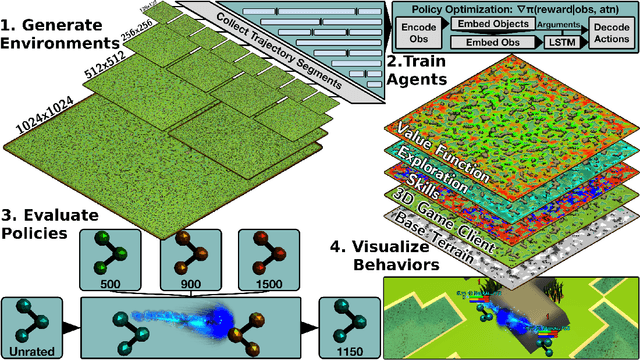

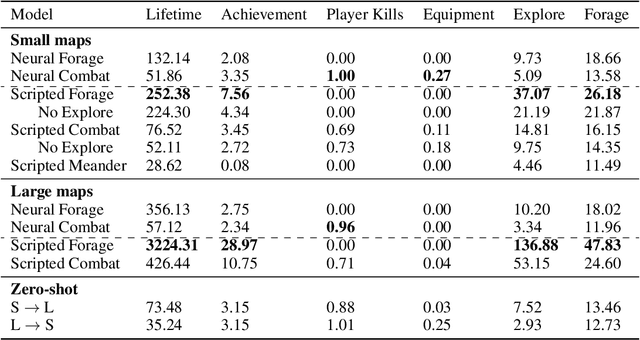

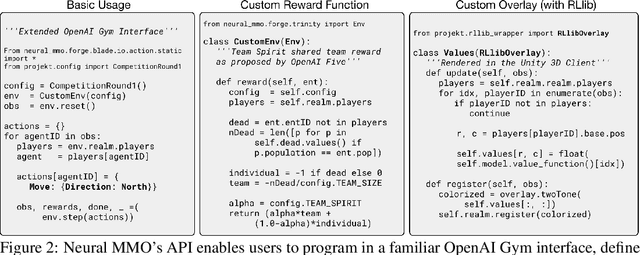

Abstract: In this paper, we present the results of the NeurIPS-2022 Neural MMO Challenge, which attracted 500 participants and received over 1,600 submissions. Like the previous IJCAI-2022 Neural MMO Challenge, it involved agents from 16 populations surviving in procedurally generated worlds by collecting resources and defeating opponents. This year's competition runs on the latest v1.6 Neural MMO, which introduces new equipment, combat, trading, and a better scoring system. These elements combine to pose additional robustness and generalization challenges not present in previous competitions. This paper summarizes the design and results of the challenge, explores the potential of this environment as a benchmark for learning methods, and presents some practical reinforcement learning training approaches for complex tasks with sparse rewards. Additionally, we have open-sourced our baselines, including environment wrappers, benchmarks, and visualization tools for future research.

Benchmarking Robustness and Generalization in Multi-Agent Systems: A Case Study on Neural MMO

Aug 30, 2023

Abstract: We present the results of the second Neural MMO challenge, hosted at IJCAI 2022, which received 1600+ submissions. This competition targets robustness and generalization in multi-agent systems: participants train teams of agents to complete a multi-task objective against opponents not seen during training. The competition combines relatively complex environment design with large numbers of agents in the environment. The top submissions demonstrate strong success on this task using mostly standard reinforcement learning (RL) methods combined with domain-specific engineering. We summarize the competition design and results and suggest that, as an academic community, competitions may be a powerful approach to solving hard problems and establishing a solid benchmark for algorithms. We will open-source our benchmark including the environment wrapper, baselines, a visualization tool, and selected policies for further research.

The Neural MMO Platform for Massively Multiagent Research

Oct 14, 2021

Abstract: Neural MMO is a computationally accessible research platform that combines large agent populations, long time horizons, open-ended tasks, and modular game systems. Existing environments feature subsets of these properties, but Neural MMO is the first to combine them all. We present Neural MMO as free and open source software with active support, ongoing development, documentation, and additional training, logging, and visualization tools to help users adapt to this new setting. Initial baselines on the platform demonstrate that agents trained in large populations explore more and learn a progression of skills. We raise other more difficult problems such as many-team cooperation as open research questions which Neural MMO is well-suited to answer. Finally, we discuss current limitations of the platform, potential mitigations, and plans for continued development.

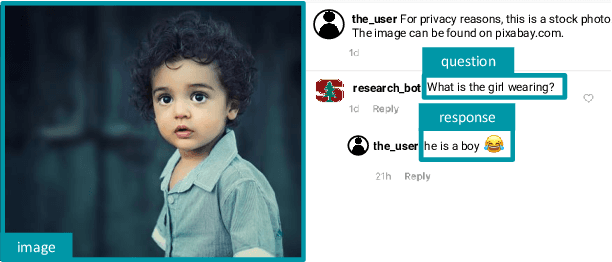

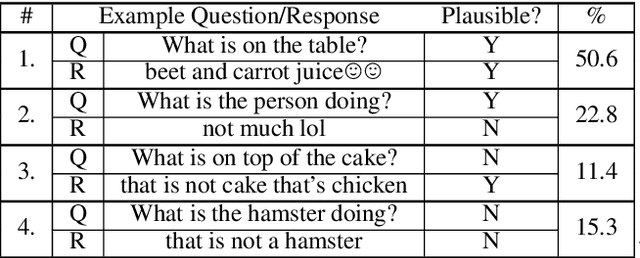

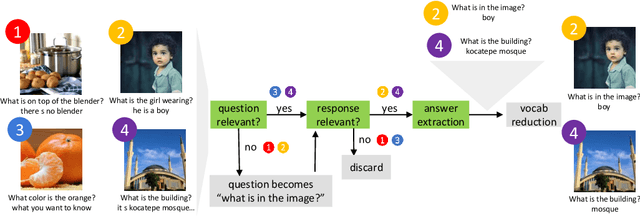

Determining Question-Answer Plausibility in Crowdsourced Datasets Using Multi-Task Learning

Nov 10, 2020

Abstract: Datasets extracted from social networks and online forums are often prone to the pitfalls of natural language, namely the presence of unstructured and noisy data. In this work, we seek to enable the collection of high-quality question-answer datasets from social media by proposing a novel task for automated quality analysis and data cleaning: question-answer (QA) plausibility. Given a machine or user-generated question and a crowd-sourced response from a social media user, we determine if the question and response are valid; if so, we identify the answer within the free-form response. We design BERT-based models to perform the QA plausibility task, and we evaluate the ability of our models to generate a clean, usable question-answer dataset. Our highest-performing approach consists of a single-task model which determines the plausibility of the question, followed by a multi-task model which evaluates the plausibility of the response as well as extracts answers (Question Plausibility AUROC=0.75, Response Plausibility AUROC=0.78, Answer Extraction F1=0.665).

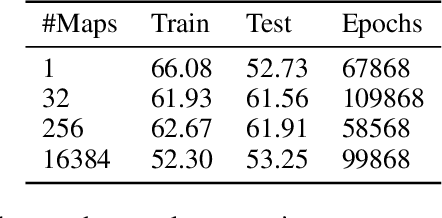

Effective Approaches to Batch Parallelization for Dynamic Neural Network Architectures

Jul 08, 2017

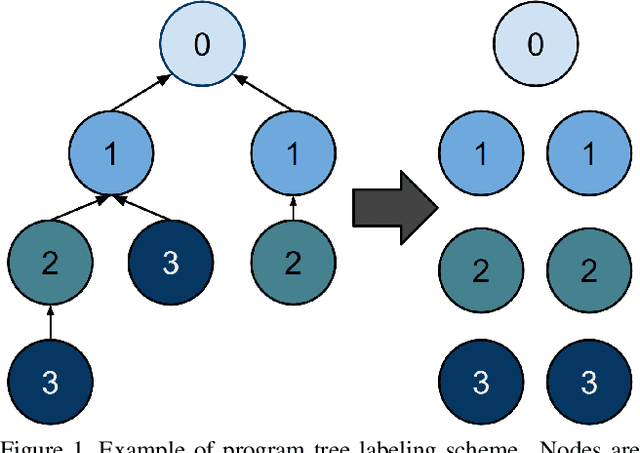

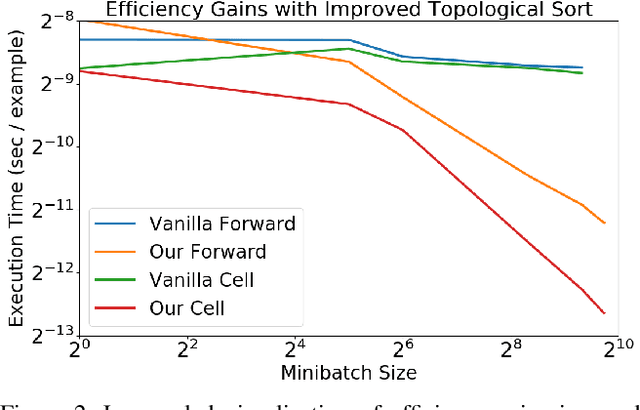

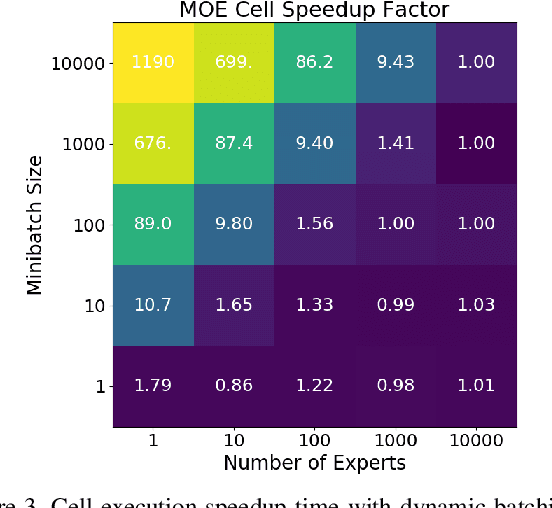

Abstract: We present a simple dynamic batching approach applicable to a large class of dynamic architectures that consistently yields speedups of over 10x. We provide performance bounds when the architecture is not known a priori and a stronger bound in the special case where the architecture is a predetermined balanced tree. We evaluate our approach on Johnson et al.'s recent visual question answering (VQA) result on their CLEVR dataset, obtained by Inferring and Executing Programs (IEP). We also evaluate on sparsely gated mixture of experts layers and achieve speedups of up to 1000x over the naive implementation.
