Christian Weilbach

Prospective Messaging: Learning in Networks with Communication Delays

Jul 09, 2024

Abstract: Inter-neuron communication delays are ubiquitous in physically realized neural networks such as biological neural circuits and neuromorphic hardware. These delays have significant and often disruptive consequences on network dynamics during training and inference. It is therefore essential that communication delays be accounted for, both in computational models of biological neural networks and in large-scale neuromorphic systems. Nonetheless, communication delays have yet to be comprehensively addressed in either domain. In this paper, we first show that delays prevent state-of-the-art continuous-time neural networks called Latent Equilibrium (LE) networks from learning even simple tasks despite significant overparameterization. We then propose to compensate for communication delays by predicting future signals based on currently available ones. This conceptually straightforward approach, which we call prospective messaging (PM), uses only neuron-local information, and is flexible in terms of memory and computation requirements. We demonstrate that incorporating PM into delayed LE networks prevents reaction lags, and facilitates successful learning on Fourier synthesis and autoregressive video prediction tasks.
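The idea of compensating for a delay by forecasting the signal can be illustrated with a toy example. The sketch below is not the paper's method: it assumes a single scalar signal, a fixed and known delay, and a simple first-order (finite-difference) extrapolation. The function names `delayed` and `extrapolate` are hypothetical and chosen only for this illustration.

```python
import numpy as np

def delayed(signal, steps):
    """Return the signal as a receiver sees it, `steps` samples late (steps >= 1)."""
    out = np.empty_like(signal)
    out[:steps] = signal[0]        # nothing has arrived yet: hold the first value
    out[steps:] = signal[:-steps]  # afterwards, the receiver sees old samples
    return out

def extrapolate(received, steps):
    """Assumed predictor: received value plus `steps` times its latest backward difference."""
    slope = np.diff(received, prepend=received[0])  # per-sample change, local information only
    return received + steps * slope

dt = 0.01
t = np.arange(0.0, 10.0, dt)
true_signal = np.sin(t)
delay_steps = 20  # a delay of 0.2 time units

received = delayed(true_signal, delay_steps)
predicted = extrapolate(received, delay_steps)

# Mean absolute error relative to the undelayed signal, without and with forecasting
lag_error = np.mean(np.abs(received - true_signal))
pm_error = np.mean(np.abs(predicted - true_signal))
print(f"delayed only: {lag_error:.4f}, with extrapolation: {pm_error:.4f}")
```

For a smooth, slowly varying signal such as this sine wave, the extrapolated value tracks the current signal more closely than the raw delayed one. A first-order forecast amplifies noise, so a learned or filtered predictor would likely be needed in practice.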

All-in-one simulation-based inference

Apr 15, 2024

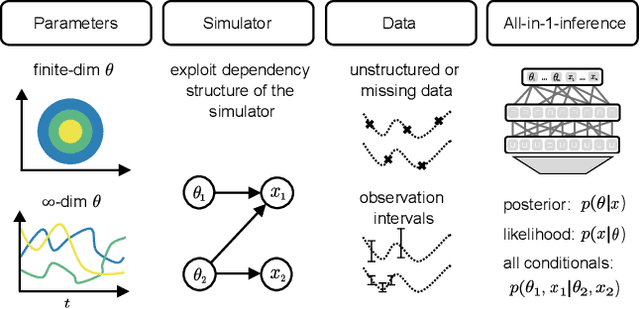

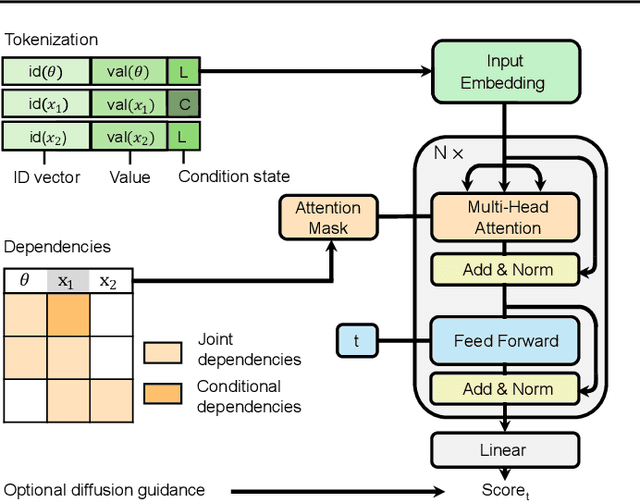

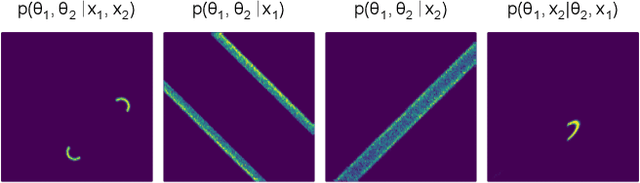

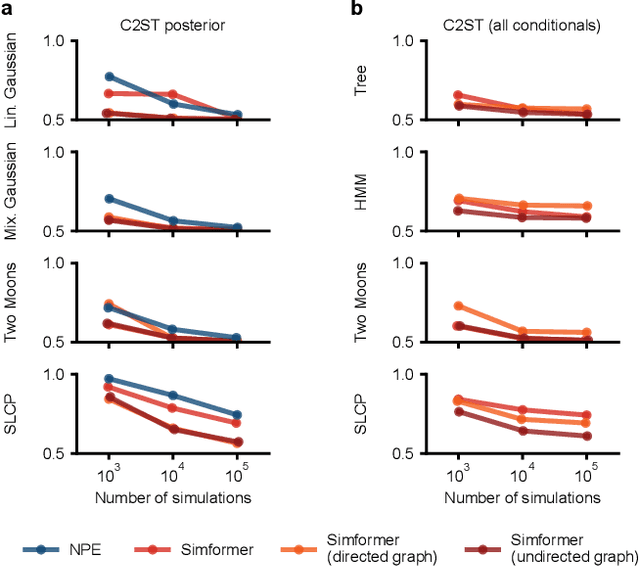

Abstract: Amortized Bayesian inference trains neural networks to solve stochastic inference problems using model simulations, thereby making it possible to rapidly perform Bayesian inference for any newly observed data. However, current simulation-based amortized inference methods are simulation-hungry and inflexible: They require the specification of a fixed parametric prior, simulator, and inference tasks ahead of time. Here, we present a new amortized inference method -- the Simformer -- which overcomes these limitations. By training a probabilistic diffusion model with transformer architectures, the Simformer outperforms current state-of-the-art amortized inference approaches on benchmark tasks and is substantially more flexible: It can be applied to models with function-valued parameters, it can handle inference scenarios with missing or unstructured data, and it can sample arbitrary conditionals of the joint distribution of parameters and data, including both posterior and likelihood. We showcase the performance and flexibility of the Simformer on simulators from ecology, epidemiology, and neuroscience, and demonstrate that it opens up new possibilities and application domains for amortized Bayesian inference on simulation-based models.

If the Sources Could Talk: Evaluating Large Language Models for Research Assistance in History

Oct 16, 2023

Abstract: The recent advent of powerful Large Language Models (LLMs) provides a new conversational form of inquiry into historical memory (or, training data, in this case). We show that by augmenting such LLMs with vector embeddings from highly specialized academic sources, a conversational methodology can be made accessible to historians and other researchers in the Humanities. Concretely, we evaluate and demonstrate how LLMs can assist researchers while they examine customized corpora of different types of documents, including, but not limited to: (1) primary sources, (2) secondary sources written by experts, and (3) the combination of these two. Compared to established search interfaces for digital catalogues, such as metadata and full-text search, we evaluate the richer conversational style of LLMs on the performance of two main types of tasks: (1) question-answering, and (2) extraction and organization of data. We demonstrate that LLMs' semantic retrieval and reasoning abilities on problem-specific tasks can be applied to large textual archives that have not been part of their training data. Therefore, LLMs can be augmented with sources relevant to specific research projects, and can be queried privately by researchers.

Trans-Dimensional Generative Modeling via Jump Diffusion Models

May 25, 2023

Abstract: We propose a new class of generative models that naturally handle data of varying dimensionality by jointly modeling the state and dimension of each datapoint. The generative process is formulated as a jump diffusion process that makes jumps between different dimensional spaces. We first define a dimension-destroying forward noising process, before deriving the dimension-creating time-reversed generative process along with a novel evidence lower bound training objective for learning to approximate it. Simulating our learned approximation to the time-reversed generative process then provides an effective way of sampling data of varying dimensionality by jointly generating state values and dimensions. We demonstrate our approach on molecular and video datasets of varying dimensionality, reporting better compatibility with test-time diffusion guidance imputation tasks and improved interpolation capabilities versus fixed-dimensional models that generate state values and dimensions separately.

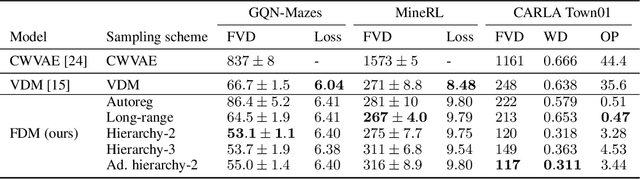

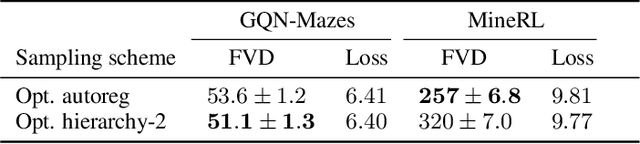

Flexible Diffusion Modeling of Long Videos

May 23, 2022

Abstract: We present a framework for video modeling based on denoising diffusion probabilistic models that produces long-duration video completions in a variety of realistic environments. We introduce a generative model that can, at test time, sample any arbitrary subset of video frames conditioned on any other subset, and we present an architecture adapted for this purpose. Doing so allows us to efficiently compare and optimize a variety of schedules for the order in which frames in a long video are sampled and to use selective sparse and long-range conditioning on previously sampled frames. We demonstrate improved video modeling over prior work on a number of datasets and sample temporally coherent videos over 25 minutes in length. We additionally release a new video modeling dataset and semantically meaningful metrics based on videos generated in the CARLA self-driving car simulator.

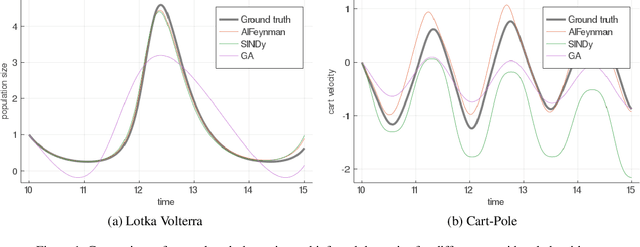

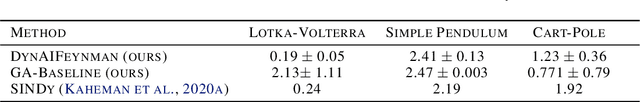

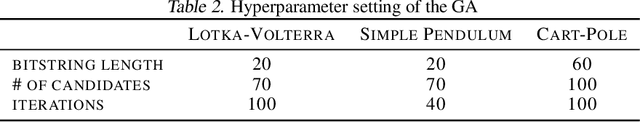

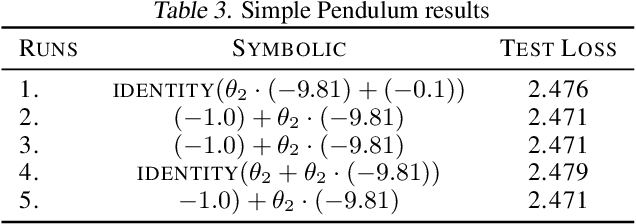

Inferring the Structure of Ordinary Differential Equations

Jul 05, 2021

Abstract: Understanding physical phenomena oftentimes means understanding the underlying dynamical system that governs observational measurements. While accurate prediction can be achieved with black box systems, they often lack interpretability and are less amenable to further expert investigation. Alternatively, the dynamics can be analysed via symbolic regression. In this paper, we extend the approach of Udrescu et al. (2020), called AIFeynman, to the dynamic setting to perform symbolic regression on ODE systems based on observations from the resulting trajectories. We compare this extension empirically to state-of-the-art approaches for symbolic regression on several dynamical systems of increasing complexity for which the ground truth equations are available. Although the proposed approach performs best on this benchmark, we observed difficulties of all the compared symbolic regression approaches on more complex systems, such as Cart-Pole.

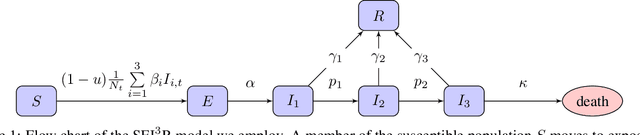

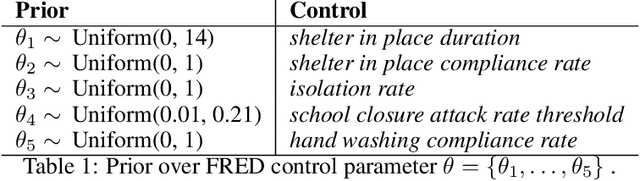

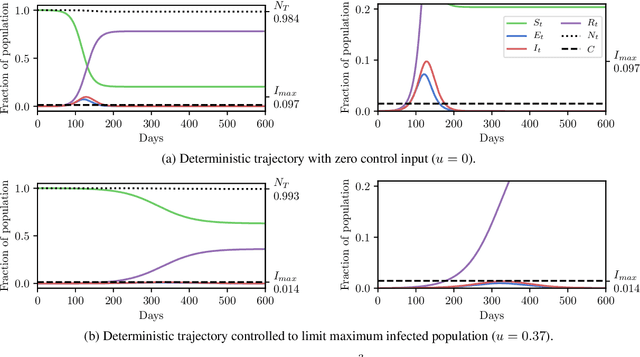

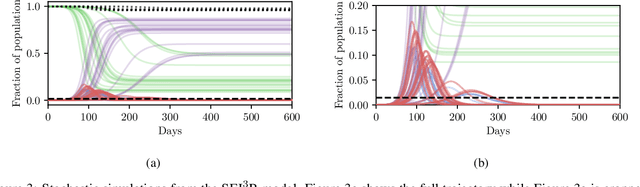

Planning as Inference in Epidemiological Models

Apr 03, 2020

Abstract: In this work we demonstrate how existing software tools can be used to automate parts of infectious disease-control policy-making by performing inference in existing epidemiological dynamics models. The inference tasks undertaken include computing, for planning purposes, the posterior distribution over simulation model parameters that are putatively controllable via direct policy-making choices and that give rise to acceptable disease progression outcomes. Neither the full capabilities of such inference automation software tools nor their utility for planning is widely disseminated at the current time. Timely gains in understanding about these tools and how they can be used may lead to more fine-grained and less economically damaging policy prescriptions, particularly during the current COVID-19 pandemic.
