Christian Brommer

Consistent Pose Estimation of Unmanned Ground Vehicles through Terrain-Aided Multi-Sensor Fusion on Geometric Manifolds

Aug 20, 2025

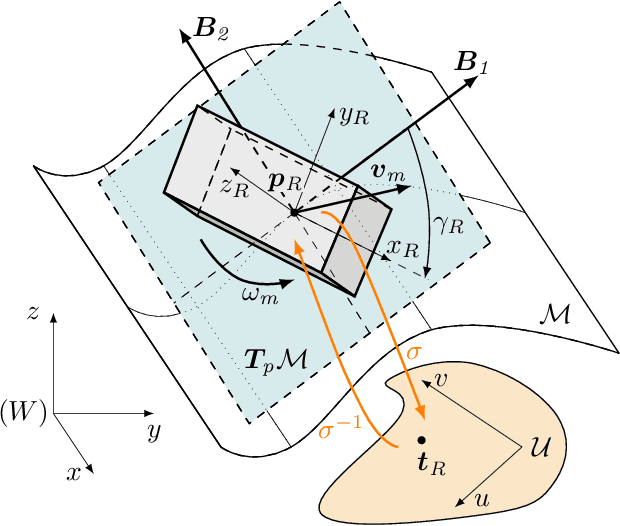

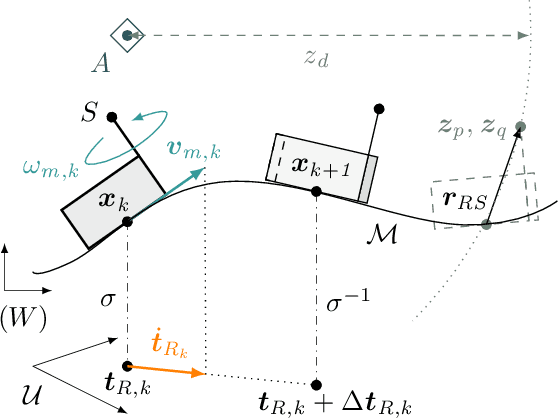

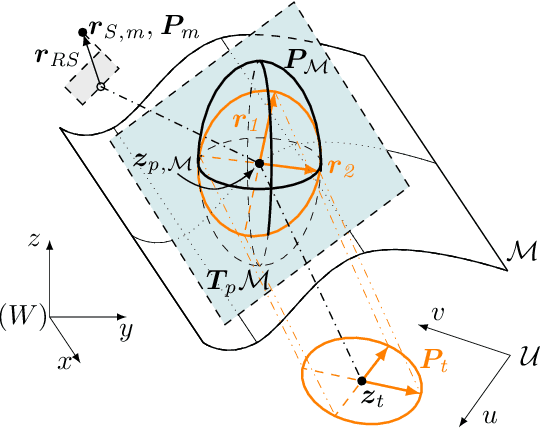

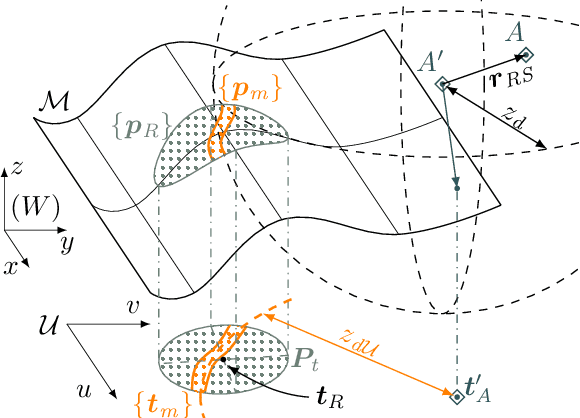

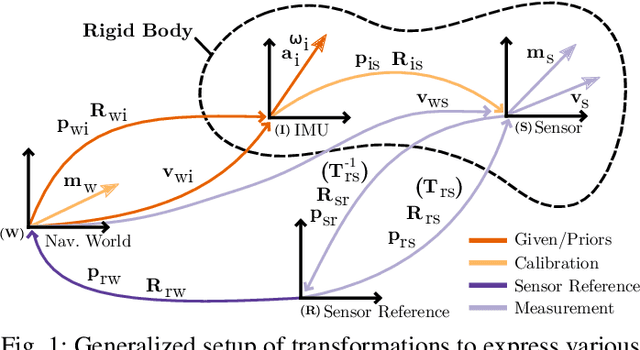

Abstract: Aiming to enhance the consistency and thus long-term accuracy of Extended Kalman Filters for terrestrial vehicle localization, this paper introduces the Manifold Error State Extended Kalman Filter (M-ESEKF). By representing the robot's pose in a space with reduced dimensionality, the approach ensures feasible estimates on generic smooth surfaces, without introducing artificial constraints or simplifications that may degrade a filter's performance. The accompanying measurement models are compatible with common loosely- and tightly-coupled sensor modalities and also implicitly account for the ground geometry. We extend the formulation by introducing a novel correction scheme that embeds additional domain knowledge into the sensor data, giving more accurate uncertainty approximations and further enhancing filter consistency. The proposed estimator is seamlessly integrated into a validated modular state estimation framework, demonstrating compatibility with existing implementations. Extensive Monte Carlo simulations across diverse scenarios and dynamic sensor configurations show that the M-ESEKF outperforms classical filter formulations in terms of consistency and stability. Moreover, it eliminates the need for scenario-specific parameter tuning, enabling its application in a variety of real-world settings.

Sensor Model Identification via Simultaneous Model Selection and State Variable Determination

Jun 12, 2025

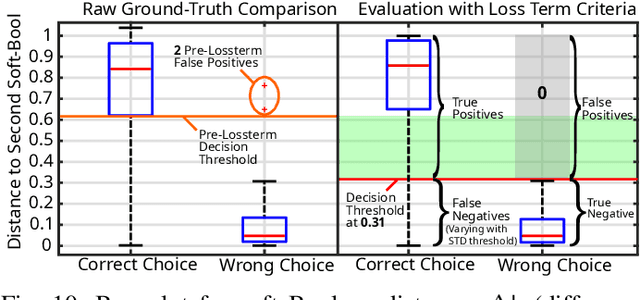

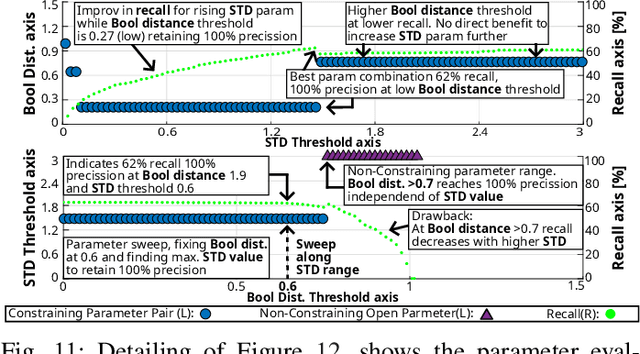

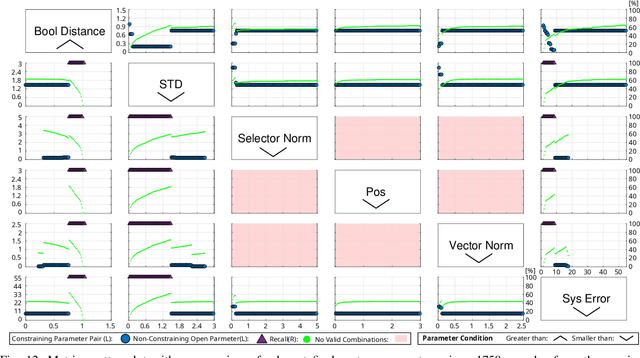

Abstract: We present a method for the unattended gray-box identification of sensor models commonly used by localization algorithms in the field of robotics. The objective is to determine the most likely sensor model for a time series of unknown measurement data, given an extendable catalog of predefined sensor models. Sensor model definitions may require states for rigid-body calibrations and dedicated reference frames to replicate a measurement based on the robot's localization state. A health metric is introduced, which verifies the outcome of the selection process in order to detect false positives and facilitate reliable decision-making. In a second stage, an initial guess for identified calibration states is generated, and the necessity of sensor world reference frames is evaluated. The identified sensor model with its parameter information is then used to parameterize and initialize a state estimation application, thus ensuring a more accurate and robust integration of new sensor elements. This method is helpful for inexperienced users who want to identify the source and type of a measurement, sensor calibrations, or sensor reference frames. It will also be important in the field of modular multi-agent scenarios and modularized robotic platforms that are augmented by sensor modalities during runtime. Overall, this work aims to provide a simplified integration of sensor modalities to downstream applications and circumvent common pitfalls in the usage and development of localization approaches.

Autonomous Control of Redundant Hydraulic Manipulator Using Reinforcement Learning with Action Feedback

Apr 22, 2025

Abstract: This article presents an entirely data-driven approach for autonomous control of redundant manipulators with hydraulic actuation. The approach only requires minimal system information, which is inherited from a simulation model. The non-linear hydraulic actuation dynamics are modeled using actuator networks from the data gathered during the manual operation of the manipulator to effectively emulate the real system in a simulation environment. A neural network control policy for autonomous control, based on end-effector (EE) position tracking, is then learned using Reinforcement Learning (RL) with Ornstein-Uhlenbeck process noise (OUNoise) for efficient exploration. The RL agent also receives feedback based on supervised learning of the forward kinematics, which facilitates selecting the most suitable action from exploration. The control policy directly provides the joint variables as outputs based on the provided target EE position while taking into account the system dynamics. The joint variables are then mapped to the hydraulic valve commands, which are then fed to the system without further modifications. The proposed approach is implemented on a scaled hydraulic forwarder crane with three revolute joints and one prismatic joint to track the desired position of the EE in three-dimensional (3D) space. With the emulated dynamics and extensive learning in simulation, the results demonstrate the feasibility of deploying the learned controller directly on the real system.
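The Ornstein-Uhlenbeck exploration noise mentioned in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the class name `OUNoise`, the Euler-Maruyama discretization, and every parameter value (`theta`, `sigma`, `dt`, and the size of 4, chosen here to match three revolute joints plus one prismatic joint) are assumptions made for illustration only.

```python
import math
import random


class OUNoise:
    """Hypothetical helper: discretized Ornstein-Uhlenbeck process.

    Update rule (Euler-Maruyama):
        x <- x + theta * (mu - x) * dt + sigma * sqrt(dt) * N(0, 1)
    All default values are illustrative assumptions, not taken from the paper.
    """

    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, dt=1e-2, seed=None):
        self.size = size      # number of action dimensions
        self.mu = mu          # long-run mean the noise reverts to
        self.theta = theta    # mean-reversion rate
        self.sigma = sigma    # diffusion (noise) scale
        self.dt = dt          # time step of the discretization
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        """Restart the process at its mean, e.g. at the start of an episode."""
        self.state = [self.mu] * self.size

    def sample(self):
        """Advance the process one step and return a copy of the new state."""
        scale = self.sigma * math.sqrt(self.dt)
        self.state = [
            x + self.theta * (self.mu - x) * self.dt
            + scale * self.rng.gauss(0.0, 1.0)
            for x in self.state
        ]
        return list(self.state)


if __name__ == "__main__":
    noise = OUNoise(size=4, seed=0)  # assumed: one noise channel per joint
    for _ in range(3):
        print(noise.sample())
```

Because each sample depends on the previous one, successive perturbations are temporally correlated, which is the usual motivation for preferring this process over independent Gaussian noise when exploring with physical actuators. In a typical setup the sampled vector would be added to the policy output before it is applied.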

AIVIO: Closed-loop, Object-relative Navigation of UAVs with AI-aided Visual Inertial Odometry

Oct 08, 2024

Abstract: Object-relative mobile robot navigation is essential for a variety of tasks, e.g. autonomous critical infrastructure inspection, but requires the capability to extract semantic information about the objects of interest from raw sensory data. While deep learning-based (DL) methods excel at inferring semantic object information from images, such as class and relative 6 degree of freedom (6-DoF) pose, they are computationally demanding and thus often not suitable for payload constrained mobile robots. In this letter, we present a real-time capable unmanned aerial vehicle (UAV) system for object-relative, closed-loop navigation with a minimal sensor configuration consisting of an inertial measurement unit (IMU) and RGB camera. Utilizing a DL-based object pose estimator, solely trained on synthetic data and optimized for companion board deployment, the object-relative pose measurements are fused with the IMU data to perform object-relative localization. We conduct multiple real-world experiments to validate the performance of our system for the challenging use case of power pole inspection. An example closed-loop flight is presented in the supplementary video.

Revisiting multi-GNSS Navigation for UAVs -- An Equivariant Filtering Approach

Oct 16, 2023

Abstract: In this work, we explore the recent advances in equivariant filtering for inertial navigation systems to improve state estimation for uncrewed aerial vehicles (UAVs). Traditional state-of-the-art estimation methods, e.g., the multiplicative Kalman filter (MEKF), have some limitations concerning their consistency, errors in the initial state estimate, and convergence performance. Symmetry-based methods, such as the equivariant filter (EqF), offer significant advantages for these points by exploiting the mathematical properties of the system - its symmetry. These filters yield faster convergence rates and robustness to wrong initial state estimates through their error definition. To demonstrate the usability of EqFs, we focus on the sensor-fusion problem with the most common sensors in outdoor robotics: global navigation satellite system (GNSS) sensors and an inertial measurement unit (IMU). We provide an implementation of such an EqF leveraging the semi-direct product of the symmetry group to derive the filter equations. To validate the practical usability of EqFs in real-world scenarios, we evaluate our method using data from all outdoor runs of the INSANE Dataset. Our results demonstrate the performance improvements of the EqF in real-world environments, highlighting its potential for enhancing state estimation for UAVs.

AI-Based Multi-Object Relative State Estimation with Self-Calibration Capabilities

Mar 01, 2023

Abstract: The capability to extract task specific, semantic information from raw sensory data is a crucial requirement for many applications of mobile robotics. Autonomous inspection of critical infrastructure with Unmanned Aerial Vehicles (UAVs), for example, requires precise navigation relative to the structure that is to be inspected. Recently, Artificial Intelligence (AI)-based methods have been shown to excel at extracting semantic information such as 6 degree-of-freedom (6-DoF) poses of objects from images. In this paper, we propose a method combining a state-of-the-art AI-based pose estimator for objects in camera images with data from an inertial measurement unit (IMU) for 6-DoF multi-object relative state estimation of a mobile robot. The AI-based pose estimator detects multiple objects of interest in camera images along with their relative poses. These measurements are fused with IMU data in a state-of-the-art sensor fusion framework. We illustrate the feasibility of our proposed method with real world experiments for different trajectories and number of arbitrarily placed objects. We show that the results can be reliably reproduced due to the self-calibrating capabilities of our approach.

INSANE: Cross-Domain UAV Data Sets with Increased Number of Sensors for developing Advanced and Novel Estimators

Oct 17, 2022

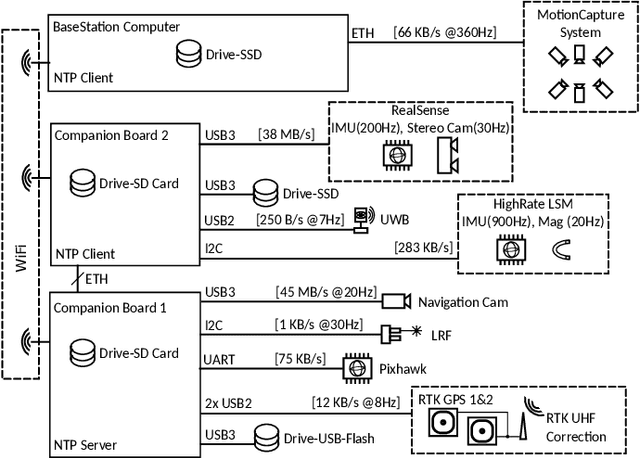

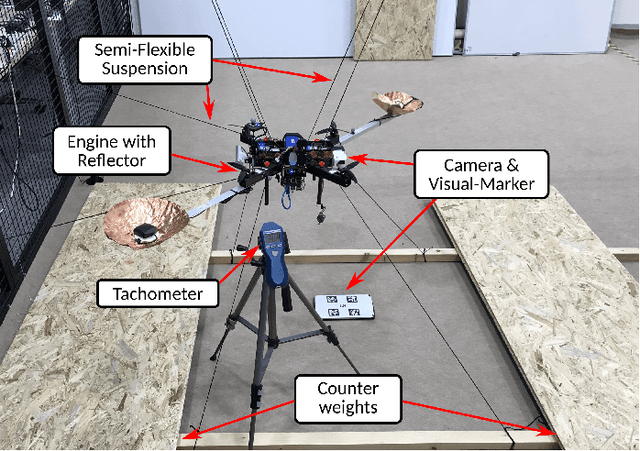

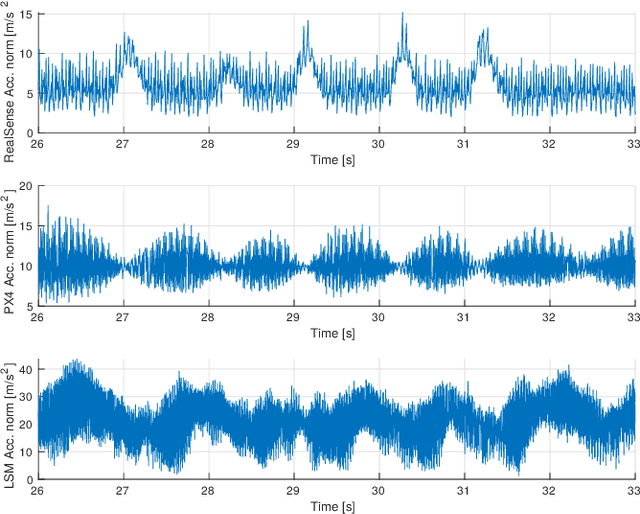

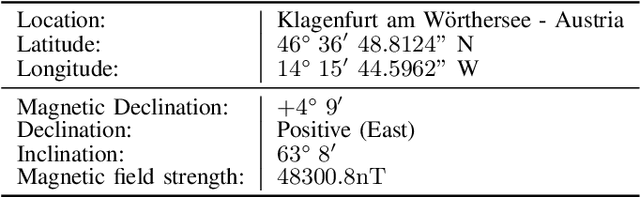

Abstract: For real-world applications, autonomous mobile robotic platforms must be capable of navigating safely in a multitude of different and dynamic environments with accurate and robust localization being a key prerequisite. To support further research in this domain, we present the INSANE data sets - a collection of versatile Micro Aerial Vehicle (MAV) data sets for cross-environment localization. The data sets provide various scenarios with multiple stages of difficulty for localization methods. These scenarios range from trajectories in the controlled environment of an indoor motion capture facility, to experiments where the vehicle performs an outdoor maneuver and transitions into a building, requiring changes of sensor modalities, up to purely outdoor flight maneuvers in a challenging Mars analog environment to simulate scenarios which current and future Mars helicopters would need to perform. The presented work aims to provide data that reflects real-world scenarios and sensor effects. The extensive sensor suite includes various sensor categories, including multiple Inertial Measurement Units (IMUs) and cameras. Sensor data is made available as raw measurements and each data set provides highly accurate ground truth, including the outdoor experiments where a dual Real-Time Kinematic (RTK) Global Navigation Satellite System (GNSS) setup provides sub-degree and centimeter accuracy (1-sigma). The sensor suite also includes a dedicated high-rate IMU to capture all the vibration dynamics of the vehicle during flight to support research on novel machine learning-based sensor signal enhancement methods for improved localization. The data sets and post-processing tools are available at: https://sst.aau.at/cns/datasets

Overcoming Bias: Equivariant Filter Design for Biased Attitude Estimation with Online Calibration

Sep 24, 2022

Abstract: Stochastic filters for on-line state estimation are a core technology for autonomous systems. The performance of such filters is one of the key limiting factors to a system's capability. Both asymptotic behavior (e.g., for regular operation) and transient response (e.g., for fast initialization and reset) of such filters are of crucial importance in guaranteeing robust operation of autonomous systems. This paper introduces a new generic formulation for a gyroscope aided attitude estimator using N direction measurements including both body-frame and reference-frame direction type measurements. The approach is based on an integrated state formulation that incorporates navigation, extrinsic calibration for all direction sensors, and gyroscope bias states in a single equivariant geometric structure. This newly proposed symmetry allows modular addition of different direction measurements and their extrinsic calibration while maintaining the ability to include bias states in the same symmetry. The subsequently proposed filter-based estimator using this symmetry noticeably improves the transient response, and the asymptotic bias and extrinsic calibration estimation compared to state-of-the-art approaches. The estimator is verified in statistically representative simulations and is tested in real-world experiments.

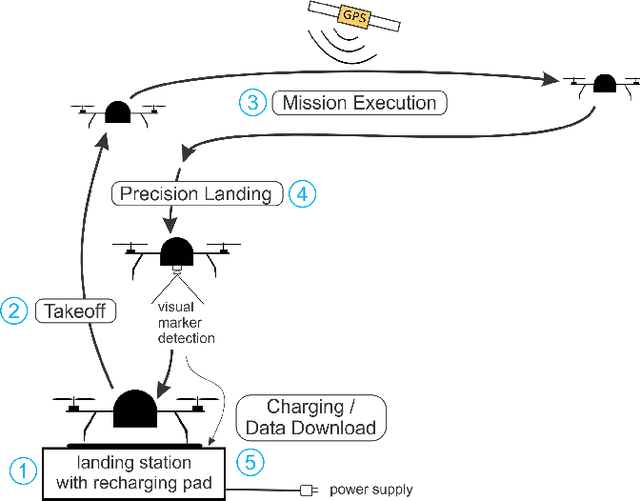

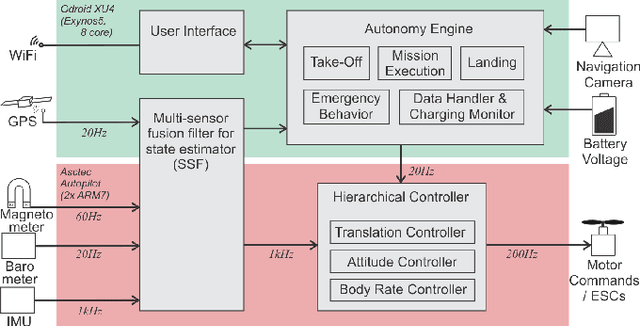

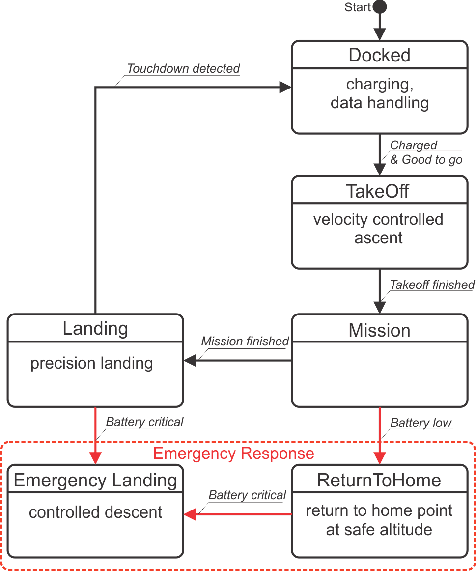

Long-Duration Fully Autonomous Operation of Rotorcraft Unmanned Aerial Systems for Remote-Sensing Data Acquisition

Aug 18, 2019

Abstract: Recent applications of unmanned aerial systems (UAS) to precision agriculture have shown increased ease and efficiency in data collection at precise remote locations. However, further enhancement of the field requires operation over long periods of time, e.g. days or weeks. This has so far been impractical due to the limited flight times of such platforms and the requirement of humans in the loop for operation. To overcome these limitations, we propose a fully autonomous rotorcraft UAS that is capable of performing repeated flights for long-term observation missions without any human intervention. We address two key technologies that are critical for such a system: full platform autonomy to enable mission execution independently from human operators and the ability of vision-based precision landing on a recharging station for automated energy replenishment. High-level autonomous decision making is implemented as a hierarchy of master and slave state machines. Vision-based precision landing is enabled by estimating the landing pad's pose using a bundle of AprilTag fiducials configured for detection from a wide range of altitudes. We provide an extensive evaluation of the landing pad pose estimation accuracy as a function of the bundle's geometry. The functionality of the complete system is demonstrated through two indoor experiments with a duration of 11 and 10.6 hours, and one outdoor experiment with a duration of 4 hours. The UAS executed 16, 48 and 22 flights respectively during these experiments. In the outdoor experiment, the ratio between flying to collect data and charging was 1 to 10, which is similar to past work in this domain. All flights were fully autonomous with no human in the loop. To the best of our knowledge, this is the first research publication about the long-term outdoor operation of a quadrotor system with no human interaction.

* 38 pages, 28 figures

Long-Duration Autonomy for Small Rotorcraft UAS including Recharging

Oct 12, 2018

Abstract: Many unmanned aerial vehicle surveillance and monitoring applications require observations at precise locations over long periods of time, ideally days or weeks at a time (e.g. ecosystem monitoring), which has been impractical due to limited endurance and the requirement of humans in the loop for operation. To overcome these limitations, we propose a fully autonomous small rotorcraft UAS that is capable of performing repeated sorties for long-term observation missions without any human intervention. We address two key technologies that are critical for such a system: full platform autonomy including emergency response to enable mission execution independently from human operators, and the ability of vision-based precision landing on a recharging station for automated energy replenishment. Experimental results of up to 11 hours of fully autonomous operation in indoor and outdoor environments illustrate the capability of our system.
