Robert Mahony

Vision-Aided Relative State Estimation for Approach and Landing on a Moving Platform with Inertial Measurements

Dec 22, 2025

Abstract:This paper tackles the problem of estimating the relative position, orientation, and velocity between a UAV and a planar platform undergoing arbitrary 3D motion during approach and landing. The estimation relies on measurements from Inertial Measurement Units (IMUs) mounted on both systems, assuming there is a suitable communication channel to exchange data, together with visual information provided by an onboard monocular camera, from which the bearing (line-of-sight direction) to the platform's center and the normal vector of its planar surface are extracted. We propose a cascade observer with a complementary filter on SO(3) to reconstruct the relative attitude, followed by a linear Riccati observer for relative position and velocity estimation. Convergence of both observers is established under persistently exciting conditions, and the cascade is shown to be almost globally asymptotically and locally exponentially stable. We further extend the design to the case where the platform's rotation is restricted to its normal axis and show that its measured linear acceleration can be exploited to recover the remaining unobservable rotation angle. A sufficient condition to ensure local exponential convergence in this setting is provided. The performance of the proposed observers is validated through extensive simulations.

Asynchronous Multi-Object Tracking with an Event Camera

May 12, 2025Abstract:Events cameras are ideal sensors for enabling robots to detect and track objects in highly dynamic environments due to their low latency output, high temporal resolution, and high dynamic range. In this paper, we present the Asynchronous Event Multi-Object Tracking (AEMOT) algorithm for detecting and tracking multiple objects by processing individual raw events asynchronously. AEMOT detects salient event blob features by identifying regions of consistent optical flow using a novel Field of Active Flow Directions built from the Surface of Active Events. Detected features are tracked as candidate objects using the recently proposed Asynchronous Event Blob (AEB) tracker in order to construct small intensity patches of each candidate object. A novel learnt validation stage promotes or discards candidate objects based on classification of their intensity patches, with promoted objects having their position, velocity, size, and orientation estimated at their event rate. We evaluate AEMOT on a new Bee Swarm Dataset, where it tracks dozens of small bees with precision and recall performance exceeding that of alternative event-based detection and tracking algorithms by over 37%. Source code and the labelled event Bee Swarm Dataset will be open sourced

Equivariant Filter Design for Range-only SLAM

Mar 05, 2025

Abstract:Range-only Simultaneous Localisation and Mapping (RO-SLAM) is of interest due to its practical applications in ultra-wideband (UWB) and Bluetooth Low Energy (BLE) localisation in terrestrial and aerial applications and acoustic beacon localisation in submarine applications. In this work, we consider a mobile robot equipped with an inertial measurement unit (IMU) and a range sensor that measures distances to a collection of fixed landmarks. We derive an equivariant filter (EqF) for the RO-SLAM problem based on a symmetry Lie group that is compatible with the range measurements. The proposed filter does not require bootstrapping or initialisation of landmark positions, and demonstrates robustness to the no-prior situation. The filter is demonstrated on a real-world dataset, and it is shown to significantly outperform a state-of-the-art EKF alternative in terms of both accuracy and robustness.

Equivariant IMU Preintegration with Biases: a Galilean Group Approach

Nov 24, 2024

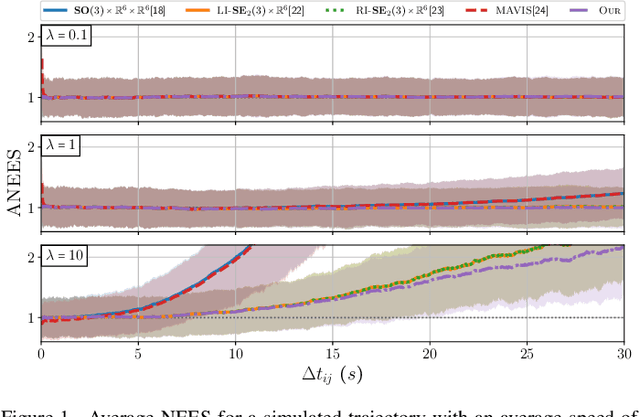

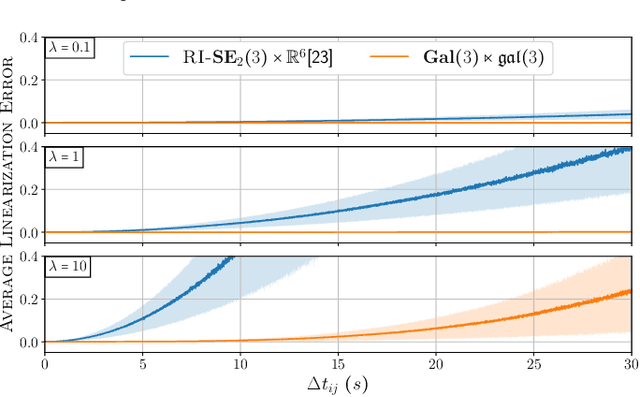

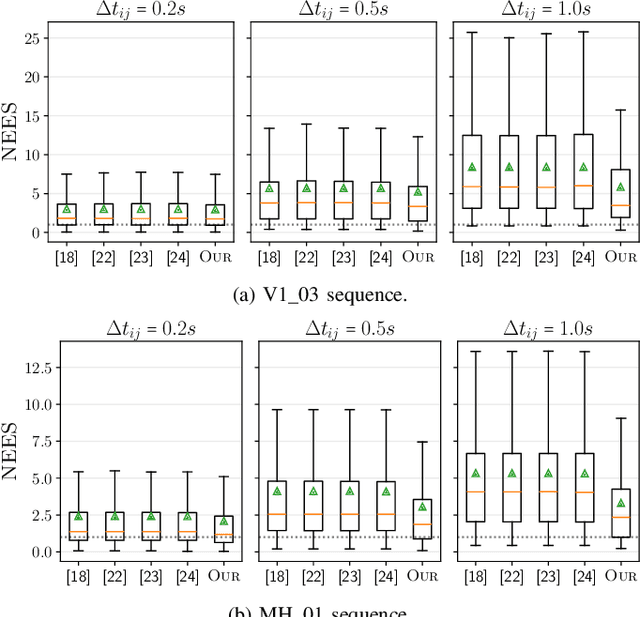

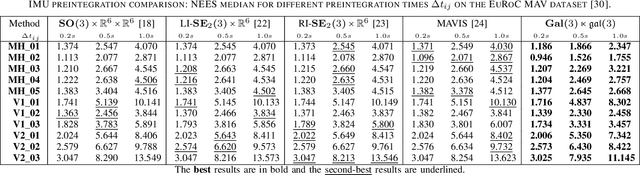

Abstract:This letter proposes a new approach for Inertial Measurement Unit (IMU) preintegration, a fundamental building block that can be leveraged in different optimization-based Inertial Navigation System (INS) localization solutions. Inspired by recent advances in equivariant theory applied to biased INSs, we derive a discrete-time formulation of the IMU preintegration on ${\mathbf{Gal}(3) \ltimes \mathfrak{gal}(3)}$, the left-trivialization of the tangent group of the Galilean group $\mathbf{Gal}(3)$. We define a novel preintegration error that geometrically couples the navigation states and the bias leading to lower linearization error. Our method improves in consistency compared to existing preintegration approaches which treat IMU biases as a separate state-space. Extensive validation against state-of-the-art methods, both in simulation and with real-world IMU data, implementation in the Lie++ library, and open-source code are provided.

Equivariant IMU Preintegration with Biases: an Inhomogeneous Galilean Group Approach

Nov 08, 2024

Abstract:This letter proposes a new approach for Inertial Measurement Unit (IMU) preintegration, a fundamental building block that can be leveraged in different optimization-based Inertial Navigation System (INS) localization solutions. Inspired by recent advancements in equivariant theory applied to biased INSs, we derive a discrete-time formulation of the IMU preintegration on $\mathbf{G}(3) \ltimes \mathfrak{g}(3)$, the tangent group of the inhomogeneous Galilean group $\mathbf{G}(3)$. We define a novel preintegration error that geometrically couples the navigation states and the bias leading to lower linearization error. Our method improves in consistency compared to existing preintegration approaches which treat IMU biases as a separate state-space. Extensive validation against state-of-the-art methods, both in simulation and with real-world IMU data, implementation in the Lie++ library, and open-sourcing of the code are provided.

Real-time Structure Flow

Jun 26, 2024

Abstract:This article introduces the structure flow field; a flow field that can provide high-speed robo-centric motion information for motion control of highly dynamic robotic devices and autonomous vehicles. Structure flow is the angular 3D velocity of the scene at a given pixel. We show that structure flow posses an elegant evolution model in the form of a Partial Differential Equation (PDE) that enables us to create dense flow predictions forward in time. We exploit this structure to design a predictor-update algorithm to compute structure flow in real time using image and depth measurements. The prediction stage takes the previous estimate of the structure flow and propagates it forward in time using a numerical implementation of the structure flow PDE. The predicted flow is then updated using new image and depth data. The algorithm runs up to 600 Hz on a Desktop GPU machine for 512x512 images with flow values up to 8 pixels. We provide ground truth validation on high-speed synthetic image sequences as well as results on real-life video on driving scenarios.

End-to-end Multi-Instance Robotic Reaching from Monocular Vision

Jan 22, 2024Abstract:Multi-instance scenes are especially challenging for end-to-end visuomotor (image-to-control) learning algorithms. "Pipeline" visual servo control algorithms use separate detection, selection and servo stages, allowing algorithms to focus on a single object instance during servo control. End-to-end systems do not have separate detection and selection stages and need to address the visual ambiguities introduced by the presence of arbitrary number of visually identical or similar objects during servo control. However, end-to-end schemes avoid embedding errors from detection and selection stages in the servo control behaviour, are more dynamically robust to changing scenes, and are algorithmically simpler. In this paper, we present a real-time end-to-end visuomotor learning algorithm for multi-instance reaching. The proposed algorithm uses a monocular RGB image and the manipulator's joint angles as the input to a light-weight fully-convolutional network (FCN) to generate control candidates. A key innovation of the proposed method is identifying the optimal control candidate by regressing a control-Lyapunov function (cLf) value. The multi-instance capability emerges naturally from the stability analysis associated with the cLf formulation. We demonstrate the proposed algorithm effectively reaching and grasping objects from different categories on a table-top amid other instances and distractors from an over-the-shoulder monocular RGB camera. The network is able to run up to approximately 160 fps during inference on one GTX 1080 Ti GPU.

MSCEqF: A Multi State Constraint Equivariant Filter for Vision-aided Inertial Navigation

Nov 20, 2023

Abstract:This letter re-visits the problem of visual-inertial navigation system (VINS) and presents a novel filter design we dub the multi state constraint equivariant filter (MSCEqF, in analogy to the well known MSCKF). We define a symmetry group and corresponding group action that allow specifically the design of an equivariant filter for the problem of visual-inertial odometry (VIO) including IMU bias, and camera intrinsic and extrinsic calibration states. In contrast to state-of-the-art invariant extended Kalman filter (IEKF) approaches that simply tack IMU bias and other states onto the $\mathbf{SE}_2(3)$ group, our filter builds upon a symmetry that properly includes all the states in the group structure. Thus, we achieve improved behavior, particularly when linearization points largely deviate from the truth (i.e., on transients upon state disturbances). Our approach is inherently consistent even during convergence phases from significant errors without the need for error uncertainty adaptation, observability constraint, or other consistency enforcing techniques. This leads to greatly improved estimator behavior for significant error and unexpected state changes during, e.g., long-duration missions. We evaluate our approach with a multitude of different experiments using three different prominent real-world datasets.

High Frequency, High Accuracy Pointing onboard Nanosats using Neuromorphic Event Sensing and Piezoelectric Actuation

Sep 11, 2023Abstract:As satellites become smaller, the ability to maintain stable pointing decreases as external forces acting on the satellite come into play. At the same time, reaction wheels used in the attitude determination and control system (ADCS) introduce high frequency jitter which can disrupt pointing stability. For space domain awareness (SDA) tasks that track objects tens of thousands of kilometres away, the pointing accuracy offered by current nanosats, typically in the range of 10 to 100 arcseconds, is not sufficient. In this work, we develop a novel payload that utilises a neuromorphic event sensor (for high frequency and highly accurate relative attitude estimation) paired in a closed loop with a piezoelectric stage (for active attitude corrections) to provide highly stable sensor-specific pointing. Event sensors are especially suited for space applications due to their desirable characteristics of low power consumption, asynchronous operation, and high dynamic range. We use the event sensor to first estimate a reference background star field from which instantaneous relative attitude is estimated at high frequency. The piezoelectric stage works in a closed control loop with the event sensor to perform attitude corrections based on the discrepancy between the current and desired attitude. Results in a controlled setting show that we can achieve a pointing accuracy in the range of 1-5 arcseconds using our novel payload at an operating frequency of up to 50Hz using a prototype built from commercial-off-the-shelf components. Further details can be found at https://ylatif.github.io/ultrafinestabilisation

Equivariant Symmetries for Inertial Navigation Systems

Sep 07, 2023

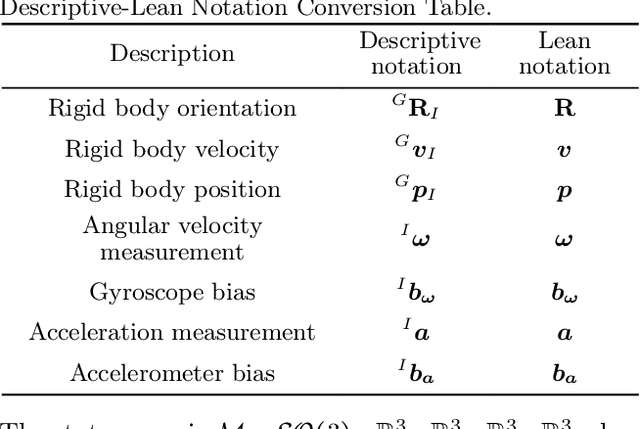

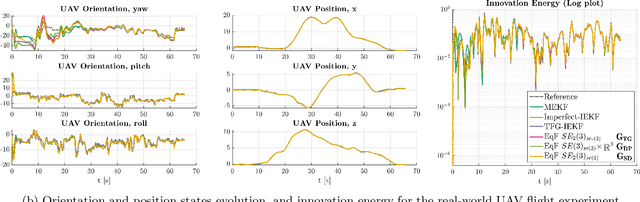

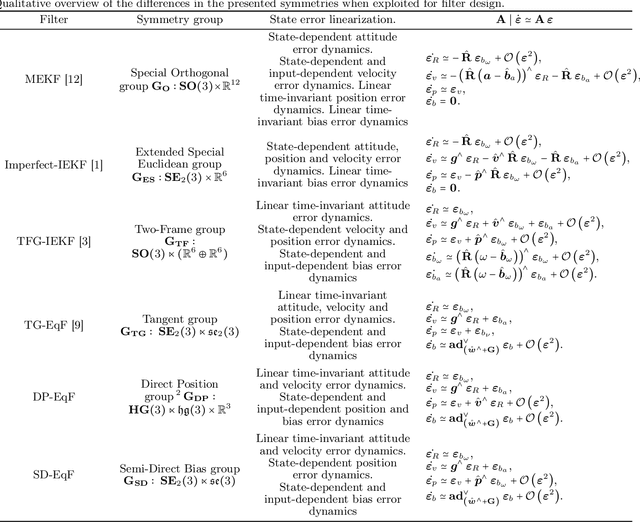

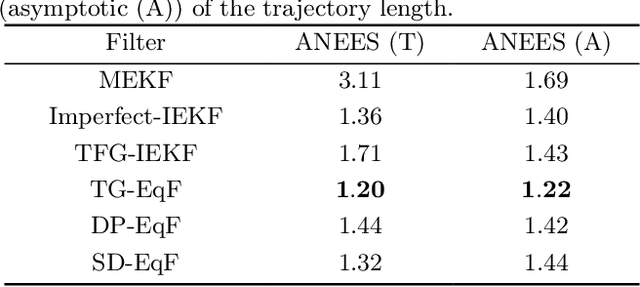

Abstract:This paper investigates the problem of inertial navigation system (INS) filter design through the lens of symmetry. The extended Kalman filter (EKF) and its variants, have been the staple of INS filtering for 50 years; however, recent advances in inertial navigation systems have exploited matrix Lie group structure to design stochastic filters and state observers that have been shown to display superior performance compared to classical solutions. In this work we consider the case where a vehicle has an inertial measurement unit (IMU) and a global navigation satellite system (GNSS) receiver. We show that all the modern variants of the EKF for these sensors can be interpreted as the recently proposed Equivariant Filter (EqF) design methodology applied to different choices of symmetry group for the INS problem. This leads us to propose two new symmetries for the INS problem that have not been considered in the prior literature, and provide a discussion of the relative strengths and weaknesses of all the different algorithms. We believe the collection of symmetries that we present here capture all the sensible choices of symmetry for this problem and sensor suite, and that the analysis provided is indicative of the relative real-world performance potential of the different algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge