Martin Scheiber

AIVIO: Closed-loop, Object-relative Navigation of UAVs with AI-aided Visual Inertial Odometry

Oct 08, 2024

Abstract: Object-relative mobile robot navigation is essential for a variety of tasks, e.g., autonomous critical infrastructure inspection, but requires the capability to extract semantic information about the objects of interest from raw sensory data. While deep learning-based (DL) methods excel at inferring semantic object information from images, such as class and relative 6-degree-of-freedom (6-DoF) pose, they are computationally demanding and thus often not suitable for payload-constrained mobile robots. In this letter, we present a real-time capable unmanned aerial vehicle (UAV) system for object-relative, closed-loop navigation with a minimal sensor configuration consisting of an inertial measurement unit (IMU) and an RGB camera. A DL-based object pose estimator, trained solely on synthetic data and optimized for companion board deployment, provides object-relative pose measurements that are fused with the IMU data to perform object-relative localization. We conduct multiple real-world experiments to validate the performance of our system for the challenging use case of power pole inspection. An example closed-loop flight is presented in the supplementary video.

Revisiting multi-GNSS Navigation for UAVs -- An Equivariant Filtering Approach

Oct 16, 2023

Abstract: In this work, we explore the recent advances in equivariant filtering for inertial navigation systems to improve state estimation for uncrewed aerial vehicles (UAVs). Traditional state-of-the-art estimation methods, e.g., the multiplicative extended Kalman filter (MEKF), have some limitations concerning their consistency, errors in the initial state estimate, and convergence performance. Symmetry-based methods, such as the equivariant filter (EqF), offer significant advantages on these points by exploiting the mathematical properties of the system, namely its symmetry. Through their error definition, these filters yield faster convergence rates and robustness to wrong initial state estimates. To demonstrate the usability of EqFs, we focus on the sensor-fusion problem with the most common sensors in outdoor robotics: global navigation satellite system (GNSS) sensors and an inertial measurement unit (IMU). We provide an implementation of such an EqF leveraging the semi-direct product of the symmetry group to derive the filter equations. To validate the practical usability of EqFs in real-world scenarios, we evaluate our method using data from all outdoor runs of the INSANE Dataset. Our results demonstrate the performance improvements of the EqF in real-world environments, highlighting its potential for enhancing state estimation for UAVs.

INSANE: Cross-Domain UAV Data Sets with Increased Number of Sensors for developing Advanced and Novel Estimators

Oct 17, 2022

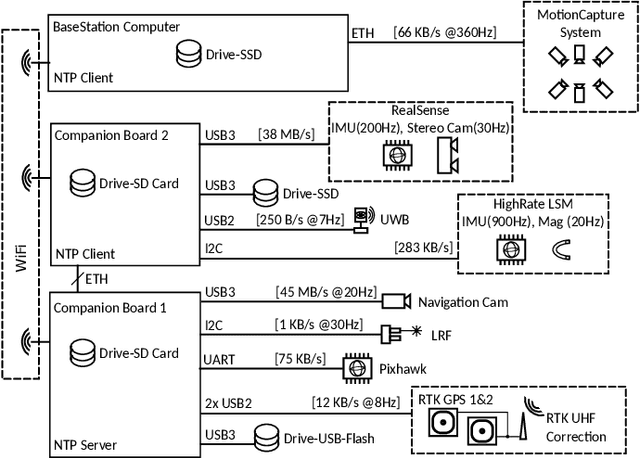

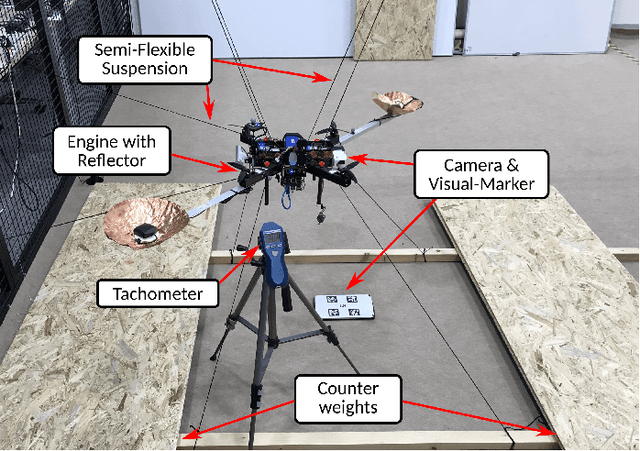

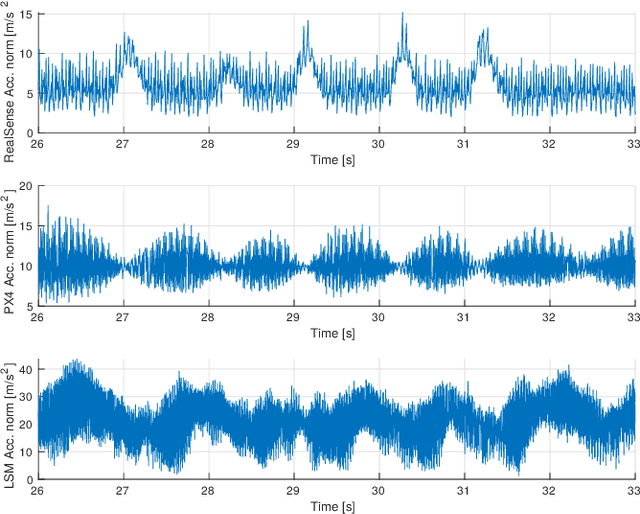

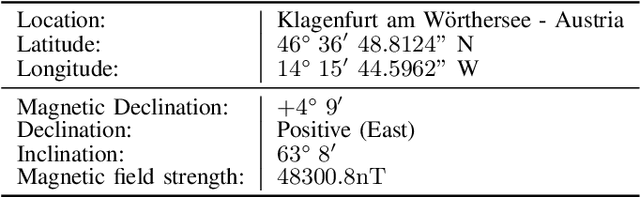

Abstract: For real-world applications, autonomous mobile robotic platforms must be capable of navigating safely in a multitude of different and dynamic environments with accurate and robust localization being a key prerequisite. To support further research in this domain, we present the INSANE data sets - a collection of versatile Micro Aerial Vehicle (MAV) data sets for cross-environment localization. The data sets provide various scenarios with multiple stages of difficulty for localization methods. These scenarios range from trajectories in the controlled environment of an indoor motion capture facility, to experiments where the vehicle performs an outdoor maneuver and transitions into a building, requiring changes of sensor modalities, up to purely outdoor flight maneuvers in a challenging Mars analog environment to simulate scenarios which current and future Mars helicopters would need to perform. The presented work aims to provide data that reflects real-world scenarios and sensor effects. The extensive sensor suite includes various sensor categories, including multiple Inertial Measurement Units (IMUs) and cameras. Sensor data is made available as raw measurements and each data set provides highly accurate ground truth, including the outdoor experiments where a dual Real-Time Kinematic (RTK) Global Navigation Satellite System (GNSS) setup provides sub-degree and centimeter accuracy (1-sigma). The sensor suite also includes a dedicated high-rate IMU to capture all the vibration dynamics of the vehicle during flight to support research on novel machine learning-based sensor signal enhancement methods for improved localization. The data sets and post-processing tools are available at: https://sst.aau.at/cns/datasets
