Chris Finlay

Multi-Resolution Continuous Normalizing Flows

Jun 22, 2021

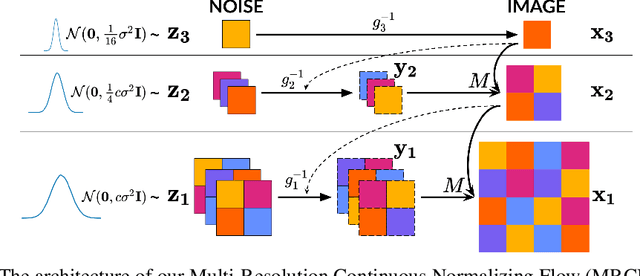

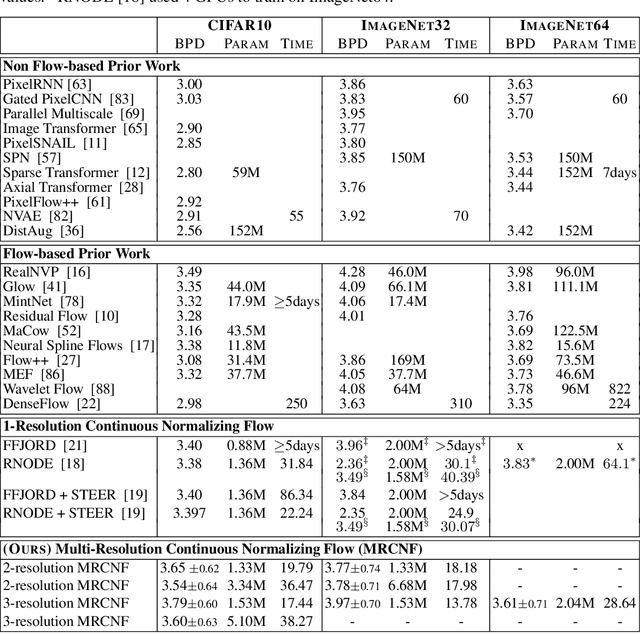

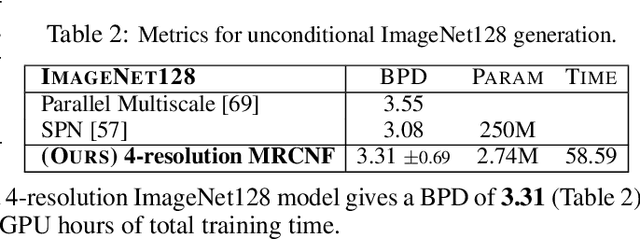

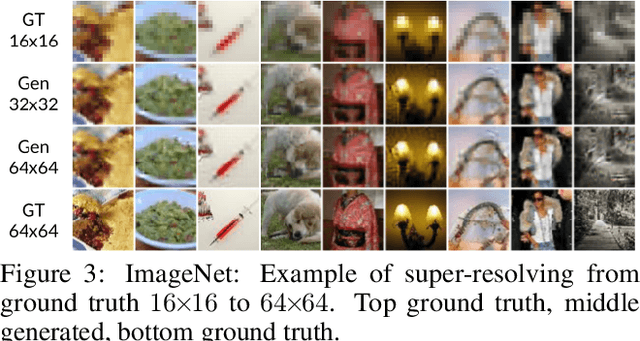

Abstract: Recent work has shown that Neural Ordinary Differential Equations (ODEs) can serve as generative models of images using the perspective of Continuous Normalizing Flows (CNFs). Such models offer exact likelihood calculation and invertible generation/density estimation. In this work we introduce a Multi-Resolution variant of such models (MRCNF), by characterizing the conditional distribution over the additional information required to generate a fine image that is consistent with the coarse image. We introduce a transformation between resolutions that allows for no change in the log likelihood. We show that this approach yields comparable likelihood values for various image datasets, with improved performance at higher resolutions, with fewer parameters, using only one GPU. Further, we examine the out-of-distribution properties of (Multi-Resolution) Continuous Normalizing Flows, and find that they are similar to those of other likelihood-based generative models.

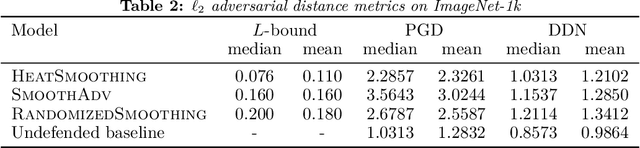

Adversarial Boot Camp: label free certified robustness in one epoch

Oct 05, 2020

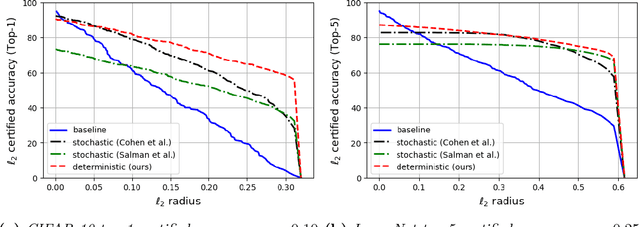

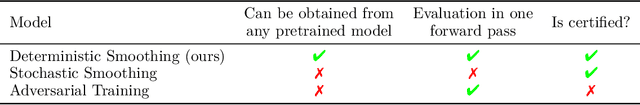

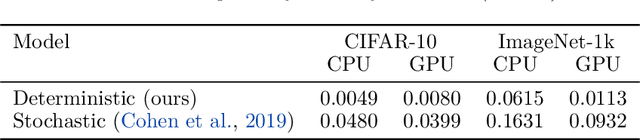

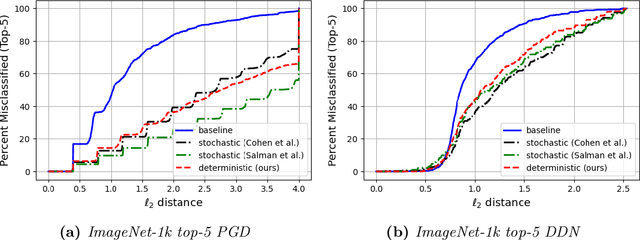

Abstract: Machine learning models are vulnerable to adversarial attacks. One approach to addressing this vulnerability is certification, which focuses on models that are guaranteed to be robust for a given perturbation size. A drawback of recent certified models is that they are stochastic: they require multiple computationally expensive model evaluations with random noise added to a given input. In our work, we present a deterministic certification approach which results in a certifiably robust model. This approach is based on an equivalence between training with a particular regularized loss, and the expected values of Gaussian averages. We achieve certified models on ImageNet-1k by retraining a model with this loss for one epoch without the use of label information.

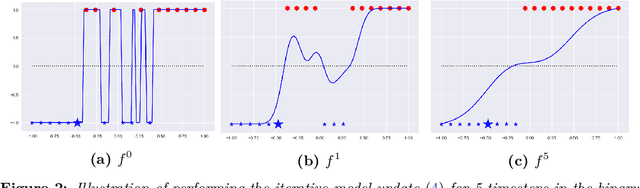

Deterministic Gaussian Averaged Neural Networks

Jun 10, 2020

Abstract: We present a deterministic method to compute the Gaussian average of neural networks used in regression and classification. Our method is based on an equivalence between training with a particular regularized loss, and the expected values of Gaussian averages. We use this equivalence to certify models which perform well on clean data but are not robust to adversarial perturbations. In terms of certified accuracy and adversarial robustness, our method is comparable to known stochastic methods such as randomized smoothing, but requires only a single model evaluation during inference.
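For orientation, the stochastic baseline that this abstract contrasts against can be sketched as a Monte Carlo estimate of a Gaussian average. This is a minimal illustration, not the paper's deterministic method: the scalar function `f` is a hypothetical stand-in for a network, and `gaussian_average` is a name introduced here.

```python
import random

def gaussian_average(f, x, sigma, n_samples=20000, seed=0):
    """Monte Carlo estimate of E[f(x + sigma * z)] with z ~ N(0, 1).

    Every sample costs one evaluation of f, which is the expense that
    a deterministic single-evaluation method would avoid.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        # rng.gauss(0.0, sigma) draws the additive Gaussian noise.
        total += f(x + rng.gauss(0.0, sigma))
    return total / n_samples

def f(x):
    # Hypothetical stand-in for a model; chosen because its Gaussian
    # average has the closed form x^2 + sigma^2.
    return x * x

estimate = gaussian_average(f, x=1.0, sigma=0.5)
exact = 1.0 ** 2 + 0.5 ** 2
```

The estimate only approaches the closed-form value as the number of samples grows, which is why sampling-based smoothing needs many model evaluations per input.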

Learning normalizing flows from Entropy-Kantorovich potentials

Jun 10, 2020

Abstract: We approach the problem of learning continuous normalizing flows from a dual perspective motivated by entropy-regularized optimal transport, in which continuous normalizing flows are cast as gradients of scalar potential functions. This formulation allows us to train a dual objective composed only of the scalar potential functions, and removes the burden of explicitly computing normalizing flows during training. After training, the normalizing flow is easily recovered from the potential functions.

How to train your neural ODE

Feb 07, 2020

Abstract: Training neural ODEs on large datasets has not been tractable due to the necessity of allowing the adaptive numerical ODE solver to refine its step size to very small values. In practice this leads to dynamics equivalent to many hundreds or even thousands of layers. In this paper, we overcome this apparent difficulty by introducing a theoretically-grounded combination of both optimal transport and stability regularizations which encourage neural ODEs to prefer simpler dynamics out of all the dynamics that solve a problem well. Simpler dynamics lead to faster convergence and to fewer discretizations of the solver, considerably decreasing wall-clock time without loss in performance. Our approach allows us to train neural-ODE-based generative models to the same performance as the unregularized dynamics in just over a day on one GPU, whereas unregularized dynamics can take up to 4-6 days of training time on multiple GPUs. This brings neural ODEs significantly closer to practical relevance in large-scale applications.
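The abstract does not spell out the regularizers. As a rough illustration of what an optimal-transport-style penalty can look like, the sketch below accumulates the kinetic energy, the time integral of the squared speed, along a trajectory. Using this particular penalty is an assumption made here, as are the fixed-step Euler solver and the names `integrate_with_kinetic_energy` and `decay`.

```python
import math

def integrate_with_kinetic_energy(f, x0, t1=1.0, n_steps=1000):
    """Euler-integrate dx/dt = f(x) on [0, t1] and accumulate the
    kinetic energy, i.e. the integral of ||f(x(t))||^2 over time.

    Adding this quantity to a training loss would penalize fast,
    winding trajectories (an assumed form of regularization).
    """
    h = t1 / n_steps
    x = list(x0)
    energy = 0.0
    for _ in range(n_steps):
        v = f(x)                                  # velocity at the current state
        energy += h * sum(vi * vi for vi in v)    # left Riemann sum of ||v||^2
        x = [xi + h * vi for xi, vi in zip(x, v)] # explicit Euler step
    return x, energy

def decay(x):
    # Hypothetical toy dynamics dx/dt = -x, with solution x0 * exp(-t).
    return [-xi for xi in x]

x_final, energy = integrate_with_kinetic_energy(decay, [1.0])
exact_energy = (1.0 - math.exp(-2.0)) / 2.0  # closed form for this toy case
```

For the toy dynamics the accumulated energy can be compared against the closed-form integral, which gives a quick sanity check on the discretization.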

Farkas layers: don't shift the data, fix the geometry

Oct 04, 2019

Abstract: Successfully training deep neural networks often requires either batch normalization or appropriate weight initialization, both of which come with their own challenges. We propose an alternative, geometrically motivated method for training. Using elementary results from linear programming, we introduce Farkas layers: a method that ensures at least one neuron is active at a given layer. Focusing on residual networks with ReLU activation, we empirically demonstrate a significant improvement in training capacity in the absence of batch normalization or methods of initialization across a broad range of network sizes on benchmark datasets.

A principled approach for generating adversarial images under non-smooth dissimilarity metrics

Aug 05, 2019

Abstract: Deep neural networks are vulnerable to adversarial perturbations: small changes in the input easily lead to misclassification. In this work, we propose an attack methodology that caters not only for cases where the perturbations are measured by $\ell_p$ norms, but in fact for any adversarial dissimilarity metric with a closed proximal form. This includes, but is not limited to, $\ell_1$, $\ell_2$, and $\ell_\infty$ perturbations, and the $\ell_0$ counting "norm", i.e. true sparseness. Our approach to generating perturbations is a natural extension of our recent work, the LogBarrier attack, which previously required the metric to be differentiable. We demonstrate our new algorithm, ProxLogBarrier, on the MNIST, CIFAR10, and ImageNet-1k datasets. We attack undefended and defended models, and show that our algorithm transfers to various datasets with little parameter tuning. In particular, in the $\ell_0$ case, our algorithm finds significantly smaller perturbations compared to multiple existing methods.

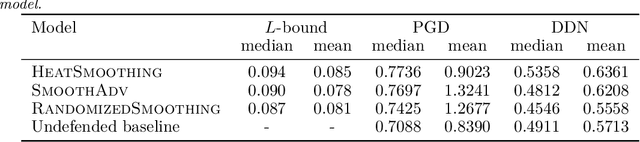

Scaleable input gradient regularization for adversarial robustness

May 27, 2019

Abstract: Input gradient regularization is not thought to be an effective means for promoting adversarial robustness. In this work we revisit this regularization scheme with some new ingredients. First, we derive new per-image theoretical robustness bounds based on local gradient information, and curvature information when available. These bounds strongly motivate input gradient regularization. Second, we implement a scaleable version of input gradient regularization which avoids double backpropagation: adversarially robust ImageNet models are trained in 33 hours on four consumer grade GPUs. Finally, we show experimentally that input gradient regularization is competitive with adversarial training.
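The abstract does not say how double backpropagation is avoided. One plausible route, assumed here purely for illustration, is to replace the gradient norm in the penalty with a finite difference of the loss along the normalized gradient direction. The quadratic `loss`, its analytic `grad_loss`, and `fd_gradient_norm` are hypothetical names for a toy setting.

```python
import math

def loss(x, a):
    # Toy quadratic loss 0.5 * sum(a_i * x_i^2), standing in for a network loss.
    return 0.5 * sum(ai * xi * xi for ai, xi in zip(a, x))

def grad_loss(x, a):
    # Analytic input gradient of the toy loss (one "backward pass").
    return [ai * xi for ai, xi in zip(a, x)]

def fd_gradient_norm(x, a, h=1e-4):
    """Approximate ||grad loss(x)|| by a forward difference along the
    unit gradient direction d = grad / ||grad||.

    If d is treated as a constant, differentiating this expression with
    respect to model parameters needs only first-order gradients.
    """
    g = grad_loss(x, a)
    norm = math.sqrt(sum(gi * gi for gi in g))
    if norm == 0.0:
        return 0.0
    d = [gi / norm for gi in g]
    x_shift = [xi + h * di for xi, di in zip(x, d)]
    return (loss(x_shift, a) - loss(x, a)) / h

a = [1.0, 2.0, 3.0]
x = [1.0, -1.0, 0.5]
approx = fd_gradient_norm(x, a)
true_norm = math.sqrt(sum(gi * gi for gi in grad_loss(x, a)))
```

The forward difference carries an error proportional to `h`, so the step size trades truncation error against floating-point cancellation.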

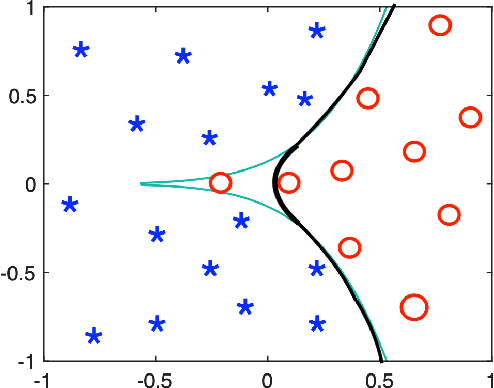

The LogBarrier adversarial attack: making effective use of decision boundary information

Mar 25, 2019

Abstract: Adversarial attacks for image classification are small perturbations to images that are designed to cause misclassification by a model. Adversarial attacks formally correspond to an optimization problem: find a minimum norm image perturbation, constrained to cause misclassification. A number of effective attacks have been developed. However, to date, no gradient-based attacks have used best practices from the optimization literature to solve this constrained minimization problem. We design a new untargeted attack, based on these best practices, using the established logarithmic barrier method. On average, our attack distance is similar to or better than that of all state-of-the-art attacks on benchmark datasets (MNIST, CIFAR10, ImageNet-1K). In addition, our method performs significantly better on the most challenging images, those which normally require larger perturbations for misclassification. We employ the LogBarrier attack on several adversarially defended models, and show that it adversarially perturbs all images more efficiently than other attacks: the distance needed to perturb all images is significantly smaller with the LogBarrier attack than with other state-of-the-art attacks.
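The constrained problem described above (smallest perturbation subject to misclassification) can be illustrated with a generic logarithmic barrier on a toy linear classifier. This is a sketch of the barrier idea only, not the paper's algorithm: the classifier `sign(w.x + b)`, the barrier weight `lam`, the reflected starting point, and the backtracking gradient descent are all assumptions chosen for this example.

```python
import math

def logbarrier_attack(x, w, b, lam=0.01, n_iters=200):
    """Toy log-barrier attack on the linear classifier sign(w.x + b).

    Assumes x is classified positive (w.x + b > 0). Minimizes
        0.5 * ||p - x||^2 - lam * log(g(p)),
    where g(p) = -(w.p + b) > 0 means p is misclassified.
    """
    def g(p):
        return -(sum(wi * pi for wi, pi in zip(w, p)) + b)

    def objective(p):
        dist2 = sum((pi - xi) ** 2 for pi, xi in zip(p, x))
        return 0.5 * dist2 - lam * math.log(g(p))

    # Start strictly inside the feasible (misclassified) region by
    # reflecting x across the decision boundary.
    margin = -g(x)
    w_norm2 = sum(wi * wi for wi in w)
    p = [xi - 2.0 * margin * wi / w_norm2 for xi, wi in zip(x, w)]

    for _ in range(n_iters):
        gp = g(p)
        grad = [(pi - xi) + lam * wi / gp for pi, xi, wi in zip(p, x, w)]
        grad_norm2 = sum(gi * gi for gi in grad)
        f_old = objective(p)
        step = 1.0
        for _ in range(60):
            cand = [pi - step * gi for pi, gi in zip(p, grad)]
            # Accept only feasible candidates with sufficient decrease.
            if g(cand) > 0 and objective(cand) <= f_old - 1e-4 * step * grad_norm2:
                p = cand
                break
            step *= 0.5
    return p

x = [2.0, 2.0]
w, b = [1.0, 1.0], -1.0
x_adv = logbarrier_attack(x, w, b)
dist = math.sqrt(sum((pi - xi) ** 2 for pi, xi in zip(x_adv, x)))
boundary_dist = (sum(wi * xi for wi, xi in zip(w, x)) + b) / math.sqrt(2.0)
```

Because the barrier keeps iterates strictly on the misclassified side, the returned point sits just past the decision boundary, slightly farther from `x` than the boundary itself; shrinking `lam` tightens that gap.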

Empirical confidence estimates for classification by deep neural networks

Mar 21, 2019

Abstract: How well can we estimate the probability that the classification, $C(f(x))$, predicted by a deep neural network is correct (or in the Top 5)? We consider the case of a classification neural network trained with the KL divergence which is assumed to generalize, as measured empirically by the test error and test loss. We present conditional probabilities for predictions based on the histogram of uncertainty metrics, which have a significant Bayes ratio. Previous work in this area includes Bayesian neural networks. Our metric is twice as predictive, based on the expected Bayes ratio, on ImageNet compared to our best tuned implementation of Bayesian dropout (Gal and Ghahramani, 2016). Our method uses just the softmax values and a stored histogram, so it is essentially free to compute, compared to the many-times inference cost of Bayesian dropout.
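A stored-histogram confidence estimate of the kind described can be sketched as follows. The abstract does not name the uncertainty metric, so the maximum softmax probability is assumed here, and the function names and the equal-width binning are hypothetical choices for illustration.

```python
def fit_confidence_histogram(max_probs, correct, n_bins=10):
    """Build a lookup table of empirical accuracy per bin.

    max_probs: top softmax probability for each held-out example.
    correct:   whether the corresponding prediction was right.
    Returns a list with the fraction of correct predictions in each
    equal-width bin of [0, 1], or None for empty bins.
    """
    hits = [0] * n_bins
    counts = [0] * n_bins
    for p, c in zip(max_probs, correct):
        k = min(int(p * n_bins), n_bins - 1)  # clamp p == 1.0 into the last bin
        counts[k] += 1
        hits[k] += int(c)
    return [h / n if n else None for h, n in zip(hits, counts)]

def predict_confidence(table, max_prob):
    """Look up the empirical P(correct) for a new prediction."""
    k = min(int(max_prob * len(table)), len(table) - 1)
    return table[k]

# Tiny made-up held-out set, for illustration only.
max_probs = [0.95, 0.92, 0.97, 0.55, 0.52, 0.58]
correct = [True, True, True, True, False, False]
table = fit_confidence_histogram(max_probs, correct)
```

At inference time the estimate is a single table lookup on values the network already produces, which is what makes this style of estimate essentially free compared with sampling-based approaches.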
