Chongrong Fang

Aucamp: An Underwater Camera-Based Multi-Robot Platform with Low-Cost, Distributed, and Robust Localization

Jun 11, 2025

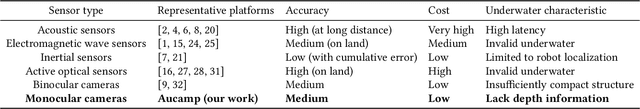

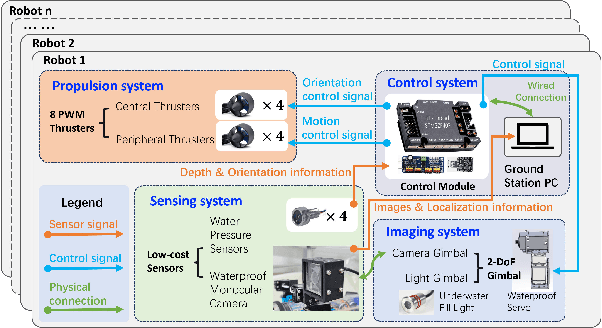

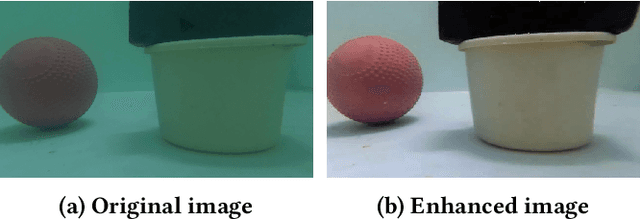

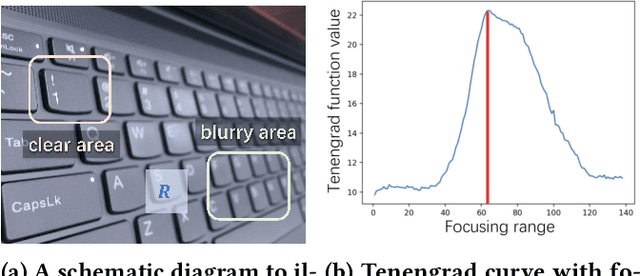

Abstract: This paper introduces an underwater multi-robot platform, named Aucamp, characterized by cost-effective monocular-camera-based sensing, a distributed protocol, and robust orientation control for localization. We utilize the clarity feature to measure the distance, present the monocular imaging model, and estimate the position of the target object. We achieve global positioning in our platform by designing a distributed update protocol. The distributed algorithm enables the perception process to simultaneously cover a broader range, and greatly improves the accuracy and robustness of the positioning. Moreover, we obtain the explicit dynamics model of the robot in our platform and, based on it, propose a robust orientation control framework. The control system keeps each robot in a balanced posture, thereby ensuring the stability of the localization system. The platform can swiftly recover from a forced unstable state to a stable horizontal posture. Additionally, we conduct extensive experiments and present application scenarios to evaluate the performance of our platform. The proposed platform may provide support for extensive marine exploration by underwater sensor networks.

Smart Predict-then-Optimize Method with Dependent Data: Risk Bounds and Calibration of Autoregression

Nov 19, 2024

Abstract: The predict-then-optimize (PTO) framework is indispensable for addressing practical stochastic decision-making tasks. It consists of two crucial steps: first predicting the unknown parameters of an optimization model, and then solving the problem based on these predictions. Elmachtoub and Grigas [1] introduced the Smart Predict-then-Optimize (SPO) loss for the framework, which gauges the decision error arising from predicted parameters, and a convex surrogate, the SPO+ loss, which incorporates the underlying structure of the optimization model. The consistency of these loss functions is guaranteed under the assumption of i.i.d. training data. Nevertheless, many types of data are dependent, such as power load fluctuations over time. This dependence can lead to diminished model performance in testing or real-world applications. Motivated to make intelligent predictions for time series data, we present an autoregressive SPO method that directly targets the optimization problem at the decision stage, where the conditions of consistency are no longer met. Therefore, we first analyze the generalization bounds of the SPO loss within our autoregressive model. Subsequently, the uniform calibration results in Liu and Grigas [2] are extended to the proposed model. Finally, we conduct experiments to empirically demonstrate the effectiveness of the SPO+ surrogate compared to the absolute loss and the least squares loss, especially when the cost vectors are determined by stationary dynamical systems, and to demonstrate the relationship between normalized regret and mixing coefficients.
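As a rough illustration of the two losses named in the abstract, the sketch below computes the SPO loss (decision regret) and the SPO+ surrogate for a toy problem. The feasible set here is an assumption made for brevity: the decision is to pick exactly one of several options at minimum cost, so the optimizer is a simple `argmin`. The function names are hypothetical, and the autoregressive setting studied in the paper is not modeled.

```python
def best_decision(cost):
    """Index of the cheapest option (optimum of the toy 'pick one' problem)."""
    return min(range(len(cost)), key=lambda i: cost[i])


def spo_loss(pred_cost, true_cost):
    """Regret: true cost of the decision induced by the prediction,
    minus the true cost of the optimal decision."""
    chosen = best_decision(pred_cost)
    optimal = best_decision(true_cost)
    return true_cost[chosen] - true_cost[optimal]


def spo_plus_loss(pred_cost, true_cost):
    """SPO+ surrogate: max_w (c - 2*c_hat)^T w + 2*c_hat^T w*(c) - z*(c).
    Over the 'pick one' feasible set the max is a max over coordinates."""
    optimal = best_decision(true_cost)
    support = max(c - 2.0 * c_hat for c, c_hat in zip(true_cost, pred_cost))
    return support + 2.0 * pred_cost[optimal] - true_cost[optimal]


true_cost = [3.0, 1.0, 2.0]
good_prediction = [2.5, 0.5, 4.0]   # ranks the cheapest option correctly
bad_prediction = [0.5, 2.0, 1.0]    # picks the most expensive option

print(spo_loss(good_prediction, true_cost), spo_plus_loss(good_prediction, true_cost))
print(spo_loss(bad_prediction, true_cost), spo_plus_loss(bad_prediction, true_cost))
```

The SPO loss is piecewise constant in the prediction, which is why a convex surrogate such as SPO+ is used for training; in this toy case the surrogate upper-bounds the regret for both predictions.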

Affordance-Driven Next-Best-View Planning for Robotic Grasping

Sep 18, 2023

Abstract: Grasping occluded objects in cluttered environments is an essential component of complex robotic manipulation tasks. In this paper, we introduce an AffordanCE-driven Next-Best-View planning policy (ACE-NBV) that tries to find a feasible grasp for the target object by continuously observing the scene from new viewpoints. This policy is motivated by the observation that the grasp affordances of an occluded object can be measured better when the view direction is the same as the grasp direction. Specifically, our method leverages the paradigm of novel view imagery to predict the grasp affordances under previously unobserved views, and selects the next observation view based on the gain of the highest imagined grasp quality of the target object. The experimental results in simulation and on a real robot demonstrate the effectiveness of the proposed affordance-driven next-best-view planning policy. Additional results, code, and videos of real robot experiments can be found in the supplementary materials.
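The view-selection rule described in the abstract can be sketched as follows. This is only an illustration under assumptions: the imagined grasp qualities per candidate view are taken as given numbers (the abstract does not say how they are produced), "gain" is read as the improvement of the best imagined quality over the best quality seen so far, and `select_next_view` is a hypothetical name.

```python
def select_next_view(current_best_quality, imagined_qualities):
    """Return (view, gain) for the candidate view whose highest imagined
    grasp quality improves most over the current best quality.

    imagined_qualities maps a view label to a list of predicted grasp
    qualities for the target object under that view (assumed inputs).
    """
    best_view, best_gain = None, float("-inf")
    for view, qualities in imagined_qualities.items():
        if not qualities:
            continue  # no grasp candidates imagined for this view
        gain = max(qualities) - current_best_quality
        if gain > best_gain:
            best_view, best_gain = view, gain
    return best_view, best_gain


candidates = {
    "left": [0.30, 0.55],
    "top": [0.70, 0.20],
    "right": [],
}
view, gain = select_next_view(0.40, candidates)
print(view, round(gain, 2))
```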

Toward Global Sensing Quality Maximization: A Configuration Optimization Scheme for Camera Networks

Nov 28, 2022

Abstract: The performance of a camera network monitoring a set of targets depends crucially on the configuration of the cameras. In this paper, we investigate a reconfiguration strategy for the parameterized camera network model, with which the sensing qualities of multiple targets can be optimized globally and simultaneously. We first propose to use the number of pixels occupied by a unit-length object in the image as a metric of the sensing quality of the object, which is determined by the parameters of the camera, such as the intrinsic, extrinsic, and distortion coefficients. Then, we form a single quantity that measures the sensing quality of the targets by the camera network. This quantity further serves as the objective function of our optimization problem to obtain the optimal camera configuration. We verify the effectiveness of our approach through extensive simulations and experiments, and the results reveal its improved performance on AprilTag detection tasks. Code and related utilities for this work are open-sourced and available at https://github.com/sszxc/MultiCam-Simulation.
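To make the sensing-quality metric concrete, the sketch below counts the pixels spanned by a unit-length segment under an ideal pinhole camera. It is a simplification and not the paper's model: lens distortion and the extrinsic transform are omitted (points are given directly in the camera frame), and the function names and the focal length of 800 pixels are assumptions chosen for illustration.

```python
import math


def project(point, focal_px, cx=0.0, cy=0.0):
    """Project a 3D point in the camera frame to pixel coordinates
    with an ideal pinhole model (no distortion)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def pixels_spanned(p0, p1, focal_px):
    """Length in pixels of the image of the segment p0-p1."""
    u0, v0 = project(p0, focal_px)
    u1, v1 = project(p1, focal_px)
    return math.hypot(u1 - u0, v1 - v0)


# A unit-length segment parallel to the image plane, at two depths.
near = pixels_spanned((0.0, 0.0, 2.0), (1.0, 0.0, 2.0), focal_px=800.0)
far = pixels_spanned((0.0, 0.0, 4.0), (1.0, 0.0, 4.0), focal_px=800.0)
print(near, far)
```

Under this simplified model the pixel count falls off inversely with depth, so moving or zooming a camera changes the sensing quality of each target, which is what a configuration optimizer would trade off across targets.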

Moving Target Interception Considering Dynamic Environment

May 16, 2022

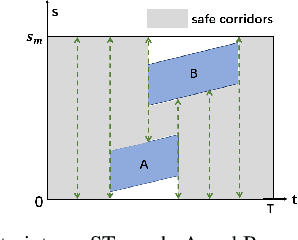

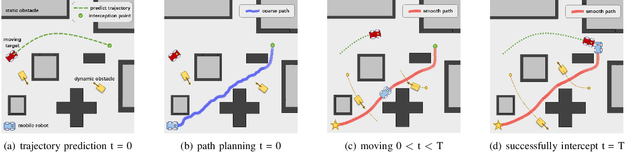

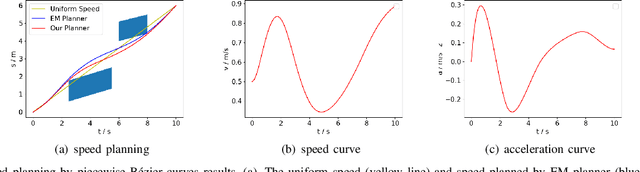

Abstract: The interception of moving targets is a widely studied problem. In this paper, we propose an algorithm for intercepting a moving target with a wheeled mobile robot in a dynamic environment. We first predict the future position of the target through polynomial fitting. The algorithm then generates an interception trajectory with path and speed decoupling. We use Hybrid A* search to plan a path and optimize it via the gradient descent method. To avoid dynamic obstacles in the environment, we introduce an ST graph for speed planning. The speed curve is represented by piecewise Bézier curves for further optimization. Compared with other interception algorithms, we consider a dynamic environment and plan a safe trajectory that satisfies the kinematic characteristics of the wheeled robot while ensuring the accuracy of interception. Simulation shows that the algorithm successfully achieves the interception tasks and has high computational efficiency.
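As a small illustration of the Bézier representation mentioned for the speed curve, the sketch below evaluates one scalar Bézier segment with de Casteljau's algorithm. The control values are made-up speeds, the function name is hypothetical, and the paper's piecewise construction and optimization are not reproduced here.

```python
def bezier_value(control_values, t):
    """Evaluate a scalar Bezier curve at parameter t in [0, 1]
    using de Casteljau's repeated linear interpolation."""
    if not control_values:
        raise ValueError("at least one control value is required")
    values = list(control_values)
    while len(values) > 1:
        values = [(1.0 - t) * a + t * b for a, b in zip(values, values[1:])]
    return values[0]


# Assumed control speeds (m/s) for one cubic segment of a speed profile.
speeds = [0.0, 1.0, 3.0, 4.0]
start = bezier_value(speeds, 0.0)
middle = bezier_value(speeds, 0.5)
end = bezier_value(speeds, 1.0)
print(start, middle, end)
```

A Bézier segment always starts at its first control value, ends at its last, and stays within the range of its control values, which makes speed limits easy to enforce by bounding the control values.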
